# Ultralytics Yolov8

> description: Transform complex data into insightful heatmaps using Ultralytics YOLO11. Discover patterns, trends, and anomalies with vibrant visualizations.

---

# Source: https://raw.githubusercontent.com/ultralytics/ultralytics/main/docs/en/guides/heatmaps.md

---


description: Transform complex data into insightful heatmaps using Ultralytics YOLO11. Discover patterns, trends, and anomalies with vibrant visualizations.

keywords: Ultralytics, YOLO11, heatmaps, data visualization, data analysis, complex data, patterns, trends, anomalies

---

# Advanced [Data Visualization](https://www.ultralytics.com/glossary/data-visualization): Heatmaps using Ultralytics YOLO11 🚀

## Introduction to Heatmaps

A heatmap generated with [Ultralytics YOLO11](https://github.com/ultralytics/ultralytics/) transforms complex data into a vibrant, color-coded matrix. This visual tool employs a spectrum of colors to represent varying data values, where warmer hues indicate higher intensities and cooler tones signify lower values. Heatmaps excel in visualizing intricate data patterns, correlations, and anomalies, offering an accessible and engaging approach to data interpretation across diverse domains.


## Why Choose Heatmaps for Data Analysis?

- **Intuitive Data Distribution Visualization:** Heatmaps simplify the comprehension of data concentration and distribution, converting complex datasets into easy-to-understand visual formats.

- **Efficient Pattern Detection:** By visualizing data in heatmap format, it becomes easier to spot trends, clusters, and outliers, facilitating quicker analysis and insights.

- **Enhanced Spatial Analysis and Decision-Making:** Heatmaps are instrumental in illustrating spatial relationships, aiding in decision-making processes in sectors such as business intelligence, environmental studies, and urban planning.

## Real World Applications

| Transportation | Retail |
| :---------------------------------------: | :-------------------------------: |
| Ultralytics YOLO11 Transportation Heatmap | Ultralytics YOLO11 Retail Heatmap |

!!! example "Heatmaps using Ultralytics YOLO"

=== "CLI"

```bash
# Run a heatmap example
yolo solutions heatmap show=True

# Pass a source video
yolo solutions heatmap source="path/to/video.mp4"

# Pass a custom colormap
yolo solutions heatmap colormap=cv2.COLORMAP_INFERNO

# Heatmaps + object counting
yolo solutions heatmap region="[(20, 400), (1080, 400), (1080, 360), (20, 360)]"
```

=== "Python"

```python
import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

# Video writer
w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("heatmap_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# For object counting with heatmap, you can pass region points.
# region_points = [(20, 400), (1080, 400)]  # line points
# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360)]  # rectangle region
# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360), (20, 400)]  # polygon points

# Initialize heatmap object
heatmap = solutions.Heatmap(
    show=True,  # display the output
    model="yolo11n.pt",  # path to the YOLO11 model file
    colormap=cv2.COLORMAP_PARULA,  # colormap of heatmap
    # region=region_points,  # object counting with heatmaps, you can pass region_points
    # classes=[0, 2],  # generate heatmap for specific classes, e.g., person and car.
)

# Process video
while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break

    results = heatmap(im0)

    # print(results)  # access the output

    video_writer.write(results.plot_im)  # write the processed frame.

cap.release()
video_writer.release()
cv2.destroyAllWindows()  # destroy all opened windows
```

### `Heatmap()` Arguments

Here's a table with the `Heatmap` arguments:

{% from "macros/solutions-args.md" import param_table %}

{{ param_table(["model", "colormap", "show_in", "show_out", "region"]) }}

You can also apply different `track` arguments in the `Heatmap` solution.

{% from "macros/track-args.md" import param_table %}

{{ param_table(["tracker", "conf", "iou", "classes", "verbose", "device"]) }}

Additionally, the supported visualization arguments are listed below:

{% from "macros/visualization-args.md" import param_table %}

{{ param_table(["show", "line_width", "show_conf", "show_labels"]) }}

#### Heatmap COLORMAPs

| Colormap Name                    | Description                            |
| -------------------------------- | -------------------------------------- |
| `cv2.COLORMAP_AUTUMN`            | Autumn color map                       |
| `cv2.COLORMAP_BONE`              | Bone color map                         |
| `cv2.COLORMAP_JET`               | Jet color map                          |
| `cv2.COLORMAP_WINTER`            | Winter color map                       |
| `cv2.COLORMAP_RAINBOW`           | Rainbow color map                      |
| `cv2.COLORMAP_OCEAN`             | Ocean color map                        |
| `cv2.COLORMAP_SUMMER`            | Summer color map                       |
| `cv2.COLORMAP_SPRING`            | Spring color map                       |
| `cv2.COLORMAP_COOL`              | Cool color map                         |
| `cv2.COLORMAP_HSV`               | HSV (Hue, Saturation, Value) color map |
| `cv2.COLORMAP_PINK`              | Pink color map                         |
| `cv2.COLORMAP_HOT`               | Hot color map                          |
| `cv2.COLORMAP_PARULA`            | Parula color map                       |
| `cv2.COLORMAP_MAGMA`             | Magma color map                        |
| `cv2.COLORMAP_INFERNO`           | Inferno color map                      |
| `cv2.COLORMAP_PLASMA`            | Plasma color map                       |
| `cv2.COLORMAP_VIRIDIS`           | Viridis color map                      |
| `cv2.COLORMAP_CIVIDIS`           | Cividis color map                      |
| `cv2.COLORMAP_TWILIGHT`          | Twilight color map                     |
| `cv2.COLORMAP_TWILIGHT_SHIFTED`  | Shifted Twilight color map             |
| `cv2.COLORMAP_TURBO`             | Turbo color map                        |
| `cv2.COLORMAP_DEEPGREEN`         | Deep Green color map                   |

These colormaps control how heatmap intensity is rendered. Pass any of them to the `colormap` argument, for example `colormap=cv2.COLORMAP_INFERNO`.
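Conceptually, an OpenCV colormap assigns a color to each 8-bit intensity, much like a 256-entry lookup table. The sketch below builds a hypothetical two-color blue-to-red table with NumPy (it is not one of the OpenCV colormaps listed above; `blue_to_red_lut` and `apply_lut` are illustrative names) and applies it by array indexing, so low values come out cool and high values come out warm.

```python
import numpy as np


def blue_to_red_lut() -> np.ndarray:
    """Build a 256x3 BGR lookup table that fades from blue (cool) to red (warm)."""
    t = np.linspace(0.0, 1.0, 256)
    lut = np.zeros((256, 3), dtype=np.uint8)
    lut[:, 0] = np.round(255 * (1.0 - t)).astype(np.uint8)  # blue channel fades out
    lut[:, 2] = np.round(255 * t).astype(np.uint8)  # red channel fades in
    return lut


def apply_lut(gray: np.ndarray, lut: np.ndarray) -> np.ndarray:
    """Map a uint8 (H, W) intensity image to an (H, W, 3) BGR image."""
    return lut[gray]


gray = np.array([[0, 128, 255]], dtype=np.uint8)
colored = apply_lut(gray, blue_to_red_lut())
```

In practice you would call `cv2.applyColorMap(gray, cv2.COLORMAP_INFERNO)` rather than building a table by hand; the sketch only shows why warmer colors end up marking higher values.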

## How Heatmaps Work in Ultralytics YOLO11

The [Heatmap solution](../reference/solutions/heatmap.md) in Ultralytics YOLO11 extends the [ObjectCounter](../reference/solutions/object_counter.md) class to generate and visualize movement patterns in video streams. When initialized, the solution creates a blank heatmap layer that gets updated as objects move through the frame.

For each detected object, the solution:

1. Tracks the object across frames using YOLO11's tracking capabilities

2. Updates the heatmap intensity at the object's location

3. Applies a selected colormap to visualize the intensity values

4. Overlays the colored heatmap on the original frame

The result is a dynamic visualization that builds up over time, revealing traffic patterns, crowd movements, or other spatial behaviors in your video data.
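The accumulation and normalization steps above can be sketched with NumPy. This is a simplified illustration, not the library's implementation: the function names are hypothetical, and the circular footprint inscribed in each bounding box and the fixed increment of 2 per frame are assumptions chosen for clarity. Boxes are assumed to be integer `(x0, y0, x1, y1)` pixel coordinates that lie inside the frame.

```python
import numpy as np


def add_heat(heat: np.ndarray, box, increment: float = 2.0) -> np.ndarray:
    """Add `increment` to every pixel inside the circle inscribed in `box`.

    `heat` is a float accumulator with the same height and width as the frame.
    """
    x0, y0, x1, y1 = (int(v) for v in box)
    cx, cy = (x0 + x1) // 2, (y0 + y1) // 2
    radius = min(x1 - x0, y1 - y0) // 2
    # Open grids broadcast to a (y1 - y0, x1 - x0) boolean mask.
    ys, xs = np.ogrid[y0:y1, x0:x1]
    inside = (xs - cx) ** 2 + (ys - cy) ** 2 <= radius**2
    # Basic slicing returns a view, so this updates `heat` in place.
    heat[y0:y1, x0:x1][inside] += increment
    return heat


def normalize_heat(heat: np.ndarray) -> np.ndarray:
    """Scale the accumulator to uint8 0..255 so a colormap can be applied."""
    peak = float(heat.max())
    if peak == 0.0:
        return np.zeros(heat.shape, dtype=np.uint8)
    return (heat / peak * 255.0).astype(np.uint8)


# Simulate one object detected at the same place on two consecutive frames.
heat = np.zeros((100, 100), dtype=np.float32)
for _ in range(2):
    add_heat(heat, (10, 10, 30, 30))
gray = normalize_heat(heat)
```

On a real frame, `gray` would then be colorized with `cv2.applyColorMap(gray, cv2.COLORMAP_PARULA)` and blended onto the frame with `cv2.addWeighted(frame, 0.5, colored, 0.5, 0)`; the equal 0.5 blend weights are also an assumption.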

## FAQ

### How does Ultralytics YOLO11 generate heatmaps and what are their benefits?

Ultralytics YOLO11 generates heatmaps by accumulating the positions of tracked objects over successive video frames into an intensity map and rendering that map as a color-coded overlay, where warmer hues indicate higher activity and cooler tones indicate lower activity. Heatmaps make it easier to spot patterns, correlations, and anomalies in the data. The primary benefits include intuitive visualization of data distribution, efficient pattern detection, and enhanced spatial analysis for decision-making. For more details and configuration options, refer to the [Heatmap Configuration](#heatmap-arguments) section.

### Can I use Ultralytics YOLO11 to perform object tracking and generate a heatmap simultaneously?

Yes. The `Heatmap` solution runs YOLO11 object tracking on every frame you pass to it and updates the heatmap from the tracked objects, so tracking and heatmap generation happen together. Initialize a `Heatmap` object with a model and call it on each frame. Here's a simple example:

```python
import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
heatmap = solutions.Heatmap(colormap=cv2.COLORMAP_PARULA, show=True, model="yolo11n.pt")

while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        break
    results = heatmap(im0)

cap.release()
cv2.destroyAllWindows()
```

For further guidance, check the [Tracking Mode](../modes/track.md) page.

### What makes Ultralytics YOLO11 heatmaps different from other data visualization tools like those from [OpenCV](https://www.ultralytics.com/glossary/opencv) or Matplotlib?

Ultralytics YOLO11 heatmaps are built directly on its [object detection](https://www.ultralytics.com/glossary/object-detection) and tracking models, providing an end-to-end pipeline for real-time data analysis. General-purpose tools such as Matplotlib, or OpenCV's colormap functions (which supply the `cv2.COLORMAP_*` palettes used here), only render data you have already computed. The YOLO11 `Heatmap` solution also detects and tracks objects with persistent tracking, accumulates their positions over time, and overlays the result on each video frame in real time. For background on the Ultralytics YOLO model family, see the [Introducing Ultralytics YOLOv8](https://www.ultralytics.com/blog/introducing-ultralytics-yolov8) blog post.

### How can I visualize only specific object classes in heatmaps using Ultralytics YOLO11?

To visualize only specific object classes, pass their class indices to the `classes` argument when you create the `Heatmap` solution; the filter is applied during tracking. For instance, to visualize only persons and cars with a COCO-pretrained model (class indices 0 and 2, respectively), set `classes=[0, 2]`:

```python
import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
heatmap = solutions.Heatmap(show=True, model="yolo11n.pt", classes=[0, 2])

while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        break
    results = heatmap(im0)

cap.release()
cv2.destroyAllWindows()
```

### Why should businesses choose Ultralytics YOLO11 for heatmap generation in data analysis?

Ultralytics YOLO11 combines object detection, tracking, and real-time heatmap generation in one package, making it a strong choice for businesses that want to visualize spatial activity such as shopper foot traffic or vehicle flow. The key advantages include intuitive data distribution visualization, efficient pattern detection, and enhanced spatial analysis for better decision-making. Features such as persistent tracking, customizable colormaps, and support for various export formats set it apart from general-purpose tools like [TensorFlow](https://www.ultralytics.com/glossary/tensorflow) and OpenCV, which leave you to assemble detection, tracking, and visualization yourself. Learn more about business applications at [Ultralytics Plans](https://www.ultralytics.com/plans).

---

# Source: https://raw.githubusercontent.com/ultralytics/ultralytics/main/docs/en/guides/index.md

---


description: Master YOLO with Ultralytics tutorials covering training, deployment and optimization. Find solutions, improve metrics, and deploy with ease.

keywords: Ultralytics, YOLO, tutorials, guides, object detection, deep learning, PyTorch, training, deployment, optimization, computer vision

---

# Comprehensive Tutorials for Ultralytics YOLO

Welcome to Ultralytics' YOLO Guides. Our comprehensive tutorials cover various aspects of the YOLO [object detection](https://www.ultralytics.com/glossary/object-detection) model, ranging from training and prediction to deployment. Built on [PyTorch](https://www.ultralytics.com/glossary/pytorch), YOLO stands out for its exceptional speed and [accuracy](https://www.ultralytics.com/glossary/accuracy) in real-time object detection tasks.

Whether you're a beginner or an expert in [deep learning](https://www.ultralytics.com/glossary/deep-learning-dl), our tutorials offer valuable insights into the implementation and optimization of YOLO for your [computer vision](https://www.ultralytics.com/glossary/computer-vision-cv) projects.


## Guides

Here's a compilation of in-depth guides to help you master different aspects of Ultralytics YOLO.

- [A Guide on Model Testing](model-testing.md): A thorough guide on testing your computer vision models in realistic settings. Learn how to verify accuracy, reliability, and performance in line with project goals.

- [AzureML Quickstart](azureml-quickstart.md): Get up and running with Ultralytics YOLO models on Microsoft's Azure [Machine Learning](https://www.ultralytics.com/glossary/machine-learning-ml) platform. Learn how to train, deploy, and scale your object detection projects in the cloud.

- [Best Practices for Model Deployment](model-deployment-practices.md): Walk through tips and best practices for efficiently deploying models in computer vision projects, with a focus on optimization, troubleshooting, and security.

- [Conda Quickstart](conda-quickstart.md): Step-by-step guide to setting up a [Conda](https://anaconda.org/conda-forge/ultralytics) environment for Ultralytics. Learn how to install and start using the Ultralytics package efficiently with Conda.

- [Data Collection and Annotation](data-collection-and-annotation.md): Explore the tools, techniques, and best practices for collecting and annotating data to create high-quality inputs for your computer vision models.

- [DeepStream on NVIDIA Jetson](deepstream-nvidia-jetson.md): Quickstart guide for deploying YOLO models on NVIDIA Jetson devices using DeepStream and TensorRT.

- [Defining A Computer Vision Project's Goals](defining-project-goals.md): Walk through how to effectively define clear and measurable goals for your computer vision project. Learn the importance of a well-defined problem statement and how it creates a roadmap for your project.

- [Docker Quickstart](docker-quickstart.md): Complete guide to setting up and using Ultralytics YOLO models with [Docker](https://hub.docker.com/r/ultralytics/ultralytics). Learn how to install Docker, manage GPU support, and run YOLO models in isolated containers for consistent development and deployment.

- [Edge TPU on Raspberry Pi](coral-edge-tpu-on-raspberry-pi.md): [Google Edge TPU](https://developers.google.com/coral) accelerates YOLO inference on [Raspberry Pi](https://www.raspberrypi.com/).

- [Hyperparameter Tuning](hyperparameter-tuning.md): Discover how to optimize your YOLO models by fine-tuning hyperparameters using the Tuner class and genetic evolution algorithms.

- [Insights on Model Evaluation and Fine-Tuning](model-evaluation-insights.md): Gain insights into the strategies and best practices for evaluating and fine-tuning your computer vision models. Learn about the iterative process of refining models to achieve optimal results.

- [Isolating Segmentation Objects](isolating-segmentation-objects.md): Step-by-step recipe and explanation on how to extract and/or isolate objects from images using Ultralytics Segmentation.

- [K-Fold Cross Validation](kfold-cross-validation.md): Learn how to improve model generalization using the K-Fold cross-validation technique.

- [Maintaining Your Computer Vision Model](model-monitoring-and-maintenance.md): Understand the key practices for monitoring, maintaining, and documenting computer vision models to guarantee accuracy, spot anomalies, and mitigate data drift.

- [Model Deployment Options](model-deployment-options.md): Overview of YOLO [model deployment](https://www.ultralytics.com/glossary/model-deployment) formats like ONNX, OpenVINO, and TensorRT, with pros and cons for each to inform your deployment strategy.

- [Model YAML Configuration Guide](model-yaml-config.md): A comprehensive deep dive into Ultralytics' model architecture definitions. Explore the YAML format, understand the module resolution system, and learn how to integrate custom modules seamlessly.

- [NVIDIA Jetson](nvidia-jetson.md): Quickstart guide for deploying YOLO models on NVIDIA Jetson devices.

- [OpenVINO Latency vs Throughput Modes](optimizing-openvino-latency-vs-throughput-modes.md): Learn latency and throughput optimization techniques for peak YOLO inference performance.

- [Preprocessing Annotated Data](preprocessing_annotated_data.md): Learn about preprocessing and augmenting image data in computer vision projects using YOLO11, including normalization, dataset augmentation, splitting, and exploratory data analysis (EDA).

- [Raspberry Pi](raspberry-pi.md): Quickstart tutorial to run YOLO models on the latest Raspberry Pi hardware.

- [ROS Quickstart](ros-quickstart.md): Learn how to integrate YOLO with the Robot Operating System (ROS) for real-time object detection in robotics applications, including Point Cloud and Depth images.

- [SAHI Tiled Inference](sahi-tiled-inference.md): Comprehensive guide on leveraging SAHI's sliced inference capabilities with YOLO11 for object detection in high-resolution images.

- [Steps of a Computer Vision Project](steps-of-a-cv-project.md): Learn about the key steps involved in a computer vision project, including defining goals, selecting models, preparing data, and evaluating results.

- [Tips for Model Training](model-training-tips.md): Explore tips on optimizing [batch sizes](https://www.ultralytics.com/glossary/batch-size), using [mixed precision](https://www.ultralytics.com/glossary/mixed-precision), applying pretrained weights, and more to make training your computer vision model a breeze.

- [Triton Inference Server Integration](triton-inference-server.md): Dive into the integration of Ultralytics YOLO11 with NVIDIA's Triton Inference Server for scalable and efficient deep learning inference deployments.

- [Vertex AI Deployment with Docker](vertex-ai-deployment-with-docker.md): Streamlined guide to containerizing YOLO models with Docker and deploying them on Google Cloud Vertex AI—covering build, push, autoscaling, and monitoring.

- [View Inference Images in a Terminal](view-results-in-terminal.md): Use VSCode's integrated terminal to view inference results when using Remote Tunnel or SSH sessions.

- [YOLO Common Issues](yolo-common-issues.md) ⭐ RECOMMENDED: Practical solutions and troubleshooting tips for the most frequently encountered issues when working with Ultralytics YOLO models.

- [YOLO Data Augmentation](yolo-data-augmentation.md): Master the complete range of data augmentation techniques in YOLO, from basic transformations to advanced strategies for improving model robustness and performance.

- [YOLO Performance Metrics](yolo-performance-metrics.md) ⭐ ESSENTIAL: Understand the key metrics like mAP, IoU, and [F1 score](https://www.ultralytics.com/glossary/f1-score) used to evaluate the performance of your YOLO models. Includes practical examples and tips on how to improve detection accuracy and speed.

- [YOLO Thread-Safe Inference](yolo-thread-safe-inference.md): Guidelines for performing inference with YOLO models in a thread-safe manner. Learn the importance of thread safety and best practices to prevent race conditions and ensure consistent predictions.

## Contribute to Our Guides

We welcome contributions from the community! If you've mastered a particular aspect of Ultralytics YOLO that's not yet covered in our guides, we encourage you to share your expertise. Writing a guide is a great way to give back to the community and help us make our documentation more comprehensive and user-friendly.

To get started, please read our [Contributing Guide](../help/contributing.md) for guidelines on how to open a Pull Request (PR). We look forward to your contributions.

## FAQ

### How do I train a custom object detection model using Ultralytics YOLO?

Training a custom object detection model with Ultralytics YOLO is straightforward. Start by preparing your dataset in the correct format and installing the Ultralytics package. Use the following code to initiate training:

!!! example

=== "Python"

```python
from ultralytics import YOLO

model = YOLO("yolo11n.pt")  # Load a pretrained YOLO model
model.train(data="path/to/dataset.yaml", epochs=50)  # Train on custom dataset
```

=== "CLI"

```bash
yolo task=detect mode=train model=yolo11n.pt data=path/to/dataset.yaml epochs=50
```

For detailed dataset formatting and additional options, refer to our [Tips for Model Training](model-training-tips.md) guide.

### What performance metrics should I use to evaluate my YOLO model?

Evaluating your YOLO model performance is crucial to understanding its efficacy. Key metrics include [Mean Average Precision](https://www.ultralytics.com/glossary/mean-average-precision-map) (mAP), [Intersection over Union](https://www.ultralytics.com/glossary/intersection-over-union-iou) (IoU), and F1 score. These metrics help assess the accuracy and [precision](https://www.ultralytics.com/glossary/precision) of object detection tasks. You can learn more about these metrics and how to improve your model in our [YOLO Performance Metrics](yolo-performance-metrics.md) guide.
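Two of these metrics are simple enough to compute by hand. The sketch below is a plain-Python illustration with hypothetical function names (not part of the Ultralytics API): IoU divides the overlap area of two boxes by the area of their union, and F1 is the harmonic mean of precision and recall. mAP builds on IoU by matching predictions to ground truth at one or more IoU thresholds, which is beyond this sketch.

```python
def box_iou(a, b) -> float:
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    # Clamp at zero so non-overlapping boxes have no intersection area.
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


iou = box_iou((0, 0, 10, 10), (5, 5, 15, 15))  # overlap 25, union 175
f1 = f1_score(0.5, 1.0)
```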

### Why should I use Ultralytics HUB for my computer vision projects?

Ultralytics HUB is a no-code platform that simplifies managing, training, and deploying YOLO models. It supports seamless integration, real-time tracking, and cloud training, making it ideal for both beginners and professionals. Discover more about its features and how it can streamline your workflow with our [Ultralytics HUB](https://docs.ultralytics.com/hub/) quickstart guide.

### What are the common issues faced during YOLO model training, and how can I resolve them?

Common issues during YOLO model training include data formatting errors, model architecture mismatches, and insufficient [training data](https://www.ultralytics.com/glossary/training-data). To address these, ensure your dataset is correctly formatted, check for compatible model versions, and augment your training data. For a comprehensive list of solutions, refer to our [YOLO Common Issues](yolo-common-issues.md) guide.
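Many data formatting errors come from malformed label files. As a minimal sketch, assuming the standard YOLO detection label layout of one object per line as `class x_center y_center width height` with coordinates normalized to [0, 1], a hypothetical checker could flag bad lines like this (segmentation and pose labels use longer lines and would need different checks):

```python
def check_yolo_label_line(line: str, num_classes: int) -> list[str]:
    """Return a list of problems found in one YOLO detection label line."""
    parts = line.split()
    if len(parts) != 5:
        return [f"expected 5 values, got {len(parts)}"]
    problems = []
    cls, *coords = parts
    if not cls.isdigit() or int(cls) >= num_classes:
        problems.append(f"class id {cls!r} is not in range 0..{num_classes - 1}")
    try:
        values = [float(v) for v in coords]
    except ValueError:
        return problems + ["coordinates must be numbers"]
    for name, v in zip(("x_center", "y_center", "width", "height"), values):
        if not 0.0 <= v <= 1.0:
            problems.append(f"{name}={v} is outside the normalized range [0, 1]")
    return problems


good = check_yolo_label_line("0 0.5 0.5 0.2 0.3", num_classes=80)
bad = check_yolo_label_line("85 1.2 0.5 0.2 0.3", num_classes=80)
```

Running such a check over every `.txt` file before training surfaces out-of-range class IDs or pixel (unnormalized) coordinates early.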

### How can I deploy my YOLO model for real-time object detection on edge devices?

Deploying YOLO models on edge devices like NVIDIA Jetson and Raspberry Pi usually involves exporting the model to a format optimized for the target hardware, such as TensorRT or TFLite. Follow our step-by-step guides for [NVIDIA Jetson](nvidia-jetson.md) and [Raspberry Pi](raspberry-pi.md) deployments to get started with real-time object detection on edge hardware. These guides will walk you through installation, configuration, and performance optimization.

---

# Source: https://raw.githubusercontent.com/ultralytics/ultralytics/main/docs/en/guides/object-counting.md

---


description: Learn to accurately identify and count objects in real-time using Ultralytics YOLO11 for applications like crowd analysis and surveillance.

keywords: object counting, YOLO11, Ultralytics, real-time object detection, AI, deep learning, object tracking, crowd analysis, surveillance, resource optimization

---

# Object Counting using Ultralytics YOLO11

## What is Object Counting?

Object counting with [Ultralytics YOLO11](https://github.com/ultralytics/ultralytics/) involves accurate identification and counting of specific objects in videos and camera streams. YOLO11 excels in real-time applications, providing efficient and precise object counting for various scenarios like crowd analysis and surveillance, thanks to its state-of-the-art algorithms and [deep learning](https://www.ultralytics.com/glossary/deep-learning-dl) capabilities.


## Advantages of Object Counting

- **Resource Optimization:** Object counting facilitates efficient resource management by providing accurate counts, optimizing resource allocation in applications like [inventory management](https://docs.ultralytics.com/guides/analytics/).

- **Enhanced Security:** Object counting enhances security and surveillance by accurately tracking and counting entities, aiding in proactive [threat detection](https://docs.ultralytics.com/guides/security-alarm-system/).

- **Informed Decision-Making:** Object counting offers valuable insights for decision-making, optimizing processes in retail, [traffic management](https://www.ultralytics.com/blog/ai-in-traffic-management-from-congestion-to-coordination), and various other domains.

## Real World Applications

| Logistics | Aquaculture |
| :-----------------------------------------------------: | :-------------------------------------------: |
| Conveyor Belt Packets Counting Using Ultralytics YOLO11 | Fish Counting in Sea using Ultralytics YOLO11 |

!!! example "Object Counting using Ultralytics YOLO"

=== "CLI"

```bash
# Run a counting example
yolo solutions count show=True

# Pass a source video
yolo solutions count source="path/to/video.mp4"

# Pass region coordinates
yolo solutions count region="[(20, 400), (1080, 400), (1080, 360), (20, 360)]"
```

The `region` argument accepts either two points (for a line) or a polygon with three or more points. Define the coordinates in the order they should be connected so the counter knows exactly where entries and exits occur.

=== "Python"

```python
import cv2

from ultralytics import solutions

cap = cv2.VideoCapture("path/to/video.mp4")
assert cap.isOpened(), "Error reading video file"

# region_points = [(20, 400), (1080, 400)]  # line counting
region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360)]  # rectangular region
# region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360), (20, 400)]  # polygon region

# Video writer
w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
video_writer = cv2.VideoWriter("object_counting_output.avi", cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

# Initialize object counter object
counter = solutions.ObjectCounter(
    show=True,  # display the output
    region=region_points,  # pass region points
    model="yolo11n.pt",  # model="yolo11n-obb.pt" for object counting with OBB model.
    # classes=[0, 2],  # count specific classes, e.g., person and car with the COCO pretrained model.
    # tracker="botsort.yaml",  # choose trackers, e.g., "bytetrack.yaml"
)

# Process video
while cap.isOpened():
    success, im0 = cap.read()
    if not success:
        print("Video frame is empty or processing is complete.")
        break

    results = counter(im0)

    # print(results)  # access the output

    video_writer.write(results.plot_im)  # write the processed frame.

cap.release()
video_writer.release()
cv2.destroyAllWindows()  # destroy all opened windows
```
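The `region` argument accepts a two-point line or a polygon. To illustrate the idea behind line counting, here is a hypothetical, pure-Python sketch that counts a tracked object once when its centroid moves across the counting segment between two frames. It uses a standard segment-intersection test based on cross-product signs; it is not the `ObjectCounter` implementation, and which side counts as "in" is an arbitrary choice in this sketch.

```python
Point = tuple[float, float]


def side(p: Point, a: Point, b: Point) -> float:
    """Cross product: its sign tells which side of the directed line a->b point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])


def crosses(prev: Point, curr: Point, a: Point, b: Point) -> bool:
    """True if the move prev->curr strictly crosses the segment a-b."""
    return side(prev, a, b) * side(curr, a, b) < 0 and side(a, prev, curr) * side(b, prev, curr) < 0


class LineCounter:
    """Hypothetical line-crossing counter keyed by tracker ID."""

    def __init__(self, a: Point, b: Point):
        self.a, self.b = a, b
        self.last_pos: dict[int, Point] = {}
        self.counted: set[int] = set()
        self.in_count = 0
        self.out_count = 0

    def update(self, track_id: int, centroid: Point) -> None:
        prev = self.last_pos.get(track_id)
        self.last_pos[track_id] = centroid
        if prev is None or track_id in self.counted:
            return  # first sighting, or this track was already counted
        if crosses(prev, centroid, self.a, self.b):
            self.counted.add(track_id)
            if side(centroid, self.a, self.b) > 0:
                self.in_count += 1
            else:
                self.out_count += 1


counter = LineCounter((20, 400), (1080, 400))
tracks = {
    1: [(100, 380), (100, 420), (100, 380)],  # moves down across the line, then back up
    2: [(200, 420), (200, 380)],  # moves up across the line
    3: [(2000, 380), (2000, 420)],  # passes beyond the end of the segment
}
for track_id, path in tracks.items():
    for point in path:
        counter.update(track_id, point)
```

Remembering which IDs were already counted keeps an object that hovers on the line from being counted repeatedly; this depends on the tracker keeping IDs stable across frames.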

### `ObjectCounter` Arguments

Here's a table with the `ObjectCounter` arguments:

{% from "macros/solutions-args.md" import param_table %}

{{ param_table(["model", "show_in", "show_out", "region"]) }}

The `ObjectCounter` solution allows the use of several `track` arguments:

{% from "macros/track-args.md" import param_table %}

{{ param_table(["tracker", "conf", "iou", "classes", "verbose", "device"]) }}

Additionally, the visualization arguments listed below are supported:

{% from "macros/visualization-args.md" import param_table %}

{{ param_table(["show", "line_width", "show_conf", "show_labels"]) }}
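When `region` is a polygon, counting depends on deciding whether an object's centroid lies inside it. A common way to do that is the ray-casting (even-odd) test, sketched below in plain Python with hypothetical function names; the library's own containment check may differ, and points exactly on an edge are ambiguous in this simple version. A closing vertex that repeats the first point, as in the polygon example above, produces a zero-length edge that the test simply skips.

```python
Point = tuple[float, float]


def point_in_polygon(pt: Point, polygon: list[Point]) -> bool:
    """Even-odd ray casting: toggle on each edge crossed by a ray going right from pt."""
    x, y = pt
    inside = False
    n = len(polygon)
    for i in range(n):
        (x1, y1), (x2, y2) = polygon[i], polygon[(i + 1) % n]
        # Only edges that straddle the horizontal line through pt can be crossed;
        # this also skips horizontal and zero-length edges.
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def count_in_region(centroids: list[Point], polygon: list[Point]) -> int:
    """Number of centroids currently inside the polygon."""
    return sum(point_in_polygon(c, polygon) for c in centroids)


region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360), (20, 400)]
centroids = [(500, 380), (10, 380), (500, 300)]
```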

## FAQ

### How do I count objects in a video using Ultralytics YOLO11?

To count objects in a video using Ultralytics YOLO11, you can follow these steps:

1. Import the necessary libraries (`cv2`, `ultralytics`).

2. Define the counting region (e.g., a polygon, line, etc.).

3. Set up the video capture and initialize the object counter.

4. Process each frame to track objects and count them within the defined region.

Here's a simple example for counting in a region:

```python
import cv2

from ultralytics import solutions


def count_objects_in_region(video_path, output_video_path, model_path):
    """Count objects in a specific region within a video."""
    cap = cv2.VideoCapture(video_path)
    assert cap.isOpened(), "Error reading video file"
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    video_writer = cv2.VideoWriter(output_video_path, cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

    region_points = [(20, 400), (1080, 400), (1080, 360), (20, 360)]
    counter = solutions.ObjectCounter(show=True, region=region_points, model=model_path)

    while cap.isOpened():
        success, im0 = cap.read()
        if not success:
            print("Video frame is empty or processing is complete.")
            break
        results = counter(im0)
        video_writer.write(results.plot_im)

    cap.release()
    video_writer.release()
    cv2.destroyAllWindows()


count_objects_in_region("path/to/video.mp4", "output_video.avi", "yolo11n.pt")
```

For more advanced configurations and options, check out the [RegionCounter solution](https://docs.ultralytics.com/guides/region-counting/) for counting objects in multiple regions simultaneously.

### What are the advantages of using Ultralytics YOLO11 for object counting?

Using Ultralytics YOLO11 for object counting offers several advantages:

1. **Resource Optimization:** It facilitates efficient resource management by providing accurate counts, helping optimize resource allocation in industries like [inventory management](https://www.ultralytics.com/blog/ai-for-smarter-retail-inventory-management).

2. **Enhanced Security:** It enhances security and surveillance by accurately tracking and counting entities, aiding in proactive threat detection and [security systems](https://docs.ultralytics.com/guides/security-alarm-system/).

3. **Informed Decision-Making:** It offers valuable insights for decision-making, optimizing processes in domains like retail, traffic management, and more.

4. **Real-time Processing:** YOLO11's architecture enables [real-time inference](https://www.ultralytics.com/glossary/real-time-inference), making it suitable for live video streams and time-sensitive applications.

For implementation examples and practical applications, explore the [TrackZone solution](https://docs.ultralytics.com/guides/trackzone/) for tracking objects in specific zones.

### How can I count specific classes of objects using Ultralytics YOLO11?

To count only specific classes of objects with Ultralytics YOLO11, pass their class indices to the `classes` argument of `ObjectCounter`; the filter is applied when the solution tracks objects in each frame. Below is a Python example:

```python
import cv2

from ultralytics import solutions


def count_specific_classes(video_path, output_video_path, model_path, classes_to_count):
    """Count specific classes of objects in a video."""
    cap = cv2.VideoCapture(video_path)
    assert cap.isOpened(), "Error reading video file"
    w, h, fps = (int(cap.get(x)) for x in (cv2.CAP_PROP_FRAME_WIDTH, cv2.CAP_PROP_FRAME_HEIGHT, cv2.CAP_PROP_FPS))
    video_writer = cv2.VideoWriter(output_video_path, cv2.VideoWriter_fourcc(*"mp4v"), fps, (w, h))

    line_points = [(20, 400), (1080, 400)]
    counter = solutions.ObjectCounter(show=True, region=line_points, model=model_path, classes=classes_to_count)

    while cap.isOpened():
        success, im0 = cap.read()
        if not success:
            print("Video frame is empty or processing is complete.")
            break
        results = counter(im0)
        video_writer.write(results.plot_im)

    cap.release()
    video_writer.release()
    cv2.destroyAllWindows()


count_specific_classes("path/to/video.mp4", "output_specific_classes.avi", "yolo11n.pt", [0, 2])
```

In this example, `classes_to_count=[0, 2]` means it counts objects of class `0` and `2` (e.g., person and car in the COCO dataset). You can find more information about class indices in the [COCO dataset documentation](https://docs.ultralytics.com/datasets/detect/coco/).
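Hard-coding indices such as `[0, 2]` is easy to get wrong when you switch models. A loaded Ultralytics model exposes its index-to-name mapping (for example via `YOLO("yolo11n.pt").names`), so a small hypothetical helper, `class_ids` below, can build the `classes` list from names instead. This sketch uses a hand-written excerpt of the COCO mapping so it runs without downloading a model:

```python
def class_ids(names: dict[int, str], wanted: list[str]) -> list[int]:
    """Return the class indices for the `wanted` names, in the order given."""
    lookup = {name: idx for idx, name in names.items()}
    missing = [name for name in wanted if name not in lookup]
    if missing:
        raise ValueError(f"unknown class names: {missing}")
    return [lookup[name] for name in wanted]


# Excerpt of the COCO index-to-name mapping (person=0, car=2).
coco_names = {0: "person", 1: "bicycle", 2: "car", 3: "motorcycle"}
classes_to_count = class_ids(coco_names, ["person", "car"])
```

The resulting list can be passed straight to `solutions.ObjectCounter(..., classes=classes_to_count)`, and a typo in a class name fails loudly instead of silently counting the wrong objects.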

### Why should I use YOLO11 over other [object detection](https://www.ultralytics.com/glossary/object-detection) models for real-time applications?

Ultralytics YOLO11 provides several advantages over other object detection models like [EfficientDet](https://docs.ultralytics.com/compare/yolo11-vs-efficientdet/), Faster R-CNN, SSD, and previous YOLO versions:

1. **Speed and Efficiency:** YOLO11 offers real-time processing capabilities, making it ideal for applications requiring high-speed inference, such as surveillance and [autonomous driving](https://www.ultralytics.com/blog/ai-in-self-driving-cars).

2. **[Accuracy](https://www.ultralytics.com/glossary/accuracy):** It provides state-of-the-art accuracy for object detection and tracking tasks, reducing the number of false positives and improving overall system reliability.

3. **Ease of Integration:** YOLO11 offers seamless integration with various platforms and devices, including mobile and [edge devices](https://docs.ultralytics.com/guides/nvidia-jetson/), which is crucial for modern AI applications.

4. **Flexibility:** Supports various tasks like object detection, [segmentation](https://docs.ultralytics.com/tasks/segment/), and tracking with configurable models to meet specific use-case requirements.

Check out Ultralytics [YOLO11 Documentation](https://docs.ultralytics.com/models/yolo11/) for a deeper dive into its features and performance comparisons.

### Can I use YOLO11 for advanced applications like crowd analysis and traffic management?

Yes, Ultralytics YOLO11 is well suited for advanced applications like crowd analysis and traffic management thanks to its real-time detection capabilities, scalability, and integration flexibility. Its features support high-accuracy object tracking, counting, and classification in dynamic environments. Example use cases include:

- **Crowd Analysis:** Monitor and manage large gatherings, ensuring safety and optimizing crowd flow with [region-based counting](https://docs.ultralytics.com/guides/region-counting/).

- **Traffic Management:** Track and count vehicles, analyze traffic patterns, and manage congestion in real-time with [speed estimation](https://docs.ultralytics.com/guides/speed-estimation/) capabilities.

- **Retail Analytics:** Analyze customer movement patterns and product interactions to optimize store layouts and improve customer experience.

- **Industrial Automation:** Count products on conveyor belts and monitor production lines for quality control and efficiency improvements.

For more specialized applications, explore [Ultralytics Solutions](https://docs.ultralytics.com/solutions/) for a comprehensive set of tools designed for real-world computer vision challenges.

---

# Source: https://raw.githubusercontent.com/ultralytics/ultralytics/main/docs/en/guides/steps-of-a-cv-project.md

---

comments: true

description: Discover essential steps for launching a successful computer vision project, from defining goals to model deployment and maintenance.

keywords: Computer Vision, AI, Object Detection, Image Classification, Instance Segmentation, Data Annotation, Model Training, Model Evaluation, Model Deployment

---

# Understanding the Key Steps in a Computer Vision Project

## Introduction

Computer vision is a subfield of [artificial intelligence](https://www.ultralytics.com/glossary/artificial-intelligence-ai) (AI) that helps computers see and understand the world like humans do. It processes and analyzes images or videos to extract information, recognize patterns, and make decisions based on that data.


Computer vision techniques like [object detection](../tasks/detect.md), [image classification](../tasks/classify.md), and [instance segmentation](../tasks/segment.md) can be applied across various industries, from [autonomous driving](https://www.ultralytics.com/solutions/ai-in-automotive) to [medical imaging](https://www.ultralytics.com/solutions/ai-in-healthcare), to gain valuable insights.

Working on your own computer vision projects is a great way to understand and learn more about computer vision. However, a computer vision project can consist of many steps, and it might seem confusing at first. By the end of this guide, you'll be familiar with the steps involved in a computer vision project. We'll walk through everything from the beginning to the end of a project, explaining why each part is important.

## An Overview of a Computer Vision Project

Before discussing the details of each step involved in a computer vision project, let's look at the overall process. If you started a computer vision project today, you'd take the following steps:

- Your first priority would be to understand your project's requirements.

- Then, you'd collect and accurately label the images that will help train your model.

- Next, you'd clean your data and apply augmentation techniques to prepare it for model training.

- After model training, you'd thoroughly test and evaluate your model to make sure it performs consistently under different conditions.

- Finally, you'd deploy your model into the real world and update it based on new insights and feedback.

Now that we know what to expect, let's dive right into the steps and get your project moving forward.

## Step 1: Defining Your Project's Goals

The first step in any computer vision project is clearly defining the problem you're trying to solve. Knowing the end goal helps you start to build a solution. This is especially true when it comes to computer vision because your project's objective will directly affect which computer vision task you need to focus on.

Here are some examples of project objectives and the computer vision tasks that can be used to reach these objectives:

- **Objective:** To develop a system that can monitor and manage the flow of different vehicle types on highways, improving traffic management and safety.

- **Computer Vision Task:** Object detection is ideal for traffic monitoring because it efficiently locates and identifies multiple vehicles. It is also less computationally demanding than image segmentation, whose pixel-level detail this task does not need, so it supports faster, real-time analysis.

- **Objective:** To develop a tool that assists radiologists by providing precise, pixel-level outlines of tumors in medical imaging scans.

- **Computer Vision Task:** Image segmentation is suitable for medical imaging because it provides accurate and detailed boundaries of tumors that are crucial for assessing size, shape, and treatment planning.

- **Objective:** To create a digital system that categorizes various documents (e.g., invoices, receipts, legal paperwork) to improve organizational efficiency and document retrieval.

- **Computer Vision Task:** [Image classification](https://www.ultralytics.com/glossary/image-classification) is ideal here as it handles one document at a time, without needing to consider the document's position in the image. This approach simplifies and accelerates the sorting process.

### Step 1.5: Selecting the Right Model and Training Approach

After understanding the project objective and suitable computer vision tasks, an essential part of defining the project goal is [selecting the right model](../models/index.md) and training approach.

Depending on the objective, you might choose to select the model first or after seeing what data you are able to collect in Step 2. For example, suppose your project is highly dependent on the availability of specific types of data. In that case, it may be more practical to gather and analyze the data first before selecting a model. On the other hand, if you have a clear understanding of the model requirements, you can choose the model first and then collect data that fits those specifications.

Choosing between training from scratch or using [transfer learning](https://www.ultralytics.com/glossary/transfer-learning) affects how you prepare your data. Training from scratch requires a diverse dataset to build the model's understanding from the ground up. Transfer learning, on the other hand, allows you to use a pretrained model and adapt it with a smaller, more specific dataset. Also, choosing a specific model to train will determine how you need to prepare your data, such as resizing images or adding annotations, according to the model's specific requirements.
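In Ultralytics, this choice shows up in what you pass to `YOLO()`: a `.yaml` model config builds a new, randomly initialized model (training from scratch), while a `.pt` checkpoint loads pretrained weights (transfer learning). The sketch below assumes the YOLO11 naming scheme (`yolo11n.pt`, `yolo11n-seg.pt`, `yolo11n-cls.pt`, and matching `.yaml` configs); `model_source` is a hypothetical helper that only builds the file name you would hand to `YOLO()`.

```python
# Suffix each task adds to the YOLO11 model name (plain detection has none)
TASK_SUFFIX = {"detect": "", "segment": "-seg", "classify": "-cls", "pose": "-pose", "obb": "-obb"}


def model_source(task: str, from_scratch: bool, size: str = "n") -> str:
    """Return the model file to pass to ultralytics.YOLO() (hypothetical helper).

    A .yaml config builds an untrained model (training from scratch);
    a .pt checkpoint loads pretrained weights (transfer learning).
    """
    if task not in TASK_SUFFIX:
        raise ValueError(f"unknown task: {task!r}")
    ext = ".yaml" if from_scratch else ".pt"
    return f"yolo11{size}{TASK_SUFFIX[task]}{ext}"


print(model_source("detect", from_scratch=False))  # yolo11n.pt
print(model_source("segment", from_scratch=True))  # yolo11n-seg.yaml
```

You would then call, for example, `YOLO(model_source("segment", from_scratch=False))` to start from pretrained segmentation weights.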

Note: When choosing a model, consider where it will be [deployed](./model-deployment-options.md) to ensure compatibility and performance. For example, lightweight models are ideal for [edge computing](https://www.ultralytics.com/glossary/edge-computing) due to their efficiency on resource-constrained devices. To learn more about the key points related to defining your project, read [our guide](./defining-project-goals.md) on defining your project's goals and selecting the right model.

Before getting into the hands-on work of a computer vision project, it's important to have a clear understanding of these details. Double-check that you've considered the following before moving on to Step 2:

- Clearly define the problem you're trying to solve.

- Determine the end goal of your project.

- Identify the specific computer vision task needed (e.g., object detection, image classification, image segmentation).

- Decide whether to train a model from scratch or use transfer learning.

- Select the appropriate model for your task and deployment needs.

## Step 2: Data Collection and Data Annotation

The quality of your computer vision models depends on the quality of your dataset. You can either collect images from the internet, take your own pictures, or use pre-existing datasets. Here are some great resources for downloading high-quality datasets: [Google Dataset Search Engine](https://datasetsearch.research.google.com/), [UC Irvine Machine Learning Repository](https://archive.ics.uci.edu/), and [Kaggle Datasets](https://www.kaggle.com/datasets).

Some libraries, like Ultralytics, provide [built-in support for various datasets](../datasets/index.md), making it easier to get started with high-quality data. These libraries often include utilities for using popular datasets seamlessly, which can save you a lot of time and effort in the initial stages of your project.

However, if you choose to collect images or take your own pictures, you'll need to annotate your data. Data annotation is the process of labeling your data to impart knowledge to your model. The type of data annotation you'll work with depends on your specific computer vision task. Here are some examples:

- **Image Classification:** You'll label the entire image as a single class.

- **[Object Detection](https://www.ultralytics.com/glossary/object-detection):** You'll draw bounding boxes around each object in the image and label each box.

- **[Image Segmentation](https://www.ultralytics.com/glossary/image-segmentation):** You'll label each pixel in the image according to the object it belongs to, creating detailed object boundaries.
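For object detection, Ultralytics YOLO reads one plain-text label file per image, with one row per object: the class index followed by the box center x, center y, width, and height, each normalized to the range 0 to 1 by the image size. Many annotation tools export pixel corner coordinates instead; the hypothetical helper below sketches the conversion.

```python
def to_yolo_line(class_id: int, x1: float, y1: float, x2: float, y2: float, img_w: int, img_h: int) -> str:
    """Convert a pixel box (top-left x1, y1 and bottom-right x2, y2) to a YOLO label row."""
    x_center = (x1 + x2) / 2 / img_w
    y_center = (y1 + y2) / 2 / img_h
    width = (x2 - x1) / img_w
    height = (y2 - y1) / img_h
    return f"{class_id} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"


# A 200x200 px box from (100, 200) to (300, 400) in a 640x480 image, class 0
print(to_yolo_line(0, 100, 200, 300, 400, 640, 480))  # 0 0.312500 0.625000 0.312500 0.416667
```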

[Data collection and annotation](./data-collection-and-annotation.md) can be a time-consuming manual effort. Annotation tools can help make this process easier. Here are some useful open-source annotation tools: [Label Studio](https://github.com/HumanSignal/label-studio), [CVAT](https://github.com/cvat-ai/cvat), and [Labelme](https://github.com/wkentaro/labelme).

## Step 3: Data Augmentation and Splitting Your Dataset

After collecting and annotating your image data, it's important to first split your dataset into training, validation, and test sets before performing [data augmentation](https://www.ultralytics.com/glossary/data-augmentation). Splitting your dataset before augmentation is crucial to test and validate your model on original, unaltered data. It helps accurately assess how well the model generalizes to new, unseen data.

Here's how to split your data:

- **Training Set:** It is the largest portion of your data, typically 70-80% of the total, used to train your model.

- **Validation Set:** Usually around 10-15% of your data; this set is used to tune hyperparameters and validate the model during training, helping to prevent [overfitting](https://www.ultralytics.com/glossary/overfitting).

- **Test Set:** The remaining 10-15% of your data is set aside as the test set. It is used to evaluate the model's performance on unseen data after training is complete.
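A minimal sketch of a reproducible 70/15/15 split over a list of image file names, using only the standard library. The function name and default fractions are illustrative assumptions; in practice you would keep each image together with its label file so the pair lands in the same split.

```python
import random


def split_dataset(items, train_frac=0.7, val_frac=0.15, seed=0):
    """Shuffle items reproducibly and split them into train/val/test lists.

    Whatever remains after the train and val portions becomes the test set.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # fixed seed gives the same split every run
    n_train = round(len(items) * train_frac)
    n_val = round(len(items) * val_frac)
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]
    return train, val, test


images = [f"img_{i:03d}.jpg" for i in range(100)]
train, val, test = split_dataset(images)
print(len(train), len(val), len(test))  # 70 15 15
```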

After splitting your data, you can perform data augmentation by applying transformations like rotating, scaling, and flipping images to artificially increase the size of your dataset. Data augmentation makes your model more robust to variations and improves its performance on unseen images.
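Geometric augmentations must transform the labels along with the pixels. The sketch below, assuming NumPy and YOLO-format boxes (normalized center x, center y, width, height), shows the simplest case, a horizontal flip: the image columns are reversed and each box's center x becomes `1 - x`. When training with Ultralytics you normally don't write this yourself, since horizontal flipping is controlled by the `fliplr` training argument.

```python
import numpy as np


def hflip_with_labels(image: np.ndarray, labels: list[tuple[int, float, float, float, float]]):
    """Mirror an H x W x C image left-to-right and update YOLO-format boxes to match."""
    flipped = image[:, ::-1].copy()  # reverse the width axis
    # Only the x-center moves; y-center, width and height are unchanged by a horizontal flip
    new_labels = [(cls, 1.0 - xc, yc, w, h) for cls, xc, yc, w, h in labels]
    return flipped, new_labels


img = np.zeros((480, 640, 3), dtype=np.uint8)
flipped, boxes = hflip_with_labels(img, [(0, 0.25, 0.5, 0.2, 0.4)])
print(boxes)  # [(0, 0.75, 0.5, 0.2, 0.4)]
```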

Libraries like [OpenCV](https://www.ultralytics.com/glossary/opencv), [Albumentations](../integrations/albumentations.md), and [TensorFlow](https://www.ultralytics.com/glossary/tensorflow) offer flexible augmentation functions that you can use. Additionally, Ultralytics has [built-in augmentation settings](../modes/train.md) directly within its model training function, simplifying the process.

To understand your data better, you can use tools like [Matplotlib](https://matplotlib.org/) or [Seaborn](https://seaborn.pydata.org/) to visualize the images and analyze their distribution and characteristics. Visualizing your data helps identify patterns, anomalies, and the effectiveness of your augmentation techniques. You can also use [Ultralytics Explorer](../datasets/explorer/index.md), a tool for exploring computer vision datasets with semantic search, SQL queries, and vector similarity search.
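One quick check of data distribution is the number of labeled objects per class, since a heavily imbalanced dataset often explains weak results on rare classes. A minimal sketch, assuming YOLO-format label files whose contents you have already read into strings; `class_histogram` is a hypothetical helper.

```python
from collections import Counter


def class_histogram(label_texts):
    """Count object instances per class index across YOLO-format label files.

    label_texts: iterable of file contents, one row per object ("cls xc yc w h").
    """
    counts = Counter()
    for text in label_texts:
        for row in text.splitlines():
            if row.strip():  # skip blank lines
                counts[int(row.split()[0])] += 1
    return dict(sorted(counts.items()))


print(class_histogram(["0 0.5 0.5 0.1 0.1\n2 0.3 0.3 0.1 0.1\n", "0 0.1 0.1 0.1 0.1"]))  # {0: 2, 2: 1}
```

The resulting dict can be plotted with Matplotlib, for example `plt.bar(list(hist), list(hist.values()))`.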

By properly [understanding, splitting, and augmenting your data](./preprocessing_annotated_data.md), you can develop a well-trained, validated, and tested model that performs well in real-world applications.

## Step 4: Model Training

Once your dataset is ready for training, you can focus on setting up the necessary environment, managing your datasets, and training your model.

First, you'll need to make sure your environment is configured correctly. Typically, this includes the following:

- Installing essential libraries and frameworks like TensorFlow, [PyTorch](https://www.ultralytics.com/glossary/pytorch), or [Ultralytics](../quickstart.md).

- If you are using a GPU, installing libraries like CUDA and cuDNN will help enable GPU acceleration and speed up the training process.

Then, you can load your training and validation datasets into your environment. Normalize and preprocess the data through resizing, format conversion, or augmentation. With your model selected, configure the layers and specify hyperparameters. Compile the model by setting the [loss function](https://www.ultralytics.com/glossary/loss-function), optimizer, and performance metrics.

Libraries like Ultralytics simplify the training process. You can [start training](../modes/train.md) by feeding data into the model with minimal code. These libraries handle weight adjustments, [backpropagation](https://www.ultralytics.com/glossary/backpropagation), and validation automatically. They also offer tools to monitor progress and adjust hyperparameters easily. After training, save the model and its weights with a few commands.
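With Ultralytics, the dataset is described by a small YAML file (dataset root, image folder per split, and class names), and training is a single `train` call. The sketch assumes an `images/train`, `images/val`, `images/test` layout under a hypothetical `datasets/my_data` root; `make_data_yaml` is a hypothetical helper, and the `ultralytics` import is deferred so the YAML part runs without the package installed.

```python
def make_data_yaml(root: str, class_names: list[str]) -> str:
    """Build the text of an Ultralytics dataset YAML file (hypothetical helper)."""
    lines = [
        f"path: {root}",  # dataset root directory
        "train: images/train",  # split folders, relative to 'path'
        "val: images/val",
        "test: images/test",
        "names:",
    ]
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    return "\n".join(lines) + "\n"


def train(data_yaml_path: str, weights: str = "yolo11n.pt", epochs: int = 100, imgsz: int = 640):
    """Fine-tune pretrained YOLO11 weights on the dataset described by data_yaml_path."""
    from ultralytics import YOLO  # requires `pip install ultralytics`

    model = YOLO(weights)
    return model.train(data=data_yaml_path, epochs=epochs, imgsz=imgsz)


print(make_data_yaml("datasets/my_data", ["person", "car"]))
```

Write the text to a file such as `my_data.yaml`, then call `train("my_data.yaml")`.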

It's important to keep in mind that proper dataset management is vital for efficient training. Use version control for datasets to track changes and ensure reproducibility. Tools like [DVC (Data Version Control)](../integrations/dvc.md) can help manage large datasets.

## Step 5: Model Evaluation and Model Finetuning

It's important to assess your model's performance using various metrics and refine it to improve [accuracy](https://www.ultralytics.com/glossary/accuracy). [Evaluating](../modes/val.md) helps identify areas where the model excels and where it may need improvement. [Fine-tuning](https://www.ultralytics.com/glossary/fine-tuning) ensures the model is optimized for the best possible performance.

- **[Performance Metrics](./yolo-performance-metrics.md):** Use metrics like accuracy, [precision](https://www.ultralytics.com/glossary/precision), [recall](https://www.ultralytics.com/glossary/recall), and F1-score to evaluate your model's performance. These metrics provide insights into how well your model is making predictions.

- **[Hyperparameter Tuning](./hyperparameter-tuning.md):** Adjust hyperparameters to optimize model performance. Techniques like grid search or random search can help find the best hyperparameter values.

- **Fine-Tuning:** Make small adjustments to the model architecture or training process to enhance performance. This might involve tweaking [learning rates](https://www.ultralytics.com/glossary/learning-rate), [batch sizes](https://www.ultralytics.com/glossary/batch-size), or other model parameters.
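Precision, recall, and F1-score all follow from counts of true positives (TP), false positives (FP), and false negatives (FN). For detection, a prediction typically counts as a TP when it matches a ground-truth box of the same class above an IoU threshold. A minimal sketch of the formulas; Ultralytics' `val` mode reports precision, recall, and mAP for you.

```python
def precision_recall_f1(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Compute precision, recall and F1 from TP/FP/FN counts (0.0 when undefined)."""
    precision = tp / (tp + fp) if tp + fp else 0.0  # share of predictions that were correct
    recall = tp / (tp + fn) if tp + fn else 0.0  # share of ground-truth objects found
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


# 8 correct detections, 2 spurious ones, 4 missed objects
p, r, f1 = precision_recall_f1(tp=8, fp=2, fn=4)
print(f"P={p:.3f} R={r:.3f} F1={f1:.3f}")  # P=0.800 R=0.667 F1=0.727
```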

For a deeper understanding of model evaluation and fine-tuning techniques, check out our [model evaluation insights guide](./model-evaluation-insights.md).
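Random search, mentioned under Hyperparameter Tuning above, can be sketched in a few lines: sample each hyperparameter uniformly from a range, score each candidate, and keep the best. Here `objective` is a placeholder for whatever trains or evaluates a model and returns a score to maximize (for example validation mAP); the toy objective in the last line stands in for a real training run. Ultralytics also ships its own tuner, described in the hyperparameter tuning guide linked above.

```python
import random


def random_search(objective, space, n_trials=20, seed=0):
    """Return (best_params, best_score) after n_trials random samples of `space`.

    space maps each hyperparameter name to a (low, high) range sampled uniformly.
    """
    rng = random.Random(seed)
    best_params, best_score = None, float("-inf")
    for _ in range(n_trials):
        params = {name: rng.uniform(low, high) for name, (low, high) in space.items()}
        score = objective(params)  # e.g. train briefly, then return validation mAP
        if score > best_score:
            best_params, best_score = params, score
    return best_params, best_score


# Toy objective that peaks at lr0 = 0.01 (stands in for a real training run)
best, score = random_search(lambda p: -(p["lr0"] - 0.01) ** 2, {"lr0": (1e-4, 0.1)}, n_trials=50)
```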

## Step 6: Model Testing

In this step, you make sure that your model performs well on completely unseen data, confirming its readiness for deployment. Model testing differs from model evaluation in that it focuses on verifying the final model's performance rather than iteratively improving it.

It's important to thoroughly test and debug any common issues that may arise. Test your model on a separate test dataset that was not used during training or validation. This dataset should represent real-world scenarios to ensure the model's performance is consistent and reliable.
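To confirm that no test image was also used for training or validation, one simple check is to hash every file and look for identical hashes across splits. This minimal sketch catches only byte-identical duplicates; resized or re-encoded copies would need a perceptual hash, which is not shown. The function name is hypothetical.

```python
import hashlib
from itertools import combinations


def find_cross_split_duplicates(splits: dict[str, list[bytes]]) -> list[tuple[str, str, str]]:
    """Report byte-identical images shared between splits.

    splits maps a split name to the raw bytes of its image files
    (e.g. read with pathlib.Path.read_bytes()).
    Returns (split_a, split_b, sha256_hex) for every duplicate found.
    """
    hashes = {name: {hashlib.sha256(data).hexdigest() for data in files} for name, files in splits.items()}
    leaks = []
    for a, b in combinations(sorted(hashes), 2):
        for digest in sorted(hashes[a] & hashes[b]):
            leaks.append((a, b, digest))
    return leaks


print(find_cross_split_duplicates({"train": [b"img1", b"img2"], "val": [b"img3"], "test": [b"img2"]}))
```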

Also, address common problems such as overfitting, [underfitting](https://www.ultralytics.com/glossary/underfitting), and data leakage. Use techniques like [cross-validation](https://www.ultralytics.com/glossary/cross-validation) and [anomaly detection](https://www.ultralytics.com/glossary/anomaly-detection) to identify and fix these issues. For comprehensive testing strategies, refer to our [model testing guide](./model-testing.md).
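Cross-validation trains and validates k times, each time holding out a different fold of the data; averaging the k scores gives a steadier estimate than a single split, and a consistent gap between training and validation scores points to overfitting. A minimal, standard-library sketch of the fold indices (the function name is illustrative):

```python
def k_fold_indices(n: int, k: int):
    """Yield (train_indices, val_indices) for each of k contiguous folds over n samples.

    Shuffle your samples once beforehand if their order is not random.
    """
    if not 2 <= k <= n:
        raise ValueError("k must be between 2 and n")
    # Spread the remainder so fold sizes differ by at most one
    fold_sizes = [n // k + (1 if i < n % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val = list(range(start, start + size))
        train = list(range(0, start)) + list(range(start + size, n))
        yield train, val
        start += size


for train_idx, val_idx in k_fold_indices(10, 5):
    print(len(train_idx), len(val_idx))  # 8 2 on every fold
```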

## Step 7: Model Deployment

Once your model has been thoroughly tested, it's time to deploy it. [Model deployment](https://www.ultralytics.com/glossary/model-deployment) involves making your model available for use in a production environment. Here are the steps to deploy a computer vision model:

- **Setting Up the Environment:** Configure the necessary infrastructure for your chosen deployment option, whether it's cloud-based (AWS, Google Cloud, Azure) or edge-based (local devices, IoT).

- **[Exporting the Model](../modes/export.md):** Export your model to the appropriate format (e.g., ONNX, TensorRT, CoreML for YOLO11) to ensure compatibility with your deployment platform.

- **Deploying the Model:** Deploy the model by setting up APIs or endpoints and integrating it with your application.

- **Ensuring Scalability:** Implement load balancers, auto-scaling groups, and monitoring tools to manage resources and handle increasing data and user requests.
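With Ultralytics, exporting is a call to the model's `export` method with a `format` argument; `"onnx"`, `"engine"` (TensorRT), `"coreml"`, and `"tflite"` are among the accepted values, and the call returns the path of the exported file. The mapping from deployment target to format below is an illustrative assumption rather than a rule, the target names are hypothetical, and the `ultralytics` import is deferred so the lookup runs without the package.

```python
# Illustrative choices only: pick the format your target runtime actually supports
FORMAT_BY_TARGET = {
    "cloud_cpu": "onnx",  # portable, runs under ONNX Runtime
    "nvidia_gpu": "engine",  # TensorRT engine for NVIDIA GPUs and Jetson
    "apple": "coreml",  # iOS and macOS
    "android": "tflite",  # TensorFlow Lite for mobile
}


def export_format(target: str) -> str:
    """Look up the Ultralytics export format string for a deployment target."""
    try:
        return FORMAT_BY_TARGET[target]
    except KeyError:
        raise ValueError(f"no export format configured for {target!r}") from None


def export_for(weights: str, target: str) -> str:
    """Export trained weights for a target and return the exported file path."""
    from ultralytics import YOLO  # requires `pip install ultralytics`

    return YOLO(weights).export(format=export_format(target))
```

For example, `export_for("runs/detect/train/weights/best.pt", "cloud_cpu")` would produce an ONNX file, assuming that weights path exists.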

For more detailed guidance on deployment strategies and best practices, check out our [model deployment practices guide](./model-deployment-practices.md).

## Step 8: Monitoring, Maintenance, and Documentation

Once your model is deployed, it's important to continuously monitor its performance, maintain it to handle any issues, and document the entire process for future reference and improvements.

Monitoring tools can help you track key performance indicators (KPIs) and detect anomalies or drops in accuracy. Monitoring also makes you aware of model drift, where the model's performance declines over time due to changes in the input data. Periodically retrain the model with updated data to maintain accuracy and relevance.
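Production data rarely comes with ground-truth labels, so one common proxy is the model's prediction confidence. The sketch below is a simple heuristic, not a formal drift test: it compares the mean confidence over a sliding window of recent detections against a baseline measured at deployment time and flags a drop larger than a chosen tolerance. The class name, window size, and tolerance are illustrative assumptions.

```python
from collections import deque
from statistics import mean


class ConfidenceDriftMonitor:
    """Flag possible drift when recent mean confidence falls well below a baseline."""

    def __init__(self, baseline_mean: float, window: int = 500, tolerance: float = 0.1):
        self.baseline_mean = baseline_mean
        self.tolerance = tolerance
        self.recent = deque(maxlen=window)  # oldest scores drop out automatically

    def update(self, confidences):
        """Add the confidence scores from one batch or frame of predictions."""
        self.recent.extend(confidences)

    def drifted(self) -> bool:
        """True once the window is full and its mean is more than `tolerance` below baseline."""
        if len(self.recent) < self.recent.maxlen:
            return False  # not enough data yet
        return self.baseline_mean - mean(self.recent) > self.tolerance


monitor = ConfidenceDriftMonitor(baseline_mean=0.8, window=10, tolerance=0.1)
monitor.update([0.6] * 10)
print(monitor.drifted())  # True
```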

In addition to monitoring and maintenance, documentation is also key. Thoroughly document the entire process, including model architecture, training procedures, hyperparameters, data preprocessing steps, and any changes made during deployment and maintenance. Good documentation ensures reproducibility and makes future updates or troubleshooting easier. By effectively [monitoring, maintaining, and documenting your model](./model-monitoring-and-maintenance.md), you can ensure it remains accurate, reliable, and easy to manage over its lifecycle.
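One lightweight way to keep this documentation reproducible is to save a machine-readable record next to each trained model. The fields below are an illustrative assumption rather than a standard schema, and `model_record` is a hypothetical helper; adapt the fields to what your team tracks.

```python
import json
from datetime import datetime, timezone


def model_record(model_name, dataset_version, hyperparameters, preprocessing, notes=""):
    """Return a JSON document describing how a model was built (illustrative schema)."""
    record = {
        "model": model_name,
        "dataset_version": dataset_version,  # e.g. a DVC or Git tag for the data
        "hyperparameters": hyperparameters,
        "preprocessing": preprocessing,
        "notes": notes,
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    return json.dumps(record, indent=2, sort_keys=True)


print(model_record("yolo11n-custom", "data-v3", {"epochs": 100, "imgsz": 640}, ["resize to 640", "fliplr=0.5"]))
```

Writing the returned text to a file in the training run's output folder keeps the record next to the weights it describes.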

## Engaging with the Community

Connecting with a community of computer vision enthusiasts can help you tackle any issues you face while working on your computer vision project with confidence. Here are some ways to learn, troubleshoot, and network effectively.

### Community Resources

- **GitHub Issues:** Check out the [YOLO11 GitHub repository](https://github.com/ultralytics/ultralytics/issues) and use the Issues tab to ask questions, report bugs, and suggest new features. The active community and maintainers are there to help with specific issues.

- **Ultralytics Discord Server:** Join the [Ultralytics Discord server](https://discord.com/invite/ultralytics) to interact with other users and developers, get support, and share insights.

### Official Documentation

- **Ultralytics YOLO11 Documentation:** Explore the [official YOLO11 documentation](./index.md) for detailed guides with helpful tips on different computer vision tasks and projects.

Using these resources will help you overcome challenges and stay updated with the latest trends and best practices in the computer vision community.

## Next Steps

Taking on a computer vision project can be exciting and rewarding. By following the steps in this guide, you can build a solid foundation for success. Each step is crucial for developing a solution that meets your objectives and works well in real-world scenarios. As you gain experience, you'll discover advanced techniques and tools to improve your projects.

## FAQ

### How do I choose the right computer vision task for my project?

Choosing the right computer vision task depends on your project's end goal. For instance, if you want to monitor traffic, **object detection** is suitable as it can locate and identify multiple vehicle types in real-time. For medical imaging, **image segmentation** is ideal for providing detailed boundaries of tumors, aiding in diagnosis and treatment planning. Learn more about specific tasks like [object detection](../tasks/detect.md), [image classification](../tasks/classify.md), and [instance segmentation](../tasks/segment.md).

### Why is data annotation crucial in computer vision projects?

Data annotation is vital for teaching your model to recognize patterns. The type of annotation varies with the task:

- **Image Classification**: Entire image labeled as a single class.

- **Object Detection**: Bounding boxes drawn around objects.

- **Image Segmentation**: Each pixel labeled according to the object it belongs to.

Tools like [Label Studio](https://github.com/HumanSignal/label-studio), [CVAT](https://github.com/cvat-ai/cvat), and [Labelme](https://github.com/wkentaro/labelme) can assist in this process. For more details, refer to our [data collection and annotation guide](./data-collection-and-annotation.md).

### What steps should I follow to augment and split my dataset effectively?

Splitting your dataset before augmentation helps validate model performance on original, unaltered data. Follow these steps:

- **Training Set**: 70-80% of your data.

- **Validation Set**: 10-15% for [hyperparameter tuning](https://www.ultralytics.com/glossary/hyperparameter-tuning).

- **Test Set**: Remaining 10-15% for final evaluation.

After splitting, apply data augmentation techniques like rotation, scaling, and flipping to increase dataset diversity. Libraries such as [Albumentations](../integrations/albumentations.md) and OpenCV can help. Ultralytics also offers [built-in augmentation settings](../modes/train.md) for convenience.

### How can I export my trained computer vision model for deployment?

Exporting your model ensures compatibility with different deployment platforms. Ultralytics provides multiple formats, including [ONNX](../integrations/onnx.md), [TensorRT](../integrations/tensorrt.md), and [CoreML](../integrations/coreml.md). To export your YOLO11 model:

- Call the model's `export` method with the desired `format` argument (e.g., `format="onnx"`).

- Ensure the exported model fits the specifications of your deployment environment (e.g., edge devices, cloud).

For more information, check out the [model export guide](../modes/export.md).

### What are the best practices for monitoring and maintaining a deployed computer vision model?

Continuous monitoring and maintenance are essential for a model's long-term success. Implement tools for tracking Key Performance Indicators (KPIs) and detecting anomalies. Regularly retrain the model with updated data to counteract model drift. Document the entire process, including model architecture, hyperparameters, and changes, to ensure reproducibility and ease of future updates. Learn more in our [monitoring and maintenance guide](./model-monitoring-and-maintenance.md).

---

# Source: https://raw.githubusercontent.com/ultralytics/ultralytics/main/docs/en/help/index.md

---

comments: true

description: Explore the Ultralytics Help Center with guides, FAQs, CI processes, and policies to support your YOLO model experience and contributions.

keywords: Ultralytics, YOLO, help center, documentation, guides, FAQ, contributing, CI, MRE, CLA, code of conduct, security policy, privacy policy

---

# Help

Welcome to the Ultralytics Help page. This page brings together practical guides, policies, and FAQs to support your work with Ultralytics YOLO models and repositories.

- [Frequently Asked Questions (FAQ)](FAQ.md): Find answers to common questions and issues encountered by the community of Ultralytics YOLO users and contributors.

- [Contributing Guide](contributing.md): Discover the protocols for making contributions, including how to submit pull requests, report bugs, and more.

- [Continuous Integration (CI) Guide](CI.md): Gain insights into the CI processes we employ, complete with status reports for each Ultralytics repository.

- [Contributor License Agreement (CLA)](CLA.md): Review the CLA to understand the rights and responsibilities associated with contributing to Ultralytics projects.

- [Minimum Reproducible Example (MRE) Guide](minimum-reproducible-example.md): Learn the process for creating an MRE, which is crucial for the timely and effective resolution of bug reports.

- [Code of Conduct](code-of-conduct.md): Our community guidelines support a respectful and open atmosphere for all collaborators.

- [Environmental, Health, and Safety (EHS) Policy](environmental-health-safety.md): Delve into our commitment to sustainability and the well-being of all our stakeholders.

- [Security Policy](security.md): Familiarize yourself with our security protocols and the procedure for reporting vulnerabilities.

- [Privacy Policy](privacy.md): Read our privacy policy to understand how we protect your data and respect your privacy in all our services and operations.

We encourage you to review these resources for a smooth and productive experience. If you need additional support, reach out via [GitHub Issues](https://github.com/ultralytics/ultralytics/issues) or the [Ultralytics Community](https://community.ultralytics.com/).

## FAQ

### What is Ultralytics YOLO and how does it benefit my [machine learning](https://www.ultralytics.com/glossary/machine-learning-ml) projects?

Ultralytics YOLO (You Only Look Once) is a state-of-the-art, real-time [object detection](https://www.ultralytics.com/glossary/object-detection) model. Its latest version, YOLO11, enhances speed, [accuracy](https://www.ultralytics.com/glossary/accuracy), and versatility, making it ideal for a wide range of applications, from real-time video analytics to advanced machine learning research. YOLO's efficiency in detecting objects in images and videos has made it the go-to solution for businesses and researchers looking to integrate robust [computer vision](https://www.ultralytics.com/glossary/computer-vision-cv) capabilities into their projects.

For more details on YOLO11, visit the [YOLO11 documentation](../models/yolo11.md).

### How do I contribute to Ultralytics YOLO repositories?

Contributing to Ultralytics YOLO repositories is straightforward. Start by reviewing the [Contributing Guide](contributing.md) to understand the protocols for submitting pull requests, reporting bugs, and more. You'll also need to sign the [Contributor License Agreement (CLA)](CLA.md) to ensure your contributions are legally recognized. For effective bug reporting, refer to the [Minimum Reproducible Example (MRE) Guide](minimum-reproducible-example.md).

### Why should I use Ultralytics HUB for my machine learning projects?

Ultralytics HUB offers a seamless, no-code solution for managing your machine learning projects. It enables you to generate, train, and deploy AI models like YOLO11 effortlessly. Unique features include cloud training, real-time tracking, and intuitive dataset management. Ultralytics HUB simplifies the entire workflow, from data processing to [model deployment](https://www.ultralytics.com/glossary/model-deployment), making it an indispensable tool for both beginners and advanced users.

To get started, visit [Ultralytics HUB Quickstart](../hub/quickstart.md).

### What is Continuous Integration (CI) in Ultralytics, and how does it ensure high-quality code?

Continuous Integration (CI) in Ultralytics involves automated processes that ensure the integrity and quality of the codebase. Our CI setup includes Docker deployment, broken link checks, [CodeQL analysis](https://github.com/github/codeql), and PyPI publishing. These processes help maintain stable and secure repositories by automatically running tests and checks on new code submissions.

Learn more in the [Continuous Integration (CI) Guide](CI.md).

### How is [data privacy](https://www.ultralytics.com/glossary/data-privacy) handled by Ultralytics?

Ultralytics takes data privacy seriously. Our [Privacy Policy](privacy.md) outlines how we collect and use anonymized data to improve the YOLO package while prioritizing user privacy and control. We adhere to strict data protection regulations to ensure your information is secure at all times.

For more information, review our [Privacy Policy](privacy.md).

---

# Source: https://raw.githubusercontent.com/ultralytics/ultralytics/main/docs/en/index.md

---

comments: true

description: Discover Ultralytics YOLO - the latest in real-time object detection and image segmentation. Learn about its features and maximize its potential in your projects.

keywords: Ultralytics, YOLO, YOLO11, object detection, image segmentation, deep learning, computer vision, AI, machine learning, documentation, tutorial

---

# Home

Introducing Ultralytics [YOLO11](models/yolo11.md), the latest version of the acclaimed real-time object detection and image segmentation model. YOLO11 is built on cutting-edge advancements in [deep learning](https://www.ultralytics.com/glossary/deep-learning-dl) and [computer vision](https://www.ultralytics.com/blog/everything-you-need-to-know-about-computer-vision-in-2025), offering unparalleled performance in terms of speed and [accuracy](https://www.ultralytics.com/glossary/accuracy). Its streamlined design makes it suitable for various applications and easily adaptable to different hardware platforms, from edge devices to cloud APIs.

Explore the Ultralytics Docs, a comprehensive resource designed to help you understand and utilize its features and capabilities. Whether you are a seasoned [machine learning](https://www.ultralytics.com/glossary/machine-learning-ml) practitioner or new to the field, this hub aims to maximize YOLO's potential in your projects.

## Where to Start

- :material-clock-fast:{ .lg .middle } **Getting Started**

***

Install `ultralytics` with pip and get up and running in minutes to train a YOLO model

***

[:octicons-arrow-right-24: Quickstart](quickstart.md)

- :material-image:{ .lg .middle } **Predict**

***

Predict on new images, videos and streams with YOLO

***

[:octicons-arrow-right-24: Learn more](modes/predict.md)

- :fontawesome-solid-brain:{ .lg .middle } **Train a Model**

***

Train a new YOLO model on your own custom dataset from scratch or load and train on a pretrained model

***

[:octicons-arrow-right-24: Learn more](modes/train.md)

- :material-magnify-expand:{ .lg .middle } **Explore Computer Vision Tasks**

***

Discover YOLO tasks like detect, segment, classify, pose, OBB and track

***

[:octicons-arrow-right-24: Explore Tasks](tasks/index.md)

- :rocket:{ .lg .middle } **Explore YOLO11 🚀**

***

Discover Ultralytics' latest state-of-the-art YOLO11 models and their capabilities

***

[:octicons-arrow-right-24: YOLO11 Models 🚀](models/yolo11.md)

- :material-select-all:{ .lg .middle } **SAM 3: Segment Anything with Concepts 🚀 NEW**

***

Meta's latest SAM 3 with Promptable Concept Segmentation - segment all instances using text or image exemplars

***

[:octicons-arrow-right-24: SAM 3 Models](models/sam-3.md)

- :material-scale-balance:{ .lg .middle } **Open Source, AGPL-3.0**

***

Ultralytics offers two YOLO licenses: AGPL-3.0 and Enterprise. Explore YOLO on [GitHub](https://github.com/ultralytics/ultralytics).

***

[:octicons-arrow-right-24: YOLO License](https://www.ultralytics.com/license)

Watch: How to Train a YOLO11 model on Your Custom Dataset in Google Colab.

## YOLO: A Brief History

[YOLO](models/index.md) (You Only Look Once), a popular [object detection](https://www.ultralytics.com/glossary/object-detection) and [image segmentation](https://www.ultralytics.com/glossary/image-segmentation) model, was developed by Joseph Redmon and Ali Farhadi at the University of Washington. Launched in 2015, YOLO gained popularity for its high speed and accuracy.

- [YOLOv2](models/index.md), released in 2016, improved the original model by incorporating batch normalization, anchor boxes, and dimension clusters.

- [YOLOv3](models/yolov3.md), launched in 2018, further enhanced the model's performance using a more efficient backbone network, multiple anchors, and spatial pyramid pooling.

- [YOLOv4](models/yolov4.md) was released in 2020, introducing innovations like Mosaic [data augmentation](https://www.ultralytics.com/glossary/data-augmentation), a CSPDarknet53 backbone, and a new [loss function](https://www.ultralytics.com/glossary/loss-function), while keeping an anchor-based detection head.

- [YOLOv5](models/yolov5.md) further improved the model's performance and added new features such as hyperparameter optimization, integrated experiment tracking, and automatic export to popular formats.

- [YOLOv6](models/yolov6.md) was open-sourced by [Meituan](https://www.meituan.com/) in 2022 and is used in many of the company's autonomous delivery robots.

- [YOLOv7](models/yolov7.md) added new tasks such as pose estimation on the COCO keypoints dataset.

- [YOLOv8](models/yolov8.md), released in 2023 by Ultralytics, introduced new features and improvements for enhanced performance, flexibility, and efficiency, supporting a full range of vision AI tasks.

- [YOLOv9](models/yolov9.md) introduced innovative methods like Programmable Gradient Information (PGI) and the Generalized Efficient Layer Aggregation Network (GELAN).

- [YOLOv10](models/yolov10.md), created by researchers from [Tsinghua University](https://www.tsinghua.edu.cn/en/) using the [Ultralytics](https://www.ultralytics.com/) [Python package](https://pypi.org/project/ultralytics/), advanced real-time [object detection](tasks/detect.md) by introducing an end-to-end head that eliminates the need for Non-Maximum Suppression (NMS).

- **[YOLO11](models/yolo11.md) 🚀**: Ultralytics' latest YOLO models deliver state-of-the-art (SOTA) performance across multiple tasks, including [object detection](tasks/detect.md), [segmentation](tasks/segment.md), [pose estimation](tasks/pose.md), [tracking](modes/track.md), and [classification](tasks/classify.md), enabling deployment across diverse AI applications and domains.

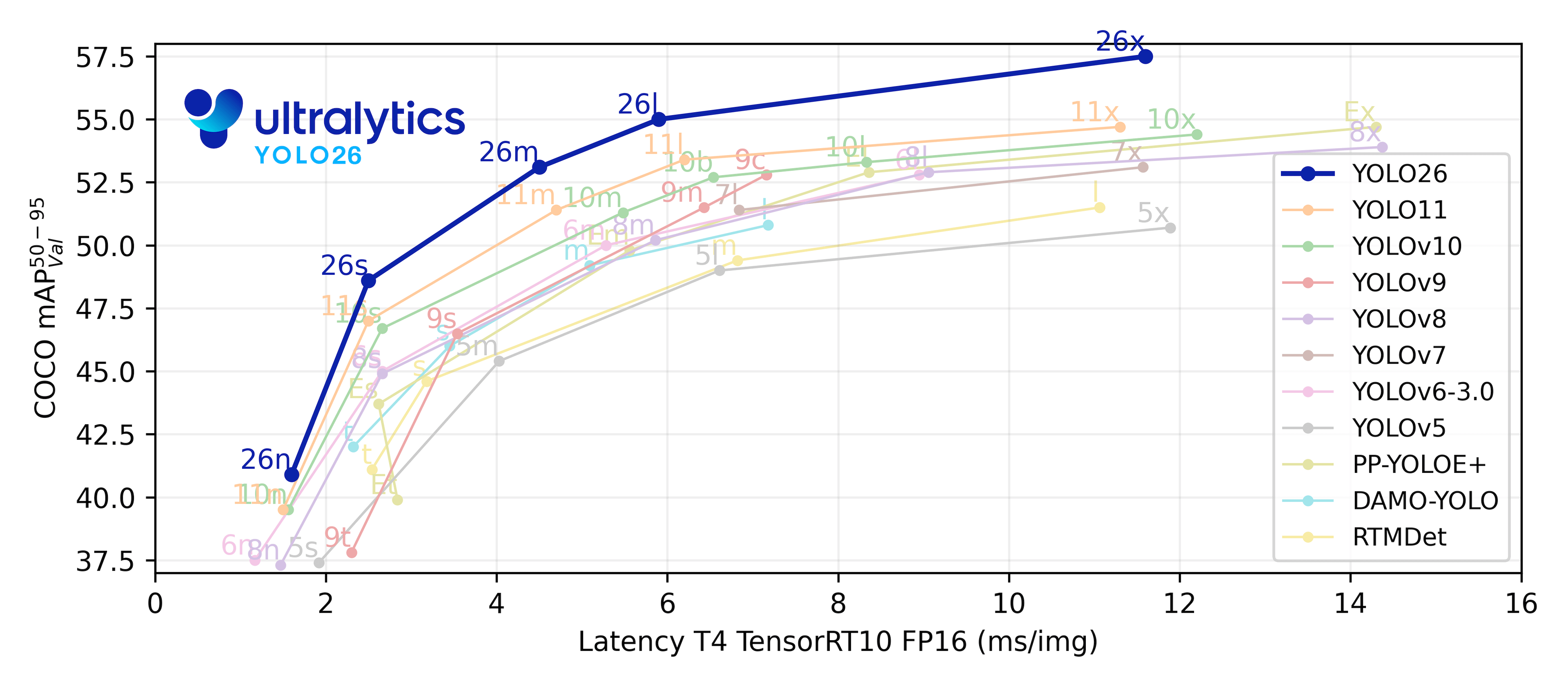

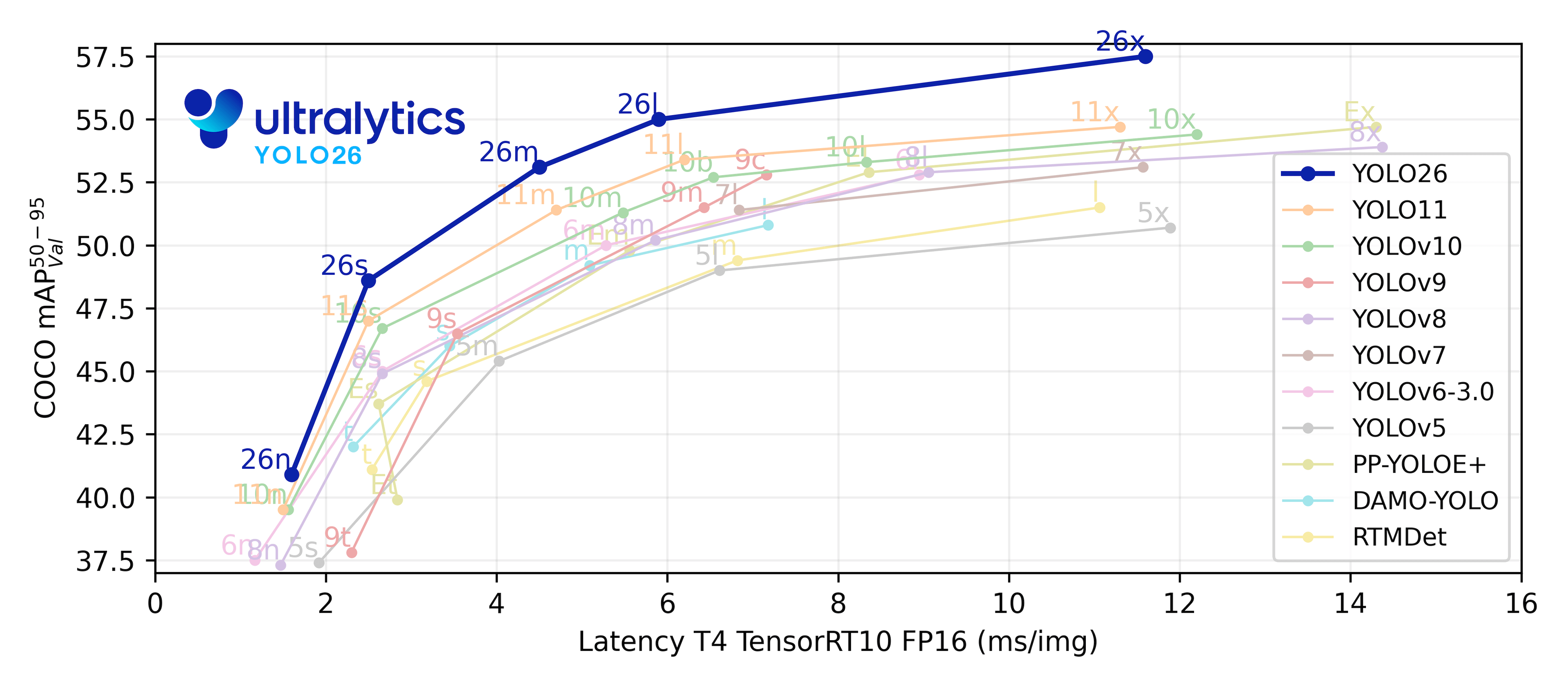

- **[YOLO26](models/yolo26.md) ⚠️ Coming Soon**: Ultralytics' next-generation YOLO model optimized for edge deployment with end-to-end NMS-free inference.
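For readers unfamiliar with the NMS step that YOLOv10 and YOLO26 remove, the following is a minimal greedy NMS sketch in plain Python. It only illustrates the idea: it is not the Ultralytics implementation, and the `(x1, y1, x2, y2)` box format and the 0.5 IoU threshold are assumptions chosen for the example.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.5):
    """Greedy NMS: keep the highest-scoring box, drop boxes that overlap it too much, repeat.

    Returns the indices of the kept boxes, ordered by descending score.
    """
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Discard remaining candidates whose overlap with the kept box exceeds the threshold
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep


# Two near-duplicate detections of one object plus one separate object
boxes = [(10, 10, 50, 50), (12, 12, 52, 52), (100, 100, 140, 140)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]
```

Because this post-processing step runs outside the network and depends on a hand-tuned threshold, removing it simplifies deployment and makes latency more predictable.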

## YOLO Licenses: How is Ultralytics YOLO licensed?

Ultralytics offers two licensing options to accommodate diverse use cases:

- **AGPL-3.0 License**: This [OSI-approved](https://opensource.org/license/agpl-v3) open-source license is ideal for students and enthusiasts, promoting open collaboration and knowledge sharing. See the [LICENSE](https://github.com/ultralytics/ultralytics/blob/main/LICENSE) file for more details.

- **Enterprise License**: Designed for commercial use, this license permits seamless integration of Ultralytics software and AI models into commercial goods and services, bypassing the open-source requirements of AGPL-3.0. If your scenario involves embedding our solutions into a commercial offering, reach out through [Ultralytics Licensing](https://www.ultralytics.com/license).

Our licensing strategy is designed to ensure that any improvements to our open-source projects are returned to the community. We believe in open source, and our mission is to ensure that our contributions can be used and expanded in ways that benefit everyone.

## The Evolution of Object Detection

Object detection has evolved significantly over the years, from traditional computer vision techniques to advanced deep learning models. The [YOLO family of models](https://www.ultralytics.com/blog/the-evolution-of-object-detection-and-ultralytics-yolo-models) has been at the forefront of this evolution, consistently pushing the boundaries of what's possible in real-time object detection.

YOLO's unique approach treats object detection as a single regression problem, predicting [bounding boxes](https://www.ultralytics.com/glossary/bounding-box) and class probabilities directly from full images in one evaluation. This revolutionary method has made YOLO models significantly faster than previous two-stage detectors while maintaining high accuracy.

With each new version, YOLO has introduced architectural improvements and innovative techniques that have enhanced performance across various metrics. YOLO11 continues this tradition by incorporating the latest advancements in computer vision research, offering even better speed-accuracy trade-offs for real-world applications.
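To make the single-regression idea concrete, the sketch below decodes a toy output tensor in the style of the original YOLO (v1) paper. In that design an S x S grid predicts, per cell, B boxes of (x, y, w, h, confidence) plus C class probabilities, giving an S x S x (B·5 + C) tensor (7 x 7 x 30 for S = 7, B = 2, C = 20). The per-cell value ordering and treating w, h directly as image fractions are simplifying assumptions. Modern models such as YOLO11 use a different, anchor-free head, so this is not how YOLO11 decodes its outputs.

```python
import numpy as np

S, B, C = 7, 2, 20  # grid size, boxes per cell, number of classes (YOLOv1 on PASCAL VOC)


def decode_yolov1(output, img_w, img_h, conf_threshold=0.25):
    """Turn an (S, S, B*5 + C) prediction tensor into (x1, y1, x2, y2, score, class_id) tuples.

    Assumed layout per cell: B blocks of (x, y, w, h, conf), then C class probabilities.
    x, y are the box center's offset inside the cell in [0, 1]; w, h are fractions of the image.
    """
    detections = []
    for row in range(S):
        for col in range(S):
            cell = output[row, col]
            class_probs = cell[B * 5:]
            class_id = int(np.argmax(class_probs))
            for b in range(B):
                x, y, w, h, conf = cell[b * 5:(b + 1) * 5]
                # Class-specific score = box confidence * conditional class probability
                score = float(conf * class_probs[class_id])
                if score < conf_threshold:
                    continue
                # Convert the cell-relative center to absolute image coordinates
                cx = (col + x) / S * img_w
                cy = (row + y) / S * img_h
                bw, bh = w * img_w, h * img_h
                detections.append((cx - bw / 2, cy - bh / 2, cx + bw / 2, cy + bh / 2, score, class_id))
    return detections


# A single forward pass yields every box at once; here we fake that output with one confident box
output = np.zeros((S, S, B * 5 + C))
output[3, 3, 0:5] = [0.5, 0.5, 0.2, 0.3, 0.9]  # box centered in grid cell (3, 3)
output[3, 3, B * 5 + 14] = 1.0  # class 14 with probability 1.0
print(decode_yolov1(output, img_w=448, img_h=448))
```

The key point is that boxes and class scores for the whole image come out of one tensor, so no separate region-proposal stage is needed.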

## FAQ

### What is Ultralytics YOLO and how does it improve object detection?

Ultralytics YOLO is the latest advancement in the acclaimed YOLO (You Only Look Once) series for real-time object detection and image segmentation. It builds on previous versions by introducing new features and improvements for enhanced performance, flexibility, and efficiency. YOLO supports various [vision AI tasks](tasks/index.md) such as detection, segmentation, pose estimation, tracking, and classification. Its state-of-the-art architecture ensures superior speed and accuracy, making it suitable for diverse applications, including edge devices and cloud APIs.

### How can I get started with YOLO installation and setup?

Getting started with YOLO is quick and straightforward. You can install the Ultralytics package using [pip](https://pypi.org/project/ultralytics/) and get up and running in minutes. Here's a basic installation command:

!!! example "Installation using pip"

=== "CLI"

```bash
pip install -U ultralytics
```

For a comprehensive step-by-step guide, visit our [Quickstart](quickstart.md) page. This resource will help you with installation instructions, initial setup, and running your first model.

### How can I train a custom YOLO model on my dataset?

Training a custom YOLO model on your dataset involves three main steps:

1. Prepare your annotated dataset.

2. Create a dataset YAML file that points to your training and validation images and lists the class names.

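3. Start training with the `yolo TASK train` command, where `TASK` is the task type (for example `detect`, `segment`, `classify`, `pose`, or `obb`), or with the Python `model.train()` method.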
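Steps 1 and 2 can be sketched in plain Python. The label layout (one `.txt` file per image, one line per object as `class x_center y_center width height`, normalized to [0, 1]) and the `path`/`train`/`val`/`names` keys follow the Ultralytics detection dataset format. The helper names `to_yolo_line` and `write_dataset_yaml`, the `images/train` and `images/val` folder layout, and the class names are hypothetical choices for illustration.

```python
from pathlib import Path


def to_yolo_line(class_id, x1, y1, x2, y2, img_w, img_h):
    """Convert a pixel-space box (x1, y1, x2, y2) into one YOLO label line.

    Format: class_id x_center y_center width height, all normalized by image size.
    """
    xc = (x1 + x2) / 2 / img_w
    yc = (y1 + y2) / 2 / img_h
    w = (x2 - x1) / img_w
    h = (y2 - y1) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"


def write_dataset_yaml(out_path, root, class_names):
    """Write a minimal dataset YAML (hypothetical train/val folder names)."""
    lines = [
        f"path: {root}  # dataset root directory",
        "train: images/train  # training images, relative to path",
        "val: images/val  # validation images, relative to path",
        "names:",
    ]
    # Class indices must match the class_id values used in the label files
    lines += [f"  {i}: {name}" for i, name in enumerate(class_names)]
    Path(out_path).write_text("\n".join(lines) + "\n")


# A 100 x 50 px box in the top-left corner of a 640 x 480 image, labeled as class 0
print(to_yolo_line(0, 0, 0, 100, 50, 640, 480))  # 0 0.078125 0.052083 0.156250 0.104167
write_dataset_yaml("dataset.yaml", "path/to/dataset", ["person", "car"])
```

The resulting `dataset.yaml` is the file passed as `data=` in the training examples.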

Here is example code for the object detection task:

!!! example "Train Example for Object Detection Task"

=== "Python"

```python
from ultralytics import YOLO

# Load a pretrained YOLO model (you can choose n, s, m, l, or x versions)
model = YOLO("yolo11n.pt")

# Start training on your custom dataset
model.train(data="path/to/dataset.yaml", epochs=100, imgsz=640)
```

=== "CLI"

```bash
# Train a YOLO model from the command line
yolo detect train data=path/to/dataset.yaml epochs=100 imgsz=640
```

For a detailed walkthrough, check out our [Train a Model](modes/train.md) guide, which includes examples and tips for optimizing your training process.

### What are the licensing options available for Ultralytics YOLO?

Ultralytics offers two licensing options for YOLO:

- **AGPL-3.0 License**: This open-source license is ideal for educational and non-commercial use, promoting open collaboration.

- **Enterprise License**: This is designed for commercial applications, allowing seamless integration of Ultralytics software into commercial products without the restrictions of the AGPL-3.0 license.

For more details, visit our [Licensing](https://www.ultralytics.com/license) page.

### How can Ultralytics YOLO be used for real-time object tracking?

Ultralytics YOLO supports efficient and customizable multi-object tracking. To use it, call `model.track()` in Python or run the `yolo track` command from the CLI:

!!! example "Example for Object Tracking on a Video"

=== "Python"

```python
from ultralytics import YOLO

# Load a pretrained YOLO model
model = YOLO("yolo11n.pt")

# Start tracking objects in a video
# You can also use live video streams or webcam input
model.track(source="path/to/video.mp4")
```

=== "CLI"

```bash
# Perform object tracking on a video from the command line
# You can specify different sources like webcam (0) or RTSP streams
yolo track source=path/to/video.mp4
```

For a detailed guide on setting up and running object tracking, check our [Track Mode](modes/track.md) documentation, which explains the configuration and practical applications in real-time scenarios.
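Conceptually, a multi-object tracker assigns a persistent ID to each detected object across frames. The sketch below shows that idea with a greedy IoU-matching tracker written from scratch. It is a simplified illustration, not the BoT-SORT or ByteTrack trackers that Ultralytics ships; the class name `GreedyIoUTracker`, its 0.3 matching threshold, and the `(x1, y1, x2, y2)` box format are hypothetical choices.

```python
def iou(a, b):
    """Intersection over union of two (x1, y1, x2, y2) boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


class GreedyIoUTracker:
    """Hypothetical tracker: match each new box to the best-overlapping track from the previous frame."""

    def __init__(self, iou_threshold=0.3):
        self.iou_threshold = iou_threshold
        self.tracks = {}  # track_id -> box seen in the previous frame
        self.next_id = 1

    def update(self, boxes):
        """Return one track ID per input box for the current frame."""
        ids = []
        unmatched = dict(self.tracks)  # previous tracks not yet claimed in this frame
        new_tracks = {}
        for box in boxes:  # greedy: boxes are matched in input order
            best_id, best_iou = None, self.iou_threshold
            for tid, prev in unmatched.items():
                overlap = iou(box, prev)
                if overlap >= best_iou:
                    best_id, best_iou = tid, overlap
            if best_id is None:
                best_id = self.next_id  # no sufficient overlap: start a new track
                self.next_id += 1
            else:
                del unmatched[best_id]
            new_tracks[best_id] = box
            ids.append(best_id)
        # Tracks not matched in this frame are dropped (no re-identification)
        self.tracks = new_tracks
        return ids


tracker = GreedyIoUTracker()
print(tracker.update([(10, 10, 50, 50), (100, 100, 140, 140)]))  # [1, 2]
print(tracker.update([(105, 102, 145, 142), (14, 12, 54, 52)]))  # [2, 1]
```

Production trackers add motion prediction (for example Kalman filters) and keep lost tracks alive for several frames, which this sketch deliberately omits.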

---

# Source: https://raw.githubusercontent.com/ultralytics/ultralytics/main/docs/en/integrations/amazon-sagemaker.md

---

comments: true

description: Learn step-by-step how to deploy Ultralytics' YOLO11 on Amazon SageMaker Endpoints, from setup to testing, for powerful real-time inference with AWS services.

keywords: YOLO11, Amazon SageMaker, AWS, Ultralytics, machine learning, computer vision, model deployment, AWS CloudFormation, AWS CDK, real-time inference

---

# A Guide to Deploying YOLO11 on Amazon SageMaker Endpoints

Deploying advanced [computer vision](https://www.ultralytics.com/glossary/computer-vision-cv) models like [Ultralytics' YOLO11](https://github.com/ultralytics/ultralytics) on Amazon SageMaker Endpoints opens up a wide range of possibilities for [machine learning](https://www.ultralytics.com/glossary/machine-learning-ml) applications. Using these models effectively depends on understanding their setup, configuration, and deployment processes. Amazon SageMaker, a robust and scalable machine learning service from AWS, provides the managed infrastructure to host YOLO11 for production inference.

This guide will take you through the process of deploying YOLO11 [PyTorch](https://www.ultralytics.com/glossary/pytorch) models on Amazon SageMaker Endpoints step by step. You'll learn the essentials of preparing your AWS environment, configuring the model appropriately, and using tools like AWS CloudFormation and the AWS Cloud Development Kit (CDK) for deployment.

## Amazon SageMaker

[Amazon SageMaker](https://aws.amazon.com/sagemaker/) is a machine learning service from Amazon Web Services (AWS) that simplifies the process of building, training, and deploying machine learning models. It provides a broad range of tools for handling various aspects of machine learning workflows. This includes automated features for tuning models, options for training models at scale, and straightforward methods for deploying models into production. SageMaker supports popular machine learning frameworks, offering the flexibility needed for diverse projects. Its features also cover data labeling, workflow management, and performance analysis.

## Deploying YOLO11 on Amazon SageMaker Endpoints

Deploying YOLO11 on Amazon SageMaker lets you use its managed environment for real-time inference and take advantage of features like autoscaling. Take a look at the AWS architecture below.

### Step 1: Set Up Your AWS Environment

First, ensure you have the following prerequisites in place:

- An AWS Account: If you don't already have one, sign up for an AWS account.

- Configured IAM Roles: You'll need an IAM role with the necessary permissions for Amazon SageMaker, AWS CloudFormation, and Amazon S3. This role should have policies that allow it to access these services.