# Squared AI

---

# Source: https://docs.squared.ai/release-notes/2024.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.squared.ai/llms.txt

> Use this file to discover all available pages before exploring further.

# 2024 Releases

Version: v0.5.0 to v0.8.0

Version: v0.12.0 to v0.13.0

Version: v0.14.0 to v0.19.0

Version: v0.20.0 to v0.22.0

Version: v0.23.0 to v0.24.0

Version: v0.25.0 to v0.30.0

Version: v0.31.0 to v0.35.0

Version: v0.36.0 to v0.38.0

---

# Source: https://docs.squared.ai/release-notes/2025.md

# 2025 Releases

> Release updates for the year 2025

Version: v0.39.0 to v0.41.0

Version: v0.41.0 to v0.41.0

Version: v0.42.0 to v0.46.0

Version: v0.47.0 to v0.50.0

Version: v0.51.0 to v0.54.0

Version: v0.55.0 to v0.59.0

Version: v0.60.0 to v0.63.0

Version: v0.64.0 to v0.67.0

Version: v0.68.0 to v0.72.0

Version: v0.73.0 to v0.77.0

Version: v0.78.0 to v0.81.0

Version: v0.82.0 to v0.85.0

---

# Source: https://docs.squared.ai/release-notes/2026.md

# 2026 Releases

> Release updates for the year 2026

Version: v0.86.0 to v0.90.0

---

# Source: https://docs.squared.ai/release-notes/2025/April-2025.md

# April 2025 Releases

> Release updates for the month of April

## 🚀 Features

* **Assistant UI**\

Introduced a new Assistant interface with UI improvements for enhanced user interaction and workflow automation.

* **EULA Management**\

Added End User License Agreement (EULA) controller and management capabilities, including database storage, permissions, and acceptance tracking.

* **WatsonX.Data Source Connector**\

Added IBM WatsonX.Data as a new source connector for data integration workflows.

* **Generic Connector Edit Component**\

Introduced a reusable generic connector edit component for improved maintainability and consistent editing experience.

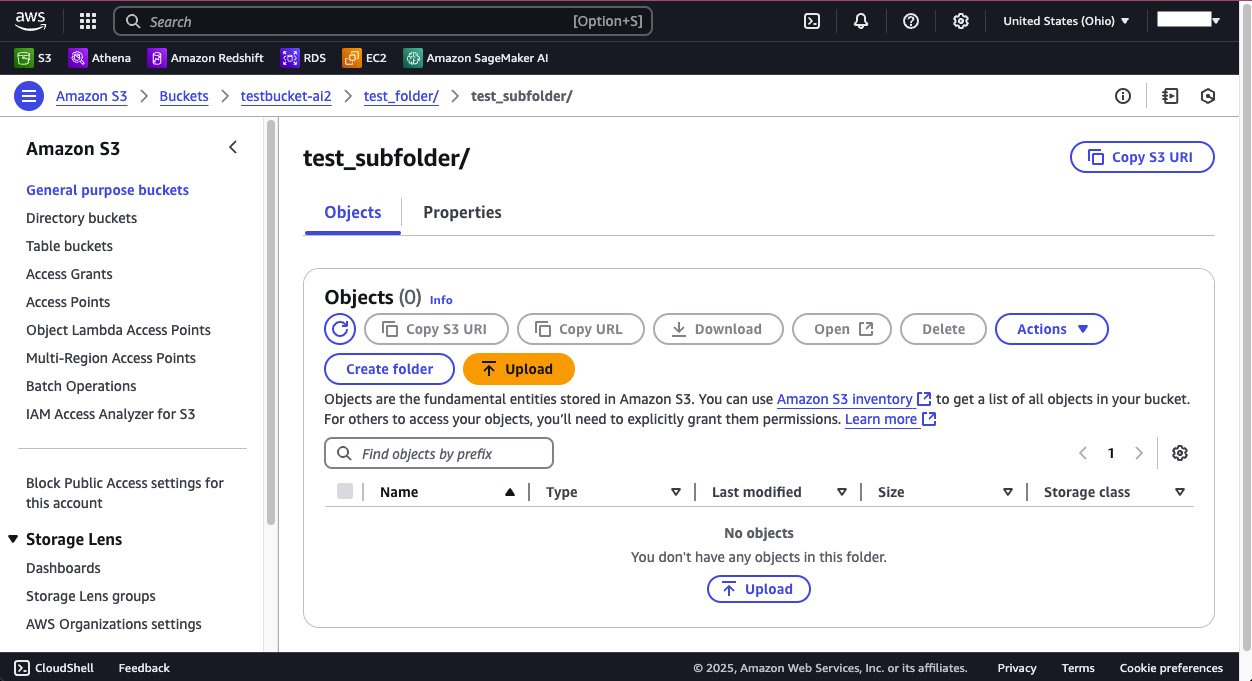

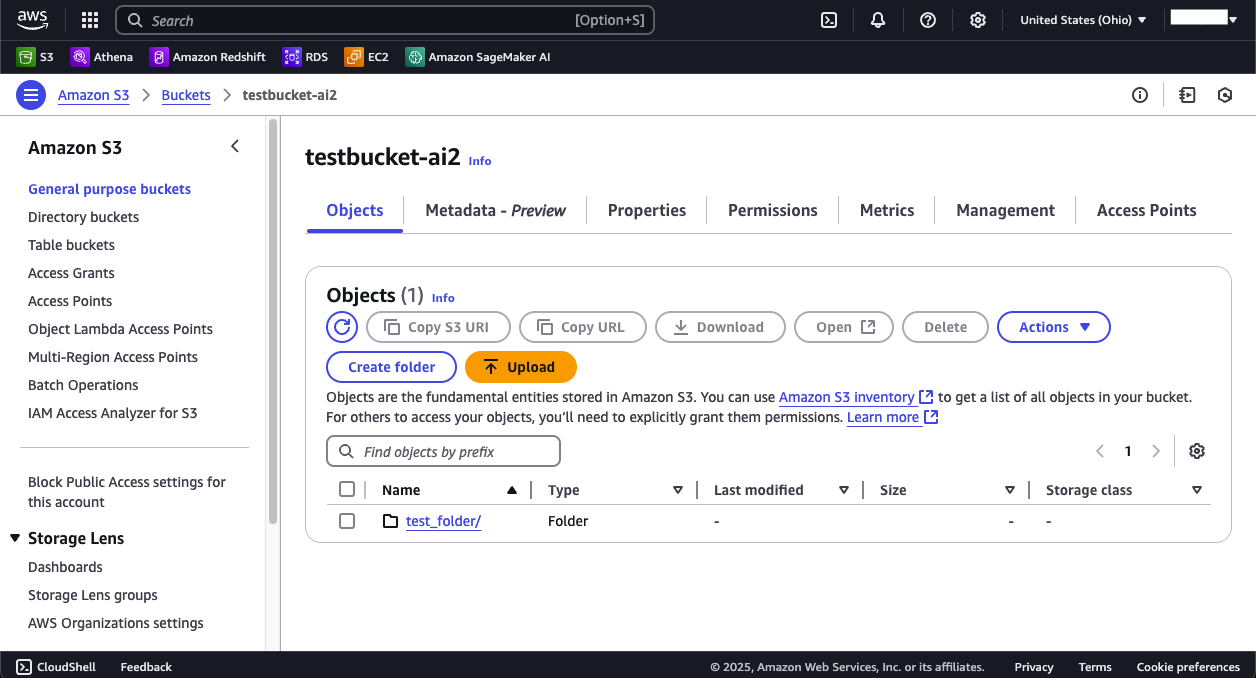

* **Unstructured File Support in S3 Connector**\

Added support for unstructured files in the S3 connector, expanding data ingestion capabilities.

* **Chatbot Data App Reports Enhancements**\

Improved reporting capabilities for chatbot data applications with new overview pages and dedicated routes.

* **Databricks Timeout Configuration**\

Added timeout configuration options for the Databricks source connector to handle long-running queries.

* **QA Verification Workflow**\

Enforced the `qa-verified` label requirement for PRs carrying the `needs-qa` label, improving the quality assurance process.

* **CI/CD Improvements**\

Updated workflows to use the GitHub Actions (GHA) Docker cache, upgraded them to the latest versions, and refined trigger event types for faster builds.

* **Snyk Security Scanning**\

Enhanced Snyk scanning to focus on manifests and lockfiles for more efficient vulnerability detection.

## 🐛 Bug Fixes

* **Top Bar Name Rendering**\

Fixed name rendering in the top bar and added truncation with tooltips for long names.

* **Confirm Email Template**\

Corrected issues with the email confirmation template.

* **Test Connection Flow**\

Removed the separate test connection step and merged it into the Save Changes button for a streamlined UX.

* **Connector Deletion**\

Fixed connector deletion to pass `connectorID` as the parameter.

* **Query Handling with Multiple FROMs**\

Applied different `gsub` handling when queries contain multiple `FROM` clauses.

* **Catalog Refresh Indicator**\

Added visual indicator for catalog refresh and fixed duplicate API calls.

* **EULA Enable Fix**\

Resolved issues with enabling the EULA and added a skip option to the invite sign-up flow.

* **Add Models Button**\

Fixed redirect issues with the Add Models button on the Syncs page.

* **SSO Documentation**\

Updated notes to clarify support for Okta and Azure in SSO configuration.

* **Assistant UI Bugs**\

Fixed various bugs in the Assistant UI including duplicate field entity components.

* **SFTP Connection Query**\

Fixed inner query replacement for SFTP connections.

* **Data App Message Feedbacks**\

Ensured selected data apps only display their own message feedbacks.

* **Breadcrumb Navigation**\

Fixed navigation issues in breadcrumb components.

* **Chatbot Keys**\

Corrected key usage for chatbot components.

* **AI/ML Source State Persistence**\

Fixed state persistence when creating AI/ML sources and prevented resubmission when navigating back.

* **Company Name Display**\

Fixed display to show company name instead of workspace name in invitations and org settings.

* **EULA Popup Flash**\

Fixed EULA popup briefly appearing when updating documents.

## 🚜 Refactor

* **Destination Name in Syncs**\

Changed destination name display in Syncs from Connector Name to Name for clarity.

* **Image to SVG Conversion**\

Converted images to SVGs for better scalability and performance.

* **Custom Select Widget for RJSF**\

Added custom widget for select components in React JSON Schema Forms.

* **Reports UI/UX Audit**\

Completed Reports UI/UX audit fixes for improved user experience.

* **Data Apps Audit**\

Addressed medium priority items from the Data Apps audit.

* **Error Logs Modal**\

Refactored error logs modal for better usability.

* **Catalog Fetching Hook**\

Created dedicated hook for fetching catalog data.

* **Reports Overview Page**\

Added new Overview page with RBAC for Reports route and dedicated data apps reports route.

* **User Invite Company Name**\

Updated user invites to send company name instead of workspace name.

* **Data App Reports Tags**\

Changed the tag and empty-state copy for data app reports.

* **Feature Flag Arrays**\

Changed feature flags to use arrays so that a feature can be enabled across multiple workspaces.
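These notes do not show how flags are stored. As a rough illustration, assuming a flag holds a list of workspace IDs (the `FeatureFlag` class and `enabled_for` method below are hypothetical, not Squared AI's API), an array-valued flag reduces the enablement check to a membership test:

```python
from dataclasses import dataclass, field


@dataclass
class FeatureFlag:
    # Hypothetical flag: the feature is on for every workspace ID in the list.
    name: str
    enabled_workspace_ids: list[int] = field(default_factory=list)

    def enabled_for(self, workspace_id: int) -> bool:
        """Return True if the feature is switched on for this workspace."""
        return workspace_id in self.enabled_workspace_ids


assistant = FeatureFlag("assistant", enabled_workspace_ids=[3, 7])
print(assistant.enabled_for(7))  # True
print(assistant.enabled_for(5))  # False
```

With this shape, enabling a feature for one more workspace means appending its ID to the list rather than introducing a new flag or constant.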

## ⚙️ Miscellaneous Tasks

* **EULA Database Setup**\

Added EULA to database, factory, and model with proper permissions and role groups.

* **Okta SSO Support**\

Added Okta support to Single Sign-On configuration.

* **Assistant Resources**\

Added assistant rendering type, resource permissions, and role group configuration.

* **Server Gem Updates**\

Updated the server gem through versions 0.22.0, 0.22.1, 0.22.3, and 0.22.5, and the integrations gem to 0.22.6.

* **Visual Component Model Update**\

Updated the Visual Component model with improvements.

* **Chat Report Implementation**\

Added Chat Report implementation to Data App functionality.

* **Template Mapping Tests**\

Added comprehensive tests for template mapping options and static template options.

* **EULA Application Check**\

Added EULA check to Application controller for compliance enforcement.

* **Dependency Updates**\

Bumped dependency versions for security and compatibility improvements.

* **Legacy File Cleanup**\

Removed old and unused files from the codebase.

---

# Source: https://docs.squared.ai/release-notes/2024/August-2024.md

# August 2024 Releases

> Release updates for the month of August

## 🚀 **New Features**

### 🔄 **Enable/Disable Sync**

We’ve introduced the ability to enable or disable a sync. When a sync is disabled, it won’t execute according to its schedule, allowing you to effectively pause it without the need to delete it. This feature provides greater control and flexibility in managing your sync operations.
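The scheduler's internals are not described in these notes. A minimal sketch, assuming each sync carries an `enabled` flag, a fixed interval, and a last-run timestamp (the `Sync` model and `due_syncs` function are hypothetical names), shows how disabling pauses a sync without deleting it: the scheduler simply skips disabled syncs when selecting due runs.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Sync:
    # Hypothetical model: field names are illustrative, not Multiwoven's schema.
    name: str
    enabled: bool
    interval: timedelta
    last_run: datetime


def due_syncs(syncs: list[Sync], now: datetime) -> list[Sync]:
    """Return syncs whose schedule is due, skipping disabled (paused) ones."""
    return [s for s in syncs if s.enabled and now - s.last_run >= s.interval]


now = datetime(2024, 8, 1, 12, 0)
syncs = [
    Sync("orders_to_excel", True, timedelta(hours=1), now - timedelta(hours=2)),
    Sync("leads_to_oracle", False, timedelta(hours=1), now - timedelta(hours=2)),
]
print([s.name for s in due_syncs(syncs, now)])  # ['orders_to_excel']
```

Because the disabled sync is still stored, setting `enabled` back to `True` lets it run again on the next scheduler pass.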

### 🧠 **Source: Databricks AI Model Connector**

Multiwoven now integrates with [Databricks AI models](https://docs.squared.ai/guides/data-integration/sources/databricks-model) as a source connector. This lets you activate AI models directly through Multiwoven and bring them into your data processing and analytics workflows.

### 📊 **Destination: Microsoft Excel**

You can now use [Microsoft Excel](https://docs.squared.ai/guides/data-integration/destinations/productivity-tools/microsoft-excel) as a destination connector. Deliver your modeled data directly to Excel sheets for in-depth analysis or reporting. This addition simplifies workflows for those who rely on Excel for their data presentation and analysis needs.

### ✅ **Triggering Test Sync**

Before running a full sync, users can now initiate a test sync to verify that everything is functioning as expected. This feature ensures that potential issues are caught early, saving time and resources.

### 🏷️ **Sync Run Type**

Sync types are now clearly labeled as either "General" or "Test" in the Syncs Tab. This enhancement provides clearer context for each sync operation, making it easier to distinguish between different sync runs.

### 🛢️ **Oracle DB as a Destination Connector**

[Oracle DB](https://docs.squared.ai/guides/data-integration/destinations/database/oracle) is now available as a destination connector. Users can navigate to **Add Destination**, select **Oracle**, and input the necessary database details to route data directly to Oracle databases.

### 🗄️ **Oracle DB as a Source Connector**

[Oracle DB](https://docs.squared.ai/guides/data-integration/sources/oracle) has also been added as a source connector. Users can pull data from Oracle databases by navigating to **Add Source**, selecting **Oracle**, and entering the database details.

***

## 🔧 **Improvements**

### **Memory Bloat Issue in Sync**

Resolved an issue where memory bloat was affecting sync performance over time, ensuring more stable and efficient sync operations.

### **Discover and Table URL Fix**

Fixed issues with discovering and accessing table URLs, enhancing the reliability and accuracy of data retrieval processes.

### **Disable to Fields**

Added the option to disable fields where necessary, giving users more customization options to fit their specific needs.

### **Query Source Response Update**

Updated the query source response mechanism, improving data handling and accuracy in data query operations.

### **OCI8 Version Fix**

Resolved issues related to the OCI8 version, ensuring better compatibility and smoother database interactions.

### **User Read Permission Update**

Updated user read permissions to enhance security and provide more granular control over data access.

### **Connector Name Update**

Updated connector names across the platform to ensure better clarity and consistency, making it easier to manage and understand your integrations.

### **Account Verification Route Removal**

Streamlined the user signup process by removing the account verification route, reducing friction for new users.

### **Connector Creation Process**

Refined the connector creation process, making it more intuitive and user-friendly, thus reducing the learning curve for new users.

### **README Update**

The README file has been updated to reflect the latest changes and enhancements, providing more accurate and helpful guidance.

### **Request/Response Logs Added**

We’ve added request/response logs for multiple connectors, including Klaviyo, HTTP, Airtable, Slack, MariaDB, Google Sheets, Iterable, Zendesk, HubSpot, Stripe, and Salesforce CRM, improving debugging and traceability.

### **Logger Issue in Sync**

Addressed a logging issue within sync operations, ensuring that logs are accurate and provide valuable insights.

### **Main Layout Protected**

Wrapped the main layout with a protector, enhancing security and stability across the platform.

### **User Email Verification**

Implemented email verification during signup using Devise, increasing account security and ensuring that only verified users have access.

### **Databricks Datawarehouse Connector Name Update**

Renamed the Databricks connection to "Databricks Datawarehouse" for improved clarity and better alignment with user expectations.

### **Version Upgrade to 0.9.1**

The platform has been upgraded to version `0.9.1`, incorporating all the above features and improvements, ensuring a more robust and feature-rich experience.

### **Error Message Refactoring**

Refactored error messages to align with agreed-upon standards, resulting in clearer and more consistent communication across the platform.

---

# Source: https://docs.squared.ai/release-notes/2025/August-2025.md

# August 2025 Releases

> Release updates for the month of August

## 🚀 Features

* **Google Drive Source Connector**\

Added Google Drive as a new source connector for integrating cloud storage documents and files.

* **Headless Extension Support**\

Introduced headless browser extension support for enhanced automation capabilities.

* **Agent Templates**\

Added agent template modal and functionality for creating workflows from pre-built templates.

* **Assistant PDF Export**\

Added ability to export assistant messages as PDF documents.

* **Chat History Persistence**\

Implemented persistent chat history storage for maintaining conversation context across sessions.

* **Bar Chart Enhancements**\

Added color coding and X/Y axes labels to bar charts for improved data visualization.

* **Data App Workflow Integration**\

Integrated workflows with data apps, enabling workflow execution through chatbot and assistant interfaces.

* **Lead Capture for HubSpot and Slack**\

Added lead capture functionality for HubSpot and Slack integrations.

* **Chatbot Expand Toggle**\

Added toggle functionality to expand and collapse the chatbot interface.

* **Assistant List View**\

Added list view for displaying available assistants with breadcrumb navigation.

* **GitHub Bot Release Workflows**\

Updated release workflows with GitHub bot integration for automated releases.

## 🐛 Bug Fixes

* **Overview Card Heights**\

Fixed inconsistent height rendering for overview cards.

* **Connection Edges**\

Resolved issues with connection edges in workflow canvas.

* **File Name Assignment**\

Fixed the file name being incorrectly assigned the file ID in the Google Drive connector.

* **Workflow Name Assignment**\

Corrected workflow name assignment during updates.

* **Chatbot Error Responses**\

Fixed error response handling in chatbot interactions.

* **LLM Field Label**\

Corrected label for LLM configuration field.

* **Google Drive Vendor Name**\

Fixed missing vendor name in field list for Google Drive connector.

* **Exception Data Handling**\

Fixed missing exception from returned data.

* **Workflow Failure**\

Resolved workflow execution failure issues.

* **One2Many Type Parsing**\

Fixed parsing of one2many type as JSON array.

* **Signup Form Copy**\

Updated the copy on the signup form.

* **Assistant Component Properties**\

Fixed component properties rendering in assistant.

* **Base URL Configuration**\

Corrected base URL for published links and API calls.

* **Chatbot Dimensions**\

Fixed chatbot dimensions when in expanded state.

* **Model Configuration Key**\

Changed model to configurable key for flexibility.

* **Data Apps Pagination**\

Fixed pagination for data apps and assistants lists.

* **Chatbot Cross Icon**\

Fixed chatbot cross icon visibility in expanded state.

* **No Access Rendering**\

Fixed rendering of No Access state on page reload.

## 🚜 Refactor

* **Feedback Metric Calculation**\

Updated and reverted feedback metric calculation changes.

* **Workflow Create & Update Logic**\

Changed logic for workflow creation and update operations.

* **Table Styling**\

Updated styling and copy in table components.

* **Node Selection**\

Prevented multiple node selection in workflow builder.

* **Attribution Removal**\

Removed attribution from components.

* **JSON Form Font Size**\

Changed font size in JSON Form for better readability.

* **Data App Deletion**\

Added automatic data app deletion during workflow deletion.

* **Configurable Type Default**\

Set `model` as the default value for `configurable_type`.

* **Filter Updates**\

Changed filters for improved data filtering.

## ⚙️ Miscellaneous Tasks

* **Server Gem Updates**\

Updated server gem through versions 0.32.3 and 0.33.0.

* **Integrations Gem Updates**\

Upgraded integrations gem through versions 0.33.3 and 0.33.4.

* **Error Message Handling**\

Updated error message handling in detail field for APIs.

* **AI Workflow Description**\

Updated AI Workflow description text.

* **Workflow Log Database**\

Added Workflow Log storage in database.

* **Direct Database Template**\

Changed the Direct Database Template configuration.

* **Embedding Provider Service**\

Added support for embedding provider service.

* **Chat Messages Title**\

Added Title field to Chat Messages database table.

* **Sync Run Logger**\

Added logger for sync run status updates.

* **Data App Session Title**\

Moved title field to Data App Session database.

* **Workflow Logger**\

Added workflow execution logger for debugging.

* **Data App Session API**\

Added destroy API endpoint to data app session controller.

* **Template Null Fix**\

Added template fix for handling null values.

* **Data App Token**\

The data app token is now sent with workflow run requests.

* **Chat History Search**\

Added search bar for chat history.

---

# Source: https://docs.squared.ai/release-notes/2024/December-2024.md

# December 2024 Releases

> Release updates for the month of December

# 🚀 Features and Improvements

## **Features**

### **Audit Logs UI**

Streamline the monitoring of user activities with a new, intuitive interface for audit logs.

### **Custom Visual Components**

Create tailored visual elements for unique data representation and insights.

### **Dynamic Query Data Models**

Enhance query flexibility with support for dynamic data models.

### **Stream Support in HTTP Model**

Enable efficient data streaming directly in HTTP models.

### **Pagination for Connectors, Models, and Sync Pages**

Improve navigation and usability with added pagination support.

### **Multiple Choice Feedback**

Collect more detailed user feedback with multiple-choice options.

### **Rendering Type Filter for Data Apps**

Filter data apps effectively with the new rendering type filter.

### **Improved User Login**

Fixes for invited user logins and prevention of duplicate invitations for already verified users.

### **Context-Aware Titles**

Titles dynamically change based on the current route for better navigation.

## **Improvements**

### **Bug Fixes**

* Fixed audit log filter badge calculation.

* Corrected timestamp formatting in utilities.

* Limited file size for custom visual components to 2MB.

* Resolved BigQuery test sync failures.

* Improved UI for audit log views.

* Addressed sidebar design inconsistencies with Figma.

* Ensured correct settings tab highlights.

* Adjusted visual component height for tables and custom visual types.

* Fixed issues with HTTP request method retrieval.

### **Enhancements**

* Added support for exporting audit logs without filters.

* Updated query type handling during model fetching.

* Improved exception handling in resource builder.

* Introduced catalog and schedule sync resources.

* Refined action names across multiple controllers for consistency.

* Reordered deployment steps, removing unnecessary commands.

### **Resource Links and Controllers**

* Added resource links to:

  * Audit Logs

  * Catalogs

  * Connectors

  * Models

  * Syncs

  * Schedule Syncs

  * Enterprise components (Users, Profiles, Feedbacks, Data Apps)

* Updated audit logs for comprehensive coverage across controllers.

### **UI and Usability**

* Improved design consistency in audit logs and data apps.

* Updated export features for audit logs.

***

---

# Source: https://docs.squared.ai/release-notes/2025/December-2025.md

# December 2025 Releases

> Release updates for the month of December

## 🚀 Features

* **Workflow RBAC**\

Added Role-Based Access Control for workflows with governance UI for managing permissions.

* **AIS Hosted Vector Store**\

Introduced AIS Hosted Vector Store setup for managed vector database storage.

* **Guardrails Workflow Component**\

Added guardrails component support for implementing safety constraints in workflows.

* **Connector Form State Persistence**\

Added form state persistence for connectors to maintain user input across sessions.

* **SQL Schema for Vector Store Tables**\

Added SQL schema option during vector store table creation for customized data structures.

* **Workflow Auditability**\

Implemented workflow auditability features for tracking changes and compliance.

* **Custom Compliance Support**\

Added custom compliance support with custom list input capabilities.

* **Chatbot Chat Sessions**\

Implemented chat session support for the chatbot to enable persistent conversations.

* **User Uploadable Chat Avatar**\

Added ability for users to upload custom chat avatars.

* **Scatterplot Visualization**\

Introduced scatterplot visualization type for data analysis.

* **Stepped Form Persistent State**\

Implemented persistent state for stepped forms to preserve progress.

* **Coverage Report Prefix**\

Added prefix to coverage report in UI-CI workflow for better organization.

## 🐛 Bug Fixes

* **Workflow Run ID in Error**\

Fixed workflow run ID display in error messages.

* **Unit Test Coverage CI**\

Reverted unit test coverage display in CI.

* **See More Endpoint Payload**\

Corrected payload for see more endpoint.

* **File Download Error Handling**\

Fixed error string handling in `handle_file_download_error`.

* **Chat Assistant Date Display**\

Fixed date display alongside time in chat assistant with proper formatting.

* **Cursor Assertion Error**\

Fixed missing cursor assertion error.

* **Coverage File Collection**\

Fixed coverage collection from all files.

* **Delete Data App Session Policy**\

Updated delete data app session policy.

* **Workflow Name During Setup**\

Fixed the display of the workflow name during the setup process.

* **Odoo Abstract Field**\

Fixed handling of the abstract field, which is not available on models in some Odoo versions.

## 🚜 Refactor

* **Model Provider Selection**\

Changed to allow model selection only after provider is selected.

* **Fallback Value Formatting**\

Changed fallback and formatted value handling.

## ⚙️ Miscellaneous Tasks

* **Workflow Error Handling**\

Added error raising when awaiting the workflow result, for better error propagation.

* **Server Gem Update**\

Updated Server Gem to version 0.34.12.

* **Vector DB Connector Filters**\

Added filter changes for vector DB connectors and storage components.

* **Parsing Flag Removal**\

Removed the "need parsing" flag from workflow processing.

* **Auth Failure Logging**\

Added logs for authentication failures.

* **Workflows Page Title**\

Added page title for Workflows section.

* **Chatbot Interface Tests**\

Improved tests for chatbot interface and helpers.

* **Prompt Input Sample**\

Added sample input to prompt input component.

* **Unit Test Coverage**\

Added comprehensive unit tests for workflow builder.

* **Assistant Workspace IDs**\

Removed the `ASSISTANT_ENABLED_WORKSPACE_IDS` constant.

* **CI Naming Updates**\

Updated CI name and job titles for clarity.

* **Integrations Gem Update**\

Upgraded integrations gem to version 0.34.13.

* **Knowledge Base Database**\

Added Knowledge Base and Knowledge Base File to database.

* **Dynamic SQL Payload**\

Updated DynamicSql in Payload Generator.

* **Tool Model**\

Added Tool model for workflow tooling support.

* **Knowledge Base Create API**\

Added Knowledge Base Create API endpoint.

---

# Source: https://docs.squared.ai/release-notes/December_2024.md

# December 2024 releases

> Release updates for the month of December

# 🚀 Features and Improvements

## **Features**

### **Audit Logs UI**

Streamline the monitoring of user activities with a new, intuitive interface for audit logs.

### **Custom Visual Components**

Create tailored visual elements for unique data representation and insights.

### **Dynamic Query Data Models**

Enhance query flexibility with support for dynamic data models.

### **Stream Support in HTTP Model**

Enable efficient data streaming directly in HTTP models.

### **Pagination for Connectors, Models, and Sync Pages**

Improve navigation and usability with added pagination support.

### **Multiple Choice Feedback**

Collect more detailed user feedback with multiple-choice options.

### **Rendering Type Filter for Data Apps**

Filter data apps effectively with the new rendering type filter.

### **Improved User Login**

Fixes for invited user logins and prevention of duplicate invitations for already verified users.

### **Context-Aware Titles**

Titles dynamically change based on the current route for better navigation.

## **Improvements**

### **Bug Fixes**

* Fixed audit log filter badge calculation.

* Corrected timestamp formatting in utilities.

* Limited file size for custom visual components to 2MB.

* Resolved BigQuery test sync failures.

* Improved UI for audit log views.

* Addressed sidebar design inconsistencies with Figma.

* Ensured correct settings tab highlights.

* Adjusted visual component height for tables and custom visual types.

* Fixed issues with HTTP request method retrieval.

### **Enhancements**

* Added support for exporting audit logs without filters.

* Updated query type handling during model fetching.

* Improved exception handling in resource builder.

* Introduced catalog and schedule sync resources.

* Refined action names across multiple controllers for consistency.

* Reordered deployment steps, removing unnecessary commands.

### **Resource Links and Controllers**

* Added resource links to:

* Audit Logs

* Catalogs

* Connectors

* Models

* Syncs

* Schedule Syncs

* Enterprise components (Users, Profiles, Feedbacks, Data Apps)

* Updated audit logs for comprehensive coverage across controllers.

### **UI and Usability**

* Improved design consistency in audit logs and data apps.

* Updated export features for audit logs.


---

# Source: https://docs.squared.ai/release-notes/2025/Feb-2025.md


# February 2025 Releases

> Release updates for the month of February

## 🚀 Features

* **PG Vector as Source**

Updated the PostgreSQL connector to support pgvector.

## 🐛 Bug Fixes

* **Update Vulnerable Integration Gem Versions**

Upgraded the server gems to newer versions, fixing vulnerabilities found in earlier versions.

## ⚙️ Miscellaneous Tasks

* **Sync Alert Bug Fixes**

Fixed issues in the sync alert mailers.

---

# Source: https://docs.squared.ai/release-notes/2025/January-2025.md


# January 2025 Releases

> Release updates for the month of January

## 🚀 Features

* **Added Empty State for Feedback Overview Table**\

Introduces a default view when no feedback data is available, ensuring clearer guidance and intuitive messaging for end users.

* **Custom Visual Component for Writing Data to Destination Connectors**\

Simplifies the process of sending or mapping data to various destination connectors within the platform’s interface.

* **Azure Blob Storage Integration**\

Adds support for storing and retrieving data from Azure Blob, expanding available cloud storage options.

* **Update Workflows to Deploy Solid Worker**\

Automates deployment of a dedicated worker process, improving back-end task management and system scalability.

* **Chatbot Visual Type**\

Adds a dedicated visualization type designed for chatbot creation and management, enabling more intuitive configuration of conversational experiences.

* **Trigger Sync Alerts / Sync Alerts**\

Implements a notification system to inform teams about the success or failure of data synchronization events in real time.

* **Runner Script Enhancements for Chatbot**\

Improves the runner script’s capability to handle chatbot logic, ensuring smoother automated operations.

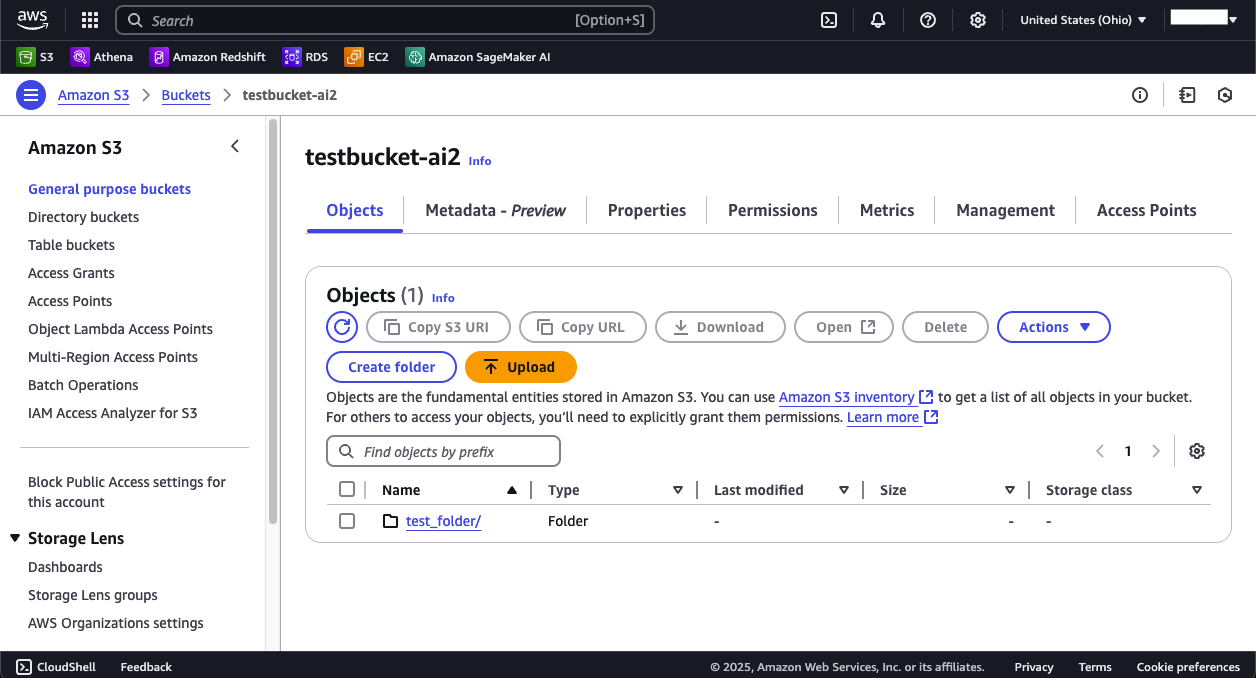

* **Add S3 Destination Connector**\

Enables direct export of transformed or collected data to Amazon S3, broadening deployment possibilities for cloud-based workflows.

* **Add SFTP Source Connector**\

Permits data ingestion from SFTP servers, streamlining workflows where secure file transfers are a primary data source.

## 🐛 Bug Fixes

* **Handle Chatbot Response When Streaming Is Off**

Resolves an issue that caused chatbot responses to fail when streaming mode was disabled, improving overall reliability.

* **Sync Alert Issues**

Fixes edge cases where alerts triggered incorrectly or failed to trigger for certain data sync events.

* **UI Enhancements and Fixes**

Addresses multiple interface inconsistencies, refining navigation and data presentation.

* **Validation for “Continue” CTA During Chatbot Creation**

Ensures that all mandatory fields are completed before users can proceed through chatbot setup.

* **Refetch Data Model After Update**

Fixes a case where updated data models were not automatically reloaded, which left stale information in some views.

* **OpenAI Connector Failure Handling**

Improves error handling and retry mechanisms for OpenAI-related requests, reducing the impact of transient network issues.

* **Stream Fetch Fix for Salesforce**

Fixes occasional timeouts and failed data streams when retrieving records from Salesforce.

* **Radio Button Inconsistencies**

Unifies radio button behavior across the platform’s interface, preventing unexpected selection or styling errors.

* **Keep Reports Link Highlight**

Ensures the “Reports” link stays highlighted in the navigation menu, maintaining consistent visual cues.
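The OpenAI connector entry above mentions retry mechanisms for transient failures but does not describe the policy. A common approach is exponential backoff with jitter. The Python sketch below shows that technique, assuming transient errors can be told apart from permanent ones. `call_with_retries` and `TransientError` are hypothetical names, and the attempt count and delays are illustrative.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Stand-in for a retryable failure (timeout, HTTP 429, HTTP 5xx)."""


def call_with_retries(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call `fn`, retrying TransientError with exponential backoff plus jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error to the caller
            # With the default base_delay this waits 0.5s, 1s, 2s, ...
            # plus up to 10% random jitter to avoid synchronized retries.
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable")


if __name__ == "__main__":
    calls = {"n": 0}

    def flaky() -> str:
        calls["n"] += 1
        if calls["n"] < 3:
            raise TransientError("simulated timeout")
        return "ok"

    # Skip real sleeping in the demo.
    print(call_with_retries(flaky, sleep=lambda _: None), calls["n"])  # ok 3
```

Injecting `sleep` keeps the function testable. Permanent errors (any exception other than `TransientError`) propagate immediately without retries.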

## ⚙️ Miscellaneous Tasks

* **Add Default Request and Response in Connection Configuration for OpenAI**

Provides pre-populated request/response templates for OpenAI connectors, simplifying initial setup.

* **Add Alert Policy to Roles**

Integrates alert policies into user role management, allowing fine-grained control over who can create or modify data alerts.

---

# Source: https://docs.squared.ai/release-notes/2026/January-2026.md


# January 2026 Releases

> Release updates for the month of January 2026

## 🚀 Features

* **Custom Roles Edit Support**

Added an *Edit Details* button to Custom Roles so that user-defined roles can be modified.

* **Knowledge Base**

Introduced a Knowledge Base for use with Workflows.

* **Hugging Face Embedding Models**

Added support for Hugging Face embedding models.

* **Agent Tools**

Introduced Agent Tools.

* **Knowledge Base Component**

Added a Knowledge Base component to Workflows.

## 🐛 Bug Fixes

* **Collapsed Chat Overflow**

Fixed an overflow issue in the collapsed chat.

* **Template Temperatures**

Fixed temperature handling in templates.

* **Text Feedback Submission**

Allowed text feedback to be submitted even when additional remarks are required.

* **Prompt Template Variable Handling**

Fixed handling of prompt templates that contain no variables.

* **Workflow Edge Updates**

Fixed edge updates when deleting nodes in workflows.

* **React Optimization**

Removed unnecessary `useCallback` and `useMemo` usage.

* **Vector Store UI**

Fixed UI issues when creating vector stores in the Knowledge Base.

* **Token Handling**

Ensured that each data app uses its own token.
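The prompt template fix above concerns templates with no variables. The platform's actual placeholder syntax is not documented here, so this sketch assumes variables are written as `{{name}}`. This minimal Python sketch shows the edge case: a template without placeholders is returned unchanged instead of demanding inputs. `extract_variables` and `render` are hypothetical helpers.

```python
import re
from typing import Dict, List

# Assumption: placeholders look like {{name}} or {{ name }}.
VARIABLE_PATTERN = re.compile(r"\{\{\s*([A-Za-z_][A-Za-z0-9_]*)\s*\}\}")


def extract_variables(template: str) -> List[str]:
    """Return unique variable names in order of first appearance ([] if none)."""
    names: List[str] = []
    for name in VARIABLE_PATTERN.findall(template):
        if name not in names:
            names.append(name)
    return names


def render(template: str, values: Dict[str, str]) -> str:
    """Fill placeholders from `values`; templates without variables pass through."""
    names = extract_variables(template)
    if not names:
        return template  # no placeholders: nothing to require or substitute
    missing = [name for name in names if name not in values]
    if missing:
        raise KeyError(f"missing values for: {', '.join(missing)}")
    return VARIABLE_PATTERN.sub(lambda m: values[m.group(1)], template)


if __name__ == "__main__":
    print(render("Summarize the attached report.", {}))  # Summarize the attached report.
    print(render("Hello {{ name }}!", {"name": "Ada"}))  # Hello Ada!
```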

## 🚜 Refactor

* **Custom Component Layout**

Allowed Custom Components to use `fit-content` sizing for better-fitting layouts.

## ⚙️ Miscellaneous Tasks

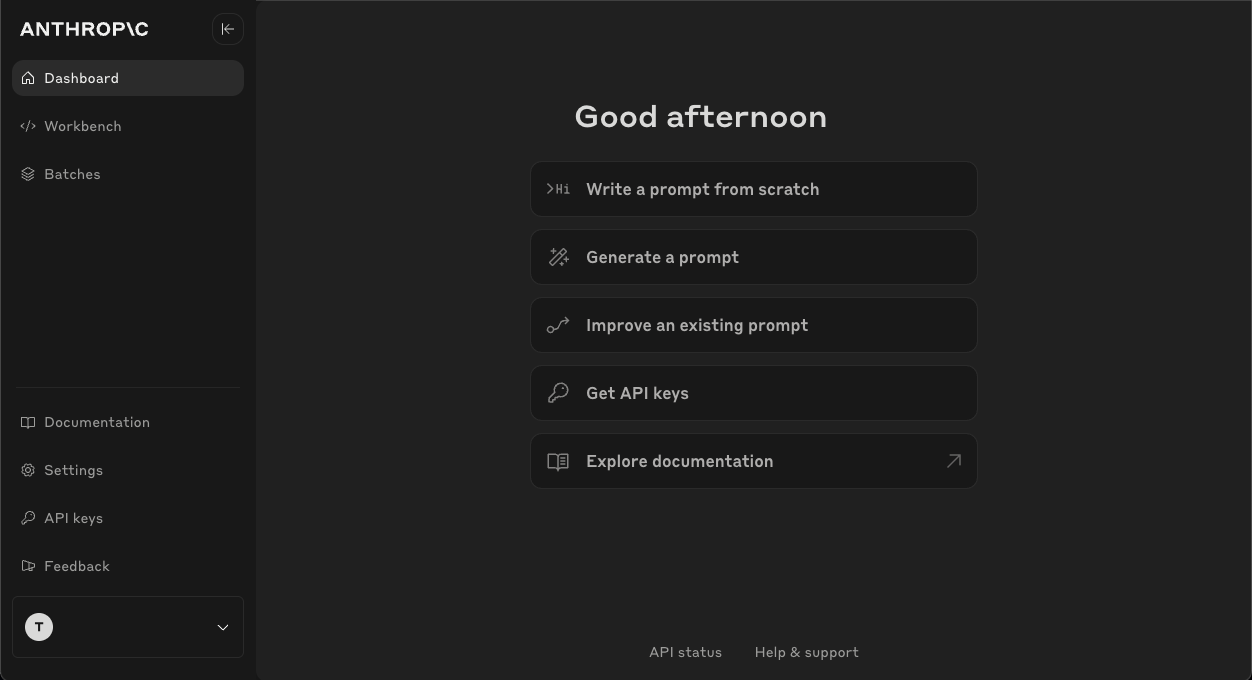

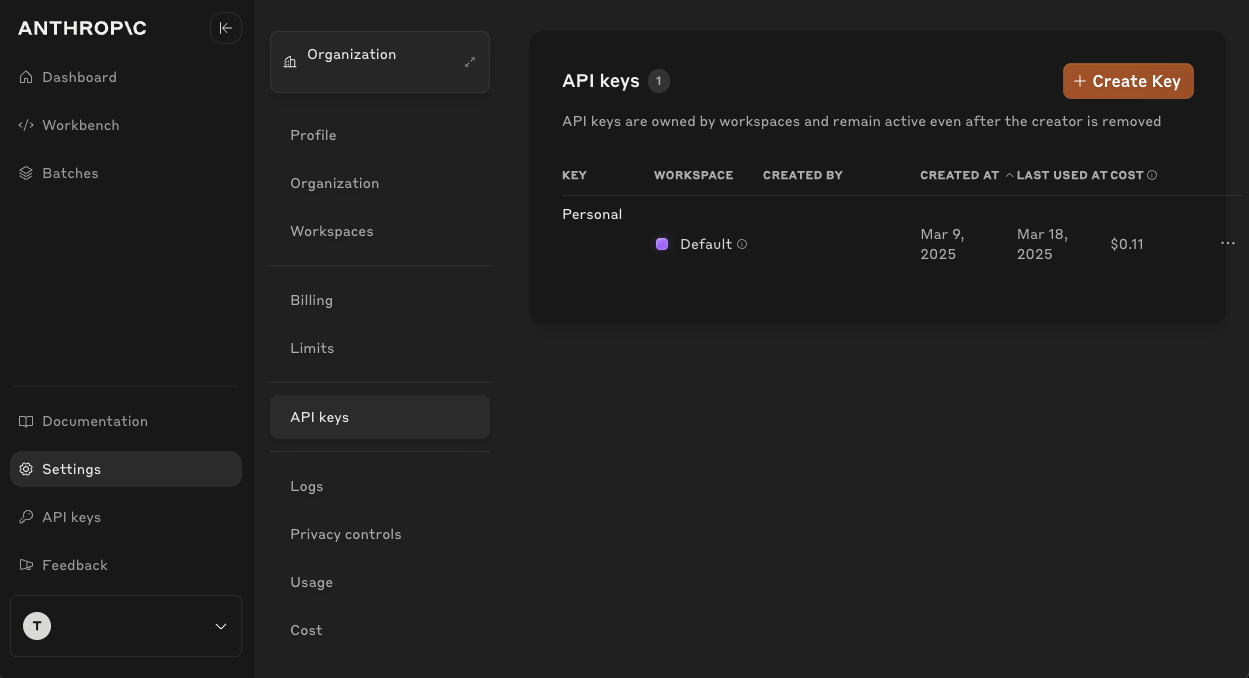

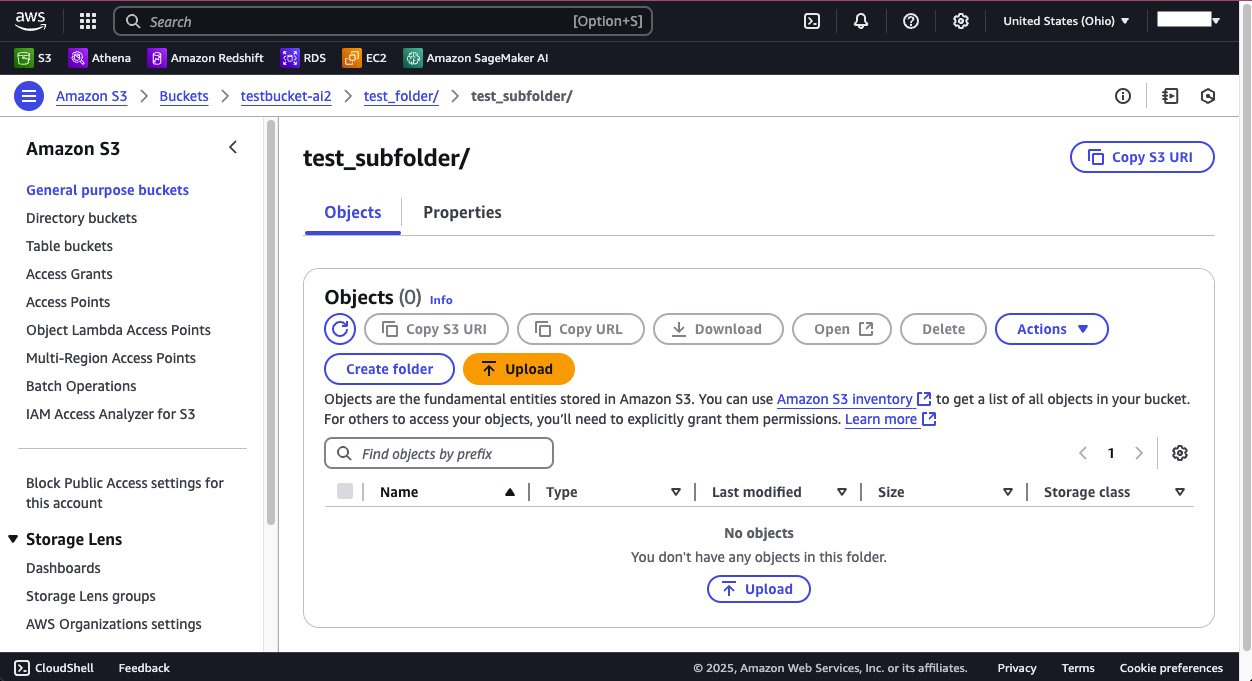

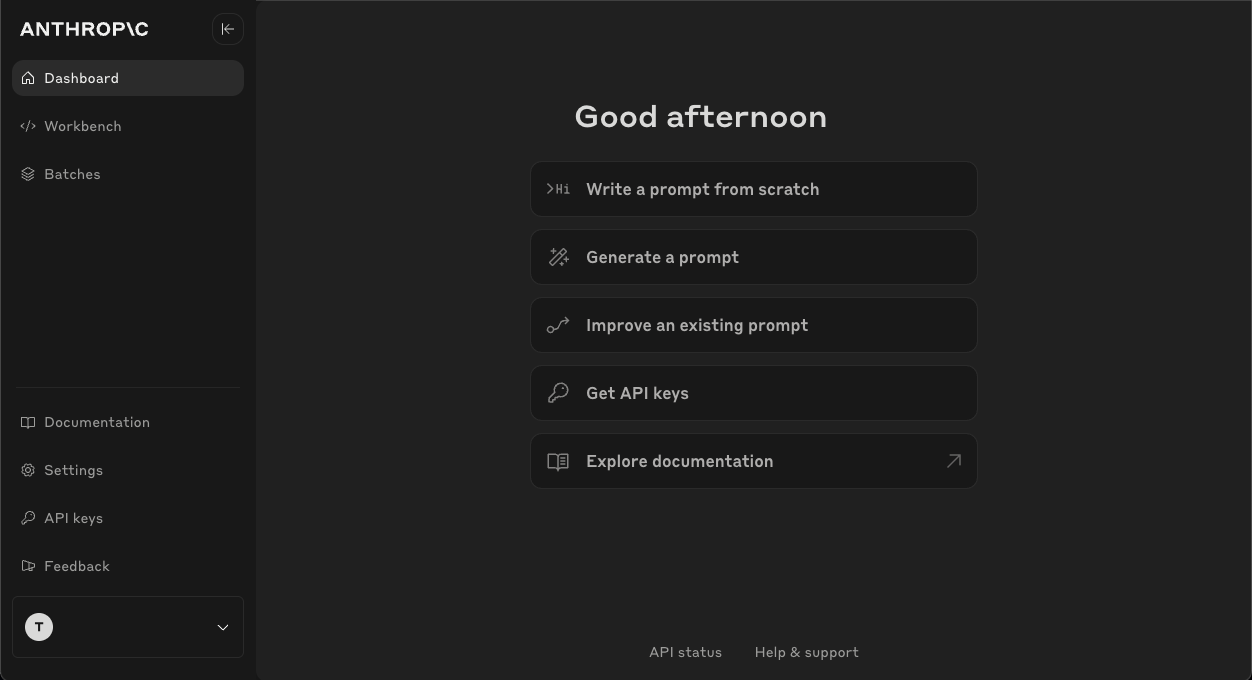

* **Anthropic Ruby Gem**

Added support for the Anthropic Ruby gem.

* **Testing & Linting**

Improved tests, linting, and overall code quality.

---


# Source: https://docs.squared.ai/release-notes/2024/July-2024.md


# July 2024 releases

> Release updates for the month of July

## ✨ **New Features**

### 🔍 **Search Filter in Table Selector**

The table selector method now includes a powerful search filter. This feature enhances your workflow by allowing you to swiftly locate and select the exact tables you need, even in large datasets. It’s all about saving time and boosting productivity.

### 🏠 **Databricks Lakehouse Destination**

We're excited to introduce Databricks Lakehouse as a new destination connector. Seamlessly integrate your data pipelines with Databricks Lakehouse, harnessing its advanced analytics capabilities for data processing and AI-driven insights. This feature empowers your data strategies with greater flexibility and power.

### 📅 **Manual Sync Schedule Controller**

Take control of your data syncs with the new Manual Sync Schedule controller. This feature gives you the freedom to define when and how often syncs occur, ensuring they align perfectly with your business needs while optimizing resource usage.

### 🛢️ **MariaDB Destination Connector**

MariaDB is now available as a destination connector! You can now channel your processed data directly into MariaDB databases, enabling robust data storage and processing workflows. This integration is perfect for users operating in MariaDB environments.

### 🎛️ **Table Selector and Layout Enhancements**

We’ve made significant improvements to the table selector and layout. The interface is now more intuitive, making it easier than ever to navigate and manage your tables, especially in complex data scenarios.

### 🔄 **Catalog Refresh**

Introducing on-demand catalog refresh! Keep your data sources up-to-date with a simple refresh, ensuring you always have the latest data structure available. Say goodbye to outdated data and hello to consistency and accuracy.

### 🛡️ **S3 Connector ARN Support for Authentication**

Enhance your security with ARN (Amazon Resource Name) support for Amazon S3 connectors. This update provides a more secure and scalable approach to managing access to your S3 resources, particularly beneficial for large-scale environments.

### 📊 **Integration Changes for Sync Record Log**

We’ve optimized the integration logic for sync record logs. These changes ensure more reliable logging, making it easier to track sync operations and diagnose issues effectively.

### 🗄️ **Server Changes for Log Storage in Sync Record Table**

Logs are now stored directly in the sync record table, centralizing your data and improving log accessibility. This update ensures that all relevant sync information is easily retrievable for analysis.

### ✅ **Select Row Support in Data Table**

Interact with your data tables like never before! We've added row selection support, allowing for targeted actions such as editing or deleting entries directly from the table interface.

### 🛢️ **MariaDB Source Connector**

The MariaDB source connector is here! Pull data directly from MariaDB databases into Multiwoven for seamless integration into your data workflows.

### 🛠️ **Sync Records Error Log**

A detailed error log feature has been added to sync records, providing granular visibility into issues that occur during sync operations. Troubleshooting just got a whole lot easier!

### 🛠️ **Model Query Type - Table Selector**

The table selector is now available as a model query type, offering enhanced flexibility in defining queries and working with your data models.

### 🔄 **Force Catalog Refresh**

Set the refresh flag to true, and the catalog will be forcefully refreshed. This ensures you're always working with the latest data, reducing the chances of outdated information impacting your operations.
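The refresh flag can be read as a cache bypass: the catalog is discovered once and reused unless the caller passes `refresh=True`. Below is a minimal Python sketch under that assumption. `CatalogCache` and the simplified catalog shape are hypothetical, not Multiwoven's API.

```python
from typing import Callable, Dict, List, Optional

# Simplified catalog shape (assumption): stream/table name -> column names.
Catalog = Dict[str, List[str]]


class CatalogCache:
    """Serve a cached catalog; refresh=True forces a fresh discovery."""

    def __init__(self, discover: Callable[[], Catalog]) -> None:
        self._discover = discover
        self._catalog: Optional[Catalog] = None

    def get(self, refresh: bool = False) -> Catalog:
        # Rediscover on first use or whenever the caller forces a refresh.
        if refresh or self._catalog is None:
            self._catalog = self._discover()
        return self._catalog


if __name__ == "__main__":
    calls = []

    def discover() -> Catalog:
        calls.append(1)
        return {"users": ["id", "email"]}

    cache = CatalogCache(discover)
    cache.get()
    cache.get()              # served from cache
    cache.get(refresh=True)  # forced rediscovery
    print(len(calls))        # 2
```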

## 🔧 **Improvements**

* **Manual Sync Delete API Call**: Enhanced the API call for deleting manual syncs for smoother operations.

* **Server Error Handling**: Improved error handling to better display server errors when data fetches return empty results.

* **Heartbeat Timeout in Extractor**: Introduced new actions to handle heartbeat timeouts in extractors for improved reliability.

* **Sync Run Type Column**: Added a `sync_run_type` column in sync logs for better tracking and operational clarity.

* **Refactor Discover Stream**: Refined the discover stream process, leading to better efficiency and reliability.

* **DuckDB HTTPFS Extension**: Introduced server installation steps for the DuckDB `httpfs` extension.

* **Temporal Initialization**: Temporal processes are now initialized in all registered namespaces, improving system stability.

* **Password Reset Email**: Updated the reset password email template and validation for a smoother user experience.

* **Organization Model Changes**: Applied structural changes to the organization model, enhancing functionality.

* **Log Response Validation**: Added validation to log response bodies, improving error detection.

* **Missing DuckDB Dependencies**: Resolved missing dependencies for DuckDB, ensuring smoother operations.

* **STS Client Initialization**: Removed unnecessary credential parameters from STS client initialization, boosting security.

* **Main Layout Error Handling**: Added error screens for the main layout to improve user experience when data is missing or errors occur.

* **Server Gem Updates**: Upgraded server gems to the latest versions, enhancing performance and security.

* **AppSignal Logging**: Enhanced AppSignal logging by including app request and response logs for better monitoring.

* **Sync Records Table**: Added a dedicated table for sync records to improve data management and retrieval.

* **AWS S3 Connector**: Improved handling of S3 credentials and added support for STS credentials in AWS S3 connectors.

* **Sync Interval Dropdown Fix**: Fixed an issue where the sync interval dropdown text was hidden on smaller screens.

* **Form Data Processing**: Added a pre-check process for form data before checking connections, improving validation and accuracy.

* **S3 Connector ARN Support**: Updated the gem to support ARN-based authentication for S3 connectors, enhancing security.

* **Role Descriptions**: Updated role descriptions for clearer understanding and easier management.

* **JWT Secret Configuration**: JWT secret is now configurable from environment variables, boosting security practices.

* **MariaDB README Update**: Updated the README file to include the latest information on MariaDB connectors.

* **Logout Authorization**: Streamlined the logout process by skipping unnecessary authorization checks.

* **Sync Record JSON Error**: Added a JSON error field in sync records to enhance error tracking and debugging.

* **MariaDB Dockerfile Update**: Added `mariadb-dev` to the Dockerfile to better support MariaDB integrations.

* **Signup Error Response**: Improved the clarity and detail of signup error responses.

* **Role Policies Update**: Refined role policies for enhanced access control and security.

* **Pundit Policy Enhancements**: Applied Pundit policies at the role permission level, ensuring robust authorization management.
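Reading the JWT secret from the environment usually comes with a safeguard: fail fast at startup when the secret is missing, rather than sign tokens with an empty key. Below is a minimal Python sketch of that pattern. The variable name `JWT_SECRET` and the function `load_jwt_secret` are hypothetical, since the release note does not name them. Accepting a mapping instead of always reading `os.environ` keeps the function easy to test.

```python
import os
from typing import Mapping, Optional


def load_jwt_secret(
    env: Optional[Mapping[str, str]] = None,
    var_name: str = "JWT_SECRET",  # hypothetical variable name
) -> str:
    """Return the JWT signing secret from the environment; fail fast if missing."""
    source = os.environ if env is None else env
    secret = source.get(var_name, "").strip()
    if not secret:
        # Refuse to start with an empty secret rather than sign tokens insecurely.
        raise RuntimeError(f"{var_name} must be set to a non-empty value")
    return secret


if __name__ == "__main__":
    print(load_jwt_secret({"JWT_SECRET": "change-me"}))  # change-me
```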

---

# Source: https://docs.squared.ai/release-notes/2025/July-2025.md


# July 2025 Releases

> Release updates for the month of July

## 🚀 Features

* **Workflow Builder**

Introduced a visual workflow builder with a React Flow canvas, drag-and-drop components, sidebar navigation, and node configuration. It also includes auto-save, workflow publishing, and interface testing.

* **Odoo Source Connector**

Added Odoo as a new source connector for ERP data integration.

* **Odoo Destination Connector**

Introduced Odoo as a destination connector for writing data to Odoo systems.

* **Workflow Run Feature API**

Added API endpoints for executing and managing workflow runs.

* **Retrieval Flow Components**

Implemented a retrieval flow with configurable components for building AI-powered data retrieval workflows.

* **Agent Routes Setup**

Set up routing infrastructure for agent-based workflow interactions.

* **Workflow Interface**

Added a chatbot/assistant interface for testing workflows, with pagination support and a list view.

* **Workflow Component Descriptions**

Added descriptions to workflow components to make them easier to understand.

* **Code Quality Migration**

Migrated Code Climate and code coverage tooling to qlty.sh and updated the badges.

* **GitHub Workflow Timeout**

Added a timeout to the `server-ci` GitHub Actions workflow.

## 🐛 Bug Fixes

* **Global Namespace Violation**

Fixed a global namespace violation in the runner script.

* **JSON Schema Validation**

Removed an invalid field from the JSON schema and fixed other schema issues.

* **Batched Query Processing**

Added a `batched_query` call and improved SQL parsing.

* **Workflow Builder UI Fixes**

Fixed numerous workflow builder issues, including drop position calculation, component selection, node duplication, edge deletion, and fit view.

* **LLM Model Temperature**

Fixed the missing temperature field in LLM model configuration.

* **Component Rendering**

Fixed rendering of component icons from URLs and dynamic loading of components from the API.

* **Interface State Persistence**

Resolved issues with interface state not persisting correctly.

* **Auto-Save Status**

Fixed auto-save so that it correctly updates the status to draft and triggers after a 1-second delay.

* **Chatbot Messages**

Fixed assistant chat JSON messages, loading states, and message responder issues.

* **Prompt Input Modal**

Resolved issues with the prompt input modal and variable values.

* **Chart Rendering**

Fixed chart rendering issues in workflow interfaces.

* **RBAC Policy**

Corrected the RBAC policy implementation for workflows.

* **Sidebar Styling**

Fixed the sidebar's collapsed state, styling, and component sizes.

* **Async Dropdown**

Fixed async dropdown scrolling and sized the dropdown to the window dimensions.

* **Workflow Template JSON**

Corrected the workflow template JSON structure.

* **Workflow Execution Errors**

Added user feedback on workflow execution errors.
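The auto-save fix above (save after a 1-second delay, then set the status to draft) matches a debounce, assuming each new edit restarts the delay. The Python sketch below models that with an injected clock, so it runs without real timers. `DebouncedAutoSave` is hypothetical, and the React-based builder would more likely use `setTimeout` and `clearTimeout`.

```python
from typing import Callable, Optional


class DebouncedAutoSave:
    """Run `save` once, `delay` seconds after the most recent edit."""

    def __init__(self, save: Callable[[], None], delay: float = 1.0,
                 status: str = "published") -> None:
        self._save = save
        self._delay = delay
        self._deadline: Optional[float] = None
        self.status = status

    def on_edit(self, now: float) -> None:
        # Every edit pushes the pending save back by `delay` seconds.
        self._deadline = now + self._delay

    def tick(self, now: float) -> bool:
        """Poll with the current time; return True if a save ran on this tick."""
        if self._deadline is None or now < self._deadline:
            return False
        self._deadline = None
        self.status = "draft"  # per the release note, auto-save sets the status to draft
        self._save()
        return True


if __name__ == "__main__":
    saves = []
    autosave = DebouncedAutoSave(lambda: saves.append("saved"))
    autosave.on_edit(now=0.0)
    autosave.on_edit(now=0.5)        # second edit restarts the 1s delay
    print(autosave.tick(now=1.2))    # False: deadline is now 1.5
    print(autosave.tick(now=1.5))    # True
    print(autosave.status, saves)    # draft ['saved']
```

Restarting the delay on every edit means a burst of changes produces a single save rather than one per keystroke.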

## 🚜 Refactor

* **AIS Data Store Discover**

Fixed the AIS Data Store `discover` method implementation.

* **Workflow Templates**

Refactored workflow templates for better maintainability.

## ⚙️ Miscellaneous Tasks

* **Sub-Category Support**

Added `sub_category` to the connector model and fixed PostgreSQL sub-category handling.

* **Connector Instance Protocol**

Added `connector_instance` to the protocol for better connector management.

* **Server Gem Updates**

Updated the server gem through versions 0.30.4, 0.31.3, 0.32.0, and 0.32.1.

* **Vector Search Enhancements**

Added `execute_search` to connectors for vector operations, and added vector search support for PostgreSQL.

* **Firecrawl Updates**

Added `markdown_hash` to Firecrawl and updated the `web_scraping` extractors for VectorDB integration.

* **Workflow Components**

Updated workflow components with filters, JSON updates, and schema improvements.

* **Chatbot Default State**

Changed the chatbot to render closed by default.

* **Workflow Run Model**

Updated the workflow run model and added a scope that orders by `updated_at` descending.

* **Security Enhancements**

Added Rack::Attack for rate limiting, secured cross-domain access, and added clickjacking prevention.

* **Credential Masking**

Implemented masking of connector credentials for improved security.

* **PG Vector Migration**

Migrated existing PostgreSQL vector connectors to the vector `sub_category`.

* **NASA Workspace**

Added the NASA workspace to the production environment.

* **Workflow Role Group**

Added a Workflows role group for permission management.

* **Workflow Template Database**

Added, then reverted, a template field for workflows in the database.

* **Workflow Builder Validations**

Implemented agent workflow builder validations.

* **Async Dropdown Filtering**

Added async dropdown service support with filters, including filtering connectors by `sub_category`.

* **BigQuery Private Key**

Reformatted `private_key` in the BigQuery client for connection handling.

* **Pinecone Metadata**

Updated metadata handling in the Pinecone DB destination connector.
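The Security Enhancements entry above mentions rate limiting with rack attack. The rack-attack gem (`Rack::Attack`) throttles requests by counting them per key, such as the client IP, within fixed time windows. The Python sketch below illustrates only that fixed-window idea. `FixedWindowThrottle`, the limit, and the period are hypothetical, and the platform's actual throttle rules are not documented here.

```python
from typing import Dict, Tuple


class FixedWindowThrottle:
    """Allow at most `limit` requests per `period`-second window for each key."""

    def __init__(self, limit: int, period: int) -> None:
        self.limit = limit
        self.period = period
        # key (e.g. client IP) -> (window index, requests counted in that window)
        self._state: Dict[str, Tuple[int, int]] = {}

    def allow(self, key: str, now: float) -> bool:
        window = int(now // self.period)
        current_window, count = self._state.get(key, (window, 0))
        if current_window != window:
            count = 0  # a new window has started: reset the counter
        count += 1
        self._state[key] = (window, count)
        return count <= self.limit


if __name__ == "__main__":
    throttle = FixedWindowThrottle(limit=3, period=60)
    print([throttle.allow("203.0.113.7", now=10) for _ in range(4)])
    # [True, True, True, False]
    print(throttle.allow("203.0.113.7", now=61))  # True (new window)
```

A production throttle would keep these counters in a shared cache with expiry rather than in process memory, so that every server instance enforces the same limit.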

---


# Source: https://docs.squared.ai/release-notes/2024/June-2024.md


# June 2024 releases

> Release updates for the month of June

## 🚀 New Features

* **Iterable Destination Connector**

Added Iterable as a destination connector, enabling data to flow into this marketing automation platform.

* **Workspace Settings and useQueryWrapper**

Enhanced workspace settings and introduced `useQueryWrapper` for improved data handling.

* **Amazon S3 Source Connector**

Added support for Amazon S3 as a source connector, enabling data ingestion directly from your S3 buckets.

# 🛠️ Improvements

* **GitHub URL Issues**\

Addressed inconsistencies with GitHub URLs in the application.

* **Change GitHub PAT to SSH Private Key**\

Updated authentication method from GitHub PAT to SSH Private Key for enhanced security.

* **UI Maintainability and Workspace ID on Page Refresh**\

Improved UI maintainability and ensured that the workspace ID persists after page refresh.

* **CE Sync Commit for Multiple Commits**\

Fixed the issue where CE sync commits were not functioning correctly for multiple commits.

* **Add Role in User Info API Response**\

Enhanced the user info API to include role details in the response.

* **Sync Write Update Action for Destination**\

Synchronized the write update action across various destinations for consistency.

* **Fix Sync Name Validation Error**\

Resolved validation errors in sync names due to contract issues.

* **Update Commit Message Regex**\

Updated the regular expression for commit messages to follow git conventions.

* **Update Insert and Update Actions**\

Renamed `insert` and `update` actions to `destination_insert` and `destination_update` for clarity.

* **Comment Contract Valid Rule in Update Sync Action**\

Adjusted the contract validation rule in the update sync action to prevent failures.

* **Fix for Primary Key in `destination_update`**\

Resolved the issue where `destination_update` was not correctly picking up the primary key.

* **Add Limit and Offset Query Validator**\

Introduced validation for limit and offset queries to improve API reliability.

* **Ignore RBAC for Get Workspaces API**\

Modified the API to bypass Role-Based Access Control (RBAC) for fetching workspaces.

* **Heartbeat Timeout Update for Loader**\

Updated the heartbeat timeout for the loader to ensure smoother operations.

* **Add Strong Migrations Gem**\

Integrated the Strong Migrations gem (`strong_migrations`) to detect potentially unsafe database migrations.
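
The **Add Limit and Offset Query Validator** item can be illustrated with a minimal sketch. It is written in Python because these notes do not show the server code. The sketch parses the two query parameters as integers, requires `offset` to be non-negative, and caps `limit`. The `validate_pagination` name, the default limit of 25, and `MAX_LIMIT = 100` are hypothetical values, not the platform's real settings.

```python
# Hypothetical bounds for illustration; the real API limits are not documented here.
DEFAULT_LIMIT = 25
MAX_LIMIT = 100


def validate_pagination(params: dict) -> tuple[int, int]:
    """Parse and validate `limit` and `offset` query parameters.

    Query-string values arrive as strings; returns (limit, offset) or raises ValueError.
    """
    def parse_int(name: str, default: int) -> int:
        raw = params.get(name, default)
        try:
            return int(raw)
        except (TypeError, ValueError):
            raise ValueError(f"{name} must be an integer, got {raw!r}") from None

    limit = parse_int("limit", DEFAULT_LIMIT)
    offset = parse_int("offset", 0)
    if not 1 <= limit <= MAX_LIMIT:
        raise ValueError(f"limit must be between 1 and {MAX_LIMIT}, got {limit}")
    if offset < 0:
        raise ValueError(f"offset must be non-negative, got {offset}")
    return limit, offset


print(validate_pagination({"limit": "50", "offset": "10"}))  # (50, 10)
print(validate_pagination({}))  # (25, 0)
```

This sketch rejects out-of-range values with a clear error instead of silently clamping them, which keeps client bugs visible. A real implementation could reasonably choose to clamp instead.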

Stay tuned for more exciting updates in the upcoming releases!

---

# Source: https://docs.squared.ai/release-notes/2025/June-2025.md

# June 2025 Releases

> Release updates for the month of June

## 🚀 Features

* **Intuit QuickBooks Source Connector**\

Added Intuit QuickBooks as a new source connector for integrating accounting and financial data.

* **Pinecone DB Source Connector**\

Added Pinecone DB as a source connector for reading from vector databases.

* **Qdrant Source Connector**\

Introduced Qdrant as a source connector for vector search data retrieval.

* **Firecrawl Source Connector**\

Added Firecrawl as a new source connector for web crawling and scraping capabilities.

* **White-Labelling Support**\

Introduced comprehensive white-labelling capabilities including custom logo uploads for workspaces and organizations.

* **Workflow CRUD APIs**\

Added full Create, Read, Update, and Delete (CRUD) APIs for workflow management.

* **Components Schema API**\

Introduced a components schema API for better component management and configuration.

* **File-Based Assistant**\

Added file-based assistant functionality for the Space Cadet workspace.

* **Email Verification Redirect**\

Implemented a redirect to the login page when email verification is disabled, streamlining the user experience.

* **Empty Graph Text**\

Added descriptive text for empty graph states to improve user guidance.

* **Deploy to Staging and QA Workflows**\

Created new deployment workflows for staging and QA environments with failure handling and environment visibility.

## 🐛 Bug Fixes

* **Continue Button State**\

Fixed the continue button being incorrectly disabled in forms.

* **Searchbar Double Border**\

Resolved a double-border styling issue on search bars.

* **Long Title Truncation**\

Added truncation for long titles to prevent layout issues.

* **Double Toast Notifications**\

Fixed toast notifications appearing twice.

* **Workflow Component Schema**\

Corrected workflow component schema configuration issues.

* **Qdrant and Pinecone Spec**\

Fixed the Qdrant and Pinecone connector specifications.

* **Read User Permission**\

Removed read user permission from non-admin roles for better security.

## 🚜 Refactor

* **Chatbot Metrics Data App Reports**\

Added support for chatbot metrics in data app reports.

* **Models Audit Changes**\

Implemented audit-related improvements for models.

* **SignUp Email Verification**\

Updated the sign-up flow to handle the email verification configuration.

* **Searchbar Width Prop**\

Added a `width` prop to the search bar component for flexible sizing.

* **Vector Search Protocol**\

Updated protocol handling for Vector\_Search type.

* **Logo Management**\

Refactored logo imports and API responses, and added a sidebar logo component with maximum file size validation.

* **API Host Configuration**\

Changed API host configuration for better environment management.

* **Error Toast Handling**\

Added consistent error toast notifications across the application.
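
For the maximum file size validation mentioned under **Logo Management**, one possible server-side check is sketched below in Python. This is an assumption-laden illustration. The 2 MiB `MAX_LOGO_BYTES` cap and the `logo_size_ok` helper are hypothetical, and the real check may live in the UI instead. Measuring the size with `seek`/`tell` avoids reading the whole upload into memory.

```python
import io
from typing import BinaryIO

# Hypothetical cap for illustration; these notes do not state the real maximum.
MAX_LOGO_BYTES = 2 * 1024 * 1024  # 2 MiB


def logo_size_ok(stream: BinaryIO, max_bytes: int = MAX_LOGO_BYTES) -> bool:
    """Return True if the upload is non-empty and at most max_bytes long.

    Measures the size with seek/tell so the file is never read into memory.
    """
    position = stream.tell()
    stream.seek(0, io.SEEK_END)  # jump to the end; tell() now returns the total size
    size = stream.tell()
    stream.seek(position)  # restore the caller's position for later reads
    return 0 < size <= max_bytes


print(logo_size_ok(io.BytesIO(b"\x89PNG" + b"\0" * 1024)))  # True
print(logo_size_ok(io.BytesIO(b"\0" * (MAX_LOGO_BYTES + 1))))  # False
```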

## ⚙️ Miscellaneous Tasks

* **Logo Upload Infrastructure**\

Added database changes and serializer updates for logo upload functionality.

* **Data App Reports Enhancement**\

Updated Data App reports to show messages and feedback responses.

* **Monthly API Response**\

Updated the API to return `monthly` instead of `month` for consistency.

* **Enterprise Controller Updates**\

Updated the Enterprise Workspace and Organization controllers to support logo uploads.

* **Workflow Model Changes**\

Added workflow model associations and a unique index on workflow names.

* **Component URL Icons**\

Updated component URL icons for better visual consistency.

* **Server Gem Updates**\

Updated server gem through versions 0.27.0, 0.29.0, 0.30.0, and 0.30.3.

* **S3 Bucket Storage**\

Enabled an S3 bucket for storage.

* **Connector Sub-Categories**\

Added sub\_category field to connector meta for better organization.

* **Firecrawl Optimizations**\

Optimized Firecrawl client and added query support.

* **Demo Stage Workspace**\

Added the demo stage workspace ID to the list of assistant-enabled workspace IDs.

* **Component Model Updates**\

Updated component model with improvements.

* **BCLL Cleanup**\

Removed hardcoded BCLL logo and custom height configurations.
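
The unique index on workflow names under **Workflow Model Changes** works roughly as sketched below. The sketch uses Python's built-in `sqlite3` module as a stand-in for the real database. Several details are assumptions: the `workflows` table layout, the index name, the example workflow names, and scoping uniqueness per workspace on (`workspace_id`, `name`). The notes do not say whether names are unique globally or per workspace.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE workflows ("
    " id INTEGER PRIMARY KEY, workspace_id INTEGER NOT NULL, name TEXT NOT NULL)"
)
# Assumption: names are unique within a workspace, not across all workspaces.
conn.execute(
    "CREATE UNIQUE INDEX index_workflows_on_workspace_id_and_name"
    " ON workflows (workspace_id, name)"
)


def create_workflow(workspace_id: int, name: str) -> bool:
    """Insert a workflow; return False if the name is already taken in that workspace."""
    try:
        with conn:  # commits on success, rolls back if the INSERT fails
            conn.execute(
                "INSERT INTO workflows (workspace_id, name) VALUES (?, ?)",
                (workspace_id, name),
            )
        return True
    except sqlite3.IntegrityError:  # raised when the unique index is violated
        return False


print(create_workflow(1, "Lead Scoring"))  # True
print(create_workflow(1, "Lead Scoring"))  # False: duplicate within workspace 1
print(create_workflow(2, "Lead Scoring"))  # True: different workspace
```

Letting the database enforce uniqueness, rather than checking for an existing name before inserting, avoids a race between two concurrent requests that create the same name.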

---

# Source: https://docs.squared.ai/release-notes/2025/March-2025.md

# March 2025 Releases

> Release updates for the month of March

## 🚀 Features

* **Custom RBAC UI**\

Introduced a comprehensive Role-Based Access Control interface, allowing administrators to create, edit, and manage custom roles with granular permissions across the platform.

* **SSO Integration**\

Added Single Sign-On (SSO) support, including an SSO configuration controller, SSO login, an Assertion Consumer Service (ACS) callback, and organization settings for seamless enterprise authentication.

* **WatsonX.AI Connector**\

Added IBM WatsonX.AI as a new AI/ML source connector, expanding the available AI model integrations.

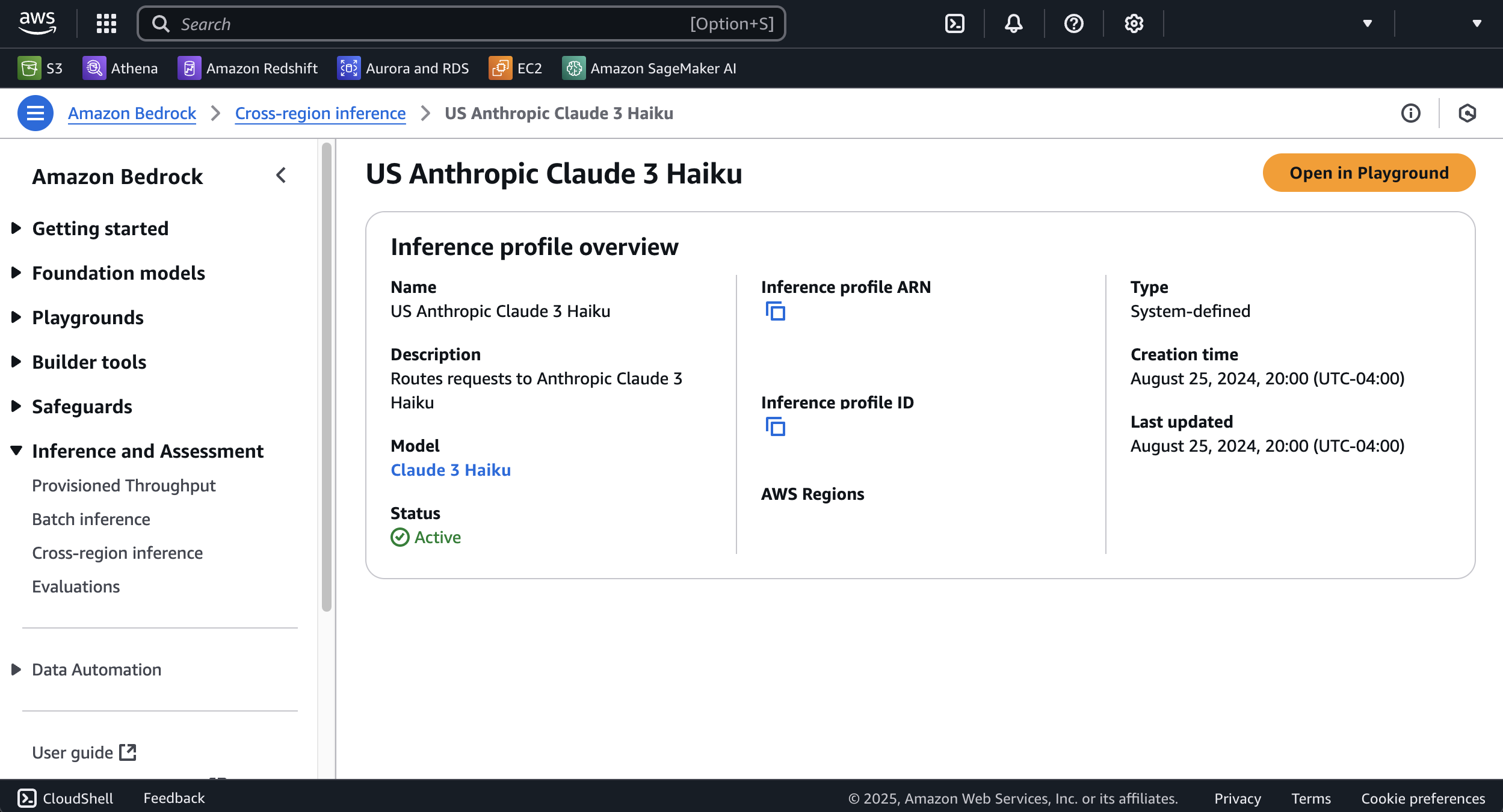

* **Anthropic AI/ML Source Connector**\

Introduced Anthropic as a new AI/ML source connector for leveraging Claude models in data workflows.

* **Chatbot Enhancements**\

Added feedback display to the chatbot preview and included conversation history as context for user prompts, improving the conversational AI experience.

* **Sync Export API**\

Added API endpoints for exporting sync configurations and sync records, enabling better data portability and backups.

* **Environment Variables for Connection Config**\

Added support for environment variables in connection configurations, improving security and deployment flexibility.

* **Enhanced Search Functionality**\

Added search bars to the model selection, destination, and data source views for easier navigation when there are many items.

* **Delete Confirmation Modals**\

Added confirmation dialogs when deleting sources and workspace members to prevent accidental data loss.

* **Docker Hub Integration**\

Updated workflow to automatically push images to Docker Hub when new releases are created.

* **Snyk Security Testing**\

Integrated Snyk tests for the server, UI, and integrations to improve security vulnerability detection.

* **Primary Key Helper Text**\

Added helper text and tooltips for Primary Key fields to improve user understanding during data modeling.
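
**Environment Variables for Connection Config** could work roughly as follows. Placeholder strings in a connection configuration are replaced with environment values when the connection is built, so secrets need not be stored in the saved config. The `${VAR_NAME}` placeholder syntax and the `resolve_env` helper are hypothetical, so check the connector documentation for the syntax the platform actually accepts.

```python
import os
import re

# Hypothetical placeholder syntax: ${VAR_NAME}.
_PLACEHOLDER = re.compile(r"\$\{([A-Za-z_][A-Za-z0-9_]*)\}")


def resolve_env(config, env=None):
    """Recursively replace ${VAR} placeholders in string values with environment values.

    Raises KeyError if a referenced variable is not set, instead of leaving the
    placeholder in place and failing later with a confusing connection error.
    """
    env = os.environ if env is None else env

    def substitute(match):
        name = match.group(1)
        if name not in env:
            raise KeyError(f"environment variable {name} is not set")
        return env[name]

    if isinstance(config, dict):
        return {key: resolve_env(value, env) for key, value in config.items()}
    if isinstance(config, list):
        return [resolve_env(item, env) for item in config]
    if isinstance(config, str):
        return _PLACEHOLDER.sub(substitute, config)
    return config  # numbers, booleans, None pass through unchanged


config = {"host": "db.internal", "port": 5432, "password": "${DB_PASSWORD}"}
print(resolve_env(config, env={"DB_PASSWORD": "s3cret"}))
# {'host': 'db.internal', 'port': 5432, 'password': 's3cret'}
```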

## 🐛 Bug Fixes

* **Pagination in Empty State**\

Fixed pagination controls appearing when there are no results to display.

* **Content Layout**\

Corrected content centering at maximum width for better visual consistency.

* **User Login with Email Confirmation**\

Resolved issues with user login flow when email confirmation is required.

* **Role Permission Count**\

Fixed the incorrect permission count shown for roles; roles now display a grouped permission count.

* **SSO Entity ID**\

Corrected entity ID handling in SSO configuration.

* **Missing Logo Icons**\

Fixed logo icons missing from various authentication views.

* **Invite User Workflow**\