# Lmdeploy


---

inference pipeline

==================

.. currentmodule:: lmdeploy

pipeline

--------

.. autofunction:: pipeline

serving

--------

.. autofunction:: serve

.. autofunction:: client

PytorchEngineConfig

-------------------

.. autoclass:: PytorchEngineConfig

TurbomindEngineConfig

---------------------

.. autoclass:: TurbomindEngineConfig

GenerationConfig

----------------

.. autoclass:: GenerationConfig

ChatTemplateConfig

------------------

.. autoclass:: ChatTemplateConfig

---

On Other Platforms

=================================

.. toctree::

:maxdepth: 1

:caption: OtherPF

ascend/get_started.md

maca/get_started.md

camb/get_started.md

---

Welcome to LMDeploy's tutorials!

====================================

.. figure:: ./_static/image/lmdeploy-logo.svg

:width: 50%

:align: center

:alt: LMDeploy

:class: no-scaled-link

.. raw:: html

LMDeploy is a toolkit for compressing, deploying, and serving LLMs.

Star

Watch

Fork

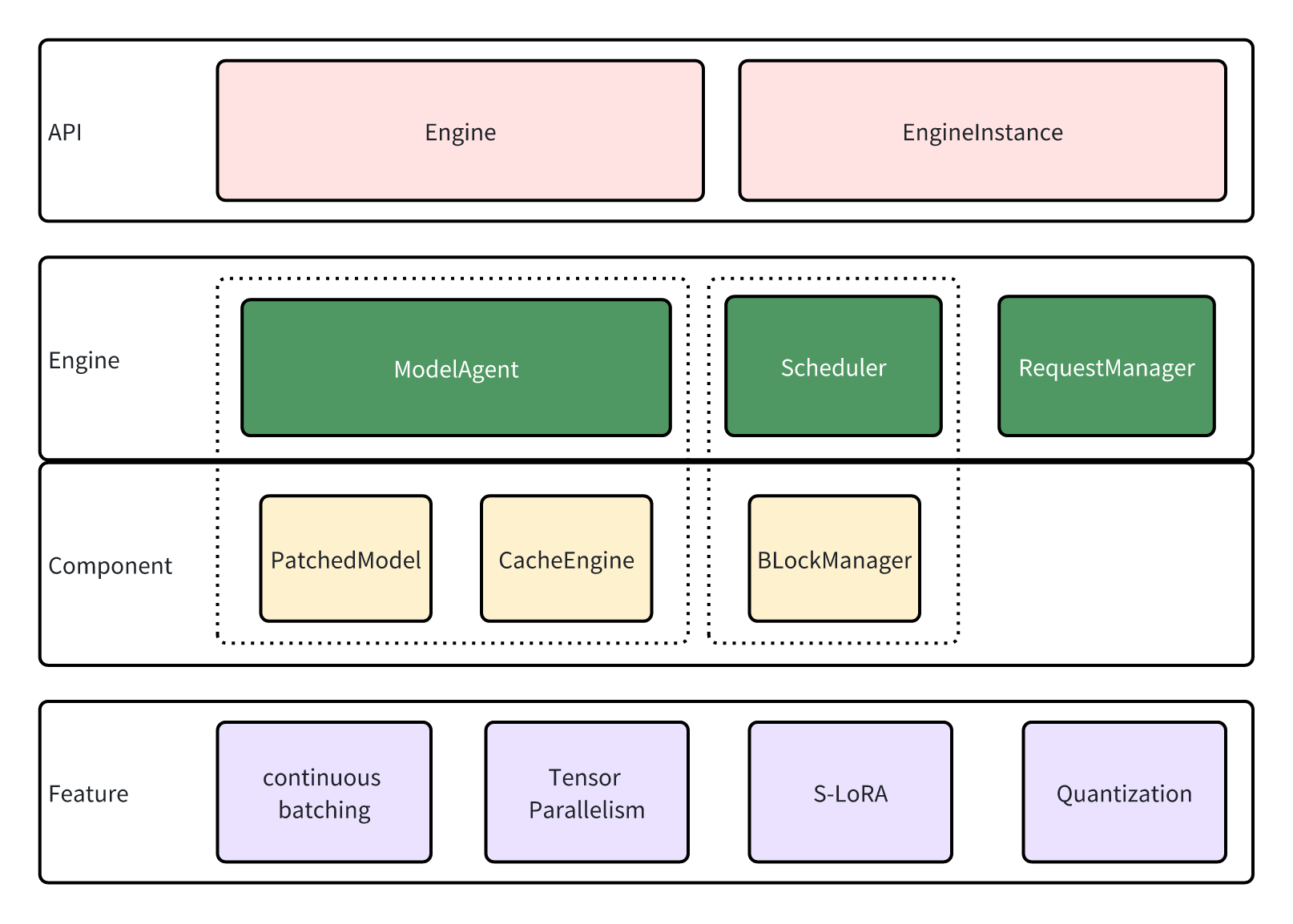

LMDeploy has the following core features:

* **Efficient Inference**: LMDeploy delivers up to 1.8x higher request throughput than vLLM by introducing key features such as persistent batch (a.k.a. continuous batching), blocked KV cache, dynamic split&fuse, tensor parallelism, and high-performance CUDA kernels.

* **Effective Quantization**: LMDeploy supports weight-only and k/v quantization, and the 4-bit inference performance is 2.4x higher than FP16. The quantization quality has been confirmed via OpenCompass evaluation.

* **Effortless Distribution Server**: Leveraging the request distribution service, LMDeploy facilitates an easy and efficient deployment of multi-model services across multiple machines and cards.

* **Excellent Compatibility**: LMDeploy supports using KV Cache Quant, AWQ, and Automatic Prefix Caching simultaneously.

Documentation

-------------

.. _get_started:

.. toctree::

:maxdepth: 1

:caption: Get Started

get_started/installation.md

get_started/get_started.md

get_started/index.rst

.. _supported_models:

.. toctree::

:maxdepth: 1

:caption: Models

supported_models/supported_models.md

supported_models/reward_models.md

.. _llm_deployment:

.. toctree::

:maxdepth: 1

:caption: Large Language Models(LLMs) Deployment

llm/pipeline.md

llm/api_server.md

llm/api_server_tools.md

llm/api_server_reasoning.md

llm/api_server_lora.md

llm/proxy_server.md

.. _vlm_deployment:

.. toctree::

:maxdepth: 1

:caption: Vision-Language Models(VLMs) Deployment

multi_modal/vl_pipeline.md

multi_modal/api_server_vl.md

multi_modal/index.rst

.. _quantization:

.. toctree::

:maxdepth: 1

:caption: Quantization

quantization/w4a16.md

quantization/w8a8.md

quantization/kv_quant.md

.. _benchmark:

.. toctree::

:maxdepth: 1

:caption: Benchmark

benchmark/benchmark.md

benchmark/evaluate_with_opencompass.md

benchmark/evaluate_with_vlmevalkit.md

.. toctree::

:maxdepth: 1

:caption: Advanced Guide

inference/turbomind.md

inference/pytorch.md

advance/pytorch_new_model.md

advance/long_context.md

advance/chat_template.md

advance/debug_turbomind.md

advance/structed_output.md

advance/pytorch_multinodes.md

advance/pytorch_profiling.md

advance/metrics.md

advance/context_parallel.md

advance/spec_decoding.md

advance/update_weights.md

.. toctree::

:maxdepth: 1

:caption: API Reference

api/pipeline.rst

Indices and tables

==================

* :ref:`genindex`

* :ref:`search`

---

Vision-Language Models

=================================

.. toctree::

:maxdepth: 2

:caption: Examples

deepseek_vl2.md

llava.md

internvl.md

xcomposer2d5.md

cogvlm.md

minicpmv.md

phi3.md

mllama.md

qwen2_vl.md

qwen2_5_vl.md

molmo.md

gemma3.md

---

# Customized chat template

The effect of the applied chat template can be observed by setting the log level to `INFO`.

LMDeploy supports two methods of adding chat templates:

- One approach is to utilize an existing conversation template by directly configuring a JSON file like the following.

```json

{

"model_name": "your awesome chat template name",

"system": "<|im_start|>system\n",

"meta_instruction": "You are a robot developed by LMDeploy.",

"eosys": "<|im_end|>\n",

"user": "<|im_start|>user\n",

"eoh": "<|im_end|>\n",

"assistant": "<|im_start|>assistant\n",

"eoa": "<|im_end|>",

"separator": "\n",

"capability": "chat",

"stop_words": ["<|im_end|>"]

}

```

`model_name` is a required field and can be either the name of an LMDeploy built-in chat template (which can be viewed through `lmdeploy list`), or a new name. Other fields are optional.

1. When `model_name` is the name of a built-in chat template, the non-null fields in the JSON file will override the corresponding attributes of the original chat template.

2. However, when `model_name` is a new name, it will register `BaseChatTemplate` directly as a new chat template. For the specific definition, refer to [BaseChatTemplate](https://github.com/InternLM/lmdeploy/blob/24bd4b9ab6a15b3952e62bcfc72eaba03bce9dcb/lmdeploy/model.py#L113-L188).

The new chat template would be like this:

```

{system}{meta_instruction}{eosys}{user}{user_content}{eoh}{assistant}{assistant_content}{eoa}{separator}{user}...

```
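As a rough illustration of how these fields combine, the sketch below concatenates them in the order shown above. It is plain Python and does not use LMDeploy; the `render_prompt` helper is hypothetical, and the real `BaseChatTemplate` may handle edge cases (such as the final turn or an empty system prompt) differently.

```python
def render_prompt(template: dict, turns: list) -> str:
    """Concatenate template fields in the documented order.

    `turns` is a list of (user_content, assistant_content) pairs. Pass
    None as assistant_content for the turn the model should answer.
    """
    t = template
    prompt = f"{t['system']}{t['meta_instruction']}{t['eosys']}"
    for user_content, assistant_content in turns:
        prompt += f"{t['user']}{user_content}{t['eoh']}{t['assistant']}"
        if assistant_content is not None:
            # A completed assistant turn is closed by eoa and the separator.
            prompt += f"{assistant_content}{t['eoa']}{t['separator']}"
    return prompt


# Field values copied from the JSON example above.
template = {
    'system': '<|im_start|>system\n',
    'meta_instruction': 'You are a robot developed by LMDeploy.',
    'eosys': '<|im_end|>\n',
    'user': '<|im_start|>user\n',
    'eoh': '<|im_end|>\n',
    'assistant': '<|im_start|>assistant\n',
    'eoa': '<|im_end|>',
    'separator': '\n',
}

prompt = render_prompt(template, [('who are you?', None)])
print(prompt)
```

Printing the rendered prompt this way is a quick check that a custom JSON file produces the token layout you expect before serving a model with it.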

When using the CLI tool, you can pass in a custom chat template with `--chat-template`, for example:

```shell

lmdeploy serve api_server internlm/internlm2_5-7b-chat --chat-template ${JSON_FILE}

```

You can also pass it in through the interface function, for example:

```python

from lmdeploy import ChatTemplateConfig, serve

serve('internlm/internlm2_5-7b-chat',

chat_template_config=ChatTemplateConfig.from_json('${JSON_FILE}'))

```

- Another approach is to customize a Python chat template class like the existing LMDeploy chat templates. It can be used directly after successful registration. The advantages are a high degree of customization and strong controllability. Below is an example of registering an LMDeploy chat template.

```python

from lmdeploy.model import MODELS, BaseChatTemplate


@MODELS.register_module(name='customized_model')
class CustomizedModel(BaseChatTemplate):
    """A customized chat template."""

    def __init__(self,
                 system='<|im_start|>system\n',
                 meta_instruction='You are a robot developed by LMDeploy.',
                 user='<|im_start|>user\n',
                 assistant='<|im_start|>assistant\n',
                 eosys='<|im_end|>\n',
                 eoh='<|im_end|>\n',
                 eoa='<|im_end|>',
                 separator='\n',
                 stop_words=['<|im_end|>', '<|action_end|>']):
        super().__init__(system=system,
                         meta_instruction=meta_instruction,
                         eosys=eosys,
                         user=user,
                         eoh=eoh,
                         assistant=assistant,
                         eoa=eoa,
                         separator=separator,
                         stop_words=stop_words)


from lmdeploy import ChatTemplateConfig, pipeline

messages = [{'role': 'user', 'content': 'who are you?'}]
pipe = pipeline('internlm/internlm2_5-7b-chat',
                chat_template_config=ChatTemplateConfig('customized_model'))
for response in pipe.stream_infer(messages):
    print(response.text, end='')

```

In this example, we register an LMDeploy chat template whose meta instruction states that the model was developed by LMDeploy, so when the user asks who the model is, the model will answer that it was created by LMDeploy.

---

# Context Parallel

When the memory on a single GPU is insufficient to deploy a model, it is often deployed using tensor parallelism (TP), which generally requires `num_key_value_heads` to be divisible by `TP`. If you want to deploy with `TP > num_key_value_heads`, the kv-heads should be duplicated to meet the divisibility requirement. However, this has two disadvantages:

1. The amount of available kv_cache is halved, which reduces the maximum supported session length.

2. The maximum inference batch size is reduced, leading to lower throughput.
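The cost of duplication can be estimated with simple arithmetic. The sketch below is an illustration only, under the simplifying assumption that every duplicated kv-head consumes cache memory without adding capacity; the helper name `kv_head_replication` is hypothetical and is not part of LMDeploy.

```python
def kv_head_replication(num_key_value_heads: int, tp: int) -> int:
    """Return how many copies of each kv-head exist when sharding over `tp` ranks."""
    if tp <= num_key_value_heads:
        if num_key_value_heads % tp != 0:
            raise ValueError('num_key_value_heads must be divisible by tp')
        return 1  # every rank owns distinct heads, nothing is duplicated
    if tp % num_key_value_heads != 0:
        raise ValueError('tp must be a multiple of num_key_value_heads')
    return tp // num_key_value_heads


# Example values: 4 kv-heads deployed with TP=8.
copies = kv_head_replication(num_key_value_heads=4, tp=8)
usable_fraction = 1 / copies  # share of kv_cache memory holding unique data
print(copies, usable_fraction)
```

With 4 kv-heads and `TP=8`, each head is stored twice, so only half of the cache memory holds unique data, which matches the halving described in the first point.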

To address this issue, the TurboMind inference backend supports setting `attn_dp_size`, which avoids creating copies of kv-heads, but this introduces data imbalance. To eliminate data imbalance, TurboMind supports context parallelism (CP), which allows kv_cache to be stored interleaved on different cp_ranks. See the example below:

```

cp_rank=2, prompt_len=5, generation_len=4

kv_cache stored on cp_rank0: 0, 2, 4, 6, 8

kv_cache stored on cp_rank1: 1, 3, 5, 7

```
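The interleaved layout in the example above can be reproduced with a round-robin assignment of token positions to ranks. This is a minimal sketch for intuition, not TurboMind's implementation; the function name and the `cp_size` parameter are hypothetical.

```python
def interleave_kv_positions(num_tokens: int, cp_size: int) -> list:
    """Assign token positions to CP ranks round-robin (position % cp_size)."""
    return [list(range(rank, num_tokens, cp_size)) for rank in range(cp_size)]


prompt_len, generation_len = 5, 4
layout = interleave_kv_positions(prompt_len + generation_len, cp_size=2)
for rank, positions in enumerate(layout):
    print(f'kv_cache stored on cp_rank{rank}: {positions}')
```

Because consecutive tokens alternate between ranks, the per-rank cache sizes differ by at most one token regardless of sequence length, which is what removes the imbalance.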

## Usage

Taking Intern-S1 / Qwen3-235B-A22B as an example, their `num_key_value_heads` is 4. If you want to deploy with `TP=8` and avoid duplication of kv_cache, you can deploy in the following way:

```

lmdeploy serve api_server internlm/Intern-S1 --tp 8 --cp 2

lmdeploy serve api_server Qwen/Qwen3-235B-A22B --tp 8 --cp 2

```

---

# How to debug Turbomind

Turbomind is implemented in C++, which is not as easy to debug as Python. This document provides basic methods for debugging Turbomind.

## Prerequisite

First, complete the local compilation according to the commands in [Install from source](../get_started/installation.md).

## Configure Python debug environment

Since many large companies currently use CentOS 7 for online production environments, we will use CentOS 7 as an example to illustrate the process.

### Obtain `glibc` and `python3` versions

```bash

rpm -qa | grep glibc

rpm -qa | grep python3

```

The result should be similar to this:

```

[username@hostname workdir]# rpm -qa | grep glibc

glibc-2.17-325.el7_9.x86_64

glibc-common-2.17-325.el7_9.x86_64

glibc-headers-2.17-325.el7_9.x86_64

glibc-devel-2.17-325.el7_9.x86_64

[username@hostname workdir]# rpm -qa | grep python3

python3-pip-9.0.3-8.el7.noarch

python3-rpm-macros-3-34.el7.noarch

python3-rpm-generators-6-2.el7.noarch

python3-setuptools-39.2.0-10.el7.noarch

python3-3.6.8-21.el7_9.x86_64

python3-devel-3.6.8-21.el7_9.x86_64

python3.6.4-sre-1.el6.x86_64

```

Based on the information above, we can see that the version of `glibc` is `2.17-325.el7_9.x86_64` and the version of `python3` is `3.6.8-21.el7_9.x86_64`.

### Download and install `debuginfo` library

Download `glibc-debuginfo-common-2.17-325.el7.x86_64.rpm`, `glibc-debuginfo-2.17-325.el7.x86_64.rpm`, and `python3-debuginfo-3.6.8-21.el7.x86_64.rpm` from http://debuginfo.centos.org/7/x86_64.

```bash

rpm -ivh glibc-debuginfo-common-2.17-325.el7.x86_64.rpm

rpm -ivh glibc-debuginfo-2.17-325.el7.x86_64.rpm

rpm -ivh python3-debuginfo-3.6.8-21.el7.x86_64.rpm

```

### Upgrade GDB

```bash

sudo yum install devtoolset-10 -y

echo "source scl_source enable devtoolset-10" >> ~/.bashrc

source ~/.bashrc

```

### Verification

```bash

gdb python3

```

The output should be similar to this:

```

[username@hostname workdir]# gdb python3

GNU gdb (GDB) Red Hat Enterprise Linux 9.2-10.el7

Copyright (C) 2020 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law.

Type "show copying" and "show warranty" for details.

This GDB was configured as "x86_64-redhat-linux-gnu".

Type "show configuration" for configuration details.

For bug reporting instructions, please see:

.

Find the GDB manual and other documentation resources online at:

.

For help, type "help".

Type "apropos word" to search for commands related to "word"...

Reading symbols from python3...

(gdb)

```

If it shows `Reading symbols from python3`, the configuration has been successful.

For other operating systems, please refer to [DebuggingWithGdb](https://wiki.python.org/moin/DebuggingWithGdb).

## Set up symbolic links

After setting up symbolic links, there is no need to install it locally with `pip` every time.

```bash

# Change directory to lmdeploy, e.g.

cd /workdir/lmdeploy

# Since it has been built in the build directory

# Link the lib directory

cd lmdeploy && ln -s ../build/lib . && cd ..

# (Optional) Link compile_commands.json for clangd index

ln -s build/compile_commands.json .

```

## Start debugging

````bash

# Use gdb to start the API server with Llama-2-13b-chat-hf, e.g.

gdb --args python3 -m lmdeploy serve api_server /workdir/Llama-2-13b-chat-hf

# Set directories in gdb

Reading symbols from python3...

(gdb) set directories /workdir/lmdeploy

# Set a breakpoint using the relative path, e.g.

(gdb) b src/turbomind/models/llama/BlockManager.cc:104

# When it shows

# ```

# No source file named src/turbomind/models/llama/BlockManager.cc.

# Make breakpoint pending on future shared library load? (y or [n])

# ```

# Just type `y` and press enter

# Run

(gdb) r

# (Optional) Use https://github.com/InternLM/lmdeploy/blob/main/benchmark/profile_restful_api.py to send a request

python3 profile_restful_api.py --backend lmdeploy --dataset-path /workdir/ShareGPT_V3_unfiltered_cleaned_split.json --num_prompts 1

````

## Using GDB

Refer to [GDB Execution Commands](https://lldb.llvm.org/use/map.html) and happy debugging.

---

# Context length extrapolation

Long text extrapolation refers to the ability of an LLM to handle data longer than the training text during inference. The TurboMind engine now supports [LlamaDynamicNTKScalingRotaryEmbedding](https://github.com/huggingface/transformers/blob/main/src/transformers/models/llama/modeling_llama.py#L178), and the implementation is consistent with Hugging Face.
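For intuition, dynamic NTK scaling enlarges the rotary base once the sequence exceeds the trained context length. The sketch below follows the formula used by the Hugging Face class as we understand it; the function name and the example values (`dim=128`, `max_position_embeddings=4096`) are illustrative assumptions and do not describe TurboMind internals.

```python
def dynamic_ntk_base(base: float, dim: int, seq_len: int,
                     max_position_embeddings: int,
                     scaling_factor: float) -> float:
    """Return the rotary base to use for a sequence of length `seq_len`."""
    if seq_len <= max_position_embeddings:
        # Within the trained context window the base is left unchanged.
        return base
    scale = (scaling_factor * seq_len / max_position_embeddings) - (scaling_factor - 1)
    return base * scale ** (dim / (dim - 2))


# Assumed example values: head dim 128, trained context 4096, factor 2.5.
short_base = dynamic_ntk_base(10000.0, 128, 2048, 4096, 2.5)
long_base = dynamic_ntk_base(10000.0, 128, 16384, 4096, 2.5)
print(short_base, long_base)
```

A larger scaling factor makes the base grow faster with sequence length, which lowers the rotation frequencies and stretches the positional encoding over longer contexts.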

## Usage

You can enable context length extrapolation by modifying the `TurbomindEngineConfig`. Set `session_len` to the expected length and change `rope_scaling_factor` to a number no less than 1.0.

Take `internlm2_5-7b-chat-1m` as an example, which supports a context length of up to **1 million tokens**:

```python

from lmdeploy import pipeline, GenerationConfig, TurbomindEngineConfig

backend_config = TurbomindEngineConfig(

rope_scaling_factor=2.5,

session_len=1000000,

max_batch_size=1,

cache_max_entry_count=0.7,

tp=4)

pipe = pipeline('internlm/internlm2_5-7b-chat-1m', backend_config=backend_config)

prompt = 'Use a long prompt to replace this sentence'

gen_config = GenerationConfig(top_p=0.8,

top_k=40,

temperature=0.8,

max_new_tokens=1024)

response = pipe(prompt, gen_config=gen_config)

print(response)

```

## Evaluation

We use several methods to evaluate the long-context inference ability of LMDeploy, including [passkey retrieval](#passkey-retrieval), [needle in a haystack](#needle-in-a-haystack), and computing [perplexity](#perplexity).

### Passkey Retrieval

You can try the following code to test how many times LMDeploy can retrieve the special key.

```python

import time

import numpy as np

from lmdeploy import TurbomindEngineConfig, pipeline

session_len = 1000000
backend_config = TurbomindEngineConfig(
    rope_scaling_factor=2.5,
    session_len=session_len,
    max_batch_size=1,
    cache_max_entry_count=0.7,
    tp=4)
pipe = pipeline('internlm/internlm2_5-7b-chat-1m', backend_config=backend_config)


def passkey_retrieval(session_len, n_round=5):
    # create long context input
    tok = pipe.tokenizer
    task_description = 'There is an important info hidden inside a lot of irrelevant text. Find it and memorize them. I will quiz you about the important information there.'
    garbage = 'The grass is green. The sky is blue. The sun is yellow. Here we go. There and back again.'

    for _ in range(n_round):
        start = time.perf_counter()
        # number of filler sentences that fit, leaving ~1000 tokens of headroom
        n_times = (session_len - 1000) // len(tok.encode(garbage))
        n_garbage_prefix = np.random.randint(0, n_times)
        n_garbage_suffix = n_times - n_garbage_prefix
        garbage_prefix = ' '.join([garbage] * n_garbage_prefix)
        garbage_suffix = ' '.join([garbage] * n_garbage_suffix)
        pass_key = np.random.randint(1, 50000)
        information_line = f'The pass key is {pass_key}. Remember it. {pass_key} is the pass key.'  # noqa: E501
        final_question = 'What is the pass key? The pass key is'
        lines = [
            task_description,
            garbage_prefix,
            information_line,
            garbage_suffix,
            final_question,
        ]

        # inference
        prompt = ' '.join(lines)
        response = pipe([prompt])
        print(pass_key, response)
        end = time.perf_counter()
        print(f'duration: {end - start} s')


passkey_retrieval(session_len, 5)

```

This test takes approximately 364 seconds per round when run on A100-80G GPUs.

### Needle In A Haystack

[OpenCompass](https://github.com/open-compass/opencompass) offers very useful tools to perform needle-in-a-haystack evaluation. For specific instructions, please refer to the [guide](https://github.com/open-compass/opencompass/blob/main/docs/en/advanced_guides/needleinahaystack_eval.md).

### Perplexity

The following code demonstrates how to use LMDeploy to calculate perplexity.

```python

from transformers import AutoTokenizer

from lmdeploy import TurbomindEngineConfig, pipeline

import numpy as np

# load model and tokenizer

model_repoid_or_path = 'internlm/internlm2_5-7b-chat-1m'

backend_config = TurbomindEngineConfig(

rope_scaling_factor=2.5,

session_len=1000000,

max_batch_size=1,

cache_max_entry_count=0.7,

tp=4)

pipe = pipeline(model_repoid_or_path, backend_config=backend_config)

tokenizer = AutoTokenizer.from_pretrained(model_repoid_or_path, trust_remote_code=True)

# get perplexity

text = 'Use a long prompt to replace this sentence'

input_ids = tokenizer.encode(text)

ppl = pipe.get_ppl(input_ids)[0]

print(ppl)

```
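For reference, perplexity is conventionally defined as the exponential of the mean negative log-likelihood per token. The sketch below computes it from a list of per-token log-probabilities. It is a generic illustration with made-up values and makes no claim about how `get_ppl` is implemented or normalized.

```python
import math


def perplexity(token_logprobs):
    """Return exp(mean negative log-likelihood) over the given tokens."""
    if not token_logprobs:
        raise ValueError('at least one token log-probability is required')
    nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(nll)


# A model that assigns probability 0.25 to every token has a perplexity
# of 4 (up to floating-point rounding).
uniform_logprobs = [math.log(0.25)] * 8
ppl_value = perplexity(uniform_logprobs)
print(ppl_value)
```

Lower values mean the model finds the text more predictable, so comparing perplexity at increasing context lengths indicates whether extrapolation degrades the model.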

---

# Production Metrics

LMDeploy exposes a set of metrics via Prometheus, and provides visualization via Grafana.

## Setup Guide

This section describes how to set up the monitoring stack (Prometheus + Grafana) provided in the `lmdeploy/monitoring` directory.

## Prerequisites

- [Docker](https://docs.docker.com/engine/install/) and [Docker Compose](https://docs.docker.com/compose/install/) installed

- LMDeploy server running with metrics system enabled

## Usage (DP = 1)

1. **Start your LMDeploy server with metrics enabled**

```

lmdeploy serve api_server Qwen/Qwen2.5-7B-Instruct --enable-metrics

```

Replace the model path according to your needs.

By default, the metrics endpoint will be available at `http://<your_server_ip>:23333/metrics`.

2. **Navigate to the monitoring directory**

```

cd lmdeploy/monitoring

```

3. **Start the monitoring stack**

```

docker compose up -d

```

This command will start Prometheus and Grafana in the background.

4. **Access the monitoring interfaces**

- Prometheus: Open your web browser and go to http://localhost:9090.

- Grafana: Open your web browser and go to http://localhost:3000.

5. **Log in to Grafana**

- Default Username: `admin`

- Default Password: `admin`

You will be prompted to change the password upon your first login.

6. **View the Dashboard**

The LMDeploy dashboard is pre-configured and should be available automatically.
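If the dashboard stays empty, it can help to inspect the raw metrics endpoint from step 1 directly. The sketch below parses text in the Prometheus exposition format into a dictionary. The metric names in the sample are hypothetical placeholders, not real LMDeploy metric names, and the parser assumes sample lines carry no trailing timestamp.

```python
def parse_prometheus_text(text: str) -> dict:
    """Map each sample line 'name{labels} value' to a float value."""
    samples = {}
    for line in text.splitlines():
        line = line.strip()
        # Skip blank lines and '# HELP' / '# TYPE' comment lines.
        if not line or line.startswith('#'):
            continue
        name, _, value = line.rpartition(' ')
        samples[name] = float(value)
    return samples


# Hypothetical payload; in practice, fetch the text from the metrics
# endpoint of your running server, e.g. with urllib.request.urlopen.
sample = '''# HELP demo_requests_total Hypothetical counter.
# TYPE demo_requests_total counter
demo_requests_total{model="demo"} 12
demo_queue_size 3
'''
metrics = parse_prometheus_text(sample)
print(metrics)
```

If the endpoint returns samples but Grafana shows nothing, the problem is more likely in the Prometheus scrape target than in the LMDeploy server.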

## Usage (DP > 1)

1. **Start your LMDeploy server with metrics enabled**

As an example, we use the model `Qwen/Qwen2.5-7B-Instruct` with `DP=2, TP=2`. Start the service as follows:

```bash

# Proxy server

lmdeploy serve proxy --server-port 8000 --routing-strategy 'min_expected_latency' --serving-strategy Hybrid --log-level INFO

# API server

LMDEPLOY_DP_MASTER_ADDR=127.0.0.1 \

LMDEPLOY_DP_MASTER_PORT=29555 \

lmdeploy serve api_server \

Qwen/Qwen2.5-7B-Instruct \

--backend pytorch \

--tp 2 \

--dp 2 \

--proxy-url http://0.0.0.0:8000 \

--nnodes 1 \

--node-rank 0 \

--enable-metrics

```

You should be able to see multiple API servers added to the proxy server list. Details can be found in `lmdeploy/serve/proxy/proxy_config.json`.

For example, you may have the following API servers:

```

http://$host_ip:$api_server_port1

http://$host_ip:$api_server_port2

```

2. **Modify the Prometheus configuration**

When `DP > 1`, LMDeploy will launch one API server for each DP rank. If you want to monitor a specific API server, e.g. `http://$host_ip:$api_server_port1`, modify the configuration file `lmdeploy/monitoring/prometheus.yaml` as follows.

> Note that you should use the actual host machine IP instead of `127.0.0.1` here, since LMDeploy starts the API server using the actual host IP when `DP > 1`

```

global:
  scrape_interval: 5s
  evaluation_interval: 30s

scrape_configs:
  - job_name: lmdeploy
    static_configs:
      - targets:
          - '$host_ip:$api_server_port1' # <= Modify this

```

3. **Navigate to the monitoring folder and perform the same steps as described above**

## Troubleshooting

1. **Port conflicts**

Check if any services are occupying ports `23333` (LMDeploy server port), `9090` (Prometheus port), or `3000` (Grafana port). You can either stop the conflicting services or modify the config files as follows:

- Modify LMDeploy server port for Prometheus scrape

In `lmdeploy/monitoring/prometheus.yaml`

```

global:
  scrape_interval: 5s
  evaluation_interval: 30s

scrape_configs:
  - job_name: lmdeploy
    static_configs:
      - targets:
          - '127.0.0.1:23333' # <= Modify this LMDeploy server port 23333, need to match the running server port

```

- Modify Prometheus port

In `lmdeploy/monitoring/grafana/datasources/datasource.yaml`

```

apiVersion: 1

datasources:
  - name: Prometheus
    type: prometheus
    access: proxy
    url: http://localhost:9090 # <= Modify this Prometheus interface port 9090
    isDefault: true
    editable: false

```

- Modify Grafana port:

In `lmdeploy/monitoring/docker-compose.yaml`, for example, change the port to `3090`

Option 1: Add `GF_SERVER_HTTP_PORT` to the environment section.

```

environment:
  - GF_AUTH_ANONYMOUS_ENABLED=true
  - GF_SERVER_HTTP_PORT=3090 # <= Add this line

```

Option 2: Use port mapping.

```

grafana:
  image: grafana/grafana:latest
  container_name: grafana
  ports:
    - "3090:3000" # <= Host:Container port mapping

```

2. **No data on the dashboard**

- Create traffic

Send some requests to the LMDeploy server to generate traffic:

```

python3 benchmark/profile_restful_api.py --backend lmdeploy --num-prompts 5000 --dataset-path ShareGPT_V3_unfiltered_cleaned_split.json

```

After refreshing, you should be able to see data on the dashboard.

---

# PyTorchEngine Multi-Node Deployment Guide

To support larger-scale model deployment requirements, PyTorchEngine provides multi-node deployment support. Below are the detailed steps for deploying a `tp=16` model across two 8-GPU nodes.

## 1. Create Docker Containers (Optional)

To ensure consistency across the cluster environment, it is recommended to use Docker to set up the cluster. Create containers on each node as follows:

```bash

docker run -it \

--network host \

-v $MODEL_PATH:$CONTAINER_MODEL_PATH \

openmmlab/lmdeploy:latest

```

> \[!IMPORTANT\]

> Ensure that the model is placed in the same directory on all node containers.

## 2. Set Up the Cluster Using Ray

### 2.1 Start the Head Node

Select one node as the **head node** and run the following command in its container:

```bash

ray start --head --port=$DRIVER_PORT

```

### 2.2 Join the Cluster

On the other nodes, use the following command in their containers to join the cluster created by the head node:

```bash

ray start --address=$DRIVER_NODE_ADDR:$DRIVER_PORT

```

Run `ray status` on the head node to check the cluster.

> \[!IMPORTANT\]

> Ensure that `DRIVER_NODE_ADDR` is the address of the head node and `DRIVER_PORT` matches the port number used during the head node initialization.

## 3. Use LMDeploy Interfaces

In the head node's container, you can use all functionalities of PyTorchEngine as usual.

### 3.1 Start the Server

```bash

lmdeploy serve api_server \

$CONTAINER_MODEL_PATH \

--backend pytorch \

--tp 16

```

### 3.2 Use the Pipeline

```python

from lmdeploy import pipeline, PytorchEngineConfig

if __name__ == '__main__':
    model_path = '/path/to/model'
    backend_config = PytorchEngineConfig(tp=16)
    with pipeline(model_path, backend_config=backend_config) as pipe:
        outputs = pipe('Hakuna Matata')

```

> \[!NOTE\]

> PyTorchEngine will automatically choose the appropriate launch method (single-node/multi-node) based on the `tp` parameter and the number of devices available in the cluster. If you want to enforce the use of the Ray cluster, you can configure `distributed_executor_backend='ray'` in `PytorchEngineConfig` or use the environment variable `LMDEPLOY_EXECUTOR_BACKEND=ray`.

______________________________________________________________________

By following the steps above, you can successfully deploy PyTorchEngine in a multi-node environment and leverage the Ray cluster for distributed computing.

> \[!WARNING\]

> To achieve better performance, we recommend configuring a high-quality network environment (such as [InfiniBand](https://en.wikipedia.org/wiki/InfiniBand)) to improve engine efficiency.

---

# PyTorchEngine Multithread

We have removed `thread_safe` mode from PytorchEngine since [PR2907](https://github.com/InternLM/lmdeploy/pull/2907). We encourage users to achieve high concurrency by using **service API** or **coroutines** whenever possible, for example:

```python

import asyncio

from lmdeploy import pipeline, PytorchEngineConfig

event_loop = asyncio.new_event_loop()

asyncio.set_event_loop(event_loop)

model_path = 'Llama-3.2-1B-Instruct'

pipe = pipeline(model_path, backend_config=PytorchEngineConfig())

async def _gather_output():
    tasks = [
        pipe.async_batch_infer('Hakuna Matata'),
        pipe.async_batch_infer('giraffes are heartless creatures'),
    ]
    return await asyncio.gather(*tasks)

output = asyncio.run(_gather_output())

print(output[0].text)

print(output[1].text)

```

If you do need multithreading, it is easy to wrap it as shown below:

```python

import threading

from queue import Queue

import asyncio

from lmdeploy import pipeline, PytorchEngineConfig

model_path = 'Llama-3.2-1B-Instruct'

async def _batch_infer(inque: Queue, outque: Queue, pipe):
    while True:
        if inque.empty():
            # yield control so other coroutines can make progress
            await asyncio.sleep(0)
            continue

        input = inque.get_nowait()
        output = await pipe.async_batch_infer(input)
        outque.put(output)


def server(inques, outques):
    event_loop = asyncio.new_event_loop()
    asyncio.set_event_loop(event_loop)
    pipe = pipeline(model_path, backend_config=PytorchEngineConfig())
    for inque, outque in zip(inques, outques):
        event_loop.create_task(_batch_infer(inque, outque, pipe))
    event_loop.run_forever()


def client(inque, outque, message):
    inque.put(message)
    print(outque.get().text)

inques = [Queue(), Queue()]

outques = [Queue(), Queue()]

t_server = threading.Thread(target=server, args=(inques, outques))

t_client0 = threading.Thread(target=client, args=(inques[0], outques[0], 'Hakuna Matata'))

t_client1 = threading.Thread(target=client, args=(inques[1], outques[1], 'giraffes are heartless creatures'))

t_server.start()

t_client0.start()

t_client1.start()

t_client0.join()

t_client1.join()

```

> \[!WARNING\]

> This is NOT recommended, as multithreading introduces additional overhead, leading to unstable inference performance.

---

# lmdeploy.pytorch New Model Support

lmdeploy.pytorch is designed to simplify the support for new models and the development of prototypes. Users can adapt new models according to their own needs.

## Model Support

### Configuration Loading (Optional)

lmdeploy.pytorch initializes the engine based on the model's config file. If the parameter naming of the model to be integrated differs from common models in transformers, parsing errors may occur. A custom ConfigBuilder can be added to parse the configuration.

```python

# lmdeploy/pytorch/configurations/gemma.py

from lmdeploy.pytorch.config import ModelConfig

from .builder import AutoModelConfigBuilder

class GemmaModelConfigBuilder(AutoModelConfigBuilder):

    @classmethod
    def condition(cls, hf_config):
        # Check if hf_config is suitable for this builder
        return hf_config.model_type in ['gemma', 'gemma2']

    @classmethod
    def build(cls, hf_config, model_path: str = None):
        # Use the hf_config loaded by transformers
        # Construct the ModelConfig for the pytorch engine
        return ModelConfig(hidden_size=hf_config.hidden_size,
                           num_layers=hf_config.num_hidden_layers,
                           num_attention_heads=hf_config.num_attention_heads,
                           num_key_value_heads=hf_config.num_key_value_heads,
                           bos_token_id=hf_config.bos_token_id,
                           eos_token_id=hf_config.eos_token_id,
                           head_dim=hf_config.head_dim,
                           vocab_size=hf_config.vocab_size)

```

The `lmdeploy.pytorch.check_env.check_model` function can be used to verify if the configuration can be parsed correctly.

### Implementing the Model

After ensuring that the configuration can be parsed correctly, you can start implementing the model logic. Taking the implementation of llama as an example, we need to create the model using the configuration file from transformers.

```python

class LlamaForCausalLM(nn.Module):

    # Constructor, builds the model with the given config
    # ctx_mgr is the context manager, which can be used to pass engine configurations or additional parameters
    def __init__(self,
                 config: LlamaConfig,
                 ctx_mgr: StepContextManager,
                 dtype: torch.dtype = None,
                 device: torch.device = None):
        super().__init__()
        self.config = config
        self.ctx_mgr = ctx_mgr
        # build LLamaModel
        self.model = LlamaModel(config, dtype=dtype, device=device)
        # build lm_head
        self.lm_head = build_rowwise_linear(config.hidden_size,
                                            config.vocab_size,
                                            bias=False,
                                            dtype=dtype,
                                            device=device)

    # Model inference function
    # It is recommended to use the same parameters as below
    def forward(
        self,
        input_ids: torch.Tensor,
        position_ids: torch.Tensor,
        past_key_values: List[List[torch.Tensor]],
        attn_metadata: Any = None,
        inputs_embeds: torch.Tensor = None,
        **kwargs,
    ):
        hidden_states = self.model(
            input_ids=input_ids,
            position_ids=position_ids,
            past_key_values=past_key_values,
            attn_metadata=attn_metadata,
            inputs_embeds=inputs_embeds,
        )

        logits = self.lm_head(hidden_states)
        logits = logits.float()
        return logits

```

In addition to these, the following content needs to be added:

```python

class LlamaForCausalLM(nn.Module):

    ...

    # Indicates whether the model supports cudagraph
    # Can be a callable object, receiving forward inputs
    # Dynamically determines if cudagraph is supported
    support_cuda_graph = True

    # Builds model inputs
    # Returns a dictionary, the keys of which must be inputs to forward
    def prepare_inputs_for_generation(
        self,
        past_key_values: List[List[torch.Tensor]],
        inputs_embeds: Optional[torch.Tensor] = None,
        context: StepContext = None,
    ):
        ...

    # Loads weights
    # The model's inputs are key-value pairs of the state dict
    def load_weights(self, weights: Iterable[Tuple[str, torch.Tensor]]):
        ...

```

We have encapsulated many fused operators to simplify the model construction. These operators better support various functions such as tensor parallelism and quantization. We encourage developers to use these ops as much as possible.

```python

# Using predefined build_merged_colwise_linear, SiluAndMul, build_rowwise_linear
# Helps us build the model faster and without worrying about tensor parallelism, quantization, etc.
class LlamaMLP(nn.Module):

    def __init__(self,
                 config: LlamaConfig,
                 dtype: torch.dtype = None,
                 device: torch.device = None):
        super().__init__()
        quantization_config = getattr(config, 'quantization_config', None)
        # gate up
        self.gate_up_proj = build_merged_colwise_linear(
            config.hidden_size,
            [config.intermediate_size, config.intermediate_size],
            bias=config.mlp_bias,
            dtype=dtype,
            device=device,
            quant_config=quantization_config,
            is_tp=True,
        )

        # silu and mul
        self.act_fn = SiluAndMul(inplace=True)

        # down
        self.down_proj = build_rowwise_linear(config.intermediate_size,
                                              config.hidden_size,
                                              bias=config.mlp_bias,
                                              quant_config=quantization_config,
                                              dtype=dtype,
                                              device=device,
                                              is_tp=True)

    def forward(self, x):
        """forward."""
        gate_up = self.gate_up_proj(x)
        act = self.act_fn(gate_up)
        return self.down_proj(act)

```

### Model Registration

To ensure that the developed model implementation can be used normally, we also need to register the model in `lmdeploy/pytorch/models/module_map.py`:

```python

MODULE_MAP.update({

'LlamaForCausalLM':

f'{LMDEPLOY_PYTORCH_MODEL_PATH}.llama.LlamaForCausalLM',

})

```

If you do not wish to modify the model source code, you can also pass a custom module map from the outside, making it easier to integrate into other projects.

```python

from lmdeploy import PytorchEngineConfig, pipeline

backend_config = PytorchEngineConfig(custom_module_map='/path/to/custom/module_map.py')

generator = pipeline(model_path, backend_config=backend_config)

```
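The values in `MODULE_MAP` are dotted paths of the form `package.module.ClassName`. As a rough illustration of how such a string can be turned into a class, the sketch below resolves a dotted path with `importlib`. The helper `resolve_class` and the demo map entry are hypothetical; this is not LMDeploy's loader.

```python
import importlib
from typing import Dict


def resolve_class(qualified_name: str) -> type:
    """Import `package.module.ClassName` and return the class object."""
    module_path, _, class_name = qualified_name.rpartition('.')
    if not module_path:
        raise ValueError(f'expected a dotted path, got {qualified_name!r}')
    module = importlib.import_module(module_path)
    return getattr(module, class_name)


# Hypothetical map: architecture name -> dotted path (a stdlib class is the stand-in)
demo_module_map: Dict[str, str] = {'DemoArch': 'collections.OrderedDict'}

cls = resolve_class(demo_module_map['DemoArch'])
print(cls.__name__)
```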

---

# PyTorchEngine Profiling

We provide multiple profilers for analyzing the performance of PyTorchEngine.

## PyTorch Profiler

We have integrated the PyTorch Profiler. You can enable it by setting environment variables when launching the pipeline or API server:

```bash

# enable profile cpu

export LMDEPLOY_PROFILE_CPU=1

# enable profile cuda

export LMDEPLOY_PROFILE_CUDA=1

# profile would start after 3 seconds

export LMDEPLOY_PROFILE_DELAY=3

# profile 10 seconds

export LMDEPLOY_PROFILE_DURATION=10

# prefix path to save profile files

export LMDEPLOY_PROFILE_OUT_PREFIX="/path/to/save/profile_"

```

After the program exits, the profiling data will be saved to the path specified by `LMDEPLOY_PROFILE_OUT_PREFIX` for performance analysis.
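When the pipeline is launched from a Python script rather than a shell, the same variables can be set through `os.environ`. The sketch below assumes they must be in place before the engine is created, since the text above says they are read at launch. The helper name `enable_torch_profiler` is hypothetical.

```python
import os
from typing import Dict


def enable_torch_profiler(out_prefix: str,
                          delay_s: int = 3,
                          duration_s: int = 10,
                          cpu: bool = True,
                          cuda: bool = True) -> Dict[str, str]:
    """Set the LMDEPLOY_PROFILE_* variables described above and return them."""
    env = {
        'LMDEPLOY_PROFILE_DELAY': str(delay_s),
        'LMDEPLOY_PROFILE_DURATION': str(duration_s),
        'LMDEPLOY_PROFILE_OUT_PREFIX': out_prefix,
    }
    # Only the requested switches are written; how a value of 0 is
    # interpreted is not documented above, so it is never set here.
    if cpu:
        env['LMDEPLOY_PROFILE_CPU'] = '1'
    if cuda:
        env['LMDEPLOY_PROFILE_CUDA'] = '1'
    os.environ.update(env)
    return env


# Call this before constructing the pipeline or starting the API server.
settings = enable_torch_profiler('/path/to/save/profile_')
print(settings)
```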

## Nsight System

We also support using Nsight System to profile NVIDIA devices.

### Single GPU

For single-GPU scenarios, simply use `nsys profile`:

```bash

nsys profile python your_script.py

```

### Multi-GPU

When using multi-GPU solutions like DP/TP/EP, set the following environment variables:

```bash

# enable nsight system

export LMDEPLOY_RAY_NSYS_ENABLE=1

# prefix path to save profile files

export LMDEPLOY_RAY_NSYS_OUT_PREFIX="/path/to/save/profile_"

```

Then launch the script or API server as usual (Do **NOT** use nsys profile here).

The profiling results will be saved under `LMDEPLOY_RAY_NSYS_OUT_PREFIX`. If `LMDEPLOY_RAY_NSYS_OUT_PREFIX` is not configured, you can find the results in `/tmp/ray/session_xxx/nsight`.

## Ray timeline

We use `ray` to support multi-device deployment. You can export the Ray timeline by setting the environment variables below.

```bash

export LMDEPLOY_RAY_TIMELINE_ENABLE=1

export LMDEPLOY_RAY_TIMELINE_OUT_PATH="/path/to/save/timeline.json"

```
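For a quick textual summary of the exported file, the sketch below totals the time spent per event name. It assumes the file is a JSON list of Chrome-trace-style events carrying `name` and `dur` (microseconds) fields, which is the format Ray's timeline export uses as far as we know; adjust the keys if your file differs.

```python
import json
from collections import defaultdict
from typing import Dict, Iterable


def total_duration_by_name(events: Iterable[dict]) -> Dict[str, float]:
    """Sum the `dur` field (microseconds) of trace events, grouped by `name`."""
    totals: Dict[str, float] = defaultdict(float)
    for event in events:
        if 'dur' in event:  # skip metadata events without a duration
            totals[event.get('name', '<unnamed>')] += event['dur']
    return dict(totals)


def summarize_timeline(path: str) -> Dict[str, float]:
    """Load a timeline JSON file and return per-name totals."""
    with open(path) as f:
        return total_duration_by_name(json.load(f))


# Synthetic events standing in for a real timeline file
sample = [
    {'name': 'forward', 'ph': 'X', 'ts': 0, 'dur': 120.0},
    {'name': 'forward', 'ph': 'X', 'ts': 200, 'dur': 80.0},
    {'name': 'sample', 'ph': 'X', 'ts': 300, 'dur': 10.0},
]
print(total_duration_by_name(sample))
```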

---

# Speculative Decoding

Speculative decoding is an optimization technique that introduces a lightweight draft model to propose multiple next tokens. The main model then verifies them in a single forward pass and keeps the longest matching prefix. Compared with standard auto-regressive decoding, this method lets the system generate multiple tokens at once.

> \[!NOTE\]

> This is an experimental feature in lmdeploy.
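The draft-then-verify loop can be sketched with toy "models" that map a token sequence to the next token. This is a minimal greedy-decoding illustration under stated assumptions, not LMDeploy's implementation: the function `speculative_step` and both toy models are hypothetical, and a real engine verifies all proposed positions in one forward pass rather than in a Python loop.

```python
from typing import Callable, List, Sequence

NextToken = Callable[[Sequence[int]], int]


def speculative_step(prefix: List[int], draft_next: NextToken,
                     target_next: NextToken,
                     num_speculative_tokens: int) -> List[int]:
    """Return the tokens emitted by one draft-then-verify step (greedy case)."""
    # 1. The draft model proposes tokens auto-regressively.
    draft: List[int] = []
    for _ in range(num_speculative_tokens):
        draft.append(draft_next(prefix + draft))

    # 2. The target model checks each proposal in order.
    accepted: List[int] = []
    for token in draft:
        expected = target_next(prefix + accepted)
        if token != expected:
            accepted.append(expected)  # replace the first mismatch and stop
            return accepted
        accepted.append(token)

    # 3. Every proposal matched, so the target contributes one extra token.
    accepted.append(target_next(prefix + accepted))
    return accepted


# Toy models: the target counts upward; the draft agrees except after token 2.
target = lambda seq: seq[-1] + 1
draft = lambda seq: 0 if seq[-1] == 2 else seq[-1] + 1

print(speculative_step([0], draft, target, num_speculative_tokens=3))
```

In this toy run two draft tokens are accepted and the third is corrected by the target, so one step still yields three tokens instead of one.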

## Examples

Here are some examples.

### Eagle 3

#### Prepare

Install [FlashAttention-3](https://github.com/Dao-AILab/flash-attention?tab=readme-ov-file#flashattention-3-beta-release)

```shell

git clone --depth=1 https://github.com/Dao-AILab/flash-attention.git

cd flash-attention/hopper

python setup.py install

```

#### pipeline

```python

from lmdeploy import PytorchEngineConfig, pipeline
from lmdeploy.messages import SpeculativeConfig

if __name__ == '__main__':
    model_path = 'meta-llama/Llama-3.1-8B-Instruct'
    # draft model and number of tokens proposed per step
    spec_cfg = SpeculativeConfig(
        method='eagle3',
        num_speculative_tokens=3,
        model='yuhuili/EAGLE3-LLaMA3.1-Instruct-8B',
    )
    pipe = pipeline(model_path,
                    backend_config=PytorchEngineConfig(max_batch_size=128),
                    speculative_config=spec_cfg)
    response = pipe(['Hi, pls intro yourself', 'Shanghai is'])
    print(response)

```

#### serving

```shell

lmdeploy serve api_server \
    meta-llama/Llama-3.1-8B-Instruct \
    --backend pytorch \
    --server-port 24545 \
    --speculative-draft-model yuhuili/EAGLE3-LLaMA3.1-Instruct-8B \
    --speculative-algorithm eagle3 \
    --speculative-num-draft-tokens 3 \
    --max-batch-size 128 \
    --enable-metrics

```

### Deepseek MTP

#### Prepare

Install [FlashMLA](https://github.com/deepseek-ai/FlashMLA?tab=readme-ov-file#installation)

```shell

git clone https://github.com/deepseek-ai/FlashMLA.git flash-mla

cd flash-mla

git submodule update --init --recursive

pip install -v .

```

#### pipeline

```python

from lmdeploy import PytorchEngineConfig, pipeline
from lmdeploy.messages import SpeculativeConfig

if __name__ == '__main__':
    model_path = 'deepseek-ai/DeepSeek-V3'
    # MTP needs no separate draft model, so `model` is not set
    spec_cfg = SpeculativeConfig(
        method='deepseek_mtp',
        num_speculative_tokens=3,
    )
    pipe = pipeline(model_path,
                    backend_config=PytorchEngineConfig(tp=16, max_batch_size=128),
                    speculative_config=spec_cfg)
    response = pipe(['Hi, pls intro yourself', 'Shanghai is'])
    print(response)

```

#### serving

```shell

lmdeploy serve api_server \
    deepseek-ai/DeepSeek-V3 \
    --backend pytorch \
    --server-port 24545 \
    --tp 16 \
    --speculative-algorithm deepseek_mtp \
    --speculative-num-draft-tokens 3 \
    --max-batch-size 128 \
    --enable-metrics

```

---

# Structured output

Structured output, also known as guided decoding, forces the model to generate text that exactly matches a user-supplied JSON schema, grammar, or regex.

Both the PyTorch and Turbomind backends now support structured (schema-constrained) generation.

Below are examples for the pipeline API and the API server.

## pipeline

```python

from lmdeploy import pipeline

from lmdeploy.messages import GenerationConfig, PytorchEngineConfig

model = 'internlm/internlm2-chat-1_8b'

guide = {

'type': 'object',

'properties': {

'name': {

'type': 'string'

},

'skills': {

'type': 'array',

'items': {

'type': 'string',

'maxLength': 10

},

'minItems': 3

},

'work history': {

'type': 'array',

'items': {

'type': 'object',

'properties': {

'company': {

'type': 'string'

},

'duration': {

'type': 'string'

}

},

'required': ['company']

}

}

},

'required': ['name', 'skills', 'work history']

}

pipe = pipeline(model, backend_config=PytorchEngineConfig(), log_level='INFO')

gen_config = GenerationConfig(

response_format=dict(type='json_schema', json_schema=dict(name='test', schema=guide)))

response = pipe(['Make a self introduction please.'], gen_config=gen_config)

print(response)

```
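Because generation is constrained to the schema, the returned text should parse as JSON. The sketch below shows one way to sanity-check it with the standard library. It only checks top-level `required` keys and array `minItems`; a full validator such as the third-party `jsonschema` package would be the more thorough choice. The helper `check_top_level` is hypothetical and the `text` string is a hand-written stand-in for the model's response text.

```python
import json
from typing import Any, Dict, List


def check_top_level(text: str, schema: Dict[str, Any]) -> List[str]:
    """Return a list of problems found; an empty list means the checks passed."""
    obj = json.loads(text)
    problems = []
    for key in schema.get('required', []):
        if key not in obj:
            problems.append(f'missing required key: {key}')
    for key, spec in schema.get('properties', {}).items():
        min_items = spec.get('minItems')
        if key in obj and min_items is not None and len(obj[key]) < min_items:
            problems.append(f'{key} has fewer than {min_items} items')
    return problems


# Trimmed copy of the schema above and a hand-written example response
schema = {
    'type': 'object',
    'properties': {
        'name': {'type': 'string'},
        'skills': {'type': 'array', 'items': {'type': 'string'}, 'minItems': 3},
    },
    'required': ['name', 'skills'],
}
text = '{"name": "Alice", "skills": ["python", "cuda", "triton"]}'
print(check_top_level(text, schema))
```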

## api_server

Firstly, start the api_server service for the InternLM2 model.

```shell

lmdeploy serve api_server internlm/internlm2-chat-1_8b --backend pytorch

```

The client can be tested with OpenAI's Python package. The output is a response in JSON format.

```python

from openai import OpenAI

guide = {

'type': 'object',

'properties': {

'name': {

'type': 'string'

},

'skills': {

'type': 'array',

'items': {

'type': 'string',

'maxLength': 10

},

'minItems': 3

},

'work history': {

'type': 'array',

'items': {

'type': 'object',

'properties': {

'company': {

'type': 'string'

},

'duration': {

'type': 'string'

}

},

'required': ['company']

}

}

},

'required': ['name', 'skills', 'work history']

}

response_format=dict(type='json_schema', json_schema=dict(name='test',schema=guide))

messages = [{'role': 'user', 'content': 'Make a self-introduction please.'}]

client = OpenAI(api_key='YOUR_API_KEY', base_url='http://0.0.0.0:23333/v1')

model_name = client.models.list().data[0].id

response = client.chat.completions.create(

model=model_name,

messages=messages,

temperature=0.8,

response_format=response_format,

top_p=0.8)

print(response)

```

---

# Update Weights

LMDeploy supports updating model weights online for scenarios such as RL training. The steps are as follows.

## Step 1: Launch server

For the PyTorch backend, you must add `--distributed-executor-backend ray`.

```shell

lmdeploy serve api_server internlm/internlm2_5-7b-chat --server-port 23333 --distributed-executor-backend ray # for pytorch backend

```

## Step 2: Offload weights & kv cache

Before updating the model weights, the server should offload the weights and the kv cache.

```python

from lmdeploy.utils import serialize_state_dict

import requests

BASE_URL = 'http://0.0.0.0:23333'

api_key = 'sk-xxx'

headers = {

"Content-Type": "application/json",

"Authorization": f"Bearer {api_key}",

}

# offloads weights and kv cache with level=2

response = requests.post(f"{BASE_URL}/sleep", headers=headers, params=dict(tags=['weights', 'kv_cache'], level=2))

assert response.status_code == 200, response.status_code

# wake up weights, the server is ready for update weights

response = requests.post(f"{BASE_URL}/wakeup", headers=headers, params=dict(tags=['weights']))

assert response.status_code == 200, response.status_code

```

## Step 3: Update weights

Split the model weights into multiple segments and send them one by one through the `update_weights` endpoint.

```python

from typing import Dict, List

import torch

# each segment maps parameter names to tensors; how to split is up to the caller
segmented_state_dict: List[Dict[str, torch.Tensor]] = ...
num_segment = len(segmented_state_dict)
for seg_idx in range(num_segment):
    serialized_data = serialize_state_dict(segmented_state_dict[seg_idx])
    # `finished` is True only for the last segment
    data = dict(serialized_named_tensors=serialized_data, finished=seg_idx == num_segment-1)
    response = requests.post(f"{BASE_URL}/update_weights", headers=headers, json=data)
    assert response.status_code == 200, f"response.status_code = {response.status_code}"

```
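How the weights are split is left open above. One simple option is to pack parameters greedily until a size budget is reached, as sketched below. The helper `segment_by_size` and the `max_bytes` budget are hypothetical and not part of LMDeploy. The demo uses byte strings so that it runs without torch; for tensors, `size_of` could be `lambda t: t.numel() * t.element_size()`.

```python
from typing import Callable, Dict, Iterable, List, Tuple, TypeVar

T = TypeVar('T')


def segment_by_size(named_items: Iterable[Tuple[str, T]],
                    size_of: Callable[[T], int],
                    max_bytes: int) -> List[Dict[str, T]]:
    """Greedily pack (name, item) pairs into segments of at most `max_bytes`.

    An item larger than `max_bytes` is placed in a segment of its own.
    """
    segments: List[Dict[str, T]] = []
    current: Dict[str, T] = {}
    current_bytes = 0
    for name, item in named_items:
        nbytes = size_of(item)
        if current and current_bytes + nbytes > max_bytes:
            segments.append(current)
            current, current_bytes = {}, 0
        current[name] = item
        current_bytes += nbytes
    if current:
        segments.append(current)
    return segments


# Stand-in "weights": byte strings sized with len()
weights = {'a': b'x' * 4, 'b': b'x' * 4, 'c': b'x' * 6, 'd': b'x' * 2}
segments = segment_by_size(weights.items(), len, max_bytes=8)
print([list(seg) for seg in segments])
```

Insertion order is preserved, so the final segment is always the one to send with `finished=True`.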

**Note**: For pytorch backend, lmdeploy also supports flattened bucket tensors:

```python

from lmdeploy.utils import serialize_state_dict, FlattenedTensorBucket, FlattenedTensorMetadata

segmented_state_dict: List[Dict[str, torch.Tensor]] = ...
num_segment = len(segmented_state_dict)
for seg_idx in range(num_segment):
    named_tensors = list(segmented_state_dict[seg_idx].items())
    # pack all tensors of this segment into one flat buffer plus metadata
    bucket = FlattenedTensorBucket(named_tensors=named_tensors)
    metadata = bucket.get_metadata()
    flattened_tensor_data = dict(flattened_tensor=bucket.get_flattened_tensor(), metadata=metadata)
    serialized_data = serialize_state_dict(flattened_tensor_data)
    data = dict(serialized_named_tensors=serialized_data, finished=seg_idx == num_segment-1, load_format='flattened_bucket')
    response = requests.post(f"{BASE_URL}/update_weights", headers=headers, json=data)
    assert response.status_code == 200, f"response.status_code = {response.status_code}"

```

## Step 4: Wakeup server

After the model weights are updated, the server should load the kv cache back and resume serving with the new weights.

```python

response = requests.post(f"{BASE_URL}/wakeup", headers=headers, params=dict(tags=['kv_cache']))

assert response.status_code == 200, response.status_code

```

---

# TurboMind Benchmark on A100

All the following results are tested on A100-80G(x8) CUDA 11.8.

The tested lmdeploy version is `v0.2.0`

## Request Throughput Benchmark

- `batch`: the max batch size during inference

- `tp`: the number of GPU cards for tensor parallelism

- `num_prompts`: the number of prompts, i.e. the number of requests

- `RPS`: **R**equests **P**er **S**econd

- `FTL`: **F**irst **T**oken **L**atency

### FP16

| model | batch | tp | num_prompts | RPS | FTL(ave)(s) | FTL(min)(s) | FTL(max)(s) | 50%(s) | 75%(s) | 95%(s) | 99%(s) | throughput(out tok/s) | throughput(total tok/s) |
| ------------ | ----- | --- | ----------- | ------ | ----------- | ----------- | ----------- | ------ | ------ | ------ | ------ | --------------------- | ----------------------- |
| llama2-7b | 256 | 1 | 3000 | 14.556 | 0.526 | 0.092 | 4.652 | 0.066 | 0.101 | 0.155 | 0.220 | 3387.419 | 6981.159 |
| llama2-13b | 128 | 1 | 3000 | 7.950 | 0.352 | 0.075 | 4.193 | 0.051 | 0.067 | 0.138 | 0.202 | 1850.145 | 3812.978 |
| internlm-20b | 128 | 2 | 3000 | 10.291 | 0.287 | 0.073 | 3.845 | 0.053 | 0.072 | 0.113 | 0.161 | 2053.266 | 4345.057 |
| llama2-70b | 256 | 4 | 3000 | 7.231 | 1.075 | 0.139 | 14.524 | 0.102 | 0.153 | 0.292 | 0.482 | 1682.738 | 3467.969 |
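The columns are related by simple arithmetic, which is handy when sanity-checking a run. As a sketch, assuming RPS is `num_prompts` divided by the elapsed wall-clock time, that both throughput columns are measured over the same interval, and that "total" means input plus output tokens, the llama2-7b row implies the following:

```python
num_prompts = 3000
rps = 14.556                 # requests per second (llama2-7b row)
out_tok_per_s = 3387.419     # output tokens per second
total_tok_per_s = 6981.159   # assumed to be input + output tokens per second

elapsed_s = num_prompts / rps                              # wall-clock duration of the run
out_tok_per_req = out_tok_per_s / rps                      # mean generated tokens per request
in_tok_per_req = (total_tok_per_s - out_tok_per_s) / rps   # mean prompt tokens per request

print(f'elapsed: {elapsed_s:.1f} s')
print(f'output tokens/request: {out_tok_per_req:.1f}')
print(f'input tokens/request: {in_tok_per_req:.1f}')
```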

## Static Inference Benchmark

- `batch`: the max batch size during inference

- `tp`: the number of GPU cards for tensor parallelism

- `prompt_tokens`: the number of input tokens

- `output_tokens`: the number of generated tokens

- `throughput`: the number of generated tokens per second

- `FTL`: **F**irst **T**oken **L**atency

### FP16 llama2-7b

| batch | tp | prompt_tokens | output_tokens | throughput(out tok/s) | mem(GB) | FTL(ave)(s) | FTL(min)(s) | FTL(max)(s) | 50%(s) | 75%(s) | 95%(s) | 99%(s) |
| ----- | --- | ------------- | ------------- | --------------------- | ------- | ----------- | ----------- | ----------- | ------ | ------ | ------ | ------ |
| 1 | 1 | 1 | 128 | 100.02 | 76.55 | 0.011 | 0.01 | 0.011 | 0.009 | 0.009 | 0.01 | 0.011 |
| 1 | 1 | 128 | 128 | 102.21 | 76.59 | 0.022 | 0.022 | 0.022 | 0.01 | 0.01 | 0.01 | 0.01 |
| 1 | 1 | 128 | 2048 | 98.92 | 76.59 | 0.022 | 0.022 | 0.022 | 0.01 | 0.01 | 0.01 | 0.01 |
| 1 | 1 | 2048 | 128 | 86.1 | 76.77 | 0.139 | 0.139 | 0.14 | 0.01 | 0.01 | 0.01 | 0.011 |
| 1 | 1 | 2048 | 2048 | 93.78 | 76.77 | 0.14 | 0.139 | 0.141 | 0.011 | 0.011 | 0.011 | 0.011 |
| 16 | 1 | 1 | 128 | 1504.72 | 76.59 | 0.021 | 0.011 | 0.031 | 0.01 | 0.011 | 0.011 | 0.013 |
| 16 | 1 | 128 | 128 | 1272.47 | 76.77 | 0.129 | 0.023 | 0.149 | 0.011 | 0.011 | 0.012 | 0.014 |
| 16 | 1 | 128 | 2048 | 1010.62 | 76.77 | 0.13 | 0.023 | 0.144 | 0.015 | 0.018 | 0.02 | 0.021 |
| 16 | 1 | 2048 | 128 | 348.87 | 78.3 | 2.897 | 0.143 | 3.576 | 0.02 | 0.021 | 0.022 | 0.025 |
| 16 | 1 | 2048 | 2048 | 601.63 | 78.3 | 2.678 | 0.142 | 3.084 | 0.025 | 0.028 | 0.03 | 0.031 |
| 32 | 1 | 1 | 128 | 2136.73 | 76.62 | 0.079 | 0.014 | 0.725 | 0.011 | 0.012 | 0.013 | 0.021 |
| 32 | 1 | 128 | 128 | 2125.47 | 76.99 | 0.214 | 0.022 | 0.359 | 0.012 | 0.013 | 0.014 | 0.035 |
| 32 | 1 | 128 | 2048 | 1462.12 | 76.99 | 0.2 | 0.026 | 0.269 | 0.021 | 0.026 | 0.031 | 0.033 |
| 32 | 1 | 2048 | 128 | 450.43 | 78.3 | 4.288 | 0.143 | 5.267 | 0.031 | 0.032 | 0.034 | 0.161 |
| 32 | 1 | 2048 | 2048 | 733.34 | 78.34 | 4.118 | 0.19 | 5.429 | 0.04 | 0.045 | 0.05 | 0.053 |
| 64 | 1 | 1 | 128 | 4154.81 | 76.71 | 0.042 | 0.013 | 0.21 | 0.012 | 0.018 | 0.028 | 0.041 |
| 64 | 1 | 128 | 128 | 3024.07 | 77.43 | 0.44 | 0.026 | 1.061 | 0.014 | 0.018 | 0.026 | 0.158 |
| 64 | 1 | 128 | 2048 | 1852.06 | 77.96 | 0.535 | 0.027 | 1.231 | 0.03 | 0.041 | 0.048 | 0.053 |
| 64 | 1 | 2048 | 128 | 493.46 | 78.4 | 6.59 | 0.142 | 16.235 | 0.046 | 0.049 | 0.055 | 0.767 |
| 64 | 1 | 2048 | 2048 | 755.65 | 78.4 | 39.105 | 0.142 | 116.285 | 0.047 | 0.049 | 0.051 | 0.207 |

---

# Benchmark

Please install the lmdeploy precompiled package and download the script and the test dataset:

```shell

pip install lmdeploy

# clone the repo to get the benchmark script

git clone --depth=1 https://github.com/InternLM/lmdeploy

cd lmdeploy

# switch to the tag corresponding to the installed version:

git fetch --tags

# Check the installed lmdeploy version:

pip show lmdeploy | grep Version

# Then, check out the corresponding tag (replace <version_tag> with the tag for that version):

git checkout <version_tag>

# download the test dataset

wget https://huggingface.co/datasets/anon8231489123/ShareGPT_Vicuna_unfiltered/resolve/main/ShareGPT_V3_unfiltered_cleaned_split.json

```

## Benchmark offline pipeline API

```shell

python3 benchmark/profile_pipeline_api.py ShareGPT_V3_unfiltered_cleaned_split.json meta-llama/Meta-Llama-3-8B-Instruct

```

For a comprehensive list of available arguments, please execute `python3 benchmark/profile_pipeline_api.py -h`

## Benchmark offline engine API

```shell

python3 benchmark/profile_throughput.py ShareGPT_V3_unfiltered_cleaned_split.json meta-llama/Meta-Llama-3-8B-Instruct

```

Detailed argument specification can be retrieved by running `python3 benchmark/profile_throughput.py -h`

## Benchmark online serving

Launch the server first (refer to [this guide](../llm/api_server.md) for instructions), then run the following command:

```shell

python3 benchmark/profile_restful_api.py --backend lmdeploy --num-prompts 5000 --dataset-path ShareGPT_V3_unfiltered_cleaned_split.json

```

For detailed argument specification of `profile_restful_api.py`, please run the help command `python3 benchmark/profile_restful_api.py -h`.

---

# Model Evaluation Guide

This document describes how to evaluate a model's capabilities on academic datasets using OpenCompass and LMDeploy. The complete evaluation process consists of two main stages: inference stage and evaluation stage.

During the inference stage, the target model is first deployed as an inference service using LMDeploy. OpenCompass then sends dataset content as requests to this service and collects the generated responses.

In the evaluation stage, the OpenCompass evaluation model `opencompass/CompassVerifier-32B` is deployed as a service via LMDeploy. OpenCompass subsequently submits the inference results to this service to obtain final evaluation scores.

If sufficient computational resources are available, please refer to the [End-to-End Evaluation](#end-to-end-evaluation) section for complete workflow execution. Otherwise, we recommend following the [Step-by-Step Evaluation](#step-by-step-evaluation) section to execute both stages sequentially.

## Environment Setup

```shell

pip install lmdeploy

pip install "opencompass[full]"

# Download the lmdeploy source code, which will be used in subsequent steps to access eval script and configuration

git clone --depth=1 https://github.com/InternLM/lmdeploy.git

```

It is recommended to install LMDeploy and OpenCompass in separate Python virtual environments to avoid potential dependency conflicts.

## End-to-End Evaluation

1. **Deploy Target Model**

```shell

lmdeploy serve api_server <model_path> --server-port 10000 <--other-options>

```

2. **Deploy Evaluation Model (Judger)**

```shell

lmdeploy serve api_server opencompass/CompassVerifier-32B --server-port 20000 --tp 2

```

3. **Generate Evaluation Configuration and Execute**

```shell

cd {the/root/path/of/lmdeploy/repo}

## Specify the dataset path. OC will download the datasets automatically if they are

## not found in the path

export HF_DATASETS_CACHE=/nvme4/huggingface_hub/datasets

export COMPASS_DATA_CACHE=/nvme1/shared/opencompass/.cache

python eval/eval.py {task_name} \
    --mode all \
    --api-server http://{api-server-ip}:10000 \
    --judger-server http://{judger-server-ip}:20000 \
    -w {oc_output_dir}

```

For detailed usage instructions about `eval.py`, such as specifying evaluation datasets, please run `python eval/eval.py --help`.

After evaluation completion, results are saved in `{oc_output_dir}/{yyyymmdd_hhmmss}`, where `{yyyymmdd_hhmmss}` represents the task timestamp.

## Step-by-Step Evaluation

### Inference Stage

This stage generates model responses for the dataset.

1. **Deploy Target Model**

```shell

lmdeploy serve api_server <model_path> --server-port 10000 <--other-options>

```

2. **Generate Inference Configuration and Execute**

```shell

cd {the/root/path/of/lmdeploy/repo}

## Specify the dataset path. OC will download the datasets automatically if they are

## not found in the path

export COMPASS_DATA_CACHE=/nvme1/shared/opencompass/.cache

export HF_DATASETS_CACHE=/nvme4/huggingface_hub/datasets

# Run inference task

python eval/eval.py {task_name} \
    --mode infer \
    --api-server http://{api-server-ip}:10000 \
    -w {oc_output_dir}

```

For detailed usage instructions about `eval.py`, such as specifying evaluation datasets, please run `python eval/eval.py --help`.

### Evaluation Stage

This stage uses the evaluation model (Judger) to assess the quality of inference results.

1. **Deploy Evaluation Model (Judger)**

```shell

lmdeploy serve api_server opencompass/CompassVerifier-32B --server-port 20000 --tp 2 --session-len 65536

```

2. **Generate Evaluation Configuration and Execute**

```shell

cd {the/root/path/of/lmdeploy/repo}

## Specify the dataset path. OC will download the datasets automatically if they are

## not found in the path

export COMPASS_DATA_CACHE=/nvme1/shared/opencompass/.cache

export HF_DATASETS_CACHE=/nvme4/huggingface_hub/datasets

# Run evaluation task

opencompass /path/to/judger_config.py -m eval -w {oc_output_dir} -r {yyyymmdd_hhmmss}

```

Important Notes:

- `task_name` must be identical to the one used in the inference stage

- The `oc_output_dir` specified with `-w` must match the directory used in the inference stage

- The `-r` parameter indicates "previous outputs & results" and should specify the timestamp directory generated during the inference stage (the subdirectory under `{oc_output_dir}`)

For detailed usage instructions about `eval.py`, such as specifying evaluation datasets, please run `python eval/eval.py --help`.
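The value passed to `-r` is the name of a timestamp directory under `{oc_output_dir}`. When scripting the two stages, the most recent run can be located as sketched below. The helper `latest_run_dir` is hypothetical, and it assumes run directories follow the `yyyymmdd_hhmmss` naming described earlier.

```python
import re
import tempfile
from pathlib import Path
from typing import Optional

TIMESTAMP_DIR = re.compile(r'^\d{8}_\d{6}$')  # yyyymmdd_hhmmss


def latest_run_dir(oc_output_dir: str) -> Optional[str]:
    """Return the newest `yyyymmdd_hhmmss` subdirectory name, or None."""
    names = [p.name for p in Path(oc_output_dir).iterdir()
             if p.is_dir() and TIMESTAMP_DIR.match(p.name)]
    # The fixed-width format sorts chronologically as plain strings.
    return max(names, default=None)


# Demonstration on a temporary directory with two fake runs
with tempfile.TemporaryDirectory() as tmp:
    for name in ('20250101_093000', '20250102_101500', 'logs'):
        (Path(tmp) / name).mkdir()
    print(latest_run_dir(tmp))
```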

---

# Multi-Modal Model Evaluation Guide

This document describes how to evaluate multi-modal models' capabilities using VLMEvalKit and LMDeploy.

## Environment Setup

```shell

pip install lmdeploy

git clone https://github.com/open-compass/VLMEvalKit.git

cd VLMEvalKit && pip install -e .

```

It is recommended to install LMDeploy and VLMEvalKit in separate Python virtual environments to avoid potential dependency conflicts.

## Evaluations

1. **Deploy Large Multi-Modality Models (LMMs)**

```shell

lmdeploy serve api_server <model_path> --server-port 23333 <--other-options>

```

2. **Config the Evaluation Settings**

Modify `VLMEvalKit/vlmeval/config.py` and add the following LMDeploy API configuration to the `api_models` dictionary.

`<task_name>` is a custom name for your evaluation task (e.g., `lmdeploy_qwen3vl-4b`). The `model` parameter should match the `<model_name>` used in the `lmdeploy serve` command.

```python
# filepath: VLMEvalKit/vlmeval/config.py
# ...existing code...
api_models = {
    # lmdeploy api
    ...,
    "<task_name>": partial(
        LMDeployAPI,
        api_base="http://0.0.0.0:23333/v1/chat/completions",
        model="<model_name>",
        retry=4,
        timeout=1200,
        temperature=0.7,  # modify if needed
        max_new_tokens=16384,  # modify if needed
    ),
    ...
}
# ...existing code...
```

3. **Start Evaluations**

```shell

cd VLMEvalKit

python run.py --data OCRBench --model <task_name> --api-nproc 16 --reuse --verbose --api 123

```

The `<task_name>` should match the one used in the above config file.

Parameter explanations:

- `--data`: Specify the dataset for evaluation (e.g., `OCRBench`).

- `--model`: Specify the model name, which must match the `<task_name>` in your `config.py`.

- `--api-nproc`: Specify the number of parallel API calls.

- `--reuse`: Reuse previous inference results to avoid re-running completed evaluations.

- `--verbose`: Enable verbose logging.

---

# FAQ

## ModuleNotFoundError

### No module named 'mmengine.config.lazy'

An outdated mmengine is probably cached on your machine. Try upgrading it to the latest version.

```shell

pip install --upgrade mmengine

```

### No module named '\_turbomind'

It may have been caused by the following reasons.

1. You haven't installed lmdeploy's precompiled package. `_turbomind` is the pybind package of c++ turbomind, which involves compilation. It is recommended that you install the precompiled one.

```shell

pip install lmdeploy[all]

```

2. If you have installed it and still encounter this issue, it is probably because you are executing turbomind-related command in the root directory of lmdeploy source code. Switching to another directory will fix it.

If you are a developer who builds locally, reinstalling the wheel after every change is slow. Instead, you can point lmdeploy at the freshly built libraries with a symbolic link.

```shell

# mkdir and build locally

mkdir bld && cd bld && bash ../generate.sh && ninja -j$(nproc)

# go to the lmdeploy subdirectory from bld and set symbolic links

cd ../lmdeploy && ln -s ../bld/lib .

# go to the lmdeploy root directory

cd ..

# use the python command such as check_env

python3 -m lmdeploy check_env

```

If you still encounter problems finding the turbomind `.so`, there may be multiple Python environments on your machine, with different Python versions used for compilation and execution. In that case, set `PYTHON_EXECUTABLE` in `lmdeploy/generate.sh` to the interpreter you actually run, for example `-DPYTHON_EXECUTABLE=/usr/local/bin/python3`, and recompile.

## Libs

### libnccl.so.2 not found

Make sure you have installed lmdeploy (>=v0.0.5) through `pip install lmdeploy[all]`.

If the issue still exists after lmdeploy installation, add the path of `libnccl.so.2` to environment variable LD_LIBRARY_PATH.

```shell

# Get the location of nvidia-nccl-cu11 package

pip show nvidia-nccl-cu11|grep Location

# insert the path of "libnccl.so.2" to LD_LIBRARY_PATH

export LD_LIBRARY_PATH={Location}/nvidia/nccl/lib:$LD_LIBRARY_PATH

```

### symbol cudaFreeAsync version libcudart.so.11.0 not defined in file libcudart.so.11.0 with link time reference

This is probably caused by an outdated CUDA toolkit. The LMDeploy runtime requires CUDA 11.2 or later.

## Inference

### RuntimeError: \[TM\]\[ERROR\] CUDA runtime error: out of memory /workspace/lmdeploy/src/turbomind/utils/allocator.h

This is usually due to a disproportionately large memory ratio for the k/v cache, which is dictated by `TurbomindEngineConfig.cache_max_entry_count`.

The meaning of this parameter varies slightly between lmdeploy versions. For specifics, please refer to the [detailed notes](https://github.com/InternLM/lmdeploy/blob/52419bd5b6fb419a5e3aaf3c3b4dea874b17e094/lmdeploy/messages.py#L107) in the source code.

If you encounter this issue while using the pipeline interface, please reduce the `cache_max_entry_count` in `TurbomindEngineConfig` like following:

```python

from lmdeploy import pipeline, TurbomindEngineConfig

backend_config = TurbomindEngineConfig(cache_max_entry_count=0.2)

pipe = pipeline('internlm/internlm2_5-7b-chat',

backend_config=backend_config)

response = pipe(['Hi, pls intro yourself', 'Shanghai is'])

print(response)

```

If OOM occurs when you run CLI tools, please pass `--cache-max-entry-count` to decrease k/v cache memory ratio. For example:

```shell

# chat command

lmdeploy chat internlm/internlm2_5-7b-chat --cache-max-entry-count 0.2

# server command

lmdeploy serve api_server internlm/internlm2_5-7b-chat --cache-max-entry-count 0.2

```
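To get a feel for what a ratio such as `0.2` means, the sketch below estimates the resulting k/v cache size. It assumes the ratio applies to the GPU memory left free after the weights are loaded, which is our understanding of recent versions; as noted above, the semantics differ between versions, so treat the numbers as illustrative. The helper `kv_cache_budget_gib` is hypothetical.

```python
def kv_cache_budget_gib(total_gib: float, weights_gib: float, ratio: float) -> float:
    """Estimate the k/v cache size when `ratio` applies to free GPU memory.

    Assumption: free memory = total memory - model weights. Activations,
    the CUDA context and other processes are ignored in this estimate.
    """
    if not 0.0 < ratio <= 1.0:
        raise ValueError('ratio must be in (0, 1]')
    free_gib = max(total_gib - weights_gib, 0.0)
    return free_gib * ratio


# Illustrative numbers: an 80 GiB card holding about 14 GiB of weights
for ratio in (0.8, 0.2):
    print(ratio, kv_cache_budget_gib(80, 14, ratio))
```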

## Serve

### Api Server Fetch Timeout

The image URL fetch timeout for the API server can be configured via the environment variable `LMDEPLOY_FETCH_TIMEOUT`.

By default, requests may take up to 10 seconds before timing out. See [lmdeploy/vl/utils.py](https://github.com/InternLM/lmdeploy/blob/7b6876eafcb842633e0efe8baabe5906d7beeeea/lmdeploy/vl/utils.py#L31) for usage.
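The usual pattern for such a setting is an environment lookup with a fallback, sketched below. This mirrors the described behavior (a value in seconds, defaulting to 10) and is not a copy of the code in `lmdeploy/vl/utils.py`; the function name `fetch_timeout` is hypothetical.

```python
import os


def fetch_timeout(default: int = 10) -> int:
    """Read LMDEPLOY_FETCH_TIMEOUT (seconds), falling back to `default`."""
    return int(os.environ.get('LMDEPLOY_FETCH_TIMEOUT', default))


# The variable must be set in the environment of the server process.
os.environ['LMDEPLOY_FETCH_TIMEOUT'] = '30'
print(fetch_timeout())
```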

## Quantization

### RuntimeError: \[enforce fail at inline_container.cc:337\] . unexpected pos 4566829760 vs 4566829656

Please check your disk space. This error is due to insufficient disk space when saving weights, which might be encountered when quantizing the 70B model
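A quick pre-flight check can catch this before a long quantization run. The sketch below uses `shutil.disk_usage` from the standard library; the helper name and the size estimate (half a byte per parameter for 4-bit weights, plus 20% headroom) are rough assumptions for illustration only.

```python
import shutil


def has_free_space(path: str, required_bytes: int) -> bool:
    """Return True if the filesystem holding `path` has enough free space."""
    return shutil.disk_usage(path).free >= required_bytes


# Hypothetical budget: 4-bit weights of a 70B-parameter model need about
# 70e9 * 0.5 bytes, plus some headroom for temporary files.
required = int(70e9 * 0.5 * 1.2)
print(has_free_space('.', required))
```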

### ModuleNotFoundError: No module named 'flash_attn'

Quantizing `qwen` requires `flash-attn`. However, community feedback indicates that `flash-attn` can be difficult to install, so we have removed it from lmdeploy's dependencies and recommend installing it manually as needed.

---

# Get Started with Huawei Ascend

We currently support running lmdeploy on **Atlas 800T A3, Atlas 800T A2 and Atlas 300I Duo**.

The usage of lmdeploy on a Huawei Ascend device is almost the same as its usage on CUDA with PytorchEngine in lmdeploy.

Please read the original [Get Started](../get_started.md) guide before reading this tutorial.

Here is the [supported model list](../../supported_models/supported_models.md#PyTorchEngine-on-Other-Platforms).

> \[!IMPORTANT\]

> We have uploaded a docker image with KUNPENG CPU to aliyun.

> Please try to pull the image by following command:

>

> Atlas 800T A3:

>

> `docker pull crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/ascend:a3-latest`

>

> (Atlas 800T A3 currently supports only the Qwen-series with eager mode.)

>

> Atlas 800T A2:

>

> `docker pull crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/ascend:a2-latest`

>

> 300I Duo:

>

> `docker pull crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/ascend:300i-duo-latest`

>

> (Atlas 300I Duo currently works only with graph mode.)

>

> To build the environment yourself, refer to the Dockerfiles [here](../../../../docker).

## Offline batch inference

### LLM inference

Set `device_type="ascend"` in the `PytorchEngineConfig`:

```python

from lmdeploy import pipeline

from lmdeploy import PytorchEngineConfig

pipe = pipeline("internlm/internlm2_5-7b-chat",

backend_config=PytorchEngineConfig(tp=1, device_type="ascend"))

question = ["Shanghai is", "Please introduce China", "How are you?"]

response = pipe(question)

print(response)

```

### VLM inference

Set `device_type="ascend"` in the `PytorchEngineConfig`:

```python

from lmdeploy import pipeline, PytorchEngineConfig

from lmdeploy.vl import load_image

pipe = pipeline('OpenGVLab/InternVL2-2B',

backend_config=PytorchEngineConfig(tp=1, device_type='ascend'))

image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/tests/data/tiger.jpeg')

response = pipe(('describe this image', image))

print(response)

```

## Online serving

### Serve a LLM model

Add `--device ascend` in the serve command.

```bash

lmdeploy serve api_server --backend pytorch --device ascend internlm/internlm2_5-7b-chat

```

Run the following commands to launch docker container for lmdeploy LLM serving:

```bash

docker run -it --net=host crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/ascend:a2-latest \
    bash -i -c "lmdeploy serve api_server --backend pytorch --device ascend internlm/internlm2_5-7b-chat"

```

### Serve a VLM model

Add `--device ascend` in the serve command

```bash

lmdeploy serve api_server --backend pytorch --device ascend OpenGVLab/InternVL2-2B

```

Run the following commands to launch docker container for lmdeploy VLM serving:

```bash

docker run -it --net=host crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/ascend:a2-latest \
    bash -i -c "lmdeploy serve api_server --backend pytorch --device ascend OpenGVLab/InternVL2-2B"

```

## Inference with Command line Interface

Add `--device ascend` to the chat command.

```bash

lmdeploy chat internlm/internlm2_5-7b-chat --backend pytorch --device ascend

```

Run the following commands to launch lmdeploy chatting after starting container:

```bash

docker run -it crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/ascend:a2-latest \
    bash -i -c "lmdeploy chat --backend pytorch --device ascend internlm/internlm2_5-7b-chat"

```

## Quantization

### w4a16 AWQ

Run the following commands to quantize weights on Atlas 800T A2.

```bash

lmdeploy lite auto_awq $HF_MODEL --work-dir $WORK_DIR --device npu

```

Please check [supported_models](../../supported_models/supported_models.md) before using this feature.

### w8a8 SMOOTH_QUANT

Run the following commands to quantize weights on Atlas 800T A2.

```bash

lmdeploy lite smooth_quant $HF_MODEL --work-dir $WORK_DIR --device npu

```

Please check [supported_models](../../supported_models/supported_models.md) before using this feature.

### int8 KV-cache Quantization

The Ascend backend supports offline int8 KV-cache quantization in eager mode.

Please refer to this [doc](https://github.com/DeepLink-org/dlinfer/blob/main/docs/quant/ascend_kv_quant.md) for details.

## Limitations on 300I Duo

1. Only `dtype=float16` is supported.

2. Only graph mode is supported; do not add `--eager-mode`.

---

# Cambricon

The usage of lmdeploy on a Cambricon device is almost the same as its usage on CUDA with PytorchEngine in lmdeploy.

Please read the original [Get Started](../get_started.md) guide before reading this tutorial.

Here is the [supported model list](../../supported_models/supported_models.md#PyTorchEngine-on-Other-Platforms).

> \[!IMPORTANT\]

> We have uploaded a docker image to aliyun.

> Please try to pull the image by following command:

>

> `docker pull crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/camb:latest`

> \[!IMPORTANT\]

> Currently, launching multi-device inference on Cambricon accelerators requires manually starting Ray.

>

> Below is an example for a 2-devices setup:

>

> ```shell

> export MLU_VISIBLE_DEVICES=0,1

> ray start --head --resources='{"MLU": 2}'

> ```

## Offline batch inference

### LLM inference

Set `device_type="camb"` in the `PytorchEngineConfig`:

```python

from lmdeploy import pipeline

from lmdeploy import PytorchEngineConfig

pipe = pipeline("internlm/internlm2_5-7b-chat",
                backend_config=PytorchEngineConfig(tp=1, device_type="camb"))

question = ["Shanghai is", "Please introduce China", "How are you?"]

response = pipe(question)

print(response)

```

### VLM inference

Set `device_type="camb"` in the `PytorchEngineConfig`:

```python

from lmdeploy import pipeline, PytorchEngineConfig

from lmdeploy.vl import load_image

pipe = pipeline('OpenGVLab/InternVL2-2B',
                backend_config=PytorchEngineConfig(tp=1, device_type='camb'))

image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/tests/data/tiger.jpeg')

response = pipe(('describe this image', image))

print(response)

```

## Online serving

### Serve a LLM model

Add `--device camb` in the serve command.

```bash

lmdeploy serve api_server --backend pytorch --device camb internlm/internlm2_5-7b-chat

```

Run the following command to launch a Docker container for lmdeploy LLM serving:

```bash

docker run -it --net=host crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/camb:latest \

bash -i -c "lmdeploy serve api_server --backend pytorch --device camb internlm/internlm2_5-7b-chat"

```

### Serve a VLM model

Add `--device camb` in the serve command.

```bash

lmdeploy serve api_server --backend pytorch --device camb OpenGVLab/InternVL2-2B

```

Run the following command to launch a Docker container for lmdeploy VLM serving:

```bash

docker run -it --net=host crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/camb:latest \

bash -i -c "lmdeploy serve api_server --backend pytorch --device camb OpenGVLab/InternVL2-2B"

```

## Inference with Command line Interface

Add `--device camb` to the chat command.

```bash

lmdeploy chat internlm/internlm2_5-7b-chat --backend pytorch --device camb

```

Run the following command to start a container and launch an lmdeploy chat session in it:

```bash

docker run -it crpi-4crprmm5baj1v8iv.cn-hangzhou.personal.cr.aliyuncs.com/lmdeploy_dlinfer/camb:latest \

bash -i -c "lmdeploy chat --backend pytorch --device camb internlm/internlm2_5-7b-chat"

```

---

# Quick Start

This tutorial shows how to use LMDeploy on the CUDA platform:

- Offline inference with LLM and VLM models

- Serving an LLM or VLM model through the OpenAI-compatible server

- Chatting interactively with an LLM model from the console CLI

Before reading further, please ensure that you have installed lmdeploy as outlined in the [installation guide](installation.md).

## Offline batch inference

### LLM inference

```python

from lmdeploy import pipeline

pipe = pipeline('internlm/internlm2_5-7b-chat')

response = pipe(['Hi, pls intro yourself', 'Shanghai is'])

print(response)

```

When constructing the `pipeline`, if you do not specify either the TurboMind Engine or the PyTorch Engine, LMDeploy automatically selects one based on [their respective capabilities](../supported_models/supported_models.md), with the TurboMind Engine taking precedence by default.

However, you have the option to manually select an engine. For instance,

```python

from lmdeploy import pipeline, TurbomindEngineConfig

pipe = pipeline('internlm/internlm2_5-7b-chat',
                backend_config=TurbomindEngineConfig(
                    max_batch_size=32,
                    enable_prefix_caching=True,
                    cache_max_entry_count=0.8,
                    session_len=8192,
                ))

```

or,

```python

from lmdeploy import pipeline, PytorchEngineConfig

pipe = pipeline('internlm/internlm2_5-7b-chat',
                backend_config=PytorchEngineConfig(
                    max_batch_size=32,
                    enable_prefix_caching=True,
                    cache_max_entry_count=0.8,
                    session_len=8192,
                ))

```

```{note}

The parameter "cache_max_entry_count" significantly influences GPU memory usage.

It specifies the proportion of FREE GPU memory, measured after the model weights are loaded, that the K/V cache occupies.

The default value is 0.8. The K/V cache memory is allocated once and reused repeatedly, which is why the built pipeline and the "api_server" described in the next section consume a substantial amount of GPU memory.

If you encounter an Out-of-Memory (OOM) error, consider lowering the value of "cache_max_entry_count".

```
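To make the effect of `cache_max_entry_count` concrete, here is a rough back-of-the-envelope sketch. The function name and the memory figures are assumptions chosen for illustration; real usage also depends on activations, framework overhead, and other processes on the GPU, which this estimate ignores.

```python
def kv_cache_budget_gib(total_gib: float,
                        weights_gib: float,
                        cache_max_entry_count: float = 0.8) -> float:
    """Estimate the K/V cache size as a share of the memory left free
    after the model weights are loaded (illustrative arithmetic only)."""
    if not 0.0 < cache_max_entry_count <= 1.0:
        raise ValueError("cache_max_entry_count must be in (0, 1]")
    free_gib = total_gib - weights_gib
    if free_gib <= 0:
        raise ValueError("weights do not fit into GPU memory")
    return free_gib * cache_max_entry_count


# Hypothetical numbers: an 80 GiB GPU and about 15 GiB of 16-bit weights.
for ratio in (0.8, 0.5, 0.2):
    cache = kv_cache_budget_gib(80, 15, ratio)
    print(f"cache_max_entry_count={ratio}: ~{cache:.1f} GiB for the K/V cache")
```

With these assumed numbers, the default of 0.8 reserves about 52 GiB for the K/V cache, while 0.2 reserves about 13 GiB, which is why lowering the value is the first thing to try after an OOM error.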

When using the callable `pipe()` to generate tokens for the given prompts, you can set the sampling parameters via `GenerationConfig`, as shown below:

```python

from lmdeploy import GenerationConfig, pipeline

pipe = pipeline('internlm/internlm2_5-7b-chat')

prompts = ['Hi, pls intro yourself', 'Shanghai is']

response = pipe(prompts,
                gen_config=GenerationConfig(
                    max_new_tokens=1024,
                    top_p=0.8,
                    top_k=40,
                    temperature=0.6
                ))

```

In the `GenerationConfig`, `top_k=1` or `temperature=0.0` indicates greedy search.
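The reason both settings lead to greedy search can be seen in a toy sampler. The sketch below is a generic illustration of top-k and temperature sampling in plain Python, not LMDeploy's actual sampling code; `sample_token` and the example logits are made up, and `top_p` is left out for brevity.

```python
import math
import random


def sample_token(logits, top_k=0, temperature=1.0, rng=random):
    """Pick a token index from raw logits (toy example, not LMDeploy code)."""
    # Rank candidate indices from highest to lowest logit.
    order = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)
    # A temperature of 0 collapses the distribution onto the best token;
    # it is special-cased to avoid dividing by zero below.
    if temperature == 0.0:
        return order[0]
    # Keep only the top_k best candidates (all of them when top_k <= 0).
    if top_k > 0:
        order = order[:top_k]
    # Softmax with temperature over the remaining candidates.
    scaled = [logits[i] / temperature for i in order]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]
    return rng.choices(order, weights=weights, k=1)[0]


logits = [1.0, 3.5, 0.2, 2.9]  # index 1 has the highest score
print(sample_token(logits, top_k=1, temperature=0.6))   # one candidate left
print(sample_token(logits, top_k=40, temperature=0.0))  # argmax shortcut
```

With `top_k=1` only a single candidate survives the filter, so sampling has nothing left to choose from, and with `temperature=0.0` the highest-scoring token is taken directly. Both calls therefore return the index of the largest logit.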

For more information about the pipeline, please read the [detailed tutorial](../llm/pipeline.md).

### VLM inference

The usage of VLM inference pipeline is akin to that of LLMs, with the additional capability of processing image data with the pipeline.

For example, you can utilize the following code snippet to perform the inference with an InternVL model:

```python

from lmdeploy import pipeline

from lmdeploy.vl import load_image

pipe = pipeline('OpenGVLab/InternVL2-8B')

image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/tests/data/tiger.jpeg')

response = pipe(('describe this image', image))

print(response)

```

In the VLM pipeline, the default image processing batch size is 1. This can be adjusted through `VisionConfig`. For instance:

```python

from lmdeploy import pipeline, VisionConfig

from lmdeploy.vl import load_image

pipe = pipeline('OpenGVLab/InternVL2-8B',
                vision_config=VisionConfig(
                    max_batch_size=8
                ))

image = load_image('https://raw.githubusercontent.com/open-mmlab/mmdeploy/main/tests/data/tiger.jpeg')

response = pipe(('describe this image', image))

print(response)

```

However, the larger the image batch size, the greater the risk of an OOM error, because the LLM component within the VLM model pre-allocates a large amount of memory.

We encourage you to manually choose between the TurboMind Engine and the PyTorch Engine based on their respective capabilities, as detailed in [the supported-models matrix](../supported_models/supported_models.md).

Additionally, follow the instructions in the [LLM Inference](#llm-inference) section to reduce the values of memory-related parameters.

## Serving

Following the structure of the previous [offline batch inference](#offline-batch-inference) section, this part presents the serving methods for LLMs and VLMs in turn.

### Serve a LLM model

```shell

lmdeploy serve api_server internlm/internlm2_5-7b-chat

```

This command will launch an OpenAI-compatible server on the localhost at port `23333`. You can specify a different server port by using the `--server-port` option.

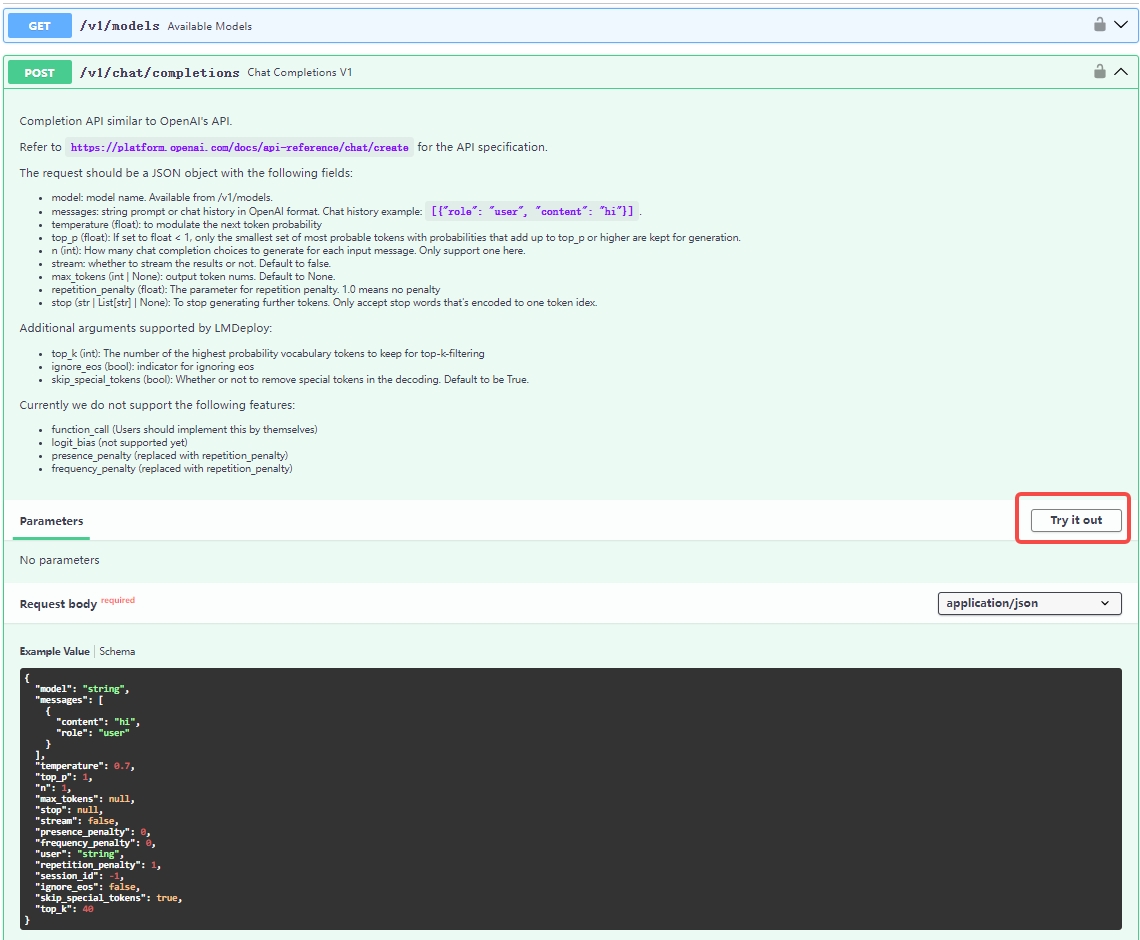

For more options, consult the help documentation by running `lmdeploy serve api_server --help`. Most of these options align with the engine configuration.
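A quick way to confirm that the server is reachable on the chosen port is to query it with the Python standard library. The sketch below assumes the server exposes the OpenAI-style `GET /v1/models` route and is reachable as `localhost`; the helper names `api_url` and `list_models` are hypothetical. Adjust `port` if you started the server with `--server-port`.

```python
import json
import urllib.request


def api_url(path: str, host: str = "localhost", port: int = 23333) -> str:
    """Build the URL of an OpenAI-style route on the api_server."""
    return f"http://{host}:{port}/v1/{path.lstrip('/')}"


def list_models(host: str = "localhost", port: int = 23333,
                timeout: float = 5.0) -> list:
    """Return the model ids reported by the server.

    Assumes an OpenAI-style response body: {"data": [{"id": ...}, ...]}.
    Raises OSError (for example URLError) if the server is not reachable.
    """
    with urllib.request.urlopen(api_url("models", host, port),
                                timeout=timeout) as resp:
        payload = json.load(resp)
    return [model["id"] for model in payload.get("data", [])]


# Only builds the URL; no request is sent here.
print(api_url("chat/completions"))
```

Calling `list_models()` while the server is running should return the names of the served models, which is the value to pass as `model` in later requests.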

To access the service, you can use the official OpenAI Python package (`pip install openai`). Below is an example demonstrating how to use the `v1/chat/completions` endpoint:

```python

from openai import OpenAI

client = OpenAI(

api_key='YOUR_API_KEY',

base_url="http://0.0.0.0:23333/v1"