# Lighthouse

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/architecture.md

# Architecture

_Some incomplete notes_

## Components & Terminology

* **Driver** - Interfaces with [Puppeteer](https://github.com/puppeteer/puppeteer) and [Chrome Debugging Protocol](https://developer.chrome.com/devtools/docs/debugger-protocol) ([API viewer](https://chromedevtools.github.io/debugger-protocol-viewer/))

* **Gatherers** - Uses Driver to collect information about the page. Minimal post-processing. Run Lighthouse with `--gather-mode` to see the 3 primary outputs from gathering:

1. `artifacts.json`: The output from all [gatherers](../core/gather/gatherers).

2. `trace.json`: Most performance characteristics come from here. You can view it in the DevTools Performance panel.

3. `devtoolslog.json`: A log of all the [DevTools Protocol](https://chromedevtools.github.io/devtools-protocol/) events. Primary signal about network requests and page state.

* **Audit** - The [audits](../core/audits) are tests for a single feature/optimization/metric. Using the Artifacts as input, an audit evaluates a test and resolves to a numeric score. See [Understanding Results](./understanding-results.md) for details of the LHR (Lighthouse Result object).

* **Computed Artifacts** - [Generated](../core/computed) on-demand from artifacts, these add additional meaning, and are often shared amongst multiple audits.

* **Report** - The report UI, created client-side from the LHR. See [HTML Report Generation Overview](../report/README.md) for details.

### Audit/Report terminology

* **Category** - Roll-up collection of audits and audit groups into a user-facing section of the report (eg. `Best Practices`). Applies weighting and overall scoring to the section. Examples: Accessibility, Best Practices.

* **Audit title** - Short user-visible title for the successful audit. eg. “All image elements have `[alt]` attributes.”

* **Audit failureTitle** - Short user-visible title for a failing audit. eg. “Some image elements do not have `[alt]` attributes.”

* **Audit description** - Explanation of why the user should care about the audit. Not necessarily how to fix it, unless there is no external link that explains it. ([See description guidelines](../CONTRIBUTING.md#audit-description-guidelines)). eg. “Informative elements should aim for short, descriptive alternate text. Decorative elements can be ignored with an empty alt attribute. [Learn more].”

## Protocol

* _Interacting with Chrome:_ The Chrome protocol connection is maintained via [WebSocket](https://github.com/websockets/ws) for the CLI, or via the [`chrome.debugger` API](https://developer.chrome.com/extensions/debugger) when in the Chrome extension.

* _Event binding & domains_: Some domains must be `enable()`d so they issue events. Once enabled, they flush any events that represent state. As such, network events will only issue after the domain is enabled. All the protocol agents resolve their `Domain.enable()` callback _after_ they have flushed any pending events. See example:

```js

// will NOT work

driver.defaultSession.sendCommand('Security.enable').then(_ => {

driver.defaultSession.on('Security.securityStateChanged', state => { /* ... */ });

})

// WILL work! happy happy. :)

driver.defaultSession.on('Security.securityStateChanged', state => { /* ... */ }); // event binding is synchronous

driver.defaultSession.sendCommand('Security.enable');

```

* _Debugging the protocol_: Read [Better debugging of the Protocol](https://github.com/GoogleChrome/lighthouse/issues/184).
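
The ordering constraint described under _Event binding & domains_ can be reproduced without Chrome. The following is a minimal sketch that assumes a hypothetical `FakeSession` class (not part of Lighthouse) which, like a protocol domain, flushes its state events before `sendCommand` resolves:

```js
// Hypothetical stand-in for a protocol session; not Lighthouse's real API.
class FakeSession {
  constructor() {
    this.listeners = new Map();
  }

  on(eventName, callback) {
    const callbacks = this.listeners.get(eventName) || [];
    callbacks.push(callback);
    this.listeners.set(eventName, callbacks);
  }

  emit(eventName, payload) {
    for (const callback of this.listeners.get(eventName) || []) callback(payload);
  }

  // Enabling the domain flushes current state as events *before* resolving.
  sendCommand(method) {
    if (method === 'Security.enable') {
      this.emit('Security.securityStateChanged', {securityState: 'secure'});
    }
    return Promise.resolve();
  }
}

const lateEvents = [];
const earlyEvents = [];

// Too late: the state event was flushed before the promise resolved.
const lateSession = new FakeSession();
lateSession.sendCommand('Security.enable').then(() => {
  lateSession.on('Security.securityStateChanged', state => lateEvents.push(state));
});

// Correct: bind first (binding is synchronous), then enable.
const earlySession = new FakeSession();
earlySession.on('Security.securityStateChanged', state => earlyEvents.push(state));
earlySession.sendCommand('Security.enable');

console.log(earlyEvents.length, lateEvents.length); // 1 0
```

The listener bound before `enable` sees the flushed event; the one bound in the `then` callback never does.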

## Understanding a Trace

`core/lib/tracehouse/trace-processor.js` provides the core transformation of a trace into more meaningful objects. Each raw trace event has a monotonically increasing timestamp in microseconds, a thread ID, a process ID, a duration in microseconds (potentially), and other applicable metadata properties such as the event type, the task name, the frame, etc. [Learn more about trace events](https://docs.google.com/document/d/1CvAClvFfyA5R-PhYUmn5OOQtYMH4h6I0nSsKchNAySU/preview).

### Example Trace Event

```js

{

'pid': 41904, // process ID

'tid': 1295, // thread ID

'ts': 1676836141, // timestamp in microseconds

'ph': 'X', // trace event type

'cat': 'toplevel', // trace category from which this event came

'name': 'MessageLoop::RunTask', // relatively human-readable description of the trace event

'dur': 64, // duration of the task in microseconds

'args': {}, // contains additional data such as frame when applicable

}

```
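
As a rough illustration of working with events of this shape, the sketch below sums the duration of top-level tasks on one thread. The helper name `topLevelTaskTimeMs` and the sample events are made up for this example, and it assumes `cat` may hold a comma-separated list of categories:

```js
// Sum the duration (in ms) of complete ('X') top-level events on one thread.
function topLevelTaskTimeMs(traceEvents, pid, tid) {
  let totalMicroseconds = 0;
  for (const event of traceEvents) {
    const onThread = event.pid === pid && event.tid === tid;
    const isTopLevel = event.cat.split(',').includes('toplevel');
    if (onThread && isTopLevel && event.ph === 'X') {
      totalMicroseconds += event.dur || 0; // `dur` is in microseconds
    }
  }
  return totalMicroseconds / 1000;
}

// Made-up sample data in the shape shown above.
const sampleEvents = [
  {pid: 41904, tid: 1295, ts: 1676836141, ph: 'X', cat: 'toplevel', name: 'MessageLoop::RunTask', dur: 64, args: {}},
  {pid: 41904, tid: 1295, ts: 1676836300, ph: 'X', cat: 'toplevel', name: 'MessageLoop::RunTask', dur: 936, args: {}},
  {pid: 41904, tid: 7, ts: 1676836400, ph: 'X', cat: 'toplevel', name: 'MessageLoop::RunTask', dur: 5000, args: {}},
];

console.log(topLevelTaskTimeMs(sampleEvents, 41904, 1295)); // 1
```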

### Processed trace

The processed trace identifies trace events for key moments (navigation start, FCP, LCP, DOM content loaded, trace end, etc) and provides filtered views of just the main process and the main thread events. Because the timestamps are not necessarily interesting in isolation, the processed trace also calculates the times in milliseconds of key moments relative to navigation start, thus providing the typical interpretation of metrics in ms.

```js

{

processEvents: [/* all trace events in the main process */],

mainThreadEvents: [/* all trace events on the main thread */],

timings: {

timeOrigin: 0, // timeOrigin is always 0 ms

firstContentfulPaint: 150, // firstContentfulPaint time in ms after time origin

/* other key moments */

traceEnd: 16420, // traceEnd time in ms after time origin

},

timestamps: {

timeOrigin: 623000000, // timeOrigin timestamp in microseconds, marks the start of the navigation of interest

firstContentfulPaint: 623150000, // firstContentfulPaint timestamp in microseconds

/* other key moments */

traceEnd: 639420000, // traceEnd timestamp in microseconds

},

}

```
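
The relationship between `timestamps` and `timings` is a subtraction and a unit conversion. A minimal sketch, using a hypothetical `computeTimings` helper rather than the actual trace-processor code:

```js
// Convert absolute microsecond timestamps to milliseconds relative to timeOrigin.
function computeTimings(timestamps) {
  const timings = {};
  for (const [name, timestamp] of Object.entries(timestamps)) {
    timings[name] = (timestamp - timestamps.timeOrigin) / 1000;
  }
  return timings;
}

const timestamps = {
  timeOrigin: 623000000,
  firstContentfulPaint: 623150000,
  traceEnd: 639420000,
};

console.log(computeTimings(timestamps));
// { timeOrigin: 0, firstContentfulPaint: 150, traceEnd: 16420 }
```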

## Audits

The return value of each audit [takes this shape](https://github.com/GoogleChrome/lighthouse/blob/17b7163486b69239689ed49415bdeee6f7766bfa/types/audit.d.ts#L66-L83).

The `details` object is parsed in report-renderer.js. View other audits for guidance on how to structure `details`.

## Core internal module dependencies

(Generated May 17, 2022 via `madge core/index.js --image arch.png --layout dot --exclude="(locales\/)|(stack-packs\/packs)"`)

## Lantern

[Lantern](./lantern.md) is how Lighthouse simulates network and CPU throttling.

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/authenticated-pages.md

# Running Lighthouse on Authenticated Pages

Default runs of Lighthouse load a page as a "new user", with no previous session or storage data. This means that pages requiring authenticated access do not work without additional setup. You have a few options for running Lighthouse on pages behind a login:

## Option 1: Script the login with Puppeteer

[Puppeteer](https://pptr.dev) is the most flexible approach for running Lighthouse on pages requiring authentication.

See [a working demo at /docs/recipes/auth](./recipes/auth).

View our full documentation for using [Lighthouse along with Puppeteer](https://github.com/GoogleChrome/lighthouse/blob/main/docs/puppeteer.md).

## Option 2: Leverage logged-in state with Chrome DevTools

The Lighthouse panel in Chrome DevTools will never clear your cookies, so you can log in to the target site and then run Lighthouse. If `localStorage` or `indexedDB` is important for your authentication purposes, be sure to uncheck `Clear storage`.

## Option 3: Pass custom request headers with Lighthouse CLI

CLI:

```sh

lighthouse http://www.example.com --view --extra-headers="{\"Authorization\":\"...\"}"

```

Node:

```js

const result = await lighthouse('http://www.example.com', {

extraHeaders: {

Authorization: '...',

},

});

```

You could also set the `Cookie` header, but beware: it will [override any other Cookies you expect to be there](https://github.com/GoogleChrome/lighthouse/pull/9170). For a more flexible cookie-based approach, use [Puppeteer (Option 1)](./recipes/auth/README.md) instead.

## Option 4: Open a debug instance of Chrome and manually log in

1. Globally install lighthouse: `npm i -g lighthouse` or `yarn global add lighthouse`. `chrome-debug` is now in your PATH. This binary launches a standalone Chrome instance with an open debugging port.

1. Run `chrome-debug`. This logs the debugging port of your Chrome instance.

1. Navigate to your site and log in.

1. In a separate terminal, run `lighthouse http://mysite.com --disable-storage-reset --port port-number`, using the port number from chrome-debug.

## Option 5: Reuse a prepared Chrome User Profile

This option is currently under development. Track or join the discussion here: [#8957](https://github.com/GoogleChrome/lighthouse/issues/8957).

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/bug-labels.md

Given the new onslaught of issues that folks in the community are filing, we thought it might be good to explain some of our bug labeling and triaging practices to the community.

## Bug Triaging Overview

Every week, there is a new "bug sheriff" (assigned from the core Lighthouse team) whose job is to triage incoming bugs and pay attention to bugs where we need more information from the reporter. Therefore, depending on the week, you might be hearing from a different bug sheriff about your bug.

## Labeling Bugs

Here are the different (actively used) labels and what they mean, organized by category bucket:

### Priority Labels

- P0: Urgent issue. Drop everything and deal with it immediately.

- P1: We want to work on this in the next few weeks

- P1.5: We want to work on this in the next few months

- P2: We want to work on this in the next few quarters.

- P3: Good idea, useful for future thinking.

### Process labels

- Needs more information: issue that hasn't been prioritized yet because we need more information from the bug creator. If we don't hear back in 2 weeks, we will close out the bug.

- Pending close: issue that we will soon close.

- Needs priority: issue that needs to be prioritized by team (as P0, P1, P1.5, etc.)

- Needs investigation: issue that we need to dig into to understand what is going on (mostly for bugs)

### Type of incoming issue labels

- Bug: something is wrong on our end and needs to be fixed.

- Feature: suggestion of new thing to implement.

- Internal cleanup: nothing is wrong but clean up and/or refactor of the existing way we're doing something.

- Question: question from community. Good fodder for new documentation that needs to be written.

### Other labels

- Good first issue: for new external contributor, these issues can be useful for them to tackle.

- Help wanted: issues that could use help from the community.

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/configuration.md

# Lighthouse Configuration

The Lighthouse config object is the primary method of customizing Lighthouse to suit your use case. Using a custom config, you can limit the audits to run, add additional loads of the page under special conditions, add your own custom checks, tweak the scoring, and more.

Read more about the [architecture of Lighthouse](./architecture.md).

## Usage

You can specify a custom config file when using Lighthouse through the CLI or consuming the npm module yourself.

**custom-config.js file**

```js

export default {

extends: 'lighthouse:default',

settings: {

onlyAudits: [

'speed-index',

'interactive',

],

},

};

```

**Use config file via CLI**

```sh

lighthouse --config-path=path/to/custom-config.js https://example.com

```

**Use config file via Node**

```js

import lighthouse from 'lighthouse';

import config from './path/to/custom-config.js';

await lighthouse('https://example.com/', {port: 9222}, config);

```

## Properties

| Name | Type | Description |
| - | - | - |
| extends | `string\|undefined` | |
| settings | `Object\|undefined` | |
| artifacts | `Object[]` | |
| audits | `string[]` | |
| categories | `Object\|undefined` | |
| groups | `Object\|undefined` | |
| plugins | `string[]` | Includes plugins and their audits. Refer to the [plugin documentation](https://github.com/GoogleChrome/lighthouse/blob/master/docs/plugins.md) for details. |

### `extends: "lighthouse:default"|undefined`

The `extends` property controls if your configuration should inherit from the default Lighthouse configuration. [Learn more.](#config-extension)

#### Example

```js

{

extends: 'lighthouse:default',

}

```

### `settings: Object|undefined`

The settings property controls various aspects of running Lighthouse such as CPU/network throttling and which audits should run.

#### Example

```js

{

settings: {

onlyCategories: ['performance'],

onlyAudits: ['works-offline'],

}

}

```

#### Options

For full list see [our config settings typedef](https://github.com/GoogleChrome/lighthouse/blob/575e29b8b6634bfb280bc820efea6795f3dd9017/types/externs.d.ts#L141-L186).

| Name | Type | Description |
| -- | -- | -- |
| onlyCategories | `string[]` | Includes only the specified categories in the final report. Additive with `onlyAudits` and reduces the time to audit a page. |
| onlyAudits | `string[]` | Includes only the specified audits in the final report. Additive with `onlyCategories` and reduces the time to audit a page. |
| skipAudits | `string[]` | Excludes the specified audits from the final report. Takes priority over `onlyCategories`, not usable in conjunction with `onlyAudits`, and reduces the time to audit a page. |
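
To make the interaction between these three settings concrete, here is a simplified sketch of the selection rules as described in the table. `selectAudits` is a hypothetical helper, not Lighthouse's filtering code, and throwing on the `onlyAudits` plus `skipAudits` combination is a choice made for this sketch:

```js
// Resolve which audit IDs would run, following the rules in the table above.
function selectAudits(categories, settings) {
  const {onlyCategories, onlyAudits, skipAudits = []} = settings;
  if (onlyAudits && skipAudits.length > 0) {
    throw new Error('skipAudits cannot be combined with onlyAudits');
  }

  let selected;
  if (!onlyCategories && !onlyAudits) {
    // No filter: start from every audit referenced by any category.
    selected = new Set();
    for (const category of Object.values(categories)) {
      for (const ref of category.auditRefs) selected.add(ref.id);
    }
  } else {
    // onlyAudits and onlyCategories are additive (a union).
    selected = new Set(onlyAudits || []);
    for (const categoryId of onlyCategories || []) {
      const category = categories[categoryId];
      if (!category) continue;
      for (const ref of category.auditRefs) selected.add(ref.id);
    }
  }

  // skipAudits takes priority over onlyCategories.
  for (const id of skipAudits) selected.delete(id);
  return [...selected].sort();
}

// Made-up category layout for illustration.
const sampleCategories = {
  performance: {auditRefs: [{id: 'first-contentful-paint'}, {id: 'interactive'}]},
  seo: {auditRefs: [{id: 'meta-description'}]},
};

console.log(selectAudits(sampleCategories, {onlyCategories: ['performance'], skipAudits: ['interactive']}));
// [ 'first-contentful-paint' ]
console.log(selectAudits(sampleCategories, {onlyCategories: ['seo'], onlyAudits: ['interactive']}));
// [ 'interactive', 'meta-description' ]
```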

### `artifacts: Object[]`

The list of artifacts to collect on a single Lighthouse run. This property is required and on extension will be concatenated with the existing set of artifacts.

```js

{

artifacts: [

{id: 'Accessibility', gatherer: 'accessibility'},

{id: 'AnchorElements', gatherer: 'anchor-elements'},

]

}

```

| Name | Type | Description |
| -- | -- | -- |
| id | `string` | Unique identifier for this artifact. This is how the artifact is referenced in audits. |
| gatherer | `string` | Gatherer used to produce this artifact. Does not need to be unique within the `artifacts` list. |

### `audits: string[]`

The audits property controls which audits to run and include with your Lighthouse report. See [more examples](#more-examples) to see how to add custom audits to your config.

#### Example

```js

{

audits: [

'first-contentful-paint',

'byte-efficiency/unminified-css',

]

}

```

### `categories: Object|undefined`

The categories property controls how to score and organize the audit results in the report. Each category defined in the config will have an entry in the `categories` property of Lighthouse's output. The category output contains the child audit results along with an overall score for the category.

**Note:** many modules consuming Lighthouse have no need to group or score all the audit results; in these cases, it's fine to omit a categories section.

#### Example

```js

{

categories: {

performance: {

title: 'Performance',

description: 'This category judges your performance',

auditRefs: [

{id: 'first-contentful-paint', weight: 3, group: 'metrics'},

{id: 'interactive', weight: 5, group: 'metrics'},

],

}

}

}

```

#### Options

| Name | Type | Description |
| -- | -- | -- |
| title | `string` | The display name of the category. |
| description | `string` | The displayed description of the category. |
| supportedModes | `string[]` (optional, [user flows](https://github.com/GoogleChrome/lighthouse/blob/master/docs/user-flows.md)) | The modes supported by the category. Category will support all modes if this is not provided. |
| auditRefs | `Object[]` | The audits to include in the category. |
| auditRefs[$i].id | `string` | The ID of the audit to include. |
| auditRefs[$i].weight | `number` | The weight of the audit in the scoring of the category. |
| auditRefs[$i].group | `string` (optional) | The ID of the [display group](#groups-objectundefined) of the audit. |
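
To show how `weight` feeds into a category score, the sketch below computes a weighted average of audit scores. It is a simplification under stated assumptions: every referenced audit has a numeric score between 0 and 1, and `categoryScore` is a hypothetical helper, not the scoring code Lighthouse ships:

```js
// Weighted arithmetic mean of audit scores (each score in the range 0..1).
function categoryScore(auditRefs, auditScores) {
  let weightedSum = 0;
  let totalWeight = 0;
  for (const {id, weight} of auditRefs) {
    if (weight === 0) continue; // zero-weight audits are shown but not scored
    weightedSum += auditScores[id] * weight;
    totalWeight += weight;
  }
  return totalWeight === 0 ? 0 : weightedSum / totalWeight;
}

const performanceRefs = [
  {id: 'first-contentful-paint', weight: 3, group: 'metrics'},
  {id: 'interactive', weight: 5, group: 'metrics'},
];
// Made-up scores for illustration.
const sampleScores = {'first-contentful-paint': 1, 'interactive': 0.5};

console.log(categoryScore(performanceRefs, sampleScores)); // 0.6875
```

With these weights, `interactive` contributes 5/8 of the category score, so halving it costs more than halving `first-contentful-paint` would.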

### `groups: Object|undefined`

The groups property controls how to visually group audits within a category. For example, this is what enables the grouped rendering of metrics and accessibility audits in the report.

**Note: The report-renderer has display logic that's hardcoded to specific audit group names. Adding arbitrary groups without additional rendering logic may not perform as expected.**

#### Example

```js

{

categories: {

performance: {

auditRefs: [

{id: 'my-performance-metric', weight: 2, group: 'metrics'},

],

}

},

groups: {

'metrics': {

title: 'Metrics',

description: 'These metrics encapsulate your web app\'s performance across a number of dimensions.'

},

}

}

```

## Config Extension

The stock Lighthouse configurations can be extended if you only need to make small tweaks, such as adding an audit or skipping an audit, but wish to still run most of what Lighthouse offers. When adding the `extends: 'lighthouse:default'` property to your config, the artifacts, audits, groups, and categories will be automatically included, allowing you to modify settings or add additional audits and artifacts.

Please note that the `extends` property only supports extension of `lighthouse:default`. Other internal configs found in the [core/config](https://github.com/GoogleChrome/lighthouse/tree/main/core/config) directory can be used by importing the config object from file reference, or by using the [`--preset`](https://github.com/GoogleChrome/lighthouse#cli-options) CLI flag.

See [more examples below](#more-examples) to view different types of extensions in action.

**Config extension is the recommended way to run custom Lighthouse**. If there's a use case that extension doesn't currently solve, we'd love to [hear from you](https://github.com/GoogleChrome/lighthouse/issues/new)!
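
As an illustration of extension, the sketch below shows what a config that skips one default audit and adds a custom one might look like. The names `my-gatherer`, `my-custom-audit`, `MyArtifact`, and `my-site` are hypothetical placeholders; in a real `custom-config.js` the object would be the file's default export:

```js
// Hypothetical extension of the default config; module names are placeholders.
const customConfig = {
  // Inherit the default artifacts, audits, groups, and categories.
  extends: 'lighthouse:default',

  // Small tweak: skip one of the default audits.
  settings: {
    skipAudits: ['speed-index'],
  },

  // Concatenated with the default artifacts and audits on extension.
  artifacts: [
    {id: 'MyArtifact', gatherer: 'my-gatherer'},
  ],
  audits: [
    'my-custom-audit',
  ],

  // Add a new category that scores only the custom audit.
  categories: {
    'my-site': {
      title: 'My site checks',
      description: 'Checks that are specific to my site',
      auditRefs: [
        {id: 'my-custom-audit', weight: 1},
      ],
    },
  },
};
```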

## More Examples

The best examples are the ones Lighthouse uses itself! There are several reference configuration files that are maintained as part of Lighthouse.

* [core/config/default-config.js](https://github.com/GoogleChrome/lighthouse/blob/main/core/config/default-config.js)

* [core/config/lr-desktop-config.js](https://github.com/GoogleChrome/lighthouse/blob/main/core/config/lr-desktop-config.js)

* [core/config/lr-mobile-config.js](https://github.com/GoogleChrome/lighthouse/blob/main/core/config/lr-mobile-config.js)

* [core/config/perf-config.js](https://github.com/GoogleChrome/lighthouse/blob/main/core/config/perf-config.js)

* [docs/recipes/custom-audit/custom-config.js](https://github.com/GoogleChrome/lighthouse/blob/main/docs/recipes/custom-audit/custom-config.js)

* [pwmetrics](https://github.com/paulirish/pwmetrics/blob/v4.1.1/lib/perf-config.ts)

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/emulation.md

# Emulation in Lighthouse

In Lighthouse, "Emulation" refers to the screen/viewport emulation and UserAgent string spoofing.

["Throttling"](./throttling.md) covers the related topics of network and CPU throttling/simulation.

With the default configuration, Lighthouse emulates a mobile device. There's [a `desktop` configuration](../core/config/desktop-config.js), available to CLI users with `--preset=desktop`, which applies a consistent desktop environment and scoring calibration. This is recommended as a replacement for `--emulated-form-factor=desktop`.

### Advanced emulation setups

Some products use Lighthouse in scenarios where emulation is applied outside of Lighthouse (e.g. by Puppeteer) or running against Chrome on real mobile devices.

You must always set `formFactor`. It doesn't control emulation, but it determines how Lighthouse should interpret the run with regard to scoring performance metrics and skipping mobile-only tests on desktop.

You can choose how `screenEmulation` is applied. It can accept an object of `{width: number, height: number, deviceScaleFactor: number, mobile: boolean, disabled: false}` to apply that screen emulation or an object of `{disabled: true}` if Lighthouse should avoid applying screen emulation. It's typically set to disabled if either emulation is applied outside of Lighthouse, or it's being run on a mobile device. The `mobile` boolean applies overlay scrollbars and a few other mobile-specific screen emulation characteristics.

You can choose how to handle userAgent emulation. The `emulatedUserAgent` property accepts either a `string` to apply the provided userAgent or a `boolean` -- `true` if the default UA spoofing should be applied (default) or `false` if no UA spoofing should be applied. Typically `false` is used if UA spoofing is applied outside of Lighthouse or on a mobile device. You can also redundantly apply userAgent emulation with no risk.

If you're using Lighthouse on a mobile device, you want to set `--screenEmulation.disabled` and `--throttling.cpuSlowdownMultiplier=1`. (`--formFactor=mobile` is the default already).
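
The settings discussed above can be collected into one object. This is a sketch only: `settingsForExternalEmulation` is a hypothetical helper, and it assumes the resulting object is passed as the `settings` property of a config:

```js
// Build settings for runs where Lighthouse should not apply its own emulation.
function settingsForExternalEmulation(formFactor, {onRealDevice = false} = {}) {
  const settings = {
    // Always required: tells Lighthouse how to score and which tests to skip.
    formFactor,
    // Emulation is handled elsewhere (e.g. Puppeteer) or not needed at all.
    screenEmulation: {disabled: true},
    emulatedUserAgent: false,
  };
  if (onRealDevice) {
    // A real device is already slow; do not slow the CPU down further.
    settings.throttling = {cpuSlowdownMultiplier: 1};
  }
  return settings;
}

console.log(settingsForExternalEmulation('mobile', {onRealDevice: true}));
```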

### Changes made in v7

In Lighthouse v7, most of the configuration regarding emulation changed to be more intuitive and clear. The [tracking issue](https://github.com/GoogleChrome/lighthouse/issues/10910) captures additional motivations.

* Removed: The `emulatedFormFactor` property (which determined how emulation is applied).

* Removed: The `TestedAsMobileDevice` artifact. Instead of being inferred, the explicit `formFactor` property is used.

* Removed: The `internalDisableDeviceScreenEmulation` property. It's equivalent to the new `--screenEmulation.disabled=true`.

* Added: The `formFactor` property.

* Added: The `screenEmulation` property.

* Added: The `emulatedUserAgent` property.

* (`throttling` and `throttlingMethod` remain unchanged)

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/error-reporting.md

# Error Reporting Explained

## What's going on?

The Lighthouse team is constantly trying to improve the reliability of our tools, so we've added error tracking functionality to the CLI. Given your consent, we would like to anonymously report runtime exceptions using [Sentry](https://sentry.io/welcome/). We will use this information to detect new bugs and avoid regressions.

Only CLI users are currently impacted. DevTools, extension, and node module users will not have errors reported.

## What will happen if I opt-in?

Runtime exceptions will be reported to the team along with information on your environment such as the URL you tested, your OS, and Chrome version. See [what data gets reported](#what-data-gets-reported).

## What will happen if I do not opt-in?

Runtime exceptions will not be reported to the team. Your ability to use Lighthouse will not be affected in any way.

## What data gets reported?

* The URL you tested

* The runtime settings used (throttling enabled/disabled, emulation, etc)

* The message, stack trace, and associated data of the error

* The file path of Lighthouse node module on your machine

* Your Lighthouse version

* Your Chrome version

* Your operating system

[This code search](https://github.com/GoogleChrome/lighthouse/search?l=JavaScript&q=Sentry.&type=&utf8=%E2%9C%93) reveals where Sentry methods are used.

## How do I opt-in?

The first time you run the CLI you will be prompted with a message asking you if Lighthouse can anonymously report runtime exceptions. You can give a direct response of `yes` or `no` (`y`, `n`, and pressing enter which defaults to `no` are also acceptable responses), and you will not be prompted again. If no response is given within 20 seconds, a `no` response will be assumed and you will not be prompted again.

Running Lighthouse with `--enable-error-reporting` will report errors regardless of the saved preference.

## How do I keep error reporting disabled?

As mentioned, if you do not respond to the CLI prompt within 20 seconds, a `no` response will be assumed and you will not be prompted again.

Non-interactive terminal sessions (`process.stdout.isTTY === false`) and invocations with the `CI` environment variable (`process.env.CI === true`), common on CI providers like Travis and AppVeyor, will not be prompted and error reporting will remain disabled.

Running Lighthouse with `--no-enable-error-reporting` will keep error reporting disabled regardless of the saved preference.

## How do I change my opt-in preference?

Your response to the prompt will be saved to your home directory `~/.config/configstore/lighthouse.json` and used on future runs. To trigger a re-prompt, simply delete this file and Lighthouse will ask again on the next run. You can also edit this json file directly.

As mentioned above, any explicit `--[no-]enable-error-reporting` flags will override the saved preference.
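
The precedence rules from the sections above can be summarized in a small decision function. This is a sketch of the documented behavior, not the CLI's implementation; `resolveErrorReporting` and its parameter names are hypothetical:

```js
// Returns 'enabled', 'disabled', or 'prompt' following the documented precedence.
function resolveErrorReporting({flag, savedPreference, isTTY, isCI}) {
  // 1. An explicit --[no-]enable-error-reporting flag overrides everything.
  if (typeof flag === 'boolean') return flag ? 'enabled' : 'disabled';
  // 2. Otherwise a previously saved answer is reused.
  if (typeof savedPreference === 'boolean') return savedPreference ? 'enabled' : 'disabled';
  // 3. Non-interactive sessions and CI are never prompted.
  if (!isTTY || isCI) return 'disabled';
  // 4. First interactive run: ask the user (no answer counts as "no").
  return 'prompt';
}

console.log(resolveErrorReporting({flag: undefined, savedPreference: undefined, isTTY: true, isCI: false})); // prompt
console.log(resolveErrorReporting({flag: false, savedPreference: true, isTTY: true, isCI: false})); // disabled
```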

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/hacking-tips.md

A few assorted scripts and tips to make hacking on Lighthouse a bit easier.

# Eng team resources

* [LH Build Tracker](https://lh-build-tracker.herokuapp.com/builds/limit/100) - plotted results of [build-tracker](../build-tracker.config.js) [data](../.github/workflows/ci.yml#:~:text=buildtracker)

* [LH PR Tracking](https://paulirish.github.io/lh-pr-tracking/) - stats about open PRs, collected [daily](https://github.com/paulirish/lh-pr-tracking/blob/master/.github/workflows/update-stats.yml).

## Evaluate Lighthouse's runtime performance

Lighthouse has instrumentation to collect timing data for its operations. The data is exposed at `LHR.timing.entries`. You can generate a trace from this data for closer analysis.

[View example trace](https://ahead-daughter.surge.sh/paulirish.json.timing.trace.html)

To generate, run `yarn timing-trace` with the LHR json:

```sh

lighthouse http://example.com --output=json --output-path=lhr.json

yarn timing-trace lhr.json

```

That will generate `lhr.json.timing.trace.json`. Then, drag 'n drop that file into `chrome://tracing`.
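
For a quick look without opening a trace, the entries can also be sorted directly. The sketch below assumes each entry in `LHR.timing.entries` has `name` and `duration` (ms) fields; the helper `slowestEntries` and the sample entry names are made up for illustration:

```js
// List the slowest timing entries of an LHR, longest first.
function slowestEntries(lhr, count = 5) {
  return [...lhr.timing.entries]
    .sort((a, b) => b.duration - a.duration)
    .slice(0, count)
    .map(entry => `${entry.name}: ${Math.round(entry.duration)} ms`);
}

// Made-up LHR fragment; real entry names will differ.
const sampleLhr = {
  timing: {
    entries: [
      {name: 'example:gather', duration: 300},
      {name: 'example:load-page', duration: 1200},
      {name: 'example:audit', duration: 45},
    ],
  },
};

console.log(slowestEntries(sampleLhr, 2));
// [ 'example:load-page: 1200 ms', 'example:gather: 300 ms' ]
```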

## Unhandled promise rejections

Getting errors like these?

> (node:12732) UnhandledPromiseRejectionWarning: Unhandled promise rejection (rejection id: 1)

> (node:12732) DeprecationWarning: Unhandled promise rejections are deprecated. In the future, promise rejections that are not handled will terminate the Node.js process with a non-zero exit code.

Use [`--trace-warnings`](https://medium.com/@jasnell/introducing-process-warnings-in-node-v6-3096700537ee) to get actual stack traces.

```sh

node --trace-warnings cli http://example.com

```

## Iterating on the report

This will generate new reports from the same results json.

```sh

# capture some results first:

lighthouse http://example.com --output=json > temp.report.json

# quickly generate reports:

node generate_report.js > temp.report.html; open temp.report.html

```

```js

// generate_report.js

import {ReportGenerator} from './report/generator/report-generator.js';

import results from './temp.report.json' with { type: 'json' };

const html = ReportGenerator.generateReportHtml(results);

console.log(html);

```

## Using Audit Classes Directly, Providing Your Own Artifacts

See [gist](https://gist.github.com/connorjclark/d4555ad90ae5b5ecf793ad2d46ca52db).

## Mocking modules with testdouble

We use `testdouble` and `mocha` to mock modules for testing. However, [mocha will not "hoist" the mocks](https://jestjs.io/docs/ecmascript-modules#module-mocking-in-esm) so any imports that depend on a mocked module need to be done dynamically *after* the testdouble mock is applied.

Analyzing the dependency trees can be complicated, so we recommend importing as many modules as possible (read: all non-test modules, typically) dynamically and only using static imports for test libraries (e.g. `testdouble`, `jest-mock`, `assert`). For example:

```js

import jestMock from 'jest-mock';

import * as td from 'testdouble';

await td.replaceEsm('./module-to-mock.js', {

mockedFunction: jestMock.fn(),

});

// module-to-mock.js is imported somewhere in the dependency tree of root-module.js

const rootModule = await import('./root-module.js');

```

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/headless-chrome.md

# Running Lighthouse using headless Chrome

## CLI (headless)

Setup:

```sh

# Lighthouse requires Node 22 LTS (22.x) or later.

curl -sL https://deb.nodesource.com/setup_22.x | sudo -E bash - &&\

sudo apt-get install -y nodejs npm

# get chromium (stable)

apt-get install chromium

# install lighthouse

npm i -g lighthouse

```

Kick off run of Lighthouse using headless Chrome:

```sh

lighthouse --chrome-flags="--headless" https://github.com

```

## CLI (headless=new)

There is also the new `--headless=new` option, which includes functionality that was explicitly omitted from the original headless browser.

## CLI (xvfb)

Alternatively, you can run full Chrome + xvfb instead of headless mode. These steps worked on Debian Jessie:

```sh

# get node 22

curl -sL https://deb.nodesource.com/setup_22.x | sudo -E bash -

sudo apt-get install -y nodejs npm

# get chromium (stable) and Xvfb

apt-get install chromium-browser xvfb

# install lighthouse

npm i -g lighthouse

```

Run it:

```sh

export DISPLAY=:1.5

TMP_PROFILE_DIR=$(mktemp -d -t lighthouse.XXXXXXXXXX)

# start up chromium inside xvfb

xvfb-run --server-args='-screen 0, 1024x768x16' \

chromium-browser --user-data-dir=$TMP_PROFILE_DIR \

--start-maximized \

--no-first-run \

--remote-debugging-port=9222 "about:blank"

# Kick off Lighthouse run on same port as debugging port.

lighthouse --port=9222 https://github.com

```

## Posting Lighthouse reports to GitHub Gists

Be sure to replace `${GITHUB_OWNER}` and `${GITHUB_TOKEN}` with your own credentials. The code below is tested on Ubuntu.

```sh

apt-get install -y nodejs npm chromium jq

npm install -g lighthouse

# Run lighthouse as JSON, pipe it to jq to wrangle and send it to GitHub Gist via curl

# so Lighthouse Viewer can grab it.

lighthouse "http://localhost" --chrome-flags="--no-sandbox --headless" \

--output json \

| jq -r "{ description: \"YOUR TITLE HERE\", public: \"false\", files: {\"$(date "+%Y%m%d").lighthouse.report.json\": {content: (. | tostring) }}}" \

| curl -sS -X POST -H 'Content-Type: application/json' \

-u ${GITHUB_OWNER}:${GITHUB_TOKEN} \

-d @- https://api.github.com/gists > results.gist

# Let's be nice and add the Lighthouse Viewer link in the Gist description.

GID=$(cat results.gist | jq -r '.id') && \

curl -sS -X POST -H 'Content-Type: application/json' \

-u ${GITHUB_OWNER}:${GITHUB_TOKEN} \

-d "{ \"description\": \"YOUR TITLE HERE - Lighthouse: https://googlechrome.github.io/lighthouse/viewer/?gist=${GID}\" }" "https://api.github.com/gists/${GID}" > updated.gist

```

## Node module

Install:

```sh

yarn add lighthouse

```

Run it:

```javascript

const lighthouse = require('lighthouse');

const chromeLauncher = require('chrome-launcher');

function launchChromeAndRunLighthouse(url, flags = {}, config = null) {

return chromeLauncher.launch(flags).then(chrome => {

flags.port = chrome.port;

return lighthouse(url, flags, config).then(results => {

chrome.kill();

return results;

});

});

}

const flags = {

chromeFlags: ['--headless']

};

launchChromeAndRunLighthouse('https://github.com', flags).then(results => {

// Use results!

});

```

## Other resources

Other resources you might find helpful:

- [Getting Started with Headless Chrome](https://developers.google.com/web/updates/2017/04/headless-chrome)

- Example [Dockerfile](https://github.com/GoogleChrome/lighthouse-ci/blob/main/docs/recipes/docker-client/Dockerfile)

- Lighthouse's GitHub Actions [`.ci.yml`](https://github.com/GoogleChrome/lighthouse/blob/main/.github/workflows/ci.yml)

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/lantern.md

# Lantern

## Overview

Project Lantern is an ongoing effort to reduce the run time of Lighthouse and improve audit quality by modeling page activity and simulating browser execution. This document details the accuracy of these models and captures the expected natural variability.

## Deep Dive

[Lantern deep dive video](https://www.youtube.com/watch?v=0dkry1r49xw)

## Accuracy

All of the following accuracy stats are reported on a set of 300 URLs sampled from the Alexa top 1000, HTTPArchive dataset, and miscellaneous ad landing pages. Median was collected for *9 runs* in one environment and compared to the median of *9 runs* in a second environment.

Stats were collected using the [trace-evaluation](https://github.com/patrickhulce/lighthouse-trace-evaluations) scripts. Table cells contain [Spearman's rho](https://en.wikipedia.org/wiki/Spearman%27s_rank_correlation_coefficient) and [MAPE](https://en.wikipedia.org/wiki/Mean_absolute_percentage_error) for the respective metric.
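As a rough illustration of what the two numbers in each table cell measure, here is a minimal sketch of both statistics. It is not the code from the trace-evaluation scripts; the function names and the sample values are hypothetical.

```javascript
// Mean absolute percentage error of `predicted` relative to `actual`.
function mape(actual, predicted) {
  const total = actual.reduce(
    (sum, value, i) => sum + Math.abs((value - predicted[i]) / value), 0);
  return total / actual.length;
}

// Rank values from 1..n; tied values share the average of their positions.
function rank(values) {
  const order = values
    .map((value, index) => ({value, index}))
    .sort((a, b) => a.value - b.value);
  const ranks = new Array(values.length);
  for (let i = 0; i < order.length;) {
    let j = i;
    while (j + 1 < order.length && order[j + 1].value === order[i].value) j++;
    const averageRank = (i + j) / 2 + 1;
    for (let k = i; k <= j; k++) ranks[order[k].index] = averageRank;
    i = j + 1;
  }
  return ranks;
}

// Spearman's rho is the Pearson correlation of the two rank vectors.
function spearmanRho(xs, ys) {
  const rx = rank(xs);
  const ry = rank(ys);
  const mean = arr => arr.reduce((a, b) => a + b, 0) / arr.length;
  const mx = mean(rx);
  const my = mean(ry);
  let cov = 0;
  let vx = 0;
  let vy = 0;
  for (let i = 0; i < rx.length; i++) {
    cov += (rx[i] - mx) * (ry[i] - my);
    vx += (rx[i] - mx) ** 2;
    vy += (ry[i] - my) ** 2;
  }
  return cov / Math.sqrt(vx * vy);
}

// Hypothetical FCP values in ms, not taken from the data set.
const observedFcp = [1000, 2000, 4000];
const simulatedFcp = [1100, 1800, 5000];
console.log(spearmanRho(observedFcp, simulatedFcp), mape(observedFcp, simulatedFcp));
```

A high rho with a high MAPE means the model ranks pages correctly while still missing their absolute values, which is why the tables report both.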

### Lantern Accuracy Stats

| Comparison | FCP | FMP | TTI |
| -- | -- | -- | -- |
| Lantern predicting Default LH | .811 : 23.1% | .811 : 23.6% | .869 : 42.5% |
| Lantern predicting LH on WPT | .785 : 28.3% | .761 : 33.7% | .854 : 45.4% |

### Reference Stats

| Comparison | FCP | FMP | TTI |
| -- | -- | -- | -- |
| Unthrottled LH predicting Default LH | .738 : 27.1% | .694 : 33.8% | .743 : 62.0% |
| Unthrottled LH predicting WPT | .691 : 33.8% | .635 : 33.7% | .712 : 66.4% |
| Default LH predicting WPT | .855 : 22.3% | .813 : 27.0% | .889 : 32.3% |

## Conclusions

### Lantern Accuracy Conclusions

We conclude that Lantern is ~6-13% more inaccurate than DevTools throttling. When evaluating rank performance, Lantern achieves correlations within ~.04-.07 of DevTools throttling.

* For the single view use case, our original conclusion that Lantern's inaccuracy is roughly equal to the inaccuracy introduced by expected variance seems to hold. The standard deviation of single observations from DevTools throttling is ~9-13%, and given Lantern's much lower variance, single observations from Lantern are not significantly more inaccurate on average than single observations from DevTools throttling.

* For the repeat view use case, we can conclude that Lantern is systematically off by ~6-13% more than DevTools throttling.

### Metric Variability Conclusions

The reference stats demonstrate that there is a high degree of variability in the user-centric metrics. This strengthens the position that every load is just one observation drawn from a distribution: to understand the entire experience, multiple draws must be taken, i.e. multiple runs are needed to get sufficiently small error bounds on the median load experience.

The current sizes of the confidence intervals for DevTools throttled performance scores are as follows.

* 95% confidence interval for **1-run** of site at median: 50 **+/- 15** = 65-35

* 95% confidence interval for **3-runs** of site at median: 50 **+/- 11** = 61-39

* 95% confidence interval for **5-runs** of site at median: 50 **+/- 8** = 58-42

## Links

* [Lighthouse Variability and Accuracy Analysis](https://docs.google.com/document/d/1BqtL-nG53rxWOI5RO0pItSRPowZVnYJ_gBEQCJ5EeUE/edit?usp=sharing)

* [Lantern Deck](https://docs.google.com/presentation/d/1EsuNICCm6uhrR2PLNaI5hNkJ-q-8Mv592kwHmnf4c6U/edit?usp=sharing)

* [Lantern Design Doc](https://docs.google.com/a/chromium.org/document/d/1pHEjtQjeycMoFOtheLfFjqzggY8VvNaIRfjC7IgNLq0/edit?usp=sharing)

* [WPT Trace Data Set Half 1](https://drive.google.com/open?id=1Y_duiiJVljzIEaYWEmiTqKQFUBFWbKVZ) (access on request)

* [WPT Trace Data Set Half 2](https://drive.google.com/open?id=1EoHk8nQaBv9aoaVv81TvR7UfXTUu2fiu) (access on request)

* [Unthrottled Trace Data Set Half 1](https://drive.google.com/open?id=1axJf9R3FPpzxhR7FKOvXPLFLxxApfwD0) (access on request)

* [Unthrottled Trace Data Set Half 2](https://drive.google.com/open?id=1krcWq5DF0oB1hq90G29bEwIP7zDcJrYY) (access on request)

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/new-audits.md

So, you want to create a new audit? Great! We're excited that you want to add to the Lighthouse project :) The goal of this document is to help you understand what constitutes a "good" audit for Lighthouse, and the steps you can follow if you want to propose a new audit.

## New audit principles

Lighthouse audits that surface in the report should:

- be applicable to a significant portion of web developers (based on scale and severity of impact)

- contribute significantly towards making the mobile web experience better for end users.

- not have a significant impact on our runtime performance or bundle size.

- be new, not something that is already measured by existing audits.

- be measurable (especially for performance audits) or have clear pass/fail states.

- be actionable - when failing, specific advice should be given. If the failure can be tied to a specific resource (a DOM element, script, line of code), use the appropriate detail type (see below). If multiple failures can occur for a page, return a table.

- not use 3rd party APIs for completing the audit check.

## Actionability

1. Specific advice should be given if the audit fails. If an audit can fail in multiple ways, each way should have specific guidance that the user should take to resolve the problem.

1. If the failure can be applied to a specific resource, use the appropriate detail type (see subsection).

1. If multiple failures can occur on a single page, show each (use a table - don't just return a binary score).

### Detail Types

An audit can return a number of different [detail types](https://github.com/GoogleChrome/lighthouse/blob/main/types/lhr/audit-details.d.ts).

| detail type               | resource              | notes                                  |
|---------------------------|-----------------------|----------------------------------------|
| `'node'`                  | DOM element           | set path to a devtoolsNodePath         |
| `'source-location'`       | Code Network Resource | use to point to specific line, column  |
| `'code'`                  | N/A; freeform         | render as monospace font `like this`   |
| `'url'`                   | Network Resource      | we will make it a pretty link          |
| `'thumbnail'`             | Image Resource        | same as above, but we show a thumbnail |
| `'link'`                  | -                     | arbitrary link / url combination       |
| `'bytes'`                 | -                     | value is in bytes but formatted as KiB |
| `'text'\|'ms'\|'numeric'` | -                     |                                        |
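To make the detail types concrete, here is a minimal sketch of a table that lists one row per failing resource. The heading fields (`key`, `valueType`, `label`) reflect my reading of the linked type definitions and should be checked against them; the function name and sample resources are hypothetical.

```javascript
// Build a `table` details object with one row per failing resource.
// Each heading's `valueType` is one of the detail types from the table above.
function buildTableDetails(failingResources) {
  return {
    type: 'table',
    headings: [
      {key: 'url', valueType: 'url', label: 'URL'},
      {key: 'totalBytes', valueType: 'bytes', label: 'Transfer size'},
    ],
    items: failingResources.map(resource => ({
      url: resource.url,
      totalBytes: resource.totalBytes,
    })),
  };
}

// Hypothetical failing resources.
const details = buildTableDetails([
  {url: 'https://example.com/hero.png', totalBytes: 512000},
  {url: 'https://example.com/app.js', totalBytes: 204800},
]);
console.log(details.items.length);
```

An audit would return an object like this in the `details` property of its result, so the report can show every failure instead of only a binary score.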

### Granularity

The following detail types accept a `granularity` field:

- `bytes`

- `ms`

- `numeric`

`granularity` must be an integer power of 10. Some examples of valid values for `granularity`:

- 0.001

- 0.01

- 0.1

- 1

- 10

- 100

The formatted value will be rounded to the nearest multiple of `granularity`. If not provided, the default is `0.1` (except for `ms`, which is `10`).
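The rounding rule can be sketched as follows. This is an illustration of the behavior described above, not Lighthouse's formatter; `roundToGranularity` is a hypothetical helper.

```javascript
// Round `value` to the nearest multiple of `granularity` (a power of 10).
function roundToGranularity(value, granularity) {
  if (!Number.isInteger(Math.log10(granularity))) {
    throw new Error(`granularity must be a power of 10, got ${granularity}`);
  }
  const rounded = Math.round(value / granularity) * granularity;
  // Trim floating-point noise (e.g. 1234.6000000000001) when granularity < 1.
  const decimals = Math.max(0, -Math.log10(granularity));
  return Number(rounded.toFixed(decimals));
}

console.log(roundToGranularity(1234.5678, 0.1)); // default for `bytes` and `numeric`
console.log(roundToGranularity(1234.5678, 10)); // default for `ms`
```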

## Naming

### Audit ID

The audit ID should be based on the noun of the subject matter that it surfaces to the user.

The filename should match the audit ID.

**Policy**

- No verbs.

- No `no-` prefixes.

- Use the noun of the items it surfaces or concept it centers around.

- Adjective modifiers are acceptable and encouraged if the noun would be too broad without specificity.

- If an adjective modifier will result in describing either the passing or failing state, prefer the failing state.

**Examples**

- ~~no-vulnerable-dependencies~~ vulnerable-dependencies (no `no-`)

- ~~redirects-http~~ http-redirect (no verbs)

- ~~uses-long-cache-ttl~~ cache-headers (no verbs)

- ~~is-crawlable~~ crawlability (no verbs)

- ~~images~~ oversized-images (too broad)

- ~~used-css~~ unused-css (prefer failing state adjective)

### Audit Title / Failure Title

Audit titles vary based on report context and audit type.

- Opportunities should have an *imperative* `title` describing the action the developer should take to fix the issue.

- Standard audits should have both a *descriptive* `title` and a `failureTitle` that describe what the page is currently doing that resulted in a passing/failing state.

Opportunity `title`: "Compress large images"

Standard Audit `title`: "Page works offline"

Standard Audit `failureTitle`: "Page does not work offline"

## Process for creating a new audit

1. Scan the criteria we’ve laid out above. If you think the principles match with your proposed new audit, then proceed!

1. Next step is to create an issue on GitHub with answers to the following questions:

```

#### Provide a basic description of the audit

#### How would the audit appear in the report?

#### How is this audit different from existing ones?

#### What % of developers/pages will this impact?

#### How is the new audit making a better web for end users?

#### What is the resourcing situation?

#### Any other links or documentation that we should check out?

```

3. Once the proposal is submitted, the Lighthouse team will take a look and follow up. We will discuss possible implementation approaches and the associated runtime overhead.

With this new information we can better understand the implementation cost and effort required and prioritize the audit into our sprint/roadmap.

1. Depending on the prioritization, we'll then work with you to figure out the necessary engineering/UX/product details.

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/plugins.md

# Plugin Handbook

## Table of Contents

1. [Introduction](#introduction)
   1. [What is a Lighthouse Plugin?](#what-is-a-lighthouse-plugin)
   1. [Comparing a Plugin vs. Custom Config](#comparing-a-plugin-vs-custom-config)
   1. [Getting Started](#getting-started)
1. [API](#api)
   1. [Plugin Config](#plugin-config)
   1. [Plugin Audits](#plugin-audits)
1. [Best Practices](#best-practices)
   1. [Naming](#naming)
   1. [Scoring](#scoring)
   1. [Common Mistakes](#common-mistakes)
1. [Examples](#examples)

## Introduction

If you're new to Lighthouse development, start by reading up on the overall [architecture](./architecture.md), how [configuration](./configuration.md) works, and what makes a [good audit](./new-audits.md) before continuing.

### What is a Lighthouse Plugin?

Lighthouse plugins are a way to extend the functionality of Lighthouse with insight from domain experts (that's you!) and easily share this extra functionality with other Lighthouse users. At its core, a plugin is a node module that implements a set of checks that will be run by Lighthouse and added to the report as a new category.

### Comparing a Plugin vs. Custom Config

Plugins are easily shared and have a stable API that won't change between minor version bumps but are also more limited in scope than a [custom Lighthouse configuration](./configuration.md). Before getting started with plugins, think about your current needs, and consult the table below to decide which is best for you.

| Capability                                   | Plugin | Custom Config |
| -------------------------------------------- | ------ | ------------- |
| Include your own custom audits               | ✅     | ✅            |
| Add a custom category                        | ✅     | ✅            |
| Easily shareable and extensible on NPM       | ✅     | ❌            |
| Semver-stable API                            | ✅     | ❌            |
| Gather custom data from the page (artifacts) | ❌     | ✅            |
| Modify core categories                       | ❌     | ✅            |
| Modify `config.settings` properties          | ❌     | ✅            |

### Getting Started

To develop a Lighthouse plugin, you'll need to write three things:

1. A `package.json` file to define your plugin's dependencies and point to your `plugin.js` file.

1. A `plugin.js` file to declare your plugin's audits, category name, and scoring.

1. Custom audit files that will contain the primary logic of the checks you want to perform.

To see a fully functioning example, see our [plugin recipe](./recipes/lighthouse-plugin-example/readme.md) or its [GitHub repository template](https://github.com/GoogleChrome/lighthouse-plugin-example).

#### `package.json`

A Lighthouse plugin is just a node module with a name that starts with `lighthouse-plugin-`. Any dependencies you need are up to you. However, do not depend on Lighthouse directly; instead, use [`peerDependencies`](http://npm.github.io/using-pkgs-docs/package-json/types/peerdependencies.html) to alert dependents, and `devDependencies` for your own local development:

**Example `package.json`**

```json

{
  "name": "lighthouse-plugin-example",
  "type": "module",
  "main": "plugin.js",
  "peerDependencies": {
    "lighthouse": "^13.0.1"
  },
  "devDependencies": {
    "lighthouse": "^13.0.1"
  }
}

```

#### `plugin.js`

This file contains the configuration for your plugin. It can be called anything you like, just ensure it is referenced by the `"main"` property in your `package.json`.

**Example `plugin.js`**

```js

export default {
  // Additional audits to run on information Lighthouse gathered.
  audits: [{path: 'lighthouse-plugin-example/audits/has-cat-images.js'}],

  // A new category in the report for the plugin output.
  category: {
    title: 'Cats',
    description:
      'When integrated into your website effectively, cats deliver delight and bemusement.',
    auditRefs: [{id: 'has-cat-images-id', weight: 1}],
  },
};

```

#### Custom Audits

These files contain the logic that will generate results for the Lighthouse report. An audit is a class with two important properties:

1. `meta` - This contains important information about how the audit will be referenced and how it will be displayed in the HTML report.

2. `audit` - This is a function that should return the audit's results. See [API > Plugin Audits](#plugin-audits).

**Example `audits/has-cat-images.js`**

```js

import {Audit} from 'lighthouse';

class CatAudit extends Audit {
  static get meta() {
    return {
      id: 'has-cat-images-id',
      title: 'Page has at least one cat image',
      failureTitle: 'Page does not have at least one cat image',
      description:
        'Pages should have lots of cat images to keep users happy. ' +
        'Consider adding a picture of a cat to your page to improve engagement.',
      requiredArtifacts: ['ImageElements'],
    };
  }

  static audit(artifacts) {
    // Artifacts requested in `requiredArtifacts` above are passed to your audit.
    // See the "API -> Plugin Audits" section below for what artifacts are available.
    const images = artifacts.ImageElements;
    const catImages = images.filter(image => image.src.toLowerCase().includes('cat'));

    return {
      // Give users a 100 if they had a cat image, 0 if they didn't.
      score: catImages.length > 0 ? 1 : 0,
      // Also return the total number of cat images that can be used by report JSON consumers.
      numericValue: catImages.length,
    };
  }
}

export default CatAudit;

```

#### Run the plugin locally in development

```sh

# be in your plugin directory, and have lighthouse as a devDependency.

NODE_PATH=.. npx lighthouse -- https://example.com --plugins=lighthouse-plugin-example --only-categories=lighthouse-plugin-example --view

# Note: we add the parent directory to NODE_PATH as a hack to allow Lighthouse to find this plugin.
# This is useful for local development, but is not necessary when your plugin is consumed from NPM as
# a node module.

```

## API

### Plugin Config

The plugin config file (see `plugin.js` in the [example](#pluginjs) and [recipe](./recipes/lighthouse-plugin-example/plugin.js)) is a subset of the available [configuration](./configuration.md) for full custom Lighthouse config files.

A plugin config is an object that has at least two properties: `audits` and `category`.

#### `audits`

Defines the new audits the plugin adds. It is an array of objects, each with a `path` string pointing to an audit file. Each path should be treated as an absolute string a user of your module might pass to `require`, so use paths of the form `lighthouse-plugin-<name>/path/to/audits/audit-file.js`.

**Type**: `Array<{path: string}>`

#### `category`

Defines the display strings of the plugin's category and configures audit scoring and grouping. It is an object with at least two properties: `title` and `auditRefs`.

- `title: string` **REQUIRED** - The display name of the plugin's category in the report.

- `description: string` _OPTIONAL_ - A more detailed description of the category's purpose.

- `manualDescription: string` _OPTIONAL_ - A more detailed description of all of the manual audits in a plugin. Only use this if you've added manual audits.

- `auditRefs: Array<{id: string, weight: number, group?: string}>` **REQUIRED** - The list of audits to include in the plugin category along with their overall weight in the score of the plugin category. Each audit ref may optionally reference a group ID from `groups`.

- `supportedModes: string[]` _OPTIONAL_ - Which Lighthouse [modes](https://github.com/GoogleChrome/lighthouse/blob/master/docs/user-flows.md) this plugin supports. Category will support all modes if this is not provided.
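To show how `weight` affects the category score, here is a minimal sketch that assumes the category score is the weighted arithmetic mean of its audits' scores. How audits without a numeric score are treated here is a simplifying assumption, and `categoryScore` is a hypothetical helper, not a Lighthouse API.

```javascript
// Weighted mean of audit scores, using the weights declared in `auditRefs`.
function categoryScore(auditRefs, auditResults) {
  let weightedSum = 0;
  let totalWeight = 0;
  for (const ref of auditRefs) {
    const score = auditResults[ref.id]?.score;
    // Assumption: skip zero-weight audits and audits without a numeric score
    // (for example, ones marked not applicable).
    if (ref.weight <= 0 || typeof score !== 'number') continue;
    weightedSum += score * ref.weight;
    totalWeight += ref.weight;
  }
  return totalWeight === 0 ? null : weightedSum / totalWeight;
}

// Hypothetical audit IDs and results.
const exampleRefs = [
  {id: 'has-cat-images-id', weight: 3},
  {id: 'has-cat-videos-id', weight: 1},
];
const exampleResults = {
  'has-cat-images-id': {score: 1},
  'has-cat-videos-id': {score: 0},
};
console.log(categoryScore(exampleRefs, exampleResults));
```

With these weights, the passing audit counts three times as much as the failing one.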

#### `groups`

Defines the audit groups used for display in the HTML report.

It is an object whose keys are the group IDs and whose values are objects with the following properties:

- `title: string` **REQUIRED** - The display name of the group in the report.

- `description: string` _OPTIONAL_ - A more detailed description of the group's purpose.

**Example of Category with Groups**
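A minimal sketch of a plugin config whose audits are displayed in a group. The audit paths, audit IDs, and the `cat-media` group ID are hypothetical.

```javascript
// In `plugin.js`, this object would be the module's default export.
const pluginConfigWithGroups = {
  audits: [
    {path: 'lighthouse-plugin-example/audits/has-cat-images.js'},
    {path: 'lighthouse-plugin-example/audits/has-cat-videos.js'},
  ],
  // Keys are group IDs; `auditRefs` below point at them via `group`.
  groups: {
    'cat-media': {
      title: 'Cat media',
      description: 'Checks for cat-related images and videos.',
    },
  },
  category: {
    title: 'Cats',
    auditRefs: [
      {id: 'has-cat-images-id', weight: 1, group: 'cat-media'},
      {id: 'has-cat-videos-id', weight: 1, group: 'cat-media'},
    ],
  },
};
```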

**Example of Category _without_ Groups**
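A minimal sketch of the same kind of config with the `groups` property left out, in which case the audit refs carry no `group`. The audit path and ID are hypothetical.

```javascript
// In `plugin.js`, this object would be the module's default export.
const pluginConfigWithoutGroups = {
  audits: [{path: 'lighthouse-plugin-example/audits/has-cat-images.js'}],
  category: {
    title: 'Cats',
    description: 'Checks that the page contains cat images.',
    auditRefs: [{id: 'has-cat-images-id', weight: 1}],
  },
};
```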


### Plugin Audits

A plugin audit is a class that implements at least two properties: `meta` and `audit()`.

#### `meta`

The `meta` property is a static getter for the metadata of an [audit](#custom-audits). It should return an object with the following properties:

- `id: string` **REQUIRED** - The string identifier of the audit, in kebab case, typically matching the file name.

- `title: string` **REQUIRED** - Short, user-visible title for the audit when successful.

- `failureTitle: string` _OPTIONAL_ - Short, user-visible title for the audit when failing.

- `description: string` **REQUIRED** - A more detailed description that describes why the audit is important and links to Lighthouse documentation on the audit; markdown links supported.

- `requiredArtifacts: Array` **REQUIRED** - A list of artifacts that must be present for the audit to execute. See [Available Artifacts](#available-artifacts) for what's available to plugins.

- `scoreDisplayMode: "numeric" | "binary" | "manual" | "informative"` _OPTIONAL_ - A string identifying how the score should be interpreted for display.

See [Best Practices > Naming](#naming) for best practices on the display strings.

#### `audit(artifacts, context)`

The `audit()` property is a function that computes the audit results for the report. It accepts two arguments: `artifacts` and `context`. `artifacts` is an object whose keys will be the values you passed to `requiredArtifacts` in the `meta` object. `context` is an internal object whose primary use in plugins is to derive network request information (see [Using Network Requests](#using-network-requests)).

The primary objective of the audit function is to return a `score` from `0` to `1` based on the data observed in `artifacts`. There are several other properties that can be returned by an audit to control additional display features. For the complete list, see the [audit results documentation](./understanding-results.md#audit-properties) and [type information](https://github.com/GoogleChrome/lighthouse/blob/623b789497f6c87f85d366b4038deae5dc701c90/types/audit.d.ts#L69-L87).

#### Available Artifacts

The following artifacts are available for use in the audits of Lighthouse plugins. For more detailed information on their usage and purpose, see the [type information](https://github.com/GoogleChrome/lighthouse/blob/main/types/artifacts.d.ts#L42-L99).

- `fetchTime`

- `BenchmarkIndex`

- `settings`

- `Timing`

- `HostFormFactor`

- `HostUserAgent`

- `HostProduct`

- `GatherContext`

- `URL`

- `ConsoleMessages`

- `DevtoolsLog`

- `MainDocumentContent`

- `ImageElements`

- `LinkElements`

- `MetaElements`

- `Scripts`

- `Trace`

- `ViewportDimensions`

While Lighthouse has more artifacts with information about the page than are in this list, those artifacts are considered experimental and their structure or existence could change at any time. Only use artifacts not on the list above if you are comfortable living on the bleeding edge and can tolerate unannounced breaking changes.

If you're interested in other page information not mentioned here, please file an issue. We'd love to help.

#### Using Network Requests

You might have noticed that a simple array of network requests is missing from the list above. The source information for network requests made by the page is actually contained in the `DevtoolsLog` artifact, which contains all of the DevTools Protocol traffic recorded during page load. The network request objects are derived from this message log at audit time.

See below for an example of an audit that processes network requests.

```js

import {Audit, NetworkRecords} from 'lighthouse';

class HeaderPoliceAudit extends Audit {
  static get meta() {
    return {
      id: 'header-police-audit-id',
      title: 'All headers stripped of debug data',
      failureTitle: 'Headers contained debug data',
      description: 'Pages should mask debug data in production.',
      requiredArtifacts: ['DevtoolsLog'],
    };
  }

  static async audit(artifacts, context) {
    // Request the network records from the devtools log.
    // The `context` argument is passed in to allow Lighthouse to cache the result and not re-compute the network requests for every audit that needs them.
    const devtoolsLog = artifacts.DevtoolsLog;
    const requests = await NetworkRecords.request(devtoolsLog, context);

    // Do whatever you need to with the network requests.
    const badRequests = requests.filter(request =>
      request.responseHeaders.some(header => header.name.toLowerCase() === 'x-debug-data')
    );

    return {
      score: badRequests.length === 0 ? 1 : 0,
    };
  }
}

export default HeaderPoliceAudit;

```

## Best Practices

### Naming

> There are only two hard things in Computer Science: cache invalidation and naming things.

> Phil Karlton

There are several display strings you will need to write in the course of plugin development. To ensure your plugin users have a consistent experience with the rest of the Lighthouse report, follow these guidelines.

#### Category Titles

Write category titles that are short (fewer than 20 characters), ideally a single word or acronym. Avoid unnecessary prefixes like "Lighthouse" or "Plugin" which will already be clear from the context of the report.

#### Category Descriptions

Write category descriptions that provide context for your plugin's audits and link to where users can learn more or ask questions about their advice.

#### Audit Titles

Write audit titles in the _present_ tense that _describe_ what the page is successfully or unsuccessfully doing.

**DO**

> Document has a `<title>` element

> Document does not have a `<title>` element

> Uses HTTPS

> Does not use HTTPS

> Tap targets are sized appropriately

> Tap targets are not sized appropriately

**DON'T**

> Good job on `alt` attributes

> Fix your headers

#### Audit Descriptions

Write audit descriptions that provide brief context for why the audit is important and link to more detailed guides on how to follow its advice. Markdown links are supported, so use them!

**DO**

> Interactive elements like buttons and links should be large enough (48x48px), and have enough space around them, to be easy enough to tap without overlapping onto other elements. [Learn more](https://developers.google.com/web/fundamentals/accessibility/accessible-styles#multi-device_responsive_design).

> All sites should be protected with HTTPS, even ones that don't handle sensitive data. HTTPS prevents intruders from tampering with or passively listening in on the communications between your app and your users, and is a prerequisite for HTTP/2 and many new web platform APIs. [Learn more](https://developers.google.com/web/tools/lighthouse/audits/https).

**DON'T**

> Images need alt attributes.

> 4.8.4.4 Requirements for providing text to act as an alternative for images

> Except where otherwise specified, the alt attribute.... 10,000 words later... and that is everything you need to know about the `alt` attribute!

### Scoring

1. Weight each audit by its importance.

1. Differentiate scores within an audit by returning a number _between_ `0` and `1`. Scores greater than `0.9` will be hidden in the "Passed Audits" section by default.

1. Avoid inflating scores unnecessarily: when an audit's advice doesn't apply, mark it as not applicable with `return {score: null, notApplicable: true}` instead of returning a passing score.
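The last two points can be sketched in a single scoring function. This is an illustrative helper, not part of the Lighthouse API; it assumes image objects with a `src` string, as in the `ImageElements` example earlier, and the name `scoreCatImages` is hypothetical.

```javascript
// Score a page by the fraction of its images that look like cat images.
function scoreCatImages(images) {
  // Nothing to check: report "not applicable" rather than a free pass.
  if (images.length === 0) {
    return {score: null, notApplicable: true};
  }
  const catImages = images.filter(image => image.src.toLowerCase().includes('cat'));
  return {
    // A value between 0 and 1 differentiates "almost there" from "nowhere close".
    score: catImages.length / images.length,
    numericValue: catImages.length,
  };
}

console.log(scoreCatImages([{src: 'https://example.com/cat.png'}, {src: 'https://example.com/dog.png'}]));
console.log(scoreCatImages([]));
```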

### Common Mistakes

The web is a diverse place, and your plugin will be run on pages you never thought existed. Here are a few things to keep in mind when writing your audit to avoid common bugs. The Lighthouse team has made all of the mistakes below, so you're in good company!

#### Forgetting to Filter

Most audits will have a specific use case in mind that will apply to most elements or requests, but there are corner cases that come up fairly frequently that are easy to forget.

**Examples:**

- Non-network network requests (`blob:`, `data:`, `file:`, etc)

- Non-javascript scripts (`type="x-shader/x-vertex"`, `type="application/ld+json"`, etc)

- Tracking pixel images (images with size 1x1, 0x0, etc)
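The corner cases above can be filtered with small predicates like the following. This is a sketch: the helper names are hypothetical, the image shape (`width`, `height`) is an assumption rather than a documented artifact shape, and the list of JavaScript MIME types is not exhaustive.

```javascript
const NON_NETWORK_PROTOCOLS = new Set(['blob:', 'data:', 'file:']);

// True when the URL was actually fetched over the network.
function isNetworkUrl(url) {
  try {
    return !NON_NETWORK_PROTOCOLS.has(new URL(url).protocol);
  } catch {
    return false; // Unparseable URLs are treated as non-network.
  }
}

// True for classic and module scripts; false for data blocks such as JSON-LD.
function isJavaScriptType(type) {
  // A missing or empty `type` attribute means classic JavaScript.
  const normalized = (type || '').trim().toLowerCase();
  return normalized === '' || normalized === 'module' ||
    normalized === 'text/javascript' || normalized === 'application/javascript';
}

// True for 1x1 and 0x0 images, which are usually tracking pixels.
function isTrackingPixel(image) {
  return image.width <= 1 && image.height <= 1;
}

const urls = ['https://example.com/app.js', 'data:text/plain,hello', 'blob:https://example.com/1234'];
console.log(urls.filter(isNetworkUrl));
```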

#### Forgetting to Normalize

Most artifacts will try to represent as truthfully as possible what was observed from the page. When possible, the values are normalized according to the spec as you would access them from the DOM, but typically no transformation beyond this is done. This means that some values will have leading or trailing whitespace, be mixed-case, be missing entirely, be relative URLs instead of absolute ones, etc.

**Examples:**

- Header names and values

- Script `type` values

- Script `src` values
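A minimal sketch of normalizing such values before comparing them. The helper names are hypothetical, and the header shape (`{name, value}`) follows the network request example earlier in this handbook.

```javascript
// Case- and whitespace-insensitive header lookup; returns the trimmed value.
function findHeader(headers, name) {
  const wanted = name.trim().toLowerCase();
  const match = (headers || []).find(
    header => header.name.trim().toLowerCase() === wanted);
  return match ? match.value.trim() : undefined;
}

// Resolve a possibly relative, possibly padded `src` against the page URL.
function toAbsoluteUrl(src, baseUrl) {
  if (!src) return undefined; // Inline scripts have no `src`.
  try {
    return new URL(src.trim(), baseUrl).href;
  } catch {
    return undefined;
  }
}

const headers = [{name: ' Content-Type', value: ' text/html '}];
console.log(findHeader(headers, 'content-type'));
console.log(toAbsoluteUrl('  /js/app.js ', 'https://example.com/page/'));
```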

## Examples

- [Cinememe Plugin](https://github.com/exterkamp/lighthouse-plugin-cinememe) - Find and reward dank cinememes (5MB+ animated GIFs ;)

- [YouTube Embed](https://github.com/connorjclark/lighthouse-plugin-yt) - Identifies YouTube embeds

- [Lighthouse Plugin Recipe](./recipes/lighthouse-plugin-example)

- [Field Performance](https://github.com/treosh/lighthouse-plugin-field-performance) - A plugin to gather and display Chrome UX Report field data

- [Publisher Ads Audits](https://github.com/googleads/pub-ads-lighthouse-plugin) - a well-written, but complex, plugin

- [Green Web Foundation](https://github.com/thegreenwebfoundation/lighthouse-plugin-greenhouse) - A plugin to see which domains run on renewable power.

- [requests-content-md5](https://www.npmjs.com/package/lighthouse-plugin-md5) - Generates MD5 hashes from the content of network requests.

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/puppeteer.md

# Using Puppeteer with Lighthouse

## Recipes

### [Using Puppeteer for authenticated pages](./recipes/auth/README.md)

### [Using Puppeteer in a custom gatherer](https://github.com/GoogleChrome/lighthouse/tree/main/docs/recipes/custom-gatherer-puppeteer)

## General Process

### Option 1: Launch Chrome with Puppeteer and handoff to Lighthouse

The example below shows how to inject CSS into the page before Lighthouse audits the page.

A similar approach can be taken for injecting JavaScript.

```js

import puppeteer from 'puppeteer';
import lighthouse from 'lighthouse';

const url = 'https://chromestatus.com/features';

// Use Puppeteer to launch headless Chrome
// - Omit `--enable-automation` (See https://github.com/GoogleChrome/lighthouse/issues/12988)
// - Don't use 800x600 default viewport
const browser = await puppeteer.launch({
  // Set to false if you want to see the script in action.
  headless: 'new',
  defaultViewport: null,
  ignoreDefaultArgs: ['--enable-automation']
});
const page = await browser.newPage();

// Wait for Lighthouse to open url, then inject our stylesheet.
browser.on('targetchanged', async target => {
  if (page && page.url() === url) {
    await page.addStyleTag({content: '* {color: red}'});
  }
});

// Lighthouse will open the URL.
// Puppeteer will observe `targetchanged` and inject our stylesheet.
const {lhr} = await lighthouse(url, undefined, undefined, page);

console.log(`Lighthouse scores: ${Object.values(lhr.categories).map(c => c.score).join(', ')}`);

await browser.close();

```

### Option 2: Launch Chrome with Lighthouse/chrome-launcher and handoff to Puppeteer

When using Lighthouse programmatically, you'll often use chrome-launcher to launch Chrome.

Puppeteer can reconnect to this existing browser instance like so:

```js

import chromeLauncher from 'chrome-launcher';
import puppeteer from 'puppeteer';
import lighthouse from 'lighthouse';

const url = 'https://chromestatus.com/features';

// Launch chrome using chrome-launcher.
const chrome = await chromeLauncher.launch();

// Connect to it using puppeteer.connect().
const resp = await fetch(`http://localhost:${chrome.port}/json/version`);
const {webSocketDebuggerUrl} = await resp.json();
const browser = await puppeteer.connect({browserWSEndpoint: webSocketDebuggerUrl});
const page = await browser.newPage();

// Run Lighthouse.
const {lhr} = await lighthouse(url, undefined, undefined, page);

console.log(`Lighthouse scores: ${Object.values(lhr.categories).map(c => c.score).join(', ')}`);

await browser.disconnect();
chrome.kill();

```

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/readme.md

This directory contains useful documentation, examples (keep reading),

and [recipes](./recipes/) to get you started. For an overview of Lighthouse's

internals, see [Lighthouse Architecture](architecture.md).

## Using programmatically

The example below shows how to run Lighthouse programmatically as a Node module. It

assumes you've installed Lighthouse as a dependency (`yarn add --dev lighthouse`).

```js

import fs from 'fs';
import lighthouse from 'lighthouse';
import * as chromeLauncher from 'chrome-launcher';

const chrome = await chromeLauncher.launch({chromeFlags: ['--headless']});
const options = {logLevel: 'info', output: 'html', onlyCategories: ['performance'], port: chrome.port};
const runnerResult = await lighthouse('https://example.com', options);

// `.report` is the HTML report as a string
const reportHtml = runnerResult.report;
fs.writeFileSync('lhreport.html', reportHtml);

// `.lhr` is the Lighthouse Result as a JS object
console.log('Report is done for', runnerResult.lhr.finalDisplayedUrl);
console.log('Performance score was', runnerResult.lhr.categories.performance.score * 100);

chrome.kill();

```

### Performance-only Lighthouse run

Many modules consuming Lighthouse are only interested in the performance numbers.

You can limit the audits you run to a particular category or set of audits.

```js

const flags = {onlyCategories: ['performance']};

await lighthouse(url, flags);

```

You can also craft your own config (e.g. [experimental-config.js](https://github.com/GoogleChrome/lighthouse/blob/main/core/config/experimental-config.js)) for custom runs. Also see the [basic custom audit recipe](https://github.com/GoogleChrome/lighthouse/tree/main/docs/recipes/custom-audit).

### Differences from CLI flags

Note that some flag functionality is only available to the CLI. The set of shared flags that work in both node and CLI can be found [in our typedefs](https://github.com/GoogleChrome/lighthouse/blob/main/types/lhr/settings.d.ts#:~:text=interface%20SharedFlagsSettings). In most cases, the functionality is not offered in the node module simply because it is easier and more flexible to do it yourself.

| CLI Flag | Differences in Node |
| - | - |
| `port` | Only specifies which port to use, Chrome is not launched for you. |
| `chromeFlags` | Ignored, Chrome is not launched for you. |
| `outputPath` | Ignored, output is returned as string in `.report` property. |
| `saveAssets` | Ignored, artifacts are returned in `.artifacts` property. |
| `view` | Ignored, use the `open` npm module if you want this functionality. |
| `enableErrorReporting` | Ignored, error reporting is always disabled for node. |
| `listAllAudits` | Ignored, not relevant in programmatic use. |
| `listTraceCategories` | Ignored, not relevant in programmatic use. |
| `configPath` | Ignored, pass the config in as the 3rd argument to `lighthouse`. |
| `preset` | Ignored, pass the config in as the 3rd argument to `lighthouse`. |
| `verbose` | Ignored, use `logLevel` instead. |
| `quiet` | Ignored, use `logLevel` instead. |

### Turn on logging

If you want to see log output as Lighthouse runs, set an appropriate logging level in your code and pass

the `logLevel` flag when calling `lighthouse`.

```javascript

const flags = {logLevel: 'info'};

await lighthouse('https://example.com', flags);

```

## Configuration

In order to extend the Lighthouse configuration programmatically, you need to pass the config object as the 3rd argument. If omitted, a default configuration is used.

**Example:**

```js
{
  extends: 'lighthouse:default',
  settings: {
    onlyAudits: [
      'speed-index',
      'interactive',
    ],
  },
}
```

You can extend base configuration from [lighthouse:default](https://github.com/GoogleChrome/lighthouse/blob/main/core/config/default-config.js), or you can build up your own configuration from scratch to have complete control.

For more information on the types of config you can provide, see [Lighthouse Configuration](https://github.com/GoogleChrome/lighthouse/blob/main/docs/configuration.md).
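To show where the config goes in a call, here is a minimal sketch. It assumes the `lighthouse` package is installed and that Chrome is already listening on the given debugging port; `runWithConfig` is a hypothetical wrapper name, not a Lighthouse API.

```javascript
// Config from the example above: extend the defaults, run only two audits.
const config = {
  extends: 'lighthouse:default',
  settings: {
    onlyAudits: ['speed-index', 'interactive'],
  },
};

// Sketch only: assumes `lighthouse` is installed and Chrome is already
// listening on `port` (the Node module does not launch Chrome for you).
async function runWithConfig(url, port) {
  const {default: lighthouse} = await import('lighthouse');
  const flags = {port, logLevel: 'info'};
  // The config object is passed as the 3rd argument.
  const result = await lighthouse(url, flags, config);
  return result.lhr.audits;
}
```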

## Testing on a site with authentication

When installed globally via `npm i -g lighthouse` or `yarn global add lighthouse`, `chrome-debug` is added to your `PATH`. This binary launches a standalone Chrome instance with an open debugging port.

1. Run `chrome-debug`. This will log the debugging port of your Chrome instance.

1. Navigate to your site and log in.

1. In a separate terminal tab, run `lighthouse http://mysite.com --port port-number` using the port number from chrome-debug.
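
The same flow can be driven from Node by pointing Lighthouse at the already-running, logged-in Chrome instance. This is a rough sketch, assuming the `lighthouse` package is installed; `auditLoggedInSite` is a hypothetical function name and the port check is an extra safeguard, not something Lighthouse requires.

```javascript
// Sketch only: audits a page in a Chrome instance you have already logged into.
// `port` is the debugging port printed by `chrome-debug`.
async function auditLoggedInSite(url, port) {
  if (!Number.isInteger(port) || port < 1 || port > 65535) {
    throw new RangeError(`Invalid debugging port: ${port}`);
  }
  const {default: lighthouse} = await import('lighthouse');
  // `port` only selects the Chrome instance to connect to; nothing is launched.
  const result = await lighthouse(url, {port});
  return result.report;
}
```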

## Testing on a site with an untrusted certificate

When testing a site with an untrusted certificate, Chrome will be unable to load the page and so the Lighthouse report will mostly contain errors.

If this certificate **is one you control** and is necessary for development (for instance, `localhost` with a self-signed certificate for local HTTP/2 testing), we recommend you _add the certificate to your locally-trusted certificate store_. In Chrome, see `Settings` > `Privacy and Security` > `Manage certificates` or consult instructions for adding to the certificate store in your operating system.

Alternatively, you can instruct Chrome to ignore the invalid certificate by adding the Lighthouse CLI flag `--chrome-flags="--ignore-certificate-errors"`. However, you must be very careful with this flag, as it's equivalent to browsing the web with TLS disabled. Any content loaded by the test page (e.g. third-party scripts or iframed ads) will *also* not be subject to certificate checks, [opening up avenues for MitM attacks](https://www.chromium.org/Home/chromium-security/education/tls#TOC-What-security-properties-does-TLS-give-me-). For these reasons, we recommend the earlier solution of adding the certificate to your local cert store.

## Testing on a mobile device

Lighthouse can run against a real mobile device. You can follow the [Remote Debugging on Android (Legacy Workflow)](https://developer.chrome.com/devtools/docs/remote-debugging-legacy) up through step 3.3, but the TL;DR is: install and run adb, enable USB debugging, then forward port 9222 from the device to the machine running Lighthouse.

You'll likely want to use the CLI flags `--screenEmulation.disabled --throttling.cpuSlowdownMultiplier=1 --throttling-method=provided` to disable any additional emulation.

```sh
$ adb kill-server

$ adb devices -l
* daemon not running. starting it now on port 5037 *
* daemon started successfully *
00a2fd8b1e631fcb device usb:335682009X product:bullhead model:Nexus_5X device:bullhead

$ adb forward tcp:9222 localabstract:chrome_devtools_remote

$ lighthouse --port=9222 --screenEmulation.disabled --throttling.cpuSlowdownMultiplier=1 --throttling-method=provided https://example.com
```
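
For programmatic runs, the same options can be written as a flags object. This is a sketch under the assumption that the device's debugging port has already been forwarded to local port 9222 and that the `lighthouse` package is installed; `deviceFlags` and `auditOnDevice` are hypothetical names.

```javascript
// Node equivalent of the CLI flags above. Assumes the device's debugging port
// has already been forwarded to local port 9222 with `adb forward`.
const deviceFlags = {
  port: 9222,
  screenEmulation: {disabled: true},      // --screenEmulation.disabled
  throttling: {cpuSlowdownMultiplier: 1}, // --throttling.cpuSlowdownMultiplier=1
  throttlingMethod: 'provided',           // --throttling-method=provided
};

// Sketch only: runs Lighthouse against the forwarded port.
async function auditOnDevice(url) {
  const {default: lighthouse} = await import('lighthouse');
  const result = await lighthouse(url, deviceFlags);
  return result.lhr;
}
```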

## Lighthouse as trace processor

Lighthouse can be used to analyze trace and performance data collected from other tools (like WebPageTest and ChromeDriver). The `Trace` and `DevtoolsLog` artifact items can be provided using a string for the absolute path on disk if they're saved with `.trace.json` and `.devtoolslog.json` file extensions, respectively. The `DevtoolsLog` array is captured from the `Network` and `Page` domains (a la ChromeDriver's [enableNetwork and enablePage options](https://sites.google.com/a/chromium.org/chromedriver/capabilities#TOC-perfLoggingPrefs-object)).

As an example, here's a trace-only run that reports on user timings and critical request chains:

### `config.json`

```json
{
  "settings": {
    "auditMode": "/User/me/lighthouse/core/test/fixtures/artifacts/perflog/"
  },
  "audits": [
    "user-timings",
    "critical-request-chains"
  ],
  "categories": {
    "performance": {
      "name": "Performance Metrics",
      "description": "These encapsulate your web app's performance.",
      "audits": [
        {"id": "user-timings", "weight": 1},
        {"id": "critical-request-chains", "weight": 1}
      ]
    }
  }
}
```

Then, run with: `lighthouse --config-path=config.json http://www.random.url`
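
Before a trace-only run, it can help to confirm that the folder actually contains files with the expected extensions. The helper below is a hypothetical sketch, not part of Lighthouse, and the file names in the example are made up.

```javascript
// Hypothetical helper, not part of Lighthouse: given the file names in an
// artifacts folder, pick out the ones with the extensions described above.
function findTraceArtifacts(fileNames) {
  return {
    traces: fileNames.filter((name) => name.endsWith('.trace.json')),
    devtoolsLogs: fileNames.filter((name) => name.endsWith('.devtoolslog.json')),
  };
}

// Example with made-up file names.
const found = findTraceArtifacts([
  'artifacts.json',
  'example.trace.json',
  'example.devtoolslog.json',
]);
const ready = found.traces.length > 0 && found.devtoolsLogs.length > 0;
console.log(ready ? 'trace and devtools log found' : 'missing files');
```

In practice the list of names could come from `fs.readdirSync()` on the folder given in `auditMode`.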

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/recipes/custom-audit/readme.md

# Basic Custom Audit Recipe

> **Tip**: see [Lighthouse Architecture](../../../docs/architecture.md) for information on terminology and architecture.

## What this example does

This example shows how to write a custom Lighthouse audit for a hypothetical search page. The page is considered fully initialized when the main search box (the page's "hero element") is ready to be used. When this happens, the page uses `performance.now()` to mark the time it took to become ready and saves the value in a global variable called `window.myLoadMetrics.searchableTime`.
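
On the page side, recording the metric could look like the sketch below. `markSearchable` is a hypothetical function name; `win` and `perf` stand in for the browser's `window` and `performance` objects so the function can also be exercised outside a browser.

```javascript
// Page-side sketch: record when the search box became usable.
// `win` and `perf` stand in for the browser's `window` and `performance`.
function markSearchable(win, perf) {
  win.myLoadMetrics = win.myLoadMetrics || {};
  win.myLoadMetrics.searchableTime = perf.now();
  return win.myLoadMetrics.searchableTime;
}

// In the page, once the search box is ready:
// markSearchable(window, performance);
```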

## The Audit, Gatherer, and Config

- [searchable-gatherer.js](searchable-gatherer.js) - a [Gatherer](https://github.com/GoogleChrome/lighthouse/blob/main/docs/architecture.md#components--terminology) that collects `window.myLoadMetrics.searchableTime` from the context of the page.

- [searchable-audit.js](searchable-audit.js) - an [Audit](https://github.com/GoogleChrome/lighthouse/blob/main/docs/architecture.md#components--terminology) that tests whether or not `window.myLoadMetrics.searchableTime` stays below a 4000ms threshold. In other words, Lighthouse will consider the audit "passing" in the report if the search box initializes within 4s.

- [custom-config.js](custom-config.js) - this file tells Lighthouse where to find the gatherer and audit files, when to run them, and how to incorporate their output into the Lighthouse report. This example extends [Lighthouse's default configuration](https://github.com/GoogleChrome/lighthouse/blob/main/core/config/default-config.js).
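
The pass/fail decision made by the audit can be sketched as a small function. This is an illustration of the threshold check only, not the contents of `searchable-audit.js`; `scoreSearchableTime` is a hypothetical name, and treating exactly 4000ms as passing is an assumption.

```javascript
const MAX_SEARCHABLE_TIME = 4000; // ms, the threshold described above

// Sketch of the pass/fail decision; `searchableTime` is the value the
// gatherer collected. Treating exactly 4000ms as passing is an assumption.
function scoreSearchableTime(searchableTime) {
  const passed = searchableTime <= MAX_SEARCHABLE_TIME;
  return {numericValue: searchableTime, score: Number(passed)};
}

console.log(scoreSearchableTime(2500).score); // passing value
console.log(scoreSearchableTime(6000).score); // failing value
```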

**Note**: when extending the default configuration file, all arrays will be concatenated and primitive values will override the defaults.
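
To make the merge rule concrete, here is an illustration of those semantics. It is not Lighthouse's actual merge code; `mergeConfig` and `isPlainObject` are hypothetical helpers, and the key-by-key merging of nested objects is an assumption added for the example.

```javascript
// Illustration only, not Lighthouse's actual merge code: arrays are
// concatenated, primitives override, and (as an assumption) nested
// objects are merged key by key.
function mergeConfig(base, extension) {
  if (Array.isArray(base) && Array.isArray(extension)) {
    return [...base, ...extension];
  }
  if (isPlainObject(base) && isPlainObject(extension)) {
    const merged = {...base};
    for (const [key, value] of Object.entries(extension)) {
      merged[key] = key in base ? mergeConfig(base[key], value) : value;
    }
    return merged;
  }
  return extension;
}

function isPlainObject(value) {
  return typeof value === 'object' && value !== null && !Array.isArray(value);
}

// Made-up values: the audit lists are joined, the primitive is replaced.
const mergedExample = mergeConfig(
  {audits: ['a'], settings: {throttlingMethod: 'simulate'}},
  {audits: ['b'], settings: {throttlingMethod: 'provided'}}
);
console.log(JSON.stringify(mergedExample));
```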

## Run the configuration

Run Lighthouse with the custom audit by using the `--config-path` flag with your configuration file:

```sh

lighthouse --config-path=custom-config.js https://example.com

```

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/recipes/custom-gatherer-puppeteer/readme.md

# Using Puppeteer in a Gatherer

> **Tip**: see [Basic Custom Audit Recipe](../custom-audit) for basic information about custom audits.

```sh

lighthouse --config-path=custom-config.js https://www.example.com

```

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/recipes/lighthouse-plugin-example/readme.md

# Lighthouse plugin recipe

The result of this guide can be found at our [Lighthouse Plugin GitHub repository template](https://github.com/GoogleChrome/lighthouse-plugin-example)

## Contents

- `package.json` - declares the plugin's entry point (`plugin.js`)

- `plugin.js` - instructs Lighthouse to run the plugin's own `preload-as.js` audit; describes the new category and its details for the report

- `audits/preload-as.js` - the new audit to run in addition to Lighthouse's default audits
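
As a rough picture of what `plugin.js` contains, the sketch below shows the general shape of a plugin definition. The `title` and `description` strings are placeholders rather than the recipe's actual text, and in the real file this object would be the module's export rather than a local constant.

```javascript
// Sketch of the plugin definition; in `plugin.js` this object would be the
// module's export. Title and description are placeholder strings.
const plugin = {
  // The plugin's own audit, run in addition to Lighthouse's default audits.
  audits: [{path: 'lighthouse-plugin-example/audits/preload-as.js'}],

  // The new category shown in the report.
  category: {
    title: 'Example plugin category',
    description: 'Placeholder description of what this category checks.',
    auditRefs: [{id: 'preload-as', weight: 1}],
  },
};

console.log(plugin.category.auditRefs.map((ref) => ref.id));
```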

## To develop as a plugin developer

Run the following to start off with the recipe as a template:

```sh
mkdir lighthouse-plugin-example && cd lighthouse-plugin-example
# Fetch the gzipped tarball (rather than the zip) so it can be piped into tar
curl -L https://github.com/GoogleChrome/lighthouse/archive/main.tar.gz | tar -xzvf -
mv lighthouse-main/docs/recipes/lighthouse-plugin-example/* ./
rm -rf lighthouse-main
```

Install and run just your plugin:

```sh

yarn

NODE_PATH=.. npx lighthouse -- https://example.com --plugins=lighthouse-plugin-example --only-categories=lighthouse-plugin-example --view

```

When you rename the plugin, be sure to rename its directory as well.

### Iterating

To speed up development, you can gather once and iterate by auditing repeatedly.

```sh

# Gather artifacts from the browser

NODE_PATH=.. npx lighthouse -- https://example.com --plugins=lighthouse-plugin-example --only-categories=lighthouse-plugin-example --gather-mode

# and then iterate re-running this:

NODE_PATH=.. npx lighthouse -- https://example.com --plugins=lighthouse-plugin-example --only-categories=lighthouse-plugin-example --audit-mode --view

```

Finally, publish to NPM.

## To run as a plugin user

1. Install `lighthouse` (v5+) and the plugin `lighthouse-plugin-example`, likely as `devDependencies`.
   * `npm install -D lighthouse lighthouse-plugin-example`
1. To run your private lighthouse binary, you have three options:
   1. `npx --no-install lighthouse -- https://example.com --plugins=lighthouse-plugin-example --view`
   1. `yarn lighthouse https://example.com --plugins=lighthouse-plugin-example --view`
   1. Add an npm script calling `lighthouse` and run that.

## Result

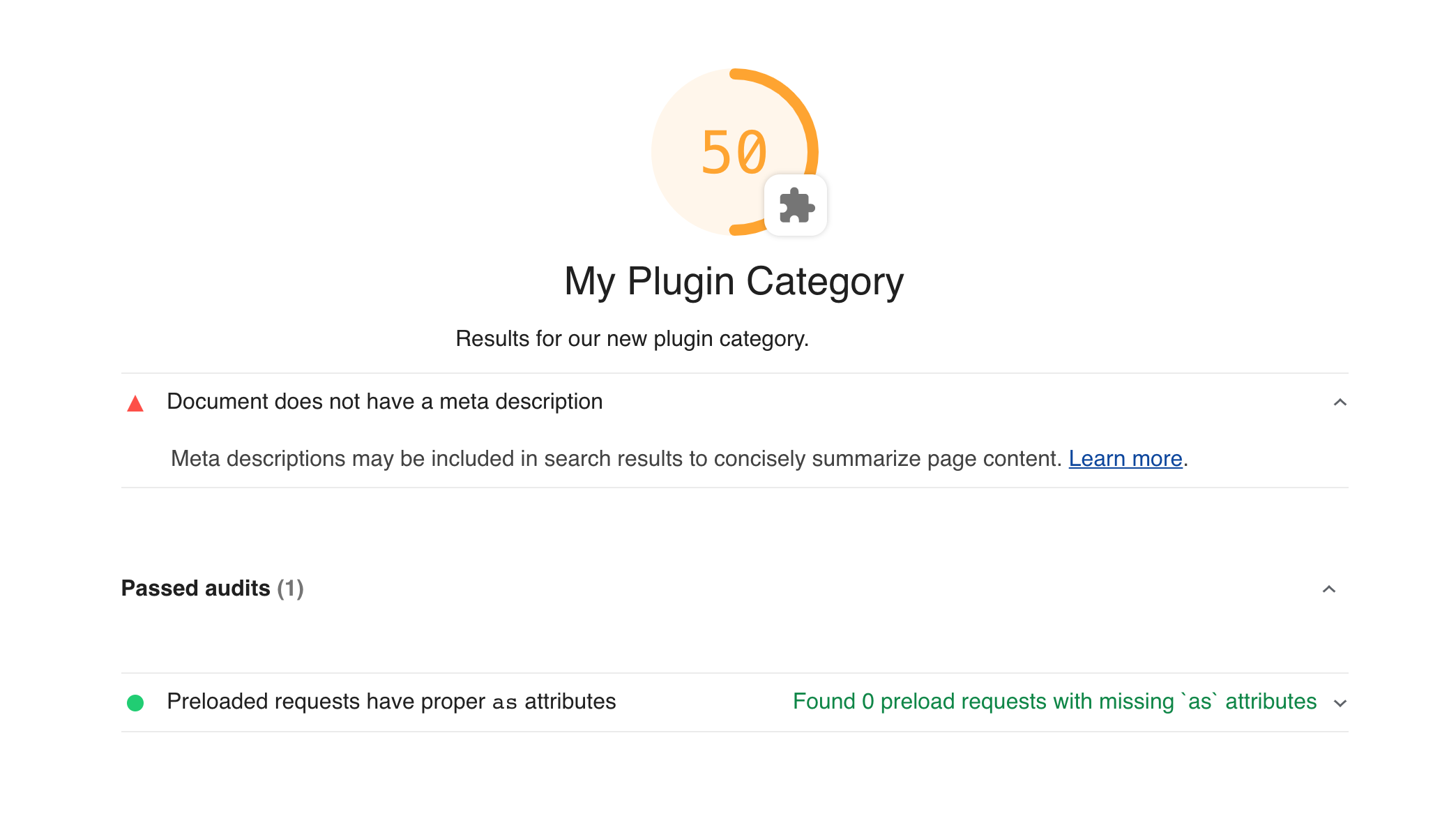

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/recipes/type-checking/readme.md

# Lighthouse type checking recipe

This example project demonstrates how Lighthouse types can be imported into a node project.

`use-types.ts` is a basic user flow script that takes advantage of Lighthouse types and integrates with the version of Puppeteer installed.

---

# Source: https://github.com/GoogleChrome/lighthouse/blob/main/docs/releasing.md

### Release guide for maintainers

This doc is only relevant to core members.

## Release Policy

### Cadence

We aim to release every 4 weeks, one day after the [expected Chromium branch point](https://www.chromium.org/developers/calendar). These are Wednesdays.

Major version bumps will be the first release of April and October. The due date and progress of major milestones are tracked in https://github.com/GoogleChrome/lighthouse/milestones.

For example, following this schedule, we will attempt a release on these dates: