# Kaisar

> Manage AI agents, their configurations, and monitoring.

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/organization/agents.md

# Agents

Manage AI agents, their configurations, and monitoring.

## Overview

Agents are autonomous AI entities configured to perform specific tasks. This section allows you to manage their lifecycle, monitor their performance, and configure their behaviors.

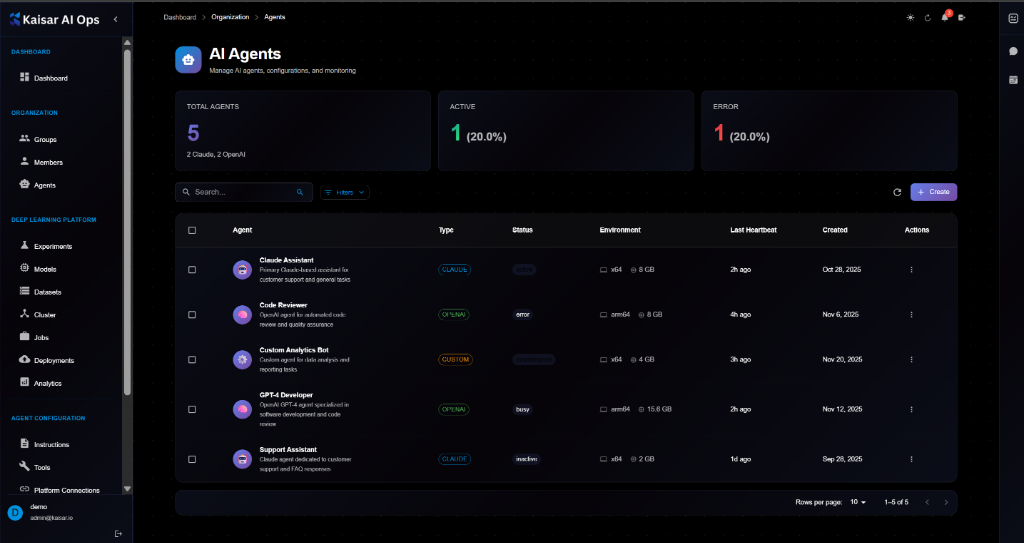

**Dashboard Summary**:

* **Total Agents**: Total number of agents configured

* **Active**: Number of agents currently active

* **Error**: Number of agents in error state

## Agents List

The agents table displays:

* **Agent**: Name and description (e.g., "Claude Assistant", "Code Reviewer")

* **Type**: AI Provider (e.g., CLAUDE, OPENAI, CUSTOM)

* **Status**: Current state (Active, Error, Maintenance, Busy, Inactive)

* **Environment**: Runtime environment (e.g., x64 @ 8 GB)

* **Last Heartbeat**: Time since last signal

* **Created**: Creation date

* **Actions**: Edit, Delete, etc.
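As a rough illustration of how the dashboard summary relates to the table rows, the sketch below derives the Total / Active / Error counts and the "time since last signal" value from a list of agent records. The record layout, the field names, and the `Docs Helper` row are hypothetical; the documentation does not describe an export format or API for this data.

```python
from collections import Counter
from datetime import datetime, timedelta, timezone


def summarize_agents(agents):
    """Count agents for the Total / Active / Error summary cards."""
    counts = Counter(agent["status"] for agent in agents)
    return {
        "total": len(agents),
        "active": counts["Active"],
        "error": counts["Error"],
    }


def time_since_heartbeat(last_heartbeat, now=None):
    """Return the elapsed time since the agent's last signal."""
    now = now or datetime.now(timezone.utc)
    return now - last_heartbeat


# Hypothetical sample rows; field names are illustrative only.
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
agents = [
    {"name": "Claude Assistant", "status": "Active",
     "last_heartbeat": now - timedelta(seconds=30)},
    {"name": "Code Reviewer", "status": "Error",
     "last_heartbeat": now - timedelta(minutes=45)},
    {"name": "Docs Helper", "status": "Inactive",
     "last_heartbeat": now - timedelta(days=2)},
]
summary = summarize_agents(agents)
print(summary)
```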

## Creating an Agent

Navigate to **Organization** → **Agents** → Click **+ Create**

### Basic Information

**Agent Name**\* (Required)

* A unique name to identify this agent

* Example: `Claude Assistant`

**Agent Type**\* (Required)

* Select the AI provider for this agent

* Options: `Claude (Anthropic)`, `OpenAI`, `Custom`

**Description**\* (Required)

* Brief description of what this agent does

**Workspace Path**\* (Required)

* File system path where agent files are stored

* Example: `/workspace/agents/claude-assistant`

### System Prompt

**System Prompt**\* (Required)

* Instructions that define the agent's behavior and personality

* Example: "You are a helpful AI assistant. Always be professional and accurate."

### Configuration

**Model**

* Model identifier (e.g., `claude-3-sonnet`, `gpt-4-turbo`)

**Temperature**

* Controls randomness (0 = deterministic, 1 = creative)

**Max Tokens**

* Maximum response length in tokens

**Anthropic API Version** / **OpenAI Organization**

* Provider-specific settings

**Custom API Endpoint**

* Full URL to your custom AI endpoint (if applicable)

### Environment

* Configure runtime environment variables and resources

### Actions

* **Cancel**: Discard changes

* **Create Agent**: Submit and create the agent

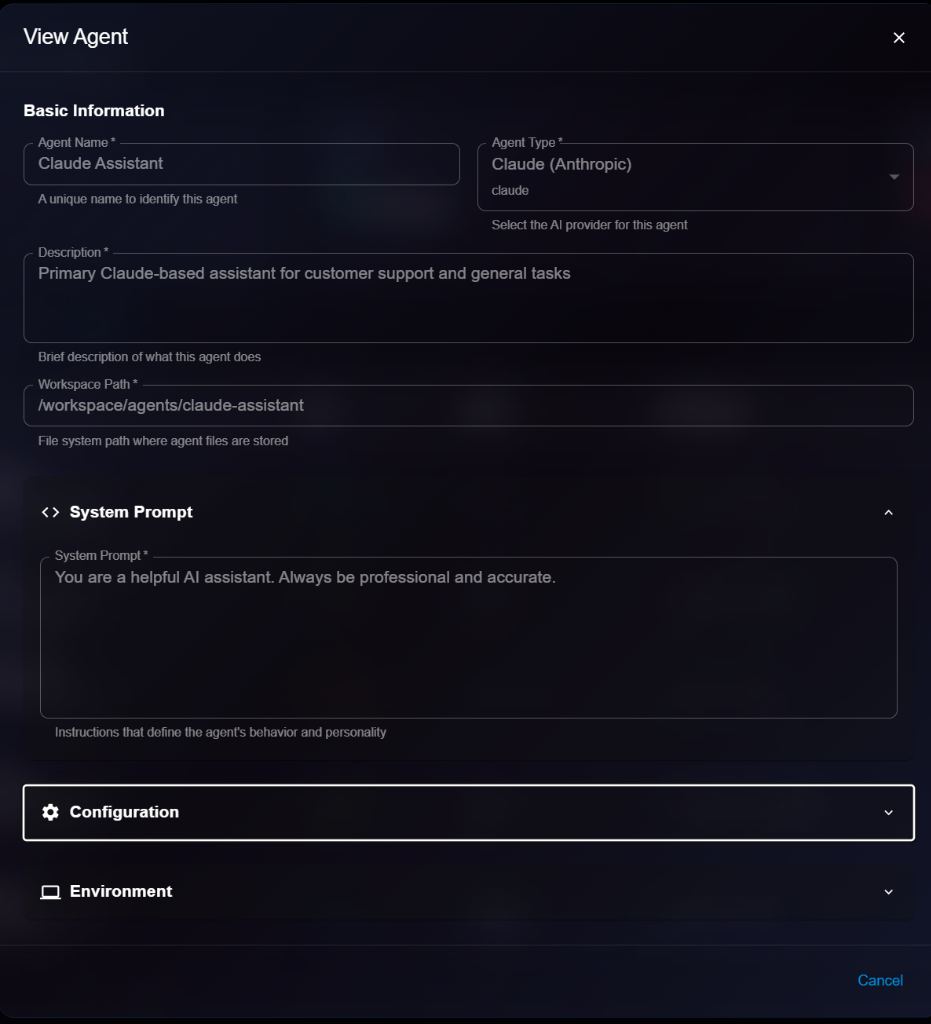

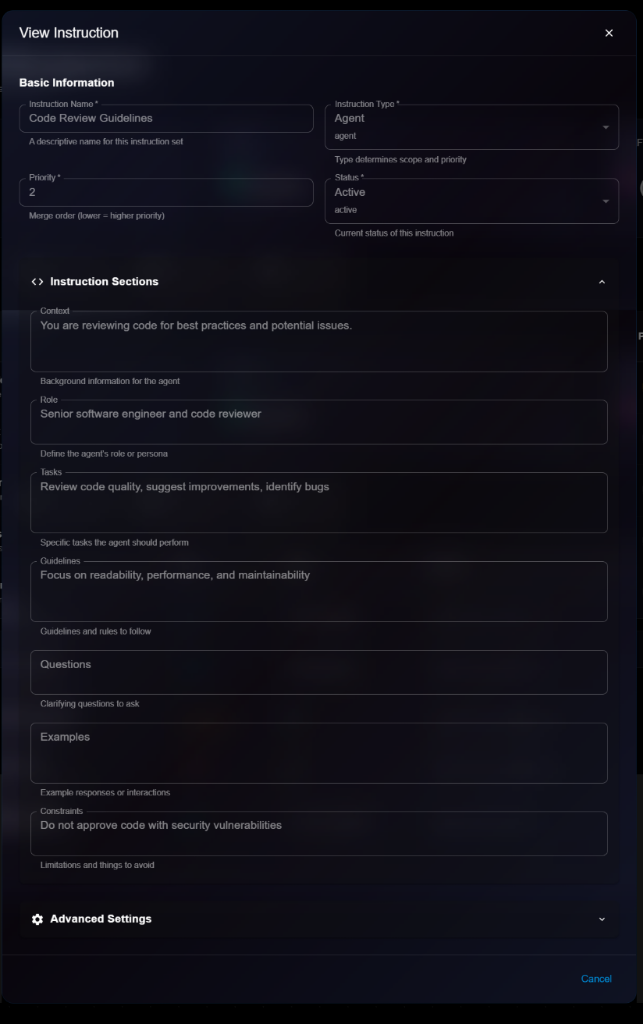

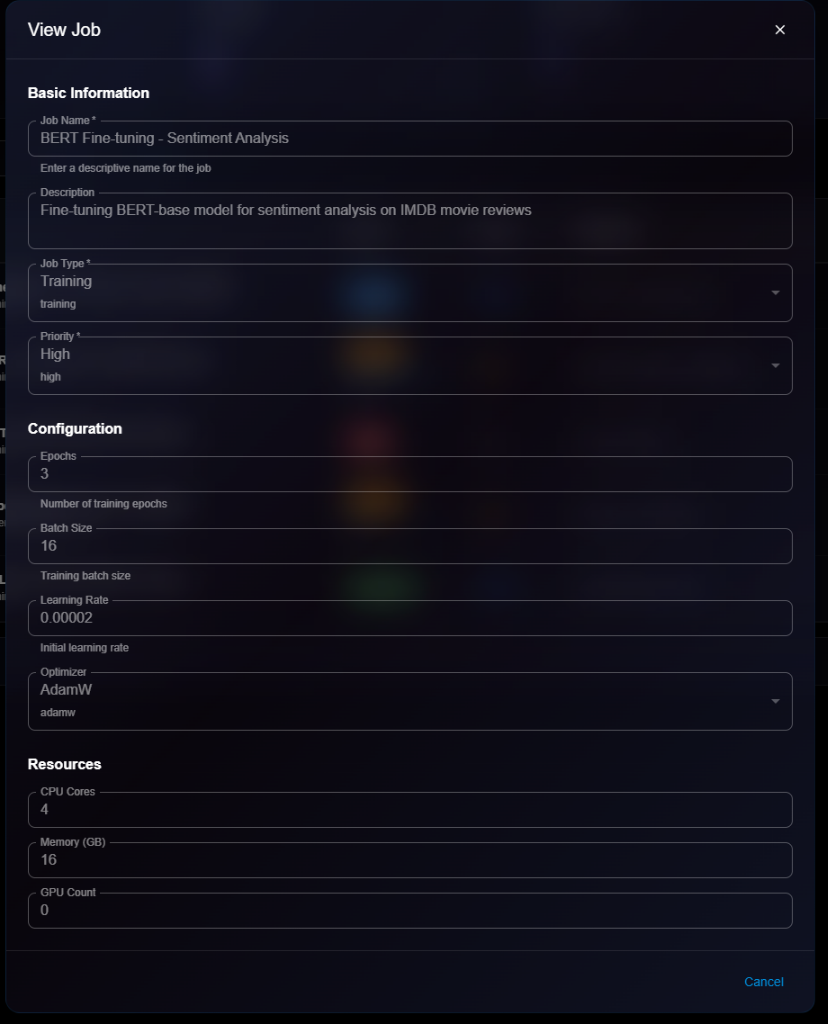
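The form fields above can be pictured as a small data structure with the required-field rules attached. This is only a sketch: the class name `AgentForm`, the default values for temperature and max tokens, and the validation messages are assumptions made for illustration, not part of the Kaisar product or any published API.

```python
from dataclasses import dataclass
from typing import List, Optional

# Provider values as shown in the Type column of the agents table.
AGENT_TYPES = ("CLAUDE", "OPENAI", "CUSTOM")


@dataclass
class AgentForm:
    """Hypothetical mirror of the Create Agent form."""
    name: str
    agent_type: str
    description: str
    workspace_path: str
    system_prompt: str
    model: Optional[str] = None
    temperature: float = 0.7   # assumed default, not documented
    max_tokens: int = 1024     # assumed default, not documented
    custom_api_endpoint: Optional[str] = None

    def validate(self) -> List[str]:
        """Return a list of problems; an empty list means the form is valid."""
        errors = []
        required = {
            "Agent Name": self.name,
            "Agent Type": self.agent_type,
            "Description": self.description,
            "Workspace Path": self.workspace_path,
            "System Prompt": self.system_prompt,
        }
        for label, value in required.items():
            if not value.strip():
                errors.append(f"{label} is required")
        if self.agent_type.strip() and self.agent_type not in AGENT_TYPES:
            errors.append(f"Agent Type must be one of {AGENT_TYPES}")
        # The docs describe temperature on a 0 (deterministic) to 1 (creative) scale.
        if not 0.0 <= self.temperature <= 1.0:
            errors.append("Temperature must be between 0 and 1")
        if self.max_tokens <= 0:
            errors.append("Max Tokens must be positive")
        return errors


form = AgentForm(
    name="Claude Assistant",
    agent_type="CLAUDE",
    description="General-purpose assistant",
    workspace_path="/workspace/agents/claude-assistant",
    system_prompt="You are a helpful AI assistant. Always be professional and accurate.",
    model="claude-3-sonnet",
)
print(form.validate())
```

Collecting all problems in a list, instead of raising on the first one, matches how a form typically reports several invalid fields at once.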

## Viewing Agent Details

To view detailed information about an agent:

1. Navigate to **Organization** → **Agents**

2. Click on an agent from the list

3. View details in the modal dialog

**Details Panel**:

* **Basic Information**: Name, Type, Description, Workspace Path

* **System Prompt**: View the current system prompt

* **Configuration**: View model settings

* **Environment**: View environment settings

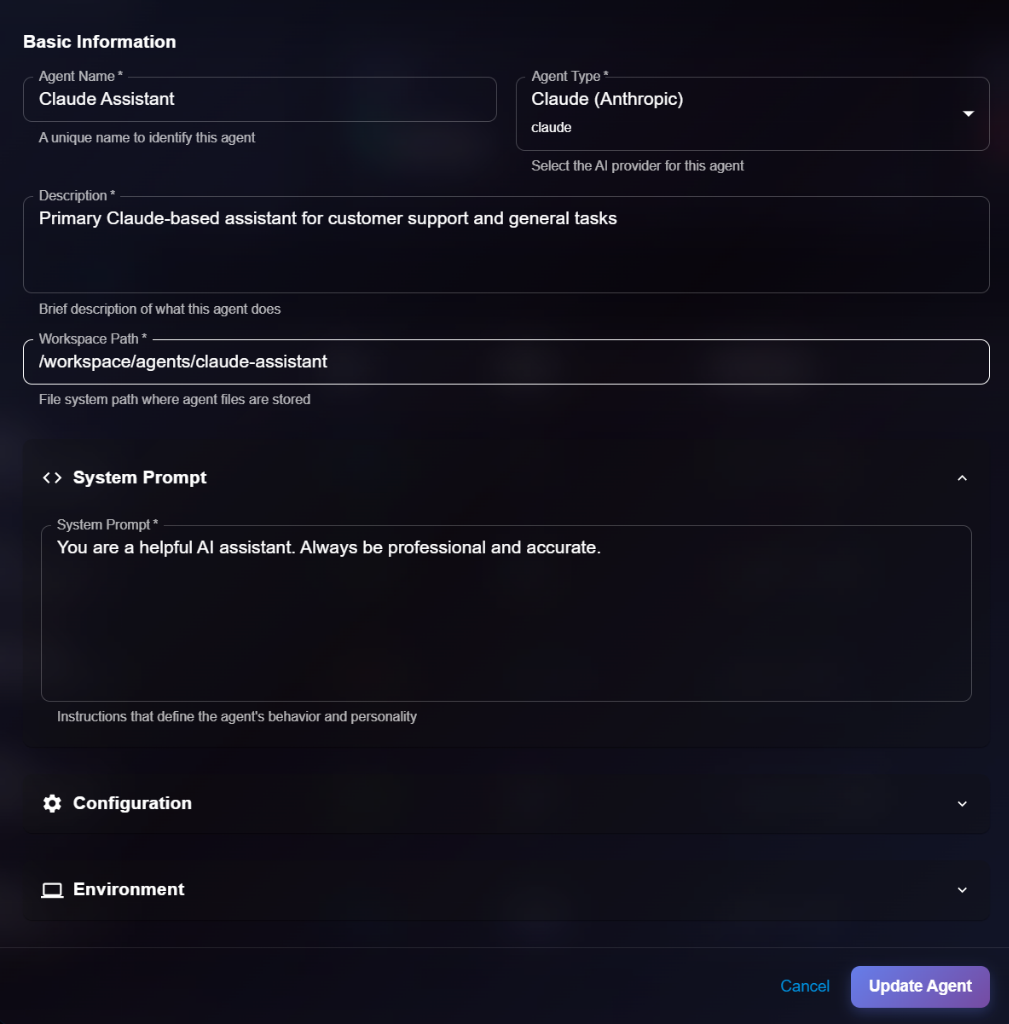

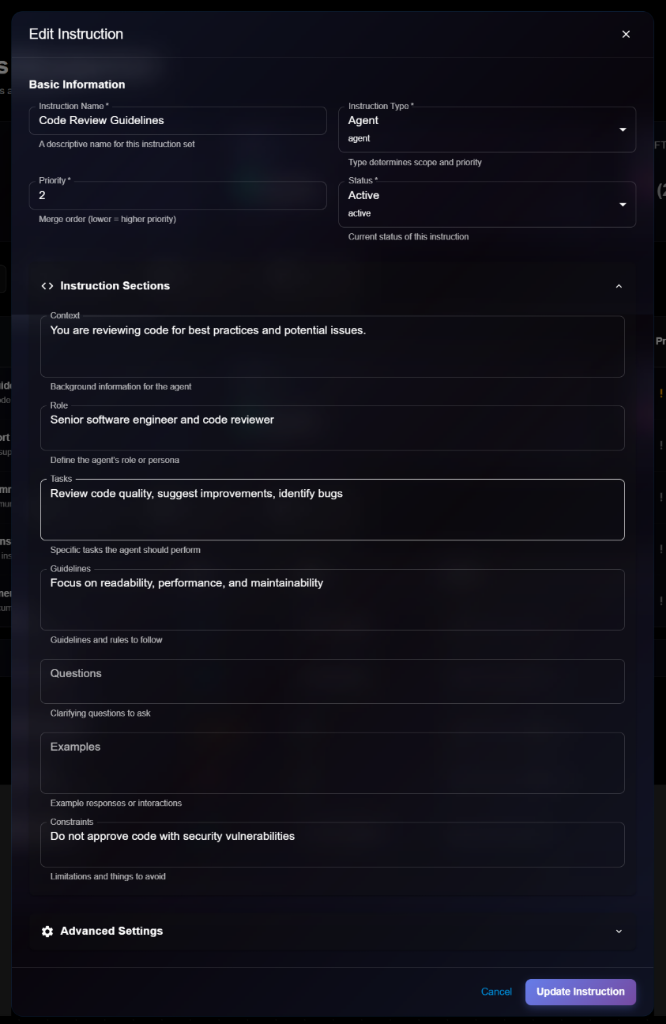

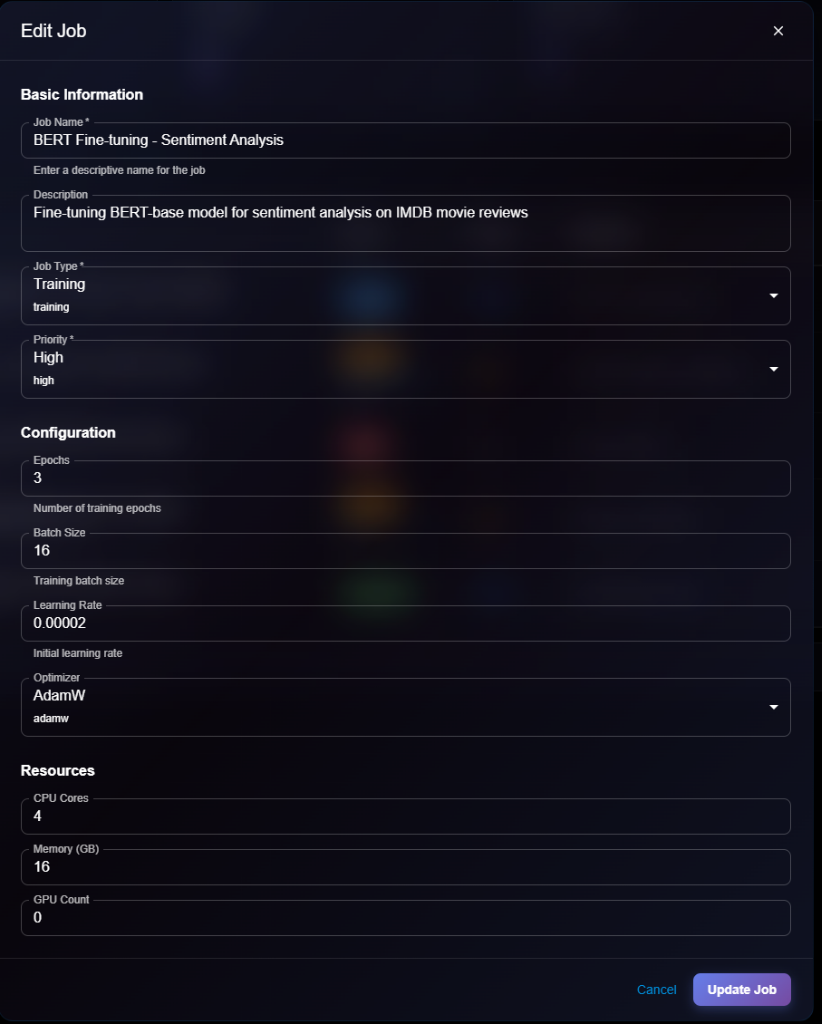

## Editing an Agent

To update an agent's configuration:

1. Open agent details

2. Click **Edit** button (or select Edit from list actions)

3. Modify editable fields in the Edit Agent modal

4. Click **Update Agent** to save changes

**Editable Fields**:

* ✅ Basic Information (Name, Description, Workspace Path)

* ✅ System Prompt

* ✅ Configuration (Model, Temperature, etc.)

* ✅ Environment

## Best Practices

* **Specific Prompts**: Write clear, specific system prompts to guide agent behavior

* **Resource Allocation**: Monitor environment usage and adjust resources as needed

* **Error Monitoring**: Check "Error" status agents immediately to resolve issues

* **Version Control**: Keep track of changes to system prompts and configurations

## Next Steps

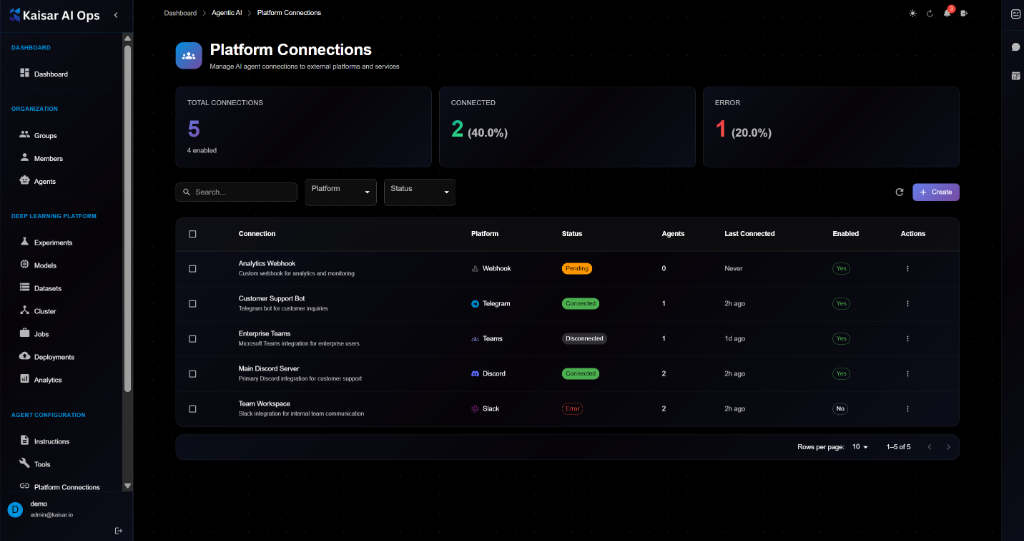

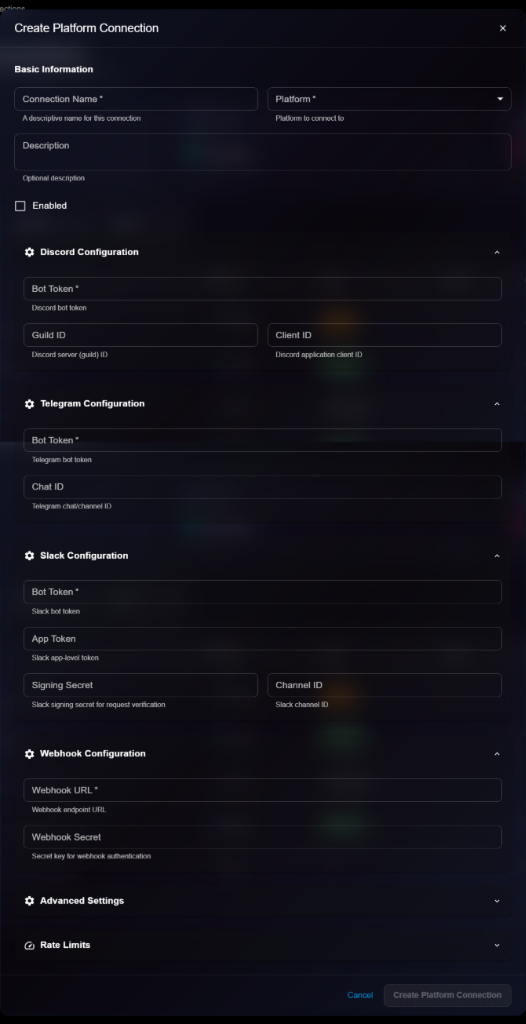

* Configure [Platform Connections](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/agent-configuration/platform-connections) for your agents

* Set up [Tools](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/agent-configuration/tools) for your agents to use

* Monitor agent activity in [Analytics](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/analytics)

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/analytics.md

# Analytics

Monitor and analyze your ML operations with comprehensive analytics and insights.

## Overview

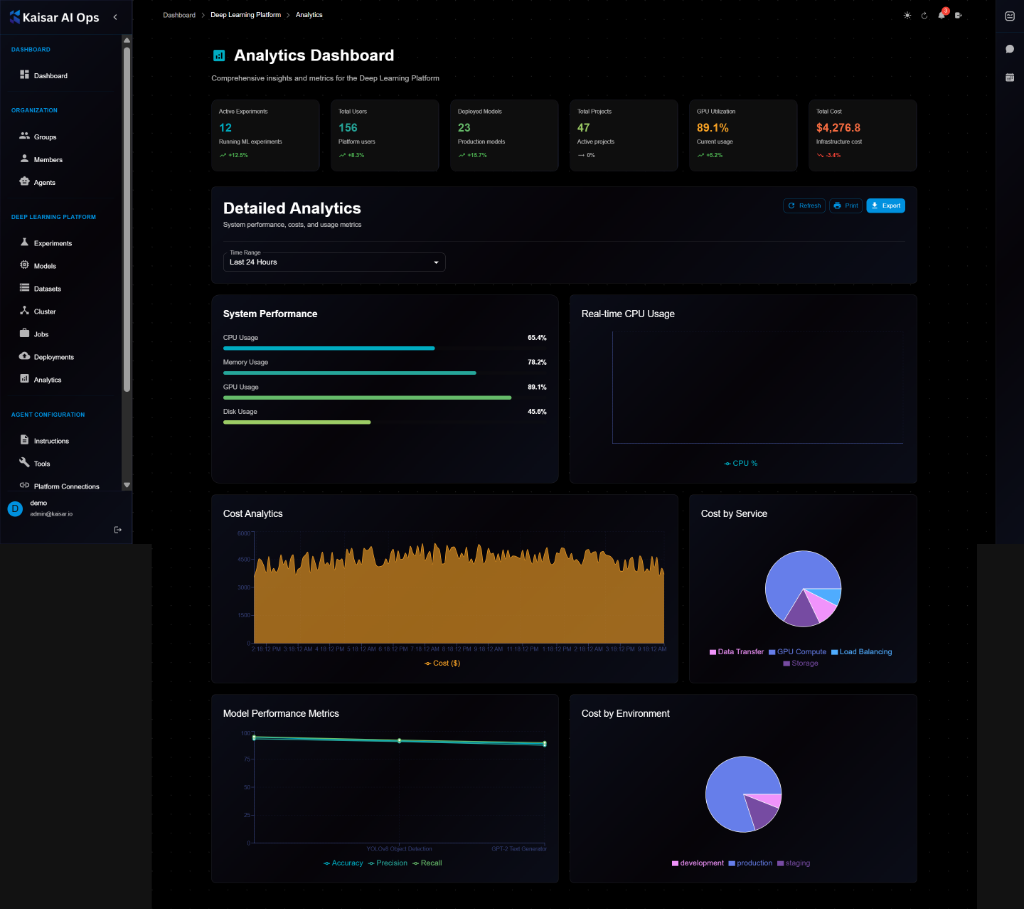

The Analytics Dashboard provides comprehensive insights and metrics for the Deep Learning Platform, helping you track performance, costs, and usage across all your ML operations.

## Dashboard Summary Cards

At the top of the dashboard, you'll see key metrics at a glance:

**Active Experiments**

* **Count**: Number of currently running ML experiments

* **Trend**: Percentage change from previous period

**Total Users**

* **Count**: Total number of platform users

* **Trend**: Percentage change from previous period

**Deployed Models**

* **Count**: Number of models in production

* **Trend**: Percentage change from previous period

**Total Projects**

* **Count**: Number of active projects

* **Trend**: Percentage change from previous period

**GPU Utilization**

* **Percentage**: Current GPU usage percentage

* **Status**: Infrastructure cost with trend indicators

**Total Cost**

* **Amount**: Total infrastructure cost

* **Trend**: Percentage change from previous period
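The **Trend** value on each card is described as a percentage change from the previous period. The sketch below shows the usual formula for that calculation; how the dashboard handles a previous value of zero is not documented, so returning `None` in that case is an assumption.

```python
def percent_change(current, previous):
    """Percentage change from the previous period to the current one."""
    if previous == 0:
        return None  # undefined; assumed behaviour, not documented
    return (current - previous) * 100.0 / previous


# Example: 12 active experiments now versus 10 in the previous period.
print(percent_change(12, 10))
```

A positive result indicates growth over the previous period and a negative result indicates a decline.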

## Detailed Analytics

The dashboard provides detailed analytics with customizable time ranges (Last 24 Hours, Last 7 Days, Last 30 Days, etc.).

**Available Actions**:

* **Refresh**: Update data

* **Print**: Print dashboard

* **Export**: Export analytics data

### System Performance

Real-time monitoring of system resources:

**CPU Usage**

* Current utilization: 83.4%

* Visual progress bar indicator

* Color-coded (cyan)

**Memory Usage**

* Current utilization: 71.2%

* Visual progress bar indicator

* Color-coded (purple)

**GPU Usage**

* Current utilization: 89.1%

* Visual progress bar indicator

* Color-coded (green)

**Disk Usage**

* Current utilization: 45.0%

* Visual progress bar indicator

* Color-coded (lime green)

### Real-time CPU Usage

Interactive line chart showing CPU usage over time:

* Time-series visualization

* Real-time updates

* Hover for detailed values

* CPU % on Y-axis

### Cost Analytics

**Cost Analytics Chart**:

* Area chart showing cost trends over time

* Time-series data (hourly/daily)

* Cost ($) on Y-axis

* Visual trend analysis

**Cost by Service** (Pie Chart):

* **Data Transfer**: Largest portion (blue)

* **GPU Compute**: Second largest (purple/pink)

* **Load Balancing**: Smaller portion (cyan)

* **Storage**: Smallest portion (pink)

* Interactive legend

### Model Performance Metrics

Line chart tracking model performance:

* **Accuracy**: Green line

* **Precision**: Cyan line

* **Recall**: Purple line

* Time-series visualization

* Performance trends over time
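For readers less familiar with the three plotted metrics, the sketch below computes accuracy, precision, and recall from the counts of a binary confusion matrix. The function name and the choice to return `0.0` when a denominator is zero are illustrative assumptions; the documentation does not say how the platform computes these values.

```python
def classification_metrics(tp, fp, fn, tn):
    """Accuracy, precision, and recall from binary confusion-matrix counts."""
    total = tp + fp + fn + tn
    return {
        # Share of all predictions that were correct.
        "accuracy": (tp + tn) / total if total else 0.0,
        # Share of positive predictions that were actually positive.
        "precision": tp / (tp + fp) if (tp + fp) else 0.0,
        # Share of actual positives that were found.
        "recall": tp / (tp + fn) if (tp + fn) else 0.0,
    }


metrics = classification_metrics(tp=40, fp=10, fn=10, tn=40)
print(metrics)
```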

### Cost by Environment

Pie chart showing cost distribution:

* **Development**: Largest portion (blue)

* **Production**: Medium portion (purple/pink)

* **Staging**: Smallest portion (pink)

* Interactive breakdown

## Key Features

### Experiment Analytics

* Training metrics over time

* Hyperparameter impact analysis

* Experiment comparison dashboards

* Success/failure rates

* Active experiment tracking

### Model Performance

* Model accuracy trends

* Inference latency tracking

* Model drift detection

* Performance metrics visualization

* Multi-metric comparison

### Resource Usage

* GPU/CPU utilization monitoring

* Memory consumption tracking

* Storage usage analysis

* Real-time performance graphs

* Resource optimization insights

### Deployment Metrics

* Request rate and throughput

* Error rates and types

* Latency percentiles (p50, p95, p99)

* Uptime and availability

* Deployment health status
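The latency percentiles listed above (p50, p95, p99) can be computed from raw request latencies in several ways. The sketch below uses the nearest-rank definition, which is one common choice; the documentation does not state which method the dashboard uses, so treat this as an illustration only.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile: the smallest value with at least p% of samples at or below it."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[max(rank, 1) - 1]


# Hypothetical latencies in milliseconds: 1, 2, ..., 100.
latencies_ms = list(range(1, 101))
p50 = percentile(latencies_ms, 50)
p95 = percentile(latencies_ms, 95)
p99 = percentile(latencies_ms, 99)
print(p50, p95, p99)
```

High percentiles such as p99 show tail latency that an average would hide, which is why they are tracked alongside the median.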

### Cost Analysis

* Total infrastructure cost tracking

* Cost breakdown by service

* Cost by environment

* Trend analysis

* Budget monitoring

## Using the Dashboard

**Time Range Selection**:

1. Click the time range dropdown (default: "Last 24 Hours")

2. Select desired range:

* Last 24 Hours

* Last 7 Days

* Last 30 Days

* Custom range

**Refreshing Data**:

* Click **Refresh** button to update all metrics

* Dashboard auto-refreshes periodically

**Exporting Data**:

1. Click **Export** button

2. Choose export format

3. Download analytics report

**Printing**:

* Click **Print** button to print current dashboard view

## Monitoring Best Practices

**Regular Monitoring**:

* Check dashboard daily for anomalies

* Monitor GPU utilization for optimization

* Track cost trends to manage budget

* Review model performance metrics

**Setting Baselines**:

* Establish normal ranges for metrics

* Set up alerts for deviations

* Track trends over time
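One simple way to turn a baseline into an alert rule is to treat values outside the mean plus or minus a few standard deviations as deviations. The sketch below assumes that approach; the threshold `k`, the function names, and the sample history are hypothetical, and the documentation does not describe a built-in alerting rule.

```python
from statistics import mean, stdev


def baseline_range(history, k=3.0):
    """Normal range as mean +/- k sample standard deviations."""
    centre = mean(history)
    spread = stdev(history)  # needs at least two data points
    return centre - k * spread, centre + k * spread


def is_anomalous(value, history, k=3.0):
    """True when the value falls outside the baseline range."""
    low, high = baseline_range(history, k)
    return not (low <= value <= high)


# Hypothetical GPU utilization history in percent.
gpu_history = [70.0, 72.0, 68.0, 71.0, 69.0]
print(baseline_range(gpu_history))
print(is_anomalous(89.1, gpu_history))
```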

**Cost Optimization**:

* Identify high-cost services

* Optimize resource allocation

* Monitor environment-specific costs

* Review and adjust as needed

## Next Steps

* View detailed [Experiments](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/experiments) metrics

* Monitor [Deployments](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/deployments) performance

* Track [Models](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/models) accuracy

* Optimize resource usage based on insights

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-architecture/architecture-overview.md

# Architecture Overview

## 1. Overview

### 1.1 Key Components

Kaisar Network comprises three main components:

* **Kaisar Zero Node:** Kaisar’s Chrome extension. It enables users to track key metrics such as location and uptime while contributing to the decentralized AI compute layer, allowing them to monetize their hardware and support decentralized infrastructure.

* **Kaisar OneNode:** Kaisar OneNode optimizes GPU use for AI through a decentralized, transparent system. Its Providers, Checkers, and Explorer, built on the PEAQ Blockchain, ensure performance and reliability.

  * **Kaisar Provider**: Providers offer GPU computing resources and report their status to the PEAQ Blockchain, receiving rewards for their contributions.

  * **Kaisar Checker**: The Kaisar Checker (Kaisar Checker Node) ensures resource integrity and functionality by performing checks and submitting Proof of Physical Work, earning rewards from the PEAQ Blockchain.

  * **Kaisar Explorer**: An advanced web platform that provides real-time visibility into Kaisar’s on-chain metrics and operations for seamless navigation. Kaisar leverages the PEAQ Blockchain, a multi-chain layer-one blockchain optimized for DePIN. It supports over 100,000 transactions per second (TPS) with minimal costs and enables seamless interaction with Polkadot, Cosmos, Solana, Binance, and Ethereum via Wormhole.

* **Kaisar Cloud (End-Users):** End-users rent GPUs within the Kaisar Network, engaging directly with the blockchain for GPU rental, payment processing, and cancellation services.

### 1.2 Kaisar Network Overview

**1.2.1 - Kaisar Cloud (End-Users)**

* **Order & Payment:** End-users place an order and pay for GPU compute resources. Payment requires connecting a wallet and completing the transaction through that wallet.

* **Connect Container/Cluster:** After payment, end-users are connected to the required container or cluster provided by the Providers.

* **Reporting:** End-user usage of the GPU computing resources is reported to the PEAQ Blockchain.

* **Refund:** If needed, end-users can get refunds via PEAQ Blockchain consensus. The refund is converted into points, which users can then use for discounts or promotions when purchasing new services.

**1.2.2 - Kaisar Provider**

* **Register:** Providers register their computing resources (PC, laptop, server, or bare metal with GPU) on the PEAQ Blockchain. To register a worker, a provider must connect a wallet and make a payment. Both steps go through the PEAQ Blockchain.

* **Providing Services to Network:** Providers make their registered resources available to end-users for connection and usage.

* **Report:** Providers report status and usage of their GPU computing resources to the PEAQ Blockchain.

* **Reward:** Providers receive rewards from the PEAQ Blockchain based on the reports.

**1.2.3 - Kaisar Checker**

* **Checking:** Kaisar Checker Nodes perform checks on the computing resources provided by the Providers.

* **Proof of Physical Work (PoPW):** After performing checks, Kaisar Checkers submit Proof of Physical Work (PoPW) to the PEAQ Blockchain. The Checker sends performance evaluation tasks to the worker and then records the results on-chain.

* **Report:** Kaisar Checkers report provider findings and the status of the resources to the PEAQ Blockchain.

* **Reward:** Kaisar Checkers receive rewards from the PEAQ Blockchain for their services based on the reports.

## 2. Detailed Architecture

### 2.1 Kaisar Process: From Wallet Connection to Container Service Utilization

The Kaisar process covers the entire journey from wallet connection to end-user utilization of the container service. Each step involves specific entities and actions to ensure secure, verified, and efficient deployment and interaction with the service.

**2.1.1 - Initiating the Deployment**

1. **Connect to Wallet**

* **Entity Involved:** End-user

* **Action:** The end-user connects their wallet to the Console Portal to begin the deployment process.

2. **Buy New Container**

* **Entity Involved:** End-user

* **Action:** The end-user purchases a new container through the Console Portal.

**2.1.2 - Transaction Handling**

1. **Issue Transaction**

* **Entities Involved:** Console Portal, Blockchain

* **Action:** The Console Portal issues a transaction to the Blockchain to record the purchase.

2. **Transaction Details**

* **Entities Involved**: Blockchain

* **Action:** The Blockchain records the transaction details, ensuring transaction integrity.

**2.1.3 - Port Exposure and Container Deployment**

1. **Request to Expose New Ports**

* **Entities Involved:** Console Portal, VPN

* **Action:** The Console Portal sends a request to the VPN to expose new ports for the container.

2. **Request Results**

* **Entities Involved:** VPN

* **Action:** The VPN processes the request and returns the results to the Console Portal.

3. **Deploy New Container**

* **Entities Involved:** Console Portal, Checkers

* **Action:** The Console Portal commands the Checkers to deploy the container.

4. **Deploy Container**

* **Entities Involved:** Checkers, Worker Node

* **Action:** The Checkers send the deployment command to the Worker Node where the container will be deployed.

**2.1.4 - Notification and Service Connection**

1. **Event Notification**

* **Entities Involved:** End-user

* **Action:** The end-user is notified of the deployment event.

2. **Container Deployment Confirmation**

* **Entities Involved:** End-user

* **Action:** The end-user receives confirmation of the container deployment.

3. **Connect to Container Service**

* **Entities Involved:** End-user

* **Action:** The end-user connects to the newly deployed container service.

**2.1.5 - Service Utilization**

1. **Request to Container Service**

* **Entities Involved:** End-user

* **Action:** The end-user makes requests to the container service.

2. **Request Result**

* **Entities Involved:** Container Service

* **Action:** The container service processes the requests and returns the results to the end-user.

### 2.2 Architecture Layers

***Step-by-Step Flow:***

1\. Users interact through the Explorer, the Kaisar Cloud Portal, or the Worker Portal, depending on their role and requirements. The Explorer is a public site for viewing summaries and reports of network capacity, the Kaisar Cloud Portal is for end-users (GPU consumers), and the Worker Portal is for GPU providers.

2\. All incoming connections pass through the VPN and Firewall for secure access. The IAM system verifies user credentials and permissions.

3\. Requests are routed to the API Gateway, which is running in a cluster mode to ensure high availability and load balancing.

4\. The API Gateway uses a round-robin rule to distribute incoming requests across instances of the backend modules (Service Management, Worker Management, Billing, Monitoring & Alert, Logging, and Analytics). Each module runs multiple instances to handle the load efficiently.

5\. Depending on the request type, it's processed by the relevant backend service. For instance, compute jobs might be directed to the GPU Cluster through Worker Management.

6\. Operations that require immutable records or enhanced security interact with the PEAQ Blockchain.

7\. After processing, responses are sent back through the API Gateway to the respective frontend interface, providing users with the needed information. For asynchronous requests, the Portal connects to a WebSocket endpoint on the API Gateway, and the result is returned to the Portal through WebSocket events once the process completes.
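The round-robin rule mentioned in step 4 can be sketched in a few lines: each incoming request goes to the next backend instance in a fixed rotation. The class name and the instance labels below are hypothetical, and this is not the gateway's actual implementation, which the documentation does not describe.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hand out backend instances in a fixed rotation."""

    def __init__(self, instances):
        if not instances:
            raise ValueError("at least one instance is required")
        self._rotation = cycle(list(instances))

    def next_instance(self):
        """Return the instance that should receive the next request."""
        return next(self._rotation)


# Hypothetical instances of a single backend module.
balancer = RoundRobinBalancer(["billing-1", "billing-2", "billing-3"])
assigned = [balancer.next_instance() for _ in range(4)]
print(assigned)
```

Round-robin spreads requests evenly without tracking per-instance load, which keeps the gateway logic simple when the instances are interchangeable.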

*Below is the detailed working of the Kaisar Network, organized into architecture layers:*

#### 2.2.1 - Security Layer

The security layer ensures that the network is secure and accessible only by authorized entities.

**Tech Stack:**

* MESH VPN for secure communication

* Firewall for network traffic control

* Authentication Service via Wallet login for user authentication and authorization

Here are some features of MESH VPN:

1\. Robustness: The network is highly resilient to individual node failures, thanks to multiple pathways for data transfer.

2\. Scalability: Adding new nodes does not significantly impact the overall network performance.

3\. Low Latency: Direct connections between nodes help minimize the number of transmission steps, reducing delays.

4\. Optimal Load Distribution: Traffic is evenly distributed, avoiding congestion and ensuring optimal performance.

#### 2.2.2 - Backend Layer

The backend layer comprises the core backend services that support Kaisar Network operations.

* Providers (GPU Providers): Offer GPU compute resources as Worker Nodes.

  * Tech Stack: Docker, Kubernetes

* Cluster / GPU Management: Management of the Worker Node cluster.

  * Tech Stack: Kubernetes

* Fault Monitoring: Monitoring for faults using Kaisar Checker Nodes.

* Autoscaling: Automatically adjusting GPU compute resources based on demand.

  * Tech Stack: Kubernetes, HPA (Horizontal Pod Autoscaler)

#### 2.2.3 - Infrastructure Layer

**Manages computational resources and task orchestration.**

* GPU Pool: Collection of GPUs available for compute tasks.

  * Tech Stack: Nvidia GPUs, CUDA

  * The list of GPUs is available on the GPU Model Scoring page.

* Orchestration: Tools for managing GPU pools.

  * Tech Stack: Kubernetes, Docker

#### 2.2.4 - Frontend Layer

* Authentication Service: Manages user authentication and authorization via wallet integration.

* Logging Service: Records system activities on the blockchain.

* Console Portal: Front-end interface for user interaction.

## 3. Core Workflows

### 3.1 - Kaisar Checkers

#### Overview

Kaisar Checkers assess the uptime, bandwidth, and performance of physical devices provided by Providers within the VPN network. Checkers transmit proof of device functionality to the blockchain and receive rewards based on their performance.

The Checker is designed to ensure the integrity and performance of Containers in the Kaisar Network. Verifying the technical specifications of the Containers provided is essential to maintain service quality and network transparency.

**Testing procedure:**

* At registration: A container will be checked when it is registered on the Kaisar Network.

* In standby state: For containers in standby mode, checks will be conducted randomly.

* During rendering state: Service information is collected and examined in detail to evaluate the actual state and quality of the service.

**Evaluation method:**

* Performance parameters: Checker will directly read data about Container performance.

* Simulation testing: Checker will act as a real user to run test applications and analyze received data to ensure the Container is always interactive and complies with set technical requirements.

**Test results:**

* Registration authentication: Checker will confirm the Container's specifications to authenticate the registration of that Container on the Kaisar system.

* Impact on scheduling: Test results directly affect the Manager's scheduling and priority for that Container.

* Quality control: If a Container provides services of substandard quality, the Checker determines the penalty for that Container.

After a Checker Node completes a task, it signs the results with its private key and sends them to Kaisar Blockchain. Each node that delivers the same results as the majority will be rewarded with tokens.
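The majority rule in the paragraph above can be illustrated with a small tally: collect each Checker's result, find the most common one, and reward the nodes that reported it. This sketch makes several assumptions that the documentation does not confirm: results are simple comparable values, a strict majority (more than half) is required, and signature verification is omitted entirely. The node identifiers are hypothetical.

```python
from collections import Counter


def majority_result(submissions):
    """Return (majority result, sorted list of nodes to reward).

    `submissions` maps a node identifier to the result it reported.
    Returns (None, []) when no result has a strict majority.
    """
    if not submissions:
        return None, []
    counts = Counter(submissions.values())
    result, votes = counts.most_common(1)[0]
    if votes * 2 <= len(submissions):
        return None, []  # tie or plurality only; assumed to earn no reward
    rewarded = sorted(node for node, value in submissions.items() if value == result)
    return result, rewarded


# Hypothetical submissions from three Checker Nodes.
outcome = majority_result({
    "checker-a": "pass",
    "checker-b": "pass",
    "checker-c": "fail",
})
print(outcome)
```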

**Workflow**

* The Checker initiates the process by connecting to a wallet that holds an NFT license. This license is essential for authenticating the Checker within the Kaisar Network.

* The Checker stakes KAI tokens. Staking KAI is a prerequisite to access Kaisar's resources and services.

* Upon staking KAI, the Kaisar system provides authentication information back to the Checker. This information is used to verify the Checker’s identity and permission to use the network.

* With the authentication information, the Checker connects to the Kaisar VPN. This connection allows the Checker to securely interact with the Kaisar Network.

* Once connected to the VPN, the Checker queries the Kaisar system to check the usage and workload of various Worker Nodes. This step involves retrieving data on how much work each Worker Node is handling and their availability.

* The results of the Worker Node usage and workload check are returned to the Checker. This information helps the Checker decide which Worker Node to use.

* The Checker submits Proof of Physical Work (PoPW) of the selected Worker Node to the Kaisar Blockchain. This submission records the Checker's findings and the performance of the Worker Node on the blockchain.

* After submitting the PoPW, the Checker receives a reward. This reward is an incentive for the Checker to accurately monitor and report on Worker Node performance.

### 3.2 - Kaisar Cloud (End-User) Workflow

1. End-users initiate the process by connecting their wallet to the Kaisar Console Portal to pay for GPU resources.

2. After connecting the wallet, the end-user buys a new container through the Console Portal.

3. The Console Portal issues a transaction that involves multiple Kaisar components like VPN and Blockchain.

4. The VPN processes the transaction details and communicates with the Blockchain.

5. The Blockchain component handles the request to expose new ports to the Proxy/Gateway for accessing the container services.

6. The Kaisar Checkers are responsible for deploying the new container. They send the deployment request to the Provider's Worker Node.

7. The Worker Node in the Provider's infrastructure deploys the container. Once the container is deployed, an event is triggered and communicated back through the Checkers component.

8. The Console Portal updates the end-user with the status "Container is deploying..." and, eventually, "Your Container has been deployed successfully."

9. Finally, the GPU resources are made available as Container services by the Providers.

* Types of Container Services:

  * Worker Node (Container): Hardware: CPU, RAM, GPU, storage. (By Q2 2024)

  * Worker Cluster: Comprises multiple Worker Nodes to allow pooling of resources from multiple nodes. (Available by Q4 2024)

  * End users rent resources from mobile nodes provided by Providers. (Available by Q3 2025)

### 3.3 - Kaisar Providers

#### Overview

Providers offer CPU/GPU physical devices for the Kaisar Network, also known as Containers. This is where the actual usage of the cloud takes place, including application execution and rendering. The purpose of Containers is to ensure a seamless experience in the cloud, delivering an optimal user experience.

**Notes on Activities:**

* Availability: Containers must always be in a high state of availability, ready to be activated immediately upon consumer request.

* Usability: Each Container must have the necessary applications or services installed and configured to allow users to access and start up as quickly as possible.

* Processing capacity: Containers need to meet specific computing power and graphics requirements to handle the unique demands of applications or services.

* Network efficiency: Containers must have stable bandwidth and network infrastructure to support high-speed data transmission and low-latency interactions.

**Container Selection Process:**

* Performance-based: Containers are randomly selected based on their ability to provide the highest quality of service with the lowest possible latency and cost.

* Experience optimization: Containers are evaluated for their ability to deliver the best possible user experience, considering factors such as processing speed and uptime.

#### Workflow

*Worker Node Provision*

*Steps:*

1. Provider registers a new worker node.

2. Registration request sent to Kaisar Network.

3. Console Portal responds with setup scripts.

4. Worker node runs setup scripts.

5. Worker node connects to a wallet.

6. Worker node requests a new peer node from blockchain.

7. Connection established.

8. Worker node executes a benchmark job.

9. Benchmark job result sent to Kaisar Network.

10. Worker node registered successfully.
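The ten provisioning steps run in a fixed order, which can be modelled as a simple ordered checklist. The sketch below is purely illustrative: the step identifiers and the `ProvisionTracker` class are hypothetical names derived from the list above, not part of any Kaisar tooling.

```python
# Step identifiers paraphrase the ten steps listed above (hypothetical names).
PROVISION_STEPS = (
    "register_worker_node",
    "send_registration_request",
    "receive_setup_scripts",
    "run_setup_scripts",
    "connect_wallet",
    "request_peer_node",
    "establish_connection",
    "run_benchmark_job",
    "report_benchmark_result",
    "registered",
)


class ProvisionTracker:
    """Track progress through the provisioning steps, enforcing their order."""

    def __init__(self):
        self._next_index = 0

    @property
    def done(self):
        return self._next_index == len(PROVISION_STEPS)

    def complete(self, step):
        """Mark a step as complete; reject steps that arrive out of order."""
        if self.done:
            raise ValueError("provisioning already finished")
        expected = PROVISION_STEPS[self._next_index]
        if step != expected:
            raise ValueError(f"expected step {expected!r}, got {step!r}")
        self._next_index += 1


tracker = ProvisionTracker()
for step in PROVISION_STEPS:
    tracker.complete(step)
print(tracker.done)
```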

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-architecture/products/kaisar-onenode/kaisar-checker/what-is-the-kaisar-checker-node/benefits-of-owning-a-checker-node.md

# Benefits of Owning a Checker Node

Owning a Checker Node offers several key advantages:

* **Rewards**: Earn regular incentives by contributing to network verification.

* **Empowerment**: Help decentralize AI infrastructure and create alternatives to traditional tech monopolies.

* **Community Impact**: Drive the growth of the Kaisar ecosystem while supporting global AI innovation.

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-architecture/products/kaisar-onenode/kaisar-provider/what-is-kaisar-provider/benefits-of-running-a-kaisar-provider.md

# Benefits of Running a Kaisar Provider

**Operating as a Kaisar Provider: Empowering a Distributed Cloud Network**

Running a Kaisar Provider offers a unique opportunity to contribute to a state-of-the-art distributed cloud network that powers artificial intelligence (AI) and advanced computational workloads. Below are the key benefits of becoming a Kaisar Provider (Worker Node Operator):

1. **Financial Rewards**

   Earn substantial revenue by providing GPU computing power to businesses and developers who require scalable infrastructure for AI, machine learning, and other resource-intensive applications.

2. **Scalable and Flexible Operations**

   Effortlessly expand your resource pool by integrating additional nodes as demand increases, enabling you to enhance both your earnings and your impact within the network.

3. **Secure and Decentralized Ecosystem**

   Participate in a transparent and secure framework where your contributions are safeguarded, and compensation is equitably distributed across the network.

4. **Sustainable Computing Infrastructure**

   Support a shift away from large, centralized data centers, contributing to a more energy-efficient and environmentally responsible cloud computing ecosystem.

5. [Kaisar Provider Rewards Mechanism](https://docs.kaisar.io/kaisar-network/kaisar-rewards-mechanism/rewards-for-kaisar-providers)

These core advantages underscore the value of participation, delivering both operational and societal impact in a concise and compelling manner.

---

# Source: https://docs.kaisar.io/kaisar-network/origins/challenges/building-the-kaisar-network.md

# Building the Kaisar Network

At Kaisar Network we are focused on developing a slew of innovative tools that mitigate the challenges above.

Our network intelligently aggregates and redistributes new and idle GPUs from enterprises, data centers, cryptocurrency mining operations, and single vendors. This emerging approach, called Decentralized Physical Infrastructure Network (DePIN), is poised to disrupt traditional business practices.

This decentralized network enables easy comparison, selection, and utilization of GPUs, consolidating geographically dispersed resources to handle large-scale AI workloads.

Kaisar's approach will reduce global dependence on centralized cloud providers and pave the way for a more efficient and connected global GPU cloud economy.

For more information on Kaisar solutions please check the Problem Statement/Solution page.

---

# Source: https://docs.kaisar.io/kaisar-network/origins/faq/can-i-contribute-my-gpu-resources-to-kaisar.md

# Can I contribute my GPU resources to Kaisar?

Yes, Kaisar Worker allows users to contribute their GPUs to the network. Whether you have a PC, laptop, or server with GPUs, you can join the network and earn rewards for your contributions.

---

# Source: https://docs.kaisar.io/kaisar-network/origins/challenges/centralized-gpu-computing-challenges.md

# Centralized GPU Computing Challenges

The compute challenges associated with centralized cloud GPU providers are plenty. Below are some statements from prominent publications:

* As demand for GPU compute grows, scaling centralized infrastructure can be challenging. According to Gartner, "GPU-accelerated computing is becoming more prevalent, but scaling GPU resources efficiently remains complex." (Source: Gartner, "Disaggregate Compute and Storage to Enable Scalable, Flexible, and Cost-Effective Data Centers" (2020))

* High-performance GPUs are expensive, and centralizing them can lead to significant upfront costs. A Springer publication notes that "the capital expenditure for large GPU clusters can be prohibitive for many organizations." (Source: Springer, "A Survey on GPU-Based Cloud Computing" (2019))

* GPUs consume substantial power and generate significant heat. Centralizing many GPUs intensifies these challenges. Gartner highlights that "power and cooling requirements for dense GPU deployments are a major consideration in data center design." (Source: Gartner, "Data Center Infrastructure Planning Tool" (2020))

* Network latency: For some applications, network latency between centralized GPUs and distributed data sources can be problematic. Gartner points out that "data locality and network performance are critical factors in GPU-accelerated computing architectures." (Source: Gartner, "Best Practices for Building a Scalable Data Science Platform" (2020))

* Centralized GPU computing requires specialized software stacks and tools. Gartner notes that "the rapidly evolving GPU software ecosystem can create integration and compatibility challenges." (Source: Gartner, "Magic Quadrant for High-Performance Computing" (2020))

---

# Source: https://docs.kaisar.io/kaisar-network/origins/challenges.md

# Challenges

- [Exponential Change in Spending and Compute Requirements](/kaisar-network/origins/challenges/exponential-change-in-spending-and-compute-requirements.md)

- [Cost challenges with traditional cloud GPU providers.](/kaisar-network/origins/challenges/cost-challenges-with-traditional-cloud-gpu-providers..md)

- [Centralized GPU Computing Challenges](/kaisar-network/origins/challenges/centralized-gpu-computing-challenges.md)

- [Building the Kaisar Network](/kaisar-network/origins/challenges/building-the-kaisar-network.md)

---

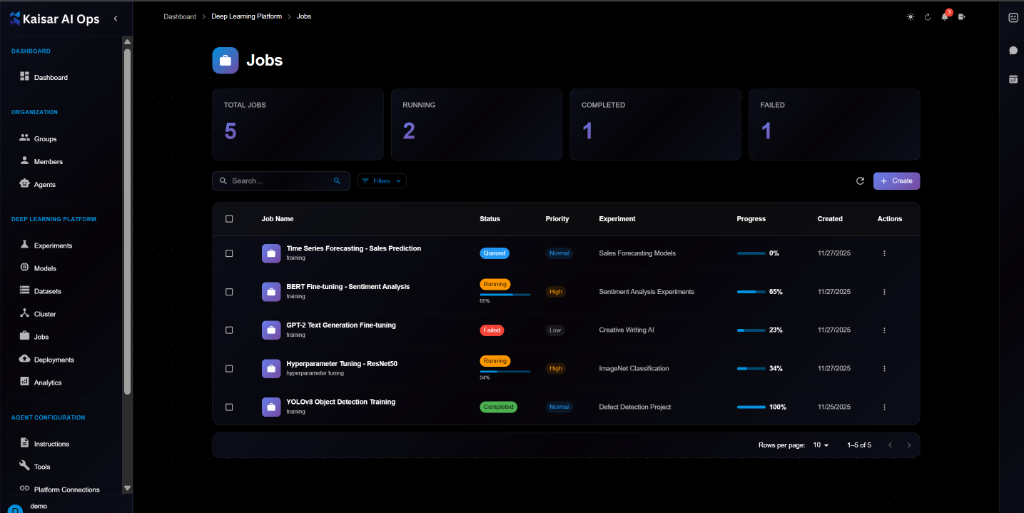

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/cluster.md

# Cluster

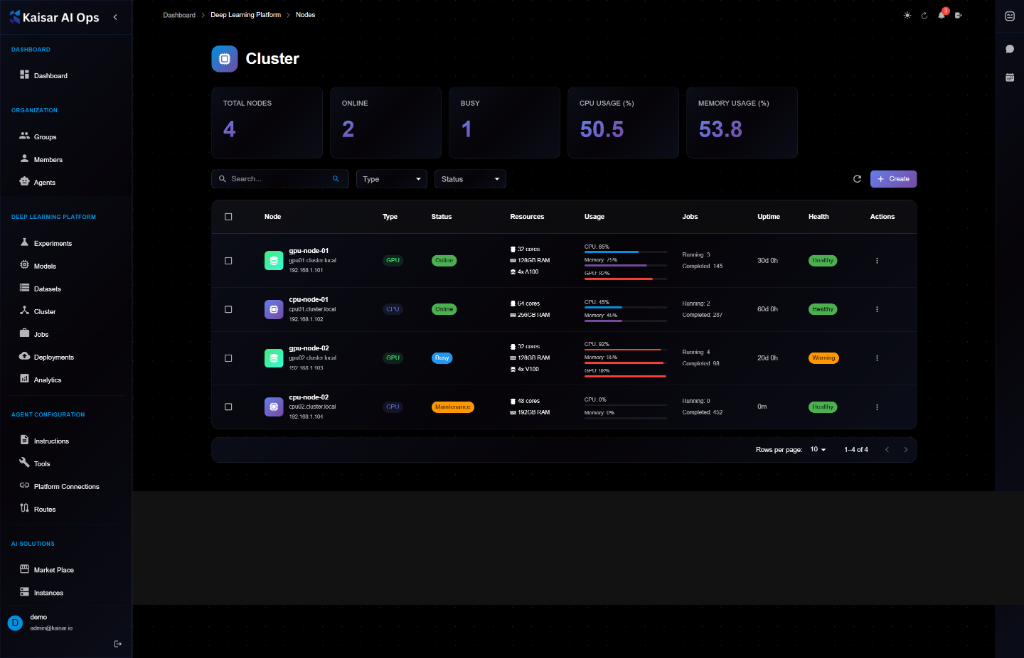

Manage and monitor your compute cluster nodes for ML workloads.

## Overview

The Cluster section provides comprehensive management of compute nodes that power your ML experiments, training jobs, and deployments. Monitor resource utilization, manage node configurations, and ensure optimal cluster health.

## Cluster Dashboard

The dashboard displays key cluster metrics at a glance:

**Summary Cards**:

* **Total Nodes**: Total number of nodes in the cluster

* **Online**: Number of nodes currently online

* **Busy**: Number of nodes actively processing workloads

* **CPU Usage (%)**: Average CPU usage across cluster

* **Memory Usage (%)**: Average memory usage across cluster

## Node List View

The cluster table shows all nodes with the following information:

**Columns**:

* **Node**: Node name and details (hostname, IP address)

* **Type**: Node type (GPU, CPU)

* **Status**: Current status (Online, Offline, Maintenance)

* **Resources**: Available resources (CPU cores, RAM, GPU)

* **Usage**: Real-time CPU and Memory usage with progress bars

* **Jobs**: Running jobs count

* **Uptime**: Node uptime duration

* **Health**: Health status (Healthy, Warning, Critical)

* **Actions**: Quick actions menu

**Filtering and Search**:

* Search by node name or IP

* Filter by Type (GPU, CPU, All)

* Filter by Status (Online, Offline, Busy, Maintenance)

## Creating a Cluster Node

Navigate to **Deep Learning Platform** → **Cluster** → Click **Create**

### Basic Information

**Node Name**\* (Required)

* Unique identifier for the cluster node

* Example: `gpu-node-01`, `cpu-node-high-mem`

**Hostname**\* (Required)

* Network hostname

* Example: `gpu01.cluster.local`

**IP Address**\* (Required)

* IPv4 address of the node

* Example: `192.168.1.101`

**Node Type**\* (Required)

* Select from dropdown: GPU, CPU

* Default: `GPU`

**Status**\* (Required)

* Select from dropdown: Online, Offline

* Default: `Online`

### CPU Resources

**CPU Cores**\* (Required)

* Total number of CPU cores

* Example: `16`

**Total number of CPU Cores** (Helper text)

**Available CPU Cores**\* (Required)

* Number of available CPU cores

* Example: `8`

**Number of available CPU cores** (Helper text)

### Memory Resources

**Total Memory (GB)**\* (Required)

* Total RAM in GB

* Example: `64`

**Total RAM in GB** (Helper text)

**Available Memory (GB)**\* (Required)

* Available RAM in GB

* Example: `32`

**Available memory (GB)** (Helper text)

### GPU Resources (Optional)

**GPU Count**

* Number of GPUs (0 for CPU-only nodes)

* Example: `0`, `4`, `8`

**Number of GPUs (0 for CPU-only nodes)** (Helper text)

**GPU Type**

* Select GPU model from dropdown

* Options: NVIDIA A100, NVIDIA V100, NVIDIA T4, etc.

**GPU Memory per GPU (GB)**

* Memory per GPU in GB

* Example: `80` (for A100)

**VRAM per GPU in GB** (Helper text)

### Storage & Network

**Total Storage (GB)**\* (Required)

* Total disk storage in GB

* Example: `1000`

**Total disk space in GB** (Helper text)

**Network Bandwidth (Mbps)**\* (Required)

* Network bandwidth in Mbps

* Example: `10000` (10 Gbps)

**Network speed in Mbps** (Helper text)

**Network Latency (ms)**\* (Required)

* Average network latency in milliseconds

* Example: `1`

**Average network latency** (Helper text)

### Configuration

**Max Concurrent Jobs**\* (Required)

* Maximum number of jobs that can run simultaneously

* Example: `4`

**Maximum number of jobs that can run simultaneously** (Helper text)

**Priority**

* Node priority (1-10, higher is better)

* Example: `5`

**Node priority (1-10, higher is better)** (Helper text)

**Tags**

* Comma-separated tags for categorization

* Example: `production,high-memory,gpu`

### Location (Optional)

**Datacenter**

* Datacenter location

* Example: `Datacenter`

**Rack**

* Rack identifier

* Example: `R-10`

**Zone**

* Availability zone

* Example: `Zone-A`

### Actions

* **Cancel**: Discard and close

* **Create Cluster Node**: Submit and create the node

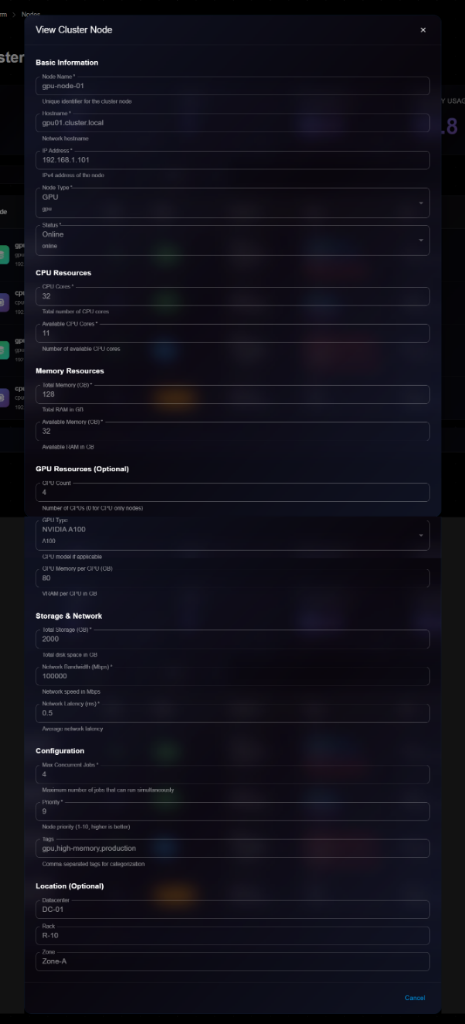
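
The form fields above can be mirrored in a small data structure to sanity-check values before submitting them. The following Python sketch is illustrative only: `NodeSpec`, its field names, and its `validate` method are hypothetical and not part of any Kaisar API, and the rules are inferred from the field descriptions (valid IPv4 address, available resources no larger than totals, priority between 1 and 10).

```python
from dataclasses import dataclass, field
from ipaddress import IPv4Address


@dataclass
class NodeSpec:
    """Hypothetical mirror of the Create Cluster Node form (not a Kaisar API)."""

    name: str
    hostname: str
    ip_address: str
    cpu_cores: int
    available_cpu_cores: int
    total_memory_gb: int
    available_memory_gb: int
    node_type: str = "GPU"   # form default
    status: str = "Online"   # form default
    gpu_count: int = 0       # 0 for CPU-only nodes
    priority: int = 5        # 1-10, higher is better
    tags: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means the spec looks consistent."""
        problems: list[str] = []
        try:
            IPv4Address(self.ip_address)
        except ValueError:
            problems.append(f"invalid IPv4 address: {self.ip_address!r}")
        if not 0 <= self.available_cpu_cores <= self.cpu_cores:
            problems.append("available CPU cores must be between 0 and total cores")
        if not 0 <= self.available_memory_gb <= self.total_memory_gb:
            problems.append("available memory must be between 0 and total memory")
        if self.gpu_count < 0:
            problems.append("GPU count cannot be negative")
        if not 1 <= self.priority <= 10:
            problems.append("priority must be in the range 1-10")
        return problems


# Values taken from the examples in the form description above.
node = NodeSpec(
    name="gpu-node-01",
    hostname="gpu01.cluster.local",
    ip_address="192.168.1.101",
    cpu_cores=16,
    available_cpu_cores=8,
    total_memory_gb=64,
    available_memory_gb=32,
    gpu_count=4,
    tags="production,high-memory,gpu".split(","),
)
print(node.validate() or "spec looks consistent")
```

Returning a list of problems rather than raising on the first one lets every issue be reported at once, which is closer to how a form highlights several invalid fields together.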

## Viewing Node Details

To view detailed information about a cluster node:

1. Navigate to **Deep Learning Platform** → **Cluster**

2. Click on a node from the list

3. View comprehensive details in the modal dialog

**Details Panel Sections**:

**Basic Information**:

* Node Name: e.g., "gpu-node-01"

* Hostname: e.g., "gpu01.cluster.local"

* IP Address: e.g., "192.168.1.101"

* Node Type: GPU or CPU

* Status: Online, Offline, Busy, Maintenance

**CPU Resources**:

* CPU Cores: Total cores (e.g., 32)

* Total number of CPU cores

* Available CPU Cores: Available cores (e.g., 11)

* Number of available CPU cores

**Memory Resources**:

* Total Memory (GB): Total RAM (e.g., 128)

* Total RAM in GB

* Available Memory (GB): Available RAM (e.g., 32)

* Available memory (GB)

**GPU Resources (Optional)**:

* GPU Count: Number of GPUs (e.g., 4)

* Number of GPUs (0 for CPU-only nodes)

* GPU Type: GPU model (e.g., A100)

* GPU Memory per GPU (GB): VRAM per GPU (e.g., 80)

* VRAM per GPU in GB

**Storage & Network**:

* Total Storage (GB): Total disk space

* Total disk space in GB

* Network Bandwidth (Mbps): Network speed

* Network speed in Mbps

* Network Latency (ms): Average latency

* Average network latency

**Configuration**:

* Max Concurrent Jobs: Maximum simultaneous jobs

* Maximum number of jobs that can run simultaneously

* Priority: Node priority

* Node priority (1-10, higher is better)

* Tags: Comma-separated tags for categorization

**Location (Optional)**:

* Datacenter: Datacenter location

* Rack: Rack identifier

* Zone: Availability zone

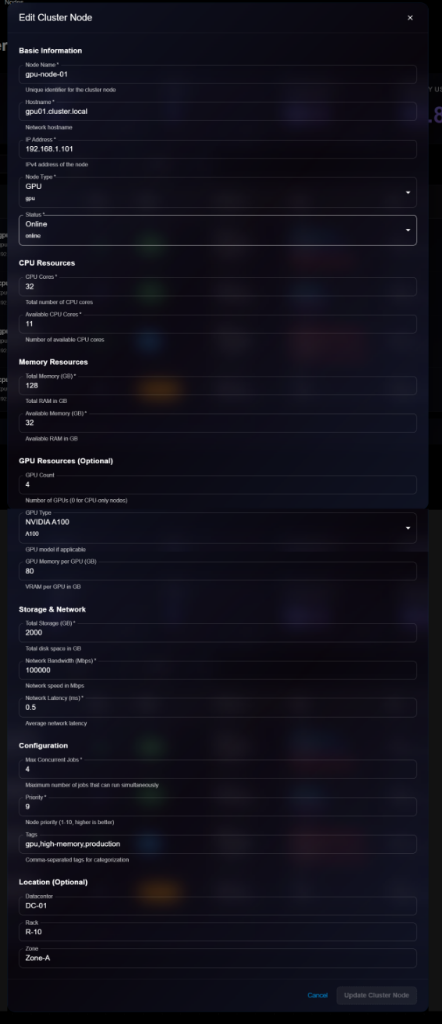

## Editing a Node

To update node configuration:

1. Open node details page

2. Click **Edit** button (or three-dot menu → Edit)

3. Modify editable fields in the Edit Cluster Node modal

4. Click **Update Cluster Node** to save changes

> \[!NOTE] The Edit form is identical to the View form, but with editable fields and an "Update Cluster Node" button.

**Editable Fields**:

* ✅ Hostname

* ✅ Status (Online, Offline, Maintenance)

* ✅ Available CPU Cores

* ✅ Available Memory (GB)

* ✅ GPU configuration

* ✅ Storage & Network settings

* ✅ Max Concurrent Jobs

* ✅ Priority

* ✅ Tags

* ✅ Location information

* ❌ Node Name (cannot edit)

* ❌ IP Address (cannot edit)

* ❌ Node Type (cannot edit)

* ❌ Total CPU Cores (cannot edit)

* ❌ Total Memory (cannot edit)

## Node Management

### Changing Node Status

**Setting to Maintenance**:

1. Open node details

2. Click **Edit**

3. Change Status to "Maintenance"

4. Save changes

5. Node will stop accepting new jobs

**Bringing Node Online**:

1. Open node details

2. Click **Edit**

3. Change Status to "Online"

4. Save changes

5. Node will start accepting jobs

### Monitoring Node Health

**Health Indicators**:

* **Healthy** (Green): All systems normal

* **Warning** (Orange): High resource usage or minor issues

* **Critical** (Red): Node failure or severe issues

**When to Check**:

* High CPU/Memory usage (>90%)

* Jobs failing frequently

* Network connectivity issues

* Hardware errors
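
As a rough illustration of how the three health levels could be derived from usage readings, here is a minimal Python sketch. The function name `health_status`, the `reachable` flag, and the rule that an unreachable node is Critical are assumptions made for this example; only the 90% figure comes from the list above, and the platform's actual health logic is not documented here.

```python
def health_status(cpu_pct: float, mem_pct: float,
                  reachable: bool = True, warn_at: float = 90.0) -> str:
    """Map usage readings to Healthy / Warning / Critical (assumed rules)."""
    if not reachable:
        # Assumption: a node that cannot be reached is treated as failed.
        return "Critical"
    if max(cpu_pct, mem_pct) > warn_at:
        # High CPU or memory usage (>90% by default) raises a warning.
        return "Warning"
    return "Healthy"


print(health_status(cpu_pct=45.0, mem_pct=60.0))
print(health_status(cpu_pct=95.5, mem_pct=60.0))
print(health_status(cpu_pct=10.0, mem_pct=10.0, reachable=False))
```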

### Deleting a Node

To remove a node from the cluster:

1. Navigate to node details

2. Click **Delete** button

3. Confirm deletion

> \[!WARNING] You cannot delete a node with running jobs. Stop or migrate jobs first.

**Before Deleting**:

* Ensure no jobs are running

* Migrate important jobs to other nodes

* Backup any local data

* Update cluster capacity planning

## Best Practices

**Resource Allocation**:

* Reserve some resources for system overhead

* Don't allocate 100% of available resources

* Monitor usage patterns and adjust

**Node Naming**:

* Use descriptive names: `gpu-node-01`, `cpu-highmem-02`

* Include node type in name

* Use consistent naming convention

**Maintenance**:

* Schedule regular maintenance windows

* Update node status before maintenance

* Monitor health indicators

* Keep firmware and drivers updated

**Tagging Strategy**:

* Use tags for organization: `production`, `development`

* Tag by capability: `high-memory`, `gpu`, `fast-storage`

* Tag by location: `datacenter-a`, `rack-10`
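
A consistent tagging scheme pays off when nodes need to be selected by capability. The Python sketch below shows one way to parse the comma-separated tag strings and filter on them; `parse_tags`, `nodes_with_tags`, and the sample inventory are hypothetical and only illustrate the idea.

```python
def parse_tags(raw: str) -> set[str]:
    """Split a comma-separated tag string, trimming spaces and empty entries."""
    return {tag.strip().lower() for tag in raw.split(",") if tag.strip()}


def nodes_with_tags(nodes: dict[str, str], required: set[str]) -> list[str]:
    """Return the names of nodes whose tags include every required tag."""
    return sorted(
        name for name, raw in nodes.items() if required <= parse_tags(raw)
    )


# Hypothetical inventory: node name -> tag string as entered in the form.
inventory = {
    "gpu-node-01": "production,high-memory,gpu",
    "cpu-highmem-02": "development, high-memory",
    "gpu-node-03": "development,gpu,fast-storage",
}
print(nodes_with_tags(inventory, {"high-memory"}))
print(nodes_with_tags(inventory, {"development", "gpu"}))
```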

## Next Steps

* Submit [Jobs](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/jobs) to cluster nodes

* Run [Experiments](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/experiments) on GPU nodes

* Monitor resource usage in [Analytics](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/analytics)

* Deploy models via [Deployments](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/deployments)

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-architecture/products/kaisar-onenode/kaisar-provider/how-to-manage-kaisar-provider/connect-wallet.md

# Connect wallet

1. **Connect Wallet**: Click the **"Connect Wallet"** button on the interface.

2. **Select Wallet:** Choose your preferred wallet and connect to it.

3. **Sign In:** Click the **"Sign In"** button to confirm and complete the login process with your wallet.

---

# Source: https://docs.kaisar.io/kaisar-network/contact-and-support.md

# Contact and Support

For any queries or assistance during the sale, please contact:

* **Email**:

* **Support Portal**: [nodes.kaisar.io](http://nodes.kaisar.io)

* **Official Channels**:

* **X (formerly Twitter)**:

* **Telegram**:

* **Discord**:

* **LinkedIn**:

---

# Source: https://docs.kaisar.io/kaisar-network/origins/challenges/cost-challenges-with-traditional-cloud-gpu-providers..md

# Cost challenges with traditional cloud GPU providers.

The cost challenges associated with cloud GPU providers are highlighted by [Splunk](https://www.splunk.com/en_us/blog/learn/cloud-cost-trends.html): demand for GPUs, especially for training large AI models, has surged, leading to capacity constraints. When multiple users require a large number of GPUs simultaneously, the result can be contention and underutilization, driving up costs as cloud providers struggle to balance supply and demand efficiently.

Other prominent reasons for cost challenges are:

* Hidden costs such as egress fees also contribute significantly to the overall expense, often catching businesses by surprise (Source: [Splunk](https://www.splunk.com/en_us/blog/learn/cloud-cost-trends.html)).

* Methods for deploying GPU resources in the cloud, such as pass-through, can be wasteful for smaller applications when compared with GPU virtualization (Source: [SpringerLink](https://link.springer.com/article/10.1007/s00170-023-11252-0)).

* The use of advanced technologies in AI models has introduced new compute costs. While these technologies improve the performance of AI models, they still require significant investment in infrastructure (Source: [ar5iv](https://ar5iv.org/pdf/2401.12230)).

The plot from [ar5iv](https://ar5iv.org/pdf/2401.12230) below shows how the number of GPUs provisioned scales with the fluctuating request rate over time. As the request rate increases or decreases, the number of GPUs provisioned also adjusts accordingly to match the demand, demonstrating elastic resource scheduling.

---

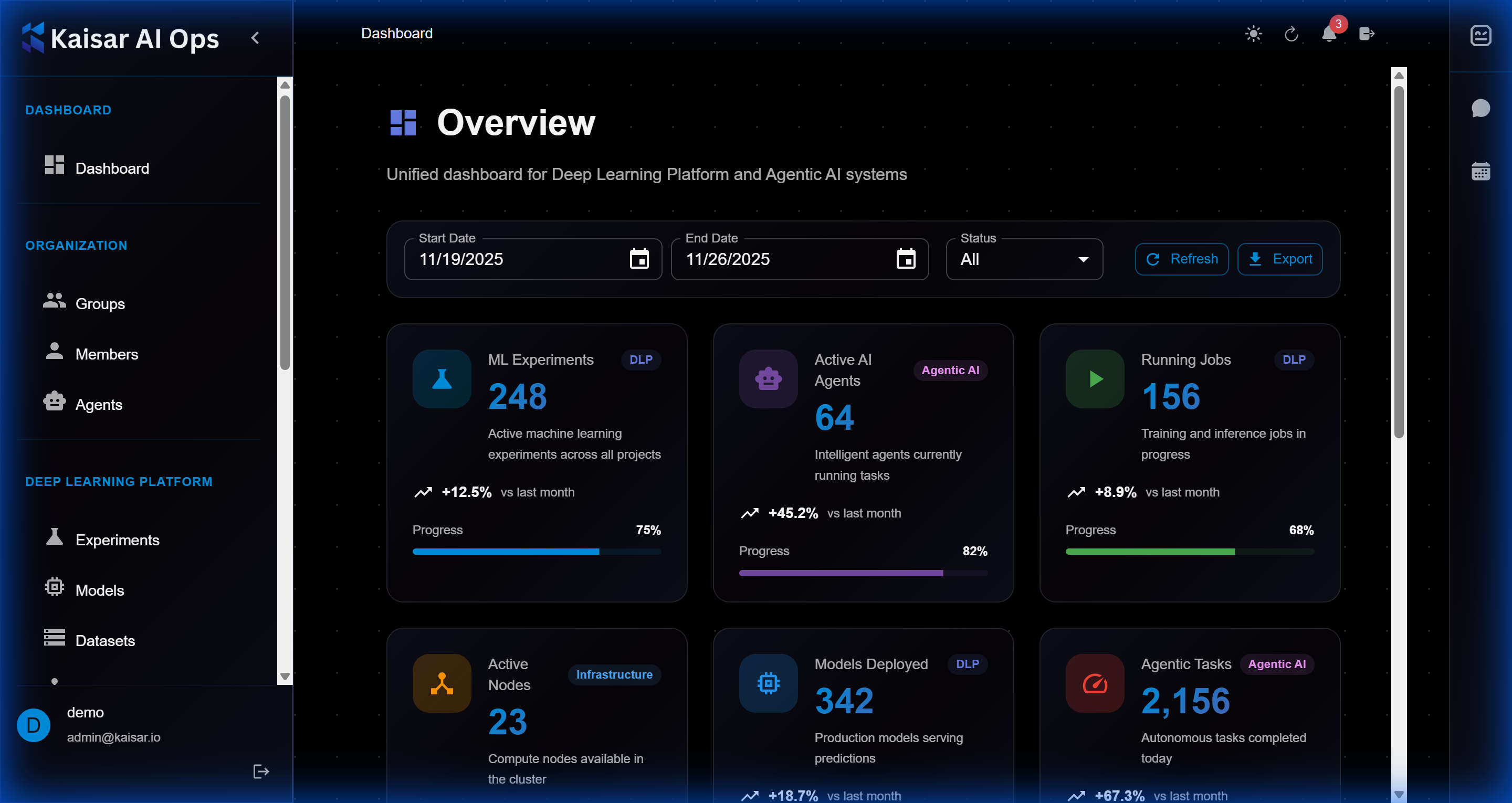

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/dashboard/dashboard.md

# Dashboard

The Dashboard is your central hub for monitoring and managing all AI operations in Kaisar AI Ops.

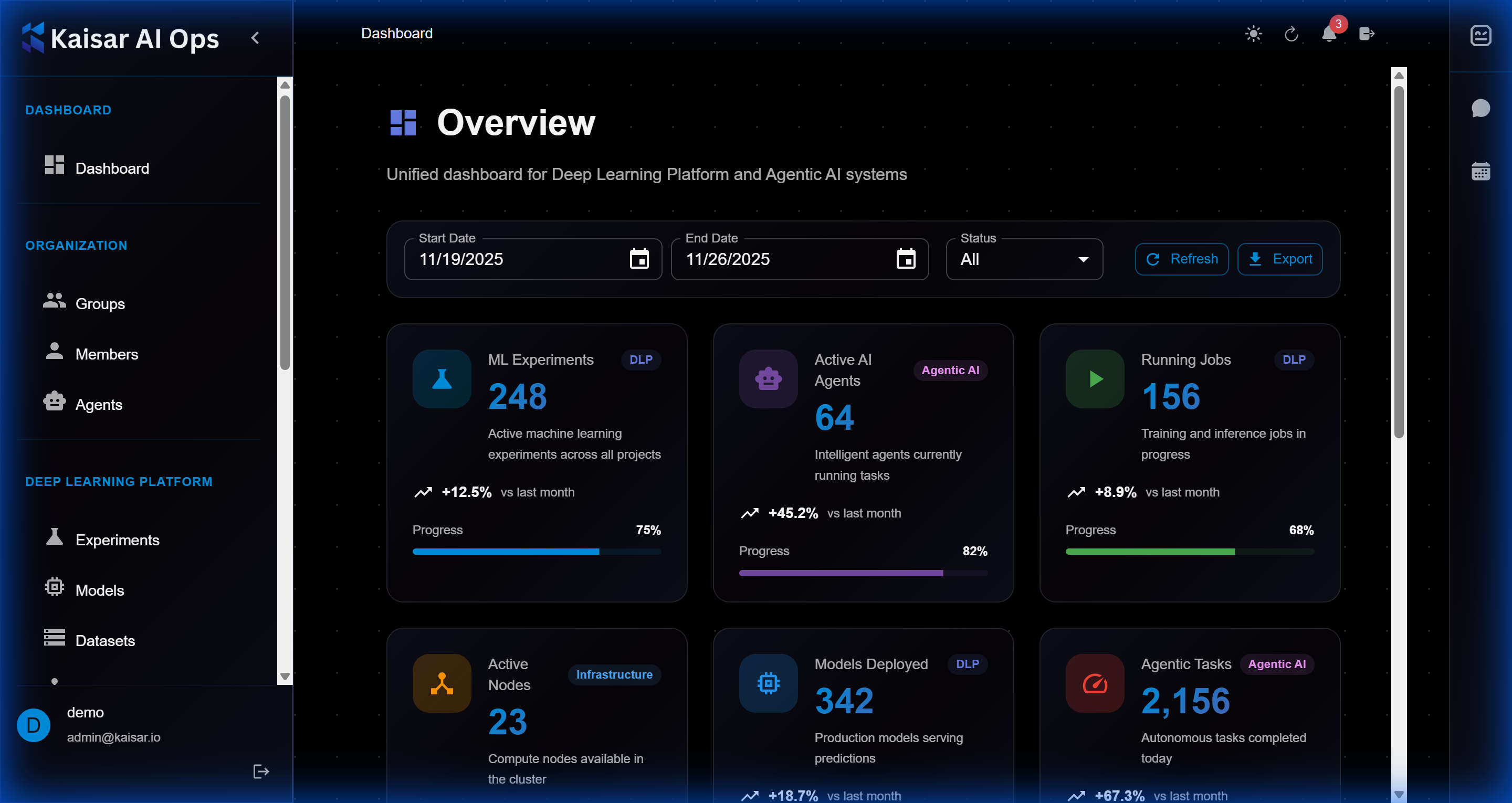

## Overview

The dashboard provides a comprehensive view of:

* Recent experiments and their status

* Active deployments and health metrics

* System resource usage

* Quick access to common actions

## Dashboard Components

### 1. Navigation Sidebar

Located on the left side, the sidebar provides access to:

* **Dashboard**: Main overview (current page)

* **Organization**: Team and access management

* **Deep Learning Platform**: Experiments, Models, Datasets

* **Deployments**: Production model deployments

### 2. Main Content Area

The central area displays:

* **Activity Feed**: Recent experiments, deployments, and updates

* **Status Cards**: Quick metrics and summaries

* **Charts and Graphs**: Visual representation of performance metrics

### 3. Top Bar

The top navigation bar includes:

* **Search**: Find experiments, models, datasets, or deployments

* **Notifications**: System alerts and updates

* **User Profile**: Access settings and logout

## Key Features

### Recent Activity

View your most recent:

* Experiment runs and their status (running, completed, failed)

* Model deployments and updates

* Dataset uploads and modifications

* Team member activities

### Quick Actions

Common tasks accessible from the dashboard:

* **Create Experiment**: Start a new ML training run

* **Deploy Model**: Push a model to production

* **Upload Dataset**: Add new training data

* **View Metrics**: Check system performance

### Metrics Overview

Monitor key performance indicators:

* **Active Experiments**: Number of currently running experiments

* **Total Models**: Registered models in your organization

* **Deployments**: Active production deployments

* **Resource Usage**: Compute and storage utilization

## Customization

### Filtering and Sorting

* Filter by date range, status, or owner

* Sort by creation time, name, or status

* Save custom views for quick access

### Widgets

Customize your dashboard by:

* Adding or removing metric widgets

* Rearranging layout

* Setting default views

## Navigation Tips

### Keyboard Shortcuts

* `Ctrl/Cmd + K`: Open search

* `Ctrl/Cmd + N`: Create new experiment

* `Ctrl/Cmd + D`: Go to dashboard

### Quick Search

Use the search bar to quickly find:

* Experiments by name or ID

* Models by version or tag

* Datasets by name

* Deployments by status

## Best Practices

1. **Check Daily**: Review your dashboard daily for updates and alerts

2. **Monitor Failures**: Address failed experiments or deployments promptly

3. **Track Resources**: Keep an eye on resource usage to avoid limits

4. **Stay Organized**: Use tags and naming conventions for easy filtering

---

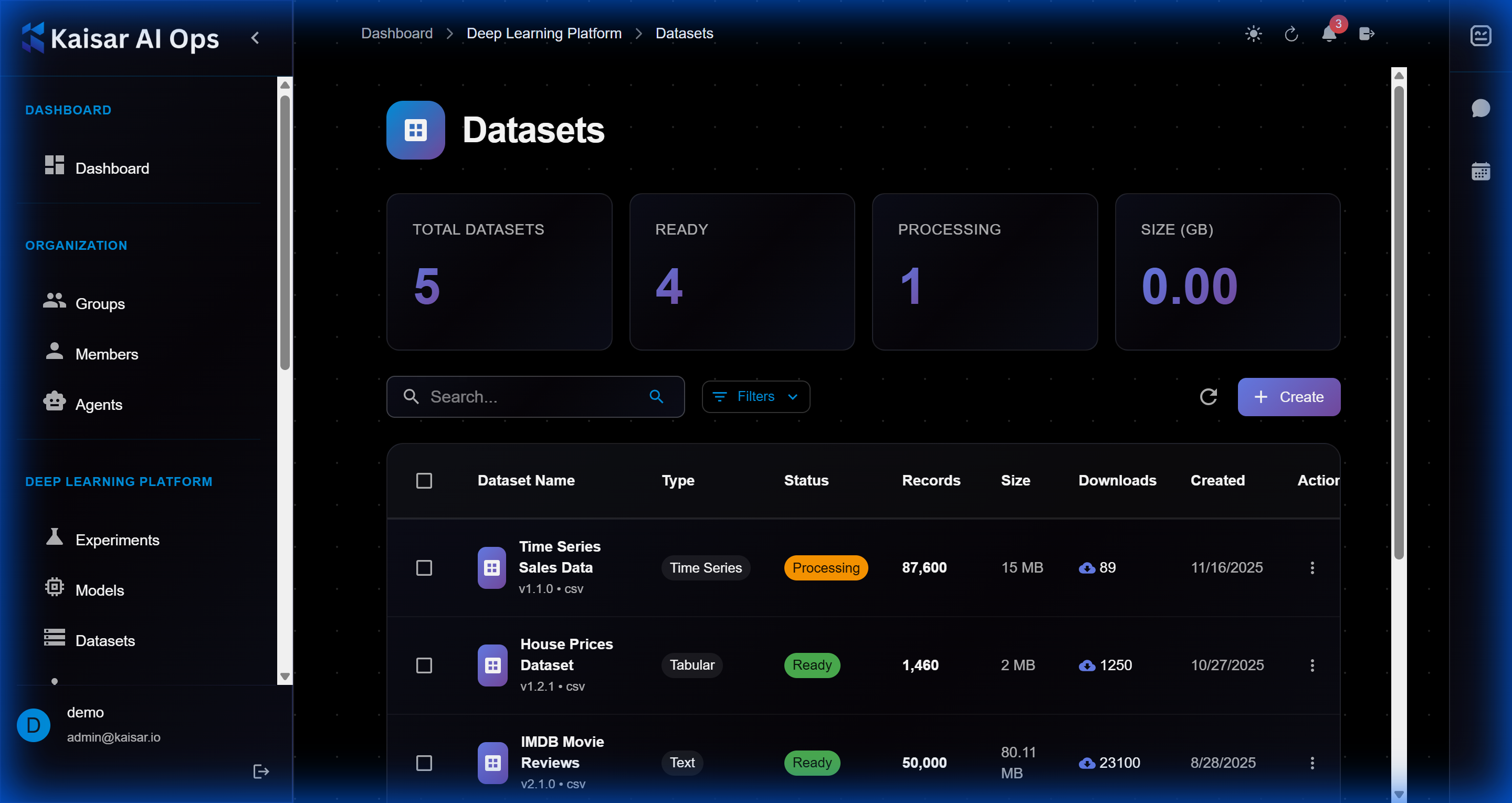

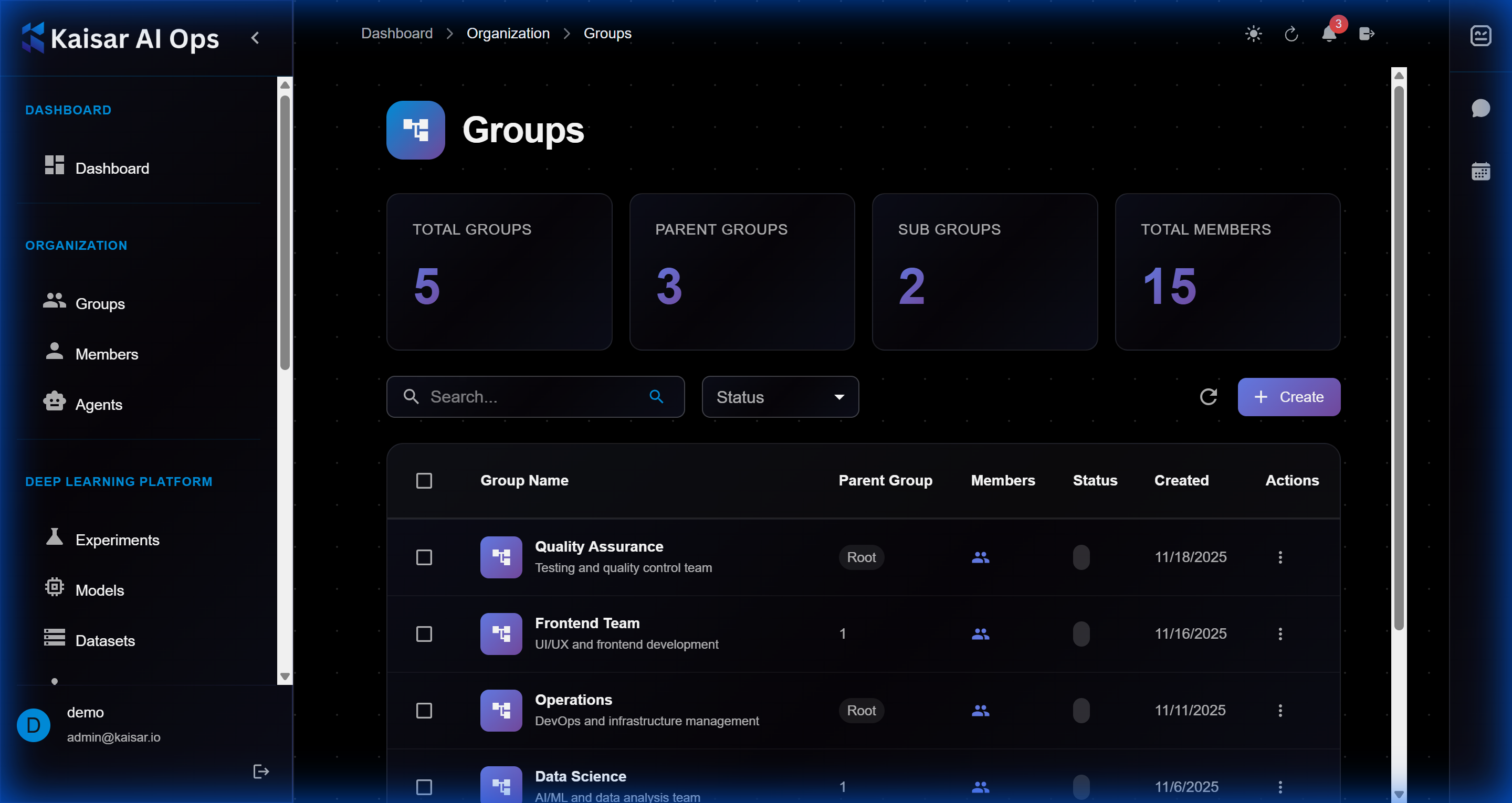

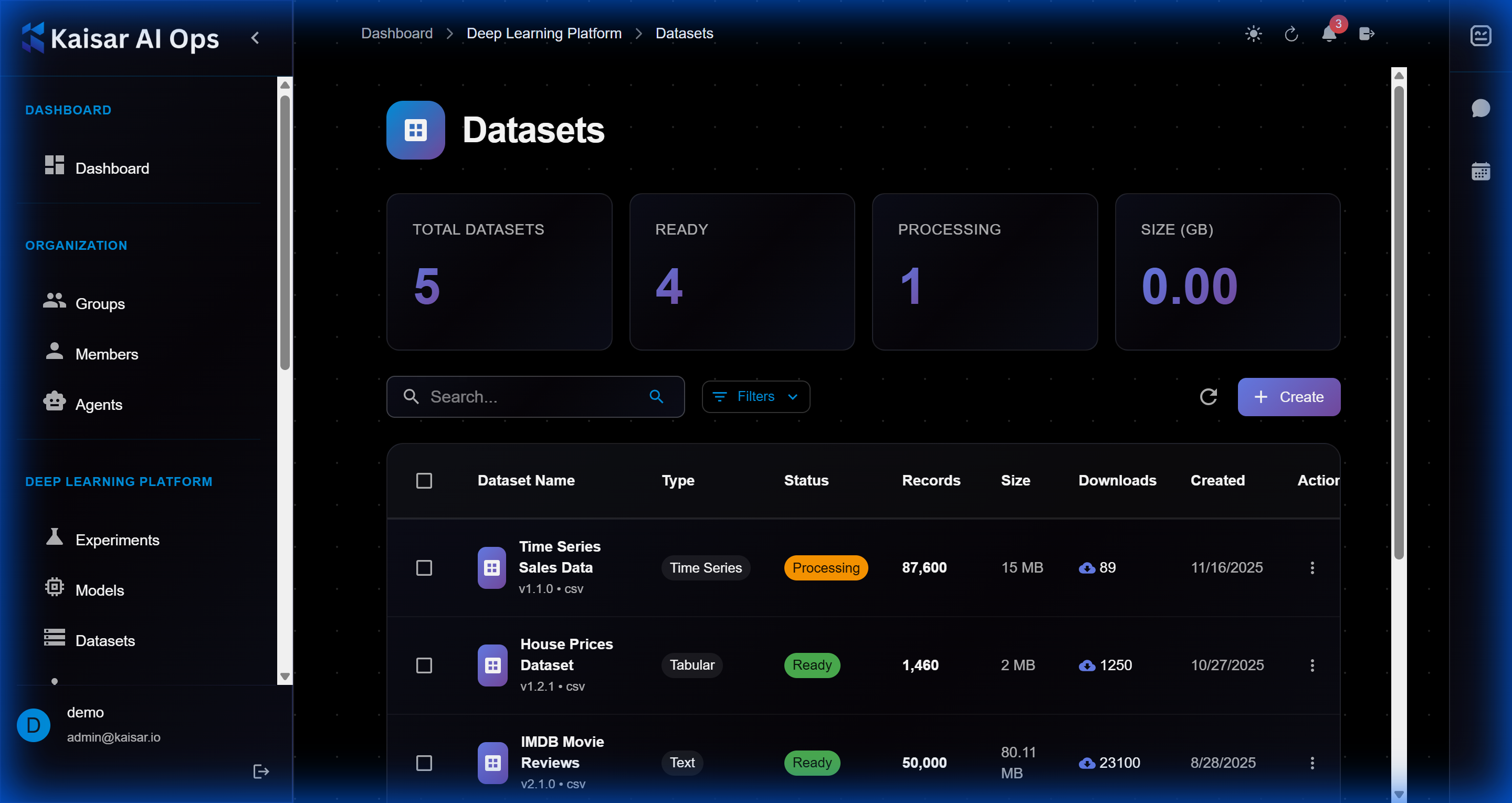

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/datasets.md

# Datasets

Organize and version your training data.

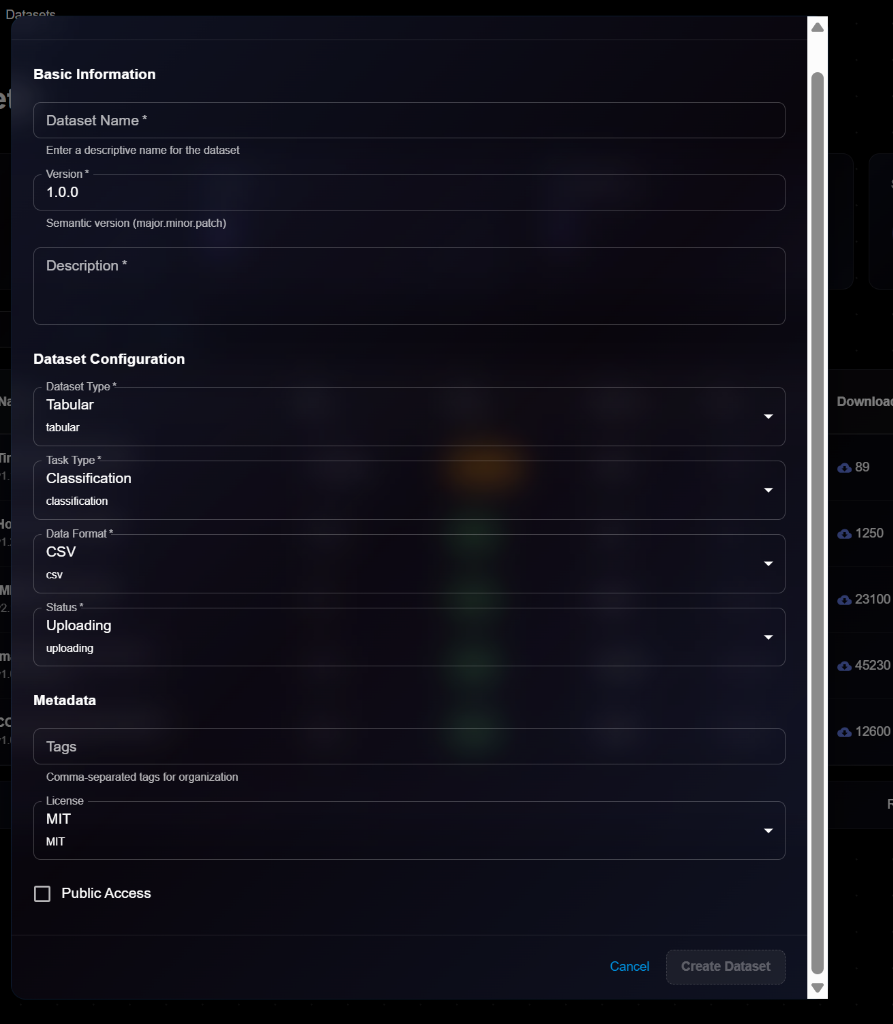

## Creating a Dataset

Navigate to **Deep Learning Platform** → **Datasets** → Click **Create**

### Basic Information

**Dataset Name**\* (Required)

* Enter a descriptive name for the dataset

* Example: `imagenet-1k`, `coco-2017`, `custom-dataset`

**Version**\* (Required)

* Semantic version (major.minor.patch)

* Default: `1.0.0`

* Helper text: "Semantic version (major.minor.patch)"

**Description**\* (Required)

* Detailed description of dataset contents and purpose

### Dataset Configuration

**Dataset Type**\* (Required)

* Select data type from dropdown:

  * Tabular

  * Image

  * Text

  * Audio

  * Video

  * Others

* Default: `tabular`

**Task Type**\* (Required)

* Select task type:

  * Classification

  * Regression

  * Detection

  * Segmentation

  * Others

* Default: `classification`

**Data Format**\* (Required)

* Select file format:

  * CSV

  * JSON

  * Parquet

  * TFRecord

  * Others

* Default: `csv`

**Status**\* (Required)

* Current dataset status:

  * Uploading

  * Processing

  * Ready

  * Failed

* Default: `uploading`

### Metadata

**Tags** (Optional)

* Comma-separated tags for organization

* Example: `computer-vision, training, augmented`

**License** (Required)

* Select license:

  * MIT

  * CC BY 4.0

  * CC0

  * Apache 2.0

  * Proprietary

* Default: `MIT`

**Public Access** (Checkbox)

* Make dataset accessible to all organization members

### Actions

* **Cancel**: Discard and close

* **Create Dataset**: Submit and create the dataset

## Example Configuration

```yaml
Dataset Name: imagenet-subset-2024
Version: 1.0.0
Description: ImageNet subset with 100 classes for quick experimentation
Dataset Type: Image
Task Type: Classification
Data Format: CSV
Status: Uploading
Tags: computer-vision, subset, training
License: MIT
Public Access: ✓
```

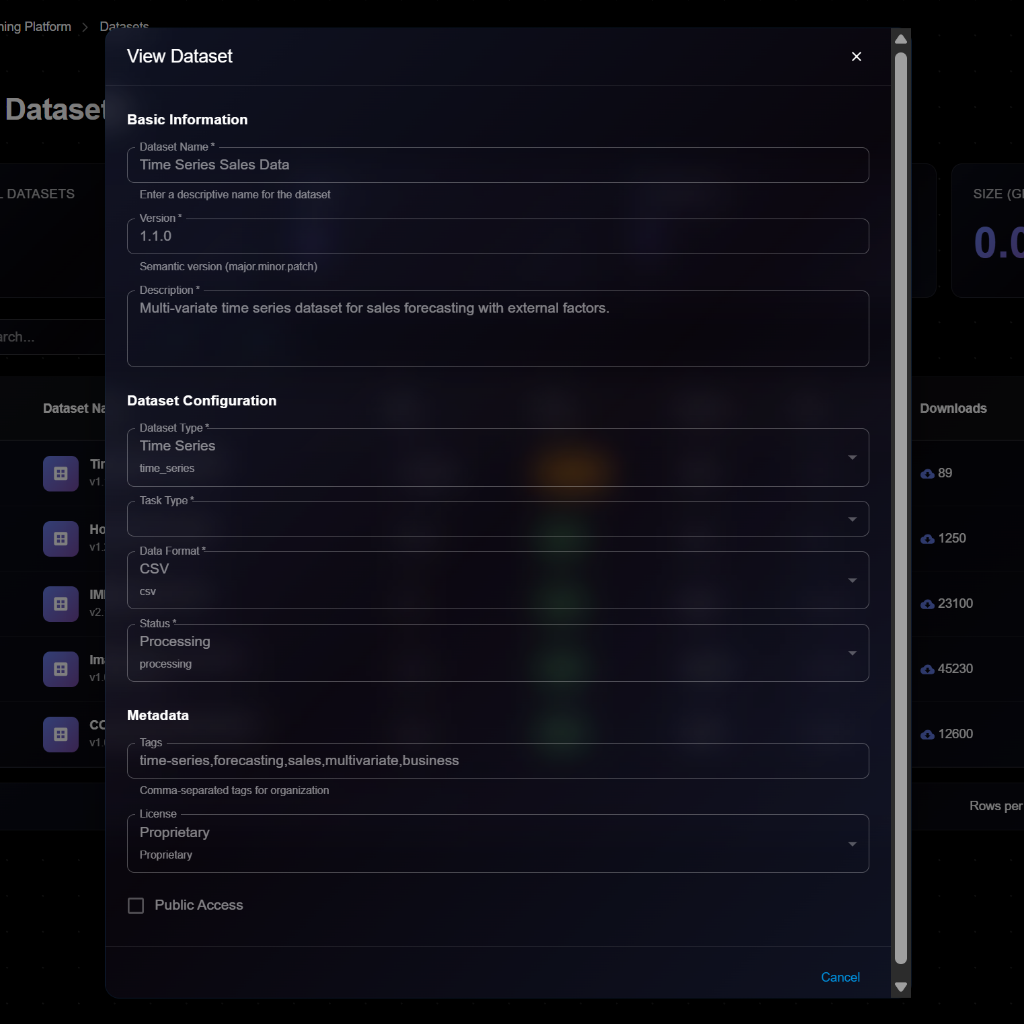

## Viewing Dataset Details

To view detailed information about a dataset:

1. Navigate to **Deep Learning Platform** → **Datasets**

2. Click on a dataset from the list

3. View comprehensive details in the modal dialog

**Details Panel Sections**:

**Basic Information**:

* **Dataset Name**: e.g., "Time Series Sales Data"

* **Version**: Semantic version (e.g., 1.1.0)

* **Description**: Full description of the dataset

**Dataset Configuration**:

* **Dataset Type**: Time Series, Tabular, Image, Text, Audio, Video

* **Task Type**: Forecasting, Classification, Regression, etc.

* **Data Format**: CSV, JSON, Parquet, TFRecord, etc.

* **Status**: Processing, Uploading, Ready, Failed

**Metadata**:

* **Tags**: Comma-separated tags (e.g., "time-series,forecasting,sales,multivariate,business")

* **License**: Proprietary, MIT, CC BY 4.0, etc.

* **Public Access**: Checkbox for visibility

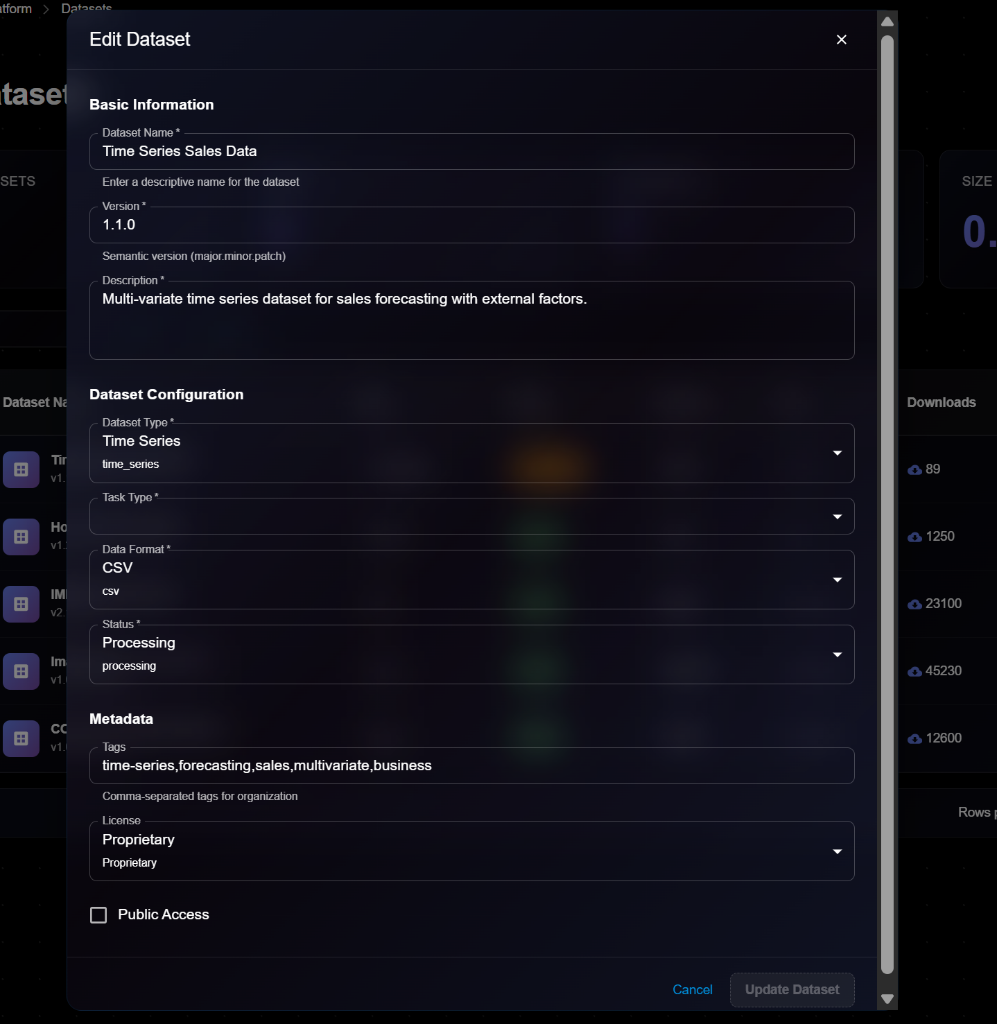

## Editing a Dataset

To update dataset information:

1. Open dataset details page

2. Click **Edit** button (or three-dot menu → Edit)

3. Modify editable fields in the Edit Dataset modal

4. Click **Update Dataset** to save changes

> \[!NOTE] The Edit form is identical to the View form, but with editable fields and an "Update Dataset" button.

**Editable Fields**:

* ✅ Description

* ✅ Tags

* ✅ License

* ✅ Public Access

* ❌ Dataset Name (cannot edit)

* ❌ Version (create new version instead)

* ❌ Dataset Type (cannot edit)

* ❌ Data Format (cannot edit)

## Downloading Dataset

To download dataset files:

1. Open dataset details

2. Click **Download** button

3. Select download format:

   * Original format

   * Compressed archive

   * Specific splits (train/val/test)

4. Download will start

**Download Options**:

* Full dataset

* Specific splits only

* Sample subset

* Metadata only

## Creating a New Version

To create a new version of a dataset:

1. Open dataset details

2. Click **New Version** button

3. Enter new version number

4. Upload updated data

5. Document changes in description

6. Click **Create**

**When to Version**:

* Added new samples

* Fixed data errors

* Changed preprocessing

* Updated annotations

* Removed corrupted files
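
Because dataset versions follow the `major.minor.patch` scheme, picking the next version number can be made mechanical. The Python sketch below is an illustration only: `bump_version` is a hypothetical helper, and which kind of change maps to which part is a team convention this page does not prescribe (for example, one might use a patch bump for fixing data errors and a minor bump for adding samples).

```python
def bump_version(version: str, part: str) -> str:
    """Return the next semantic version after bumping 'major', 'minor' or 'patch'."""
    major, minor, patch = (int(piece) for piece in version.split("."))
    if part == "major":
        return f"{major + 1}.0.0"
    if part == "minor":
        return f"{major}.{minor + 1}.0"
    if part == "patch":
        return f"{major}.{minor}.{patch + 1}"
    raise ValueError(f"unknown version part: {part!r}")


print(bump_version("1.0.0", "patch"))
print(bump_version("1.0.1", "minor"))
print(bump_version("1.1.0", "major"))
```

Bumping a higher part resets the lower parts to zero, which keeps version ordering unambiguous when several versions of the same dataset exist side by side.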

## Deleting a Dataset

To remove a dataset:

1. Navigate to dataset details

2. Click **Delete** button

3. Confirm deletion

> \[!WARNING] You cannot delete a dataset that is being used by running experiments. Stop experiments first.

**Before Deleting**:

* Check for experiments using this dataset

* Download data if needed

* Update documentation

* Consider archiving instead

## Dataset Versioning

**When to Create New Version**:

* Added new samples

* Fixed annotation errors

* Changed preprocessing

* Updated splits

* Removed corrupted data

## Best Practices

**Organization**:

* Use consistent naming conventions

* Document data collection process

* Include data cards/datasheets

* Provide sample data for preview

**Quality Control**:

* Validate data integrity

* Check for label errors

* Monitor class balance

* Document known issues
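
Monitoring class balance can start with a simple count of labels. The following Python sketch assumes labels are available as a plain list of strings; `class_balance`, `imbalance_ratio`, and the sample labels are hypothetical names and data used only for illustration.

```python
from collections import Counter


def class_balance(labels: list[str]) -> dict[str, float]:
    """Return the fraction of samples belonging to each class."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = len(labels)
    return {label: count / total for label, count in counts.items()}


def imbalance_ratio(labels: list[str]) -> float:
    """Return (largest class size) / (smallest class size); 1.0 is perfectly balanced."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    return max(counts.values()) / min(counts.values())


labels = ["cat"] * 6 + ["dog"] * 3 + ["bird"] * 1
print(class_balance(labels))
print(imbalance_ratio(labels))
```

A high ratio is not an error in itself, but it is worth recording under "Document known issues" so that downstream experiments can account for it.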

## Next Steps

* Use dataset in [Experiments](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/experiments)

* Track data lineage

* Monitor usage in [Analytics](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/analytics)

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/deployments.md

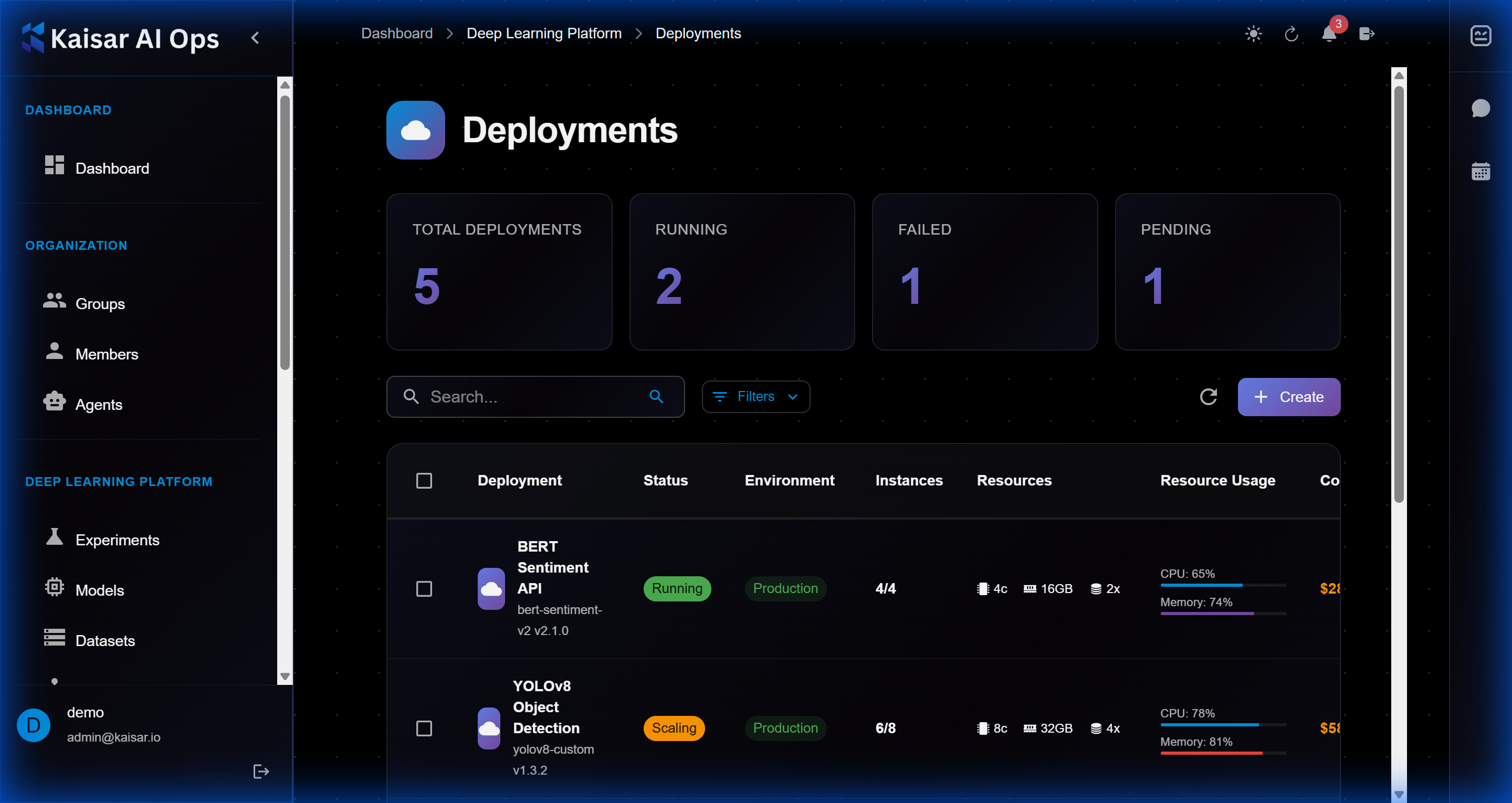

# Deployments

Deploy your models to production with auto-scaling and load balancing.

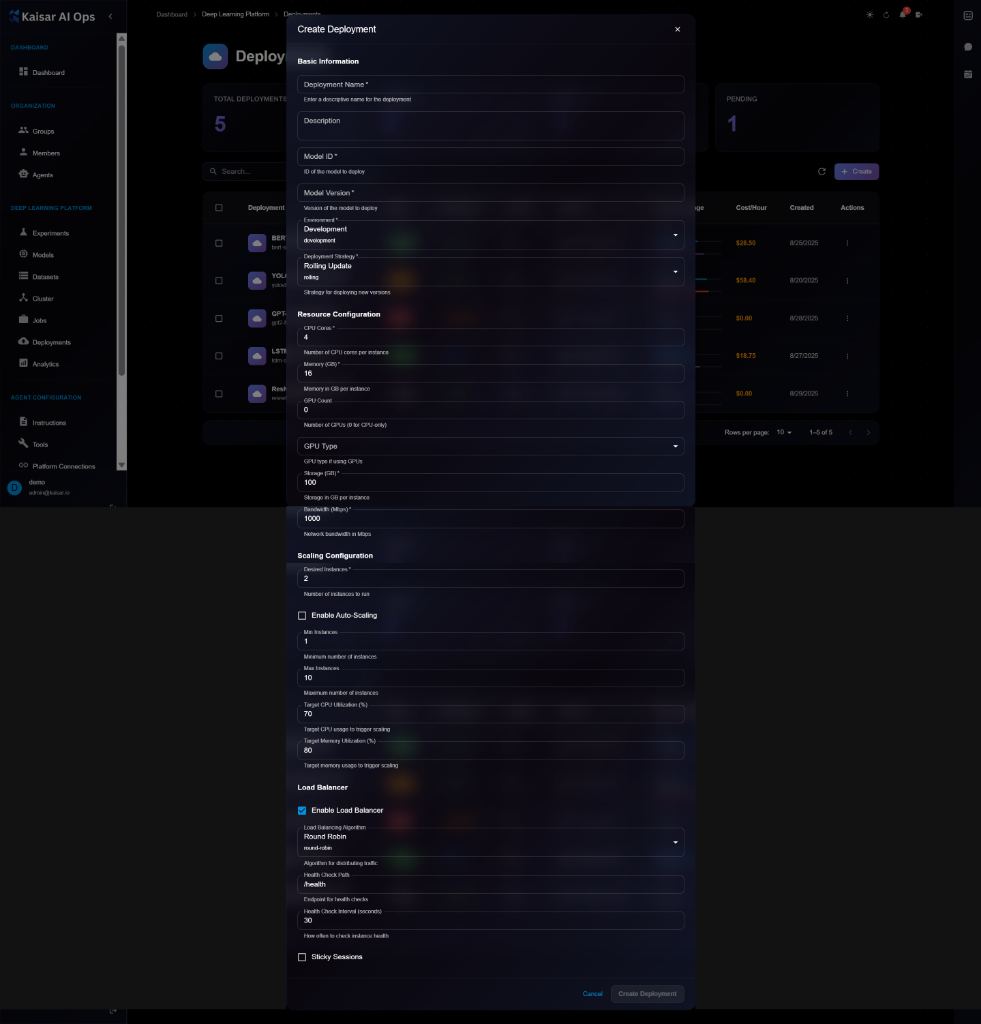

## Creating a Deployment

Navigate to **Deployments** → Click **Create**

### Basic Information

**Deployment Name**\* (Required)

* Enter a descriptive name for the deployment

* Example: `resnet-prod`, `bert-api-v1`

**Description** (Optional)

* Deployment purpose and details

**Model ID**\* (Required)

* ID of the model to deploy

* Helper text: "ID of the model to deploy"

**Model Version**\* (Required)

* Version of the model to deploy

* Helper text: "Version of the model to deploy"

**Environment**\* (Required)

* Select deployment environment:

  * Development

  * Staging

  * Production

* Default: `development`

### Resource Configuration

**CPU Cores**\* (Required)

* Number of CPU cores per instance

* Example: `4`, `8`, `16`

**Memory (GB)**\* (Required)

* Memory allocation per instance in GB

* Example: `8`, `16`, `32`

**GPU Count** (Optional)

* Number of GPUs per instance (0 for CPU-only deployments)

* Default: `0`

**GPU Type** (Optional)

* Select GPU type if GPU Count > 0:

  * NVIDIA T4

  * NVIDIA V100

  * NVIDIA A100

**Min Replicas**\* (Required)

* Minimum number of instances

* Example: `1`, `2`

**Max Replicas**\* (Required)

* Maximum number of instances

* Example: `10`, `20`

**Target CPU Utilization (%)**\* (Required)

* CPU threshold for scaling

* Example: `70`, `80`

**Target Memory Utilization (%)**\* (Required)

* Memory threshold for scaling

* Example: `80`, `90`

### Scaling Configuration

**Enable Auto-Scaling** (Checkbox)

* Enable automatic scaling based on metrics

When enabled:

* **Min Instances**\*: Minimum instances to maintain

* **Max Instances**\*: Maximum instances allowed

* **Target GPU Utilization (%)**: GPU threshold

* **Target Memory Utilization (%)**: Memory threshold
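
To make the interaction between target utilization and the min/max bounds concrete, here is a minimal Python sketch of proportional scaling. It uses the rule applied by the Kubernetes Horizontal Pod Autoscaler (desired = ceil(current × observed / target)); whether Kaisar AI Ops uses the same rule is an assumption, and `desired_replicas` is a hypothetical helper, not a platform function.

```python
import math


def desired_replicas(current: int, observed_pct: float, target_pct: float,
                     min_replicas: int, max_replicas: int) -> int:
    """Proportional scaling, clamped to the configured replica bounds."""
    if current < 1:
        raise ValueError("current replica count must be at least 1")
    if target_pct <= 0:
        raise ValueError("target utilization must be positive")
    # Scale the replica count by how far observed usage is from the target.
    raw = math.ceil(current * observed_pct / target_pct)
    # Never go below Min Instances or above Max Instances.
    return max(min_replicas, min(max_replicas, raw))


# 2 instances at 90% CPU with a 70% target: scale out.
print(desired_replicas(2, 90, 70, min_replicas=2, max_replicas=10))
# 4 instances at 35% CPU with a 70% target: scale in.
print(desired_replicas(4, 35, 70, min_replicas=2, max_replicas=10))
# 8 instances at 140% of target load: capped by Max Instances.
print(desired_replicas(8, 140, 70, min_replicas=2, max_replicas=10))
```

A lower target leaves more headroom per instance at the cost of running more instances; a higher target does the opposite.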

### Load Balancer

**Enable Load Balancer** (Checkbox)

* Enable load balancing across instances

When enabled:

* **Service Type**\*: Round Robin, Least Connections, IP Hash

* **Health Check URL**\*: Endpoint for health checks (e.g., `/health`)

* **Health Check Interval (seconds)**\*: Frequency of health checks

* **Sticky Sessions**: Enable session affinity
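
The three balancing options differ in how they choose an instance for each request. The Python sketch below shows the general idea behind each one; it is a simplified illustration with hypothetical names (`RoundRobin`, `least_connections`, `ip_hash`) and says nothing about how the platform implements them internally.

```python
import hashlib
from itertools import cycle


class RoundRobin:
    """Hand out backends in a fixed rotating order."""

    def __init__(self, backends: list[str]) -> None:
        self._rotation = cycle(backends)

    def pick(self) -> str:
        return next(self._rotation)


def least_connections(active: dict[str, int]) -> str:
    """Pick the backend that currently has the fewest open connections."""
    return min(active, key=active.get)


def ip_hash(client_ip: str, backends: list[str]) -> str:
    """Map a client IP to a backend so the same client keeps hitting the same one."""
    digest = hashlib.sha256(client_ip.encode("utf-8")).digest()
    return backends[int.from_bytes(digest[:8], "big") % len(backends)]


backends = ["instance-a", "instance-b", "instance-c"]
rr = RoundRobin(backends)
print([rr.pick() for _ in range(4)])
print(least_connections({"instance-a": 5, "instance-b": 2, "instance-c": 7}))
print(ip_hash("10.0.0.7", backends) == ip_hash("10.0.0.7", backends))
```

IP Hash gives a form of session affinity without extra state, as long as the set of instances does not change; when instances are added or removed, most clients are remapped.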

### Actions

* **Cancel**: Discard and close

* **Create Deployment**: Submit and create the deployment

## Example Configuration

```yaml
Deployment Name: resnet-production-v1
Model ID: model_abc123
Model Version: 1.0.0
Environment: Production

Resource Configuration:
  CPU Cores: 4
  Memory (GB): 16
  GPU Count: 0
  Min Replicas: 2
  Max Replicas: 10

Scaling:
  Enable Auto-Scaling: ✓
  Min Instances: 2
  Max Instances: 10
  Target CPU: 70%

Load Balancer:
  Enable: ✓
  Service Type: Round Robin
  Health Check URL: /health
  Health Check Interval: 30s
  Sticky Sessions: ✓
```

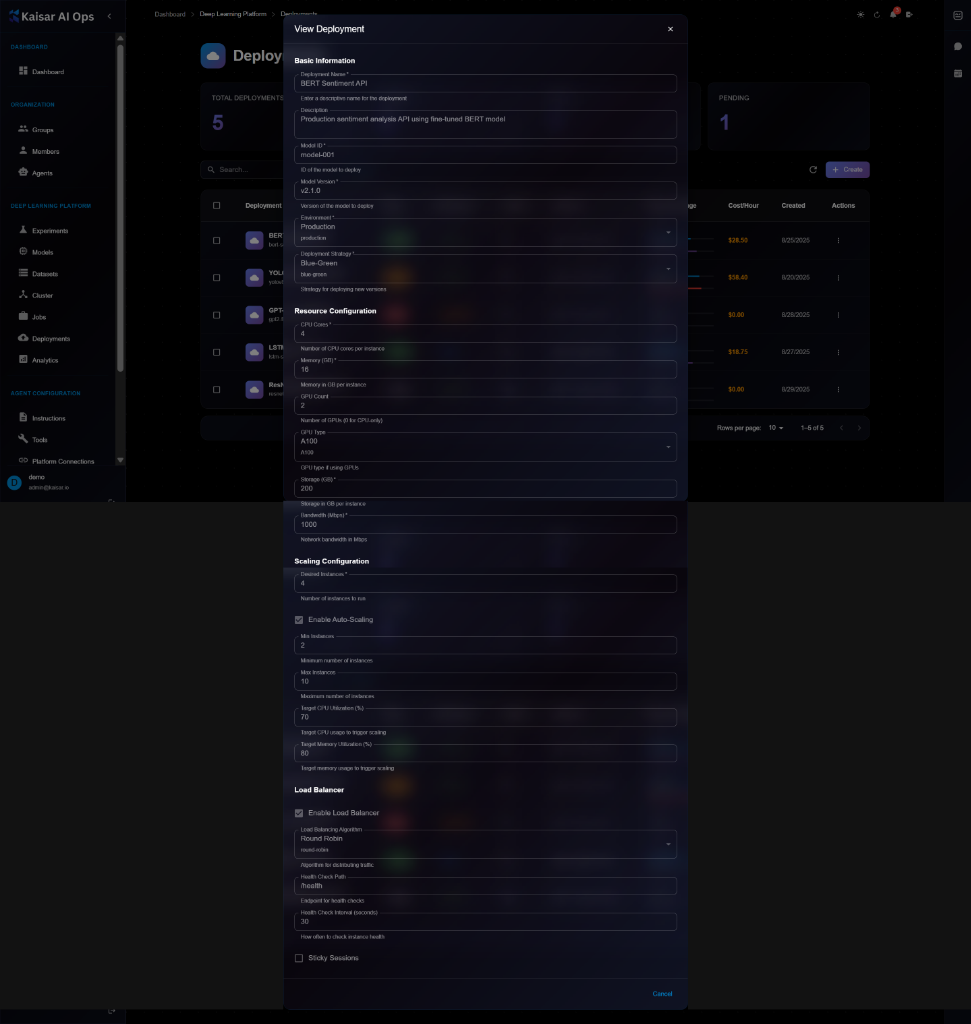

## Viewing Deployment Details

To view detailed information about a deployment:

1. Navigate to **Deployments**

2. Click on a deployment from the list

3. View comprehensive details in the modal dialog

**Details Panel Sections**:

**Basic Information**:

* **Deployment Name**: e.g., "BERT Sentiment API"

* **Description**: Full description of the deployment

* **Model ID**: ID of the deployed model (e.g., "model-001")

* **Model Version**: Version being deployed (e.g., "v2.1.0")

* **Environment**: Production, Staging, or Development

* **Deployment Strategy**: Blue-Green, Rolling Update, Canary

**Resource Configuration**:

* **CPU Cores**: Number of CPU cores per instance (e.g., 4)

* **Memory (GB)**: Memory allocation (e.g., 16 GB)

* **GPU Count**: Number of GPUs (e.g., 2)

* **GPU Type**: GPU model (e.g., A100, V100)

* **Storage (GB)**: Storage allocation (e.g., 200 GB)

* **Network Bandwidth (Mbps)**: Network bandwidth (e.g., 1000 Mbps)

**Scaling Configuration**:

* **Current Instances**: Number of running instances (e.g., 2)

* **Enable Auto-Scaling**: Checkbox status

* **Min Instances**: Minimum replicas (e.g., 2)

* **Max Instances**: Maximum replicas (e.g., 10)

* **Target CPU Utilization (%)**: CPU scaling threshold (e.g., 70%)

* **Target GPU usage for trigger scaling**: GPU threshold

* **Target Memory Utilization (%)**: Memory threshold (e.g., 80%)

* **Target memory usage for trigger scaling**: Memory threshold

**Load Balancer**:

* **Enable Load Balancer**: Checkbox status

* **Load Balancing Algorithm**: Round Robin, Least Connections, IP Hash

* **Health Check Path**: Endpoint for health checks (e.g., "/health")

* **Health Check Interval (seconds)**: Check frequency (e.g., 30)

* **Sticky Sessions**: Checkbox for session affinity

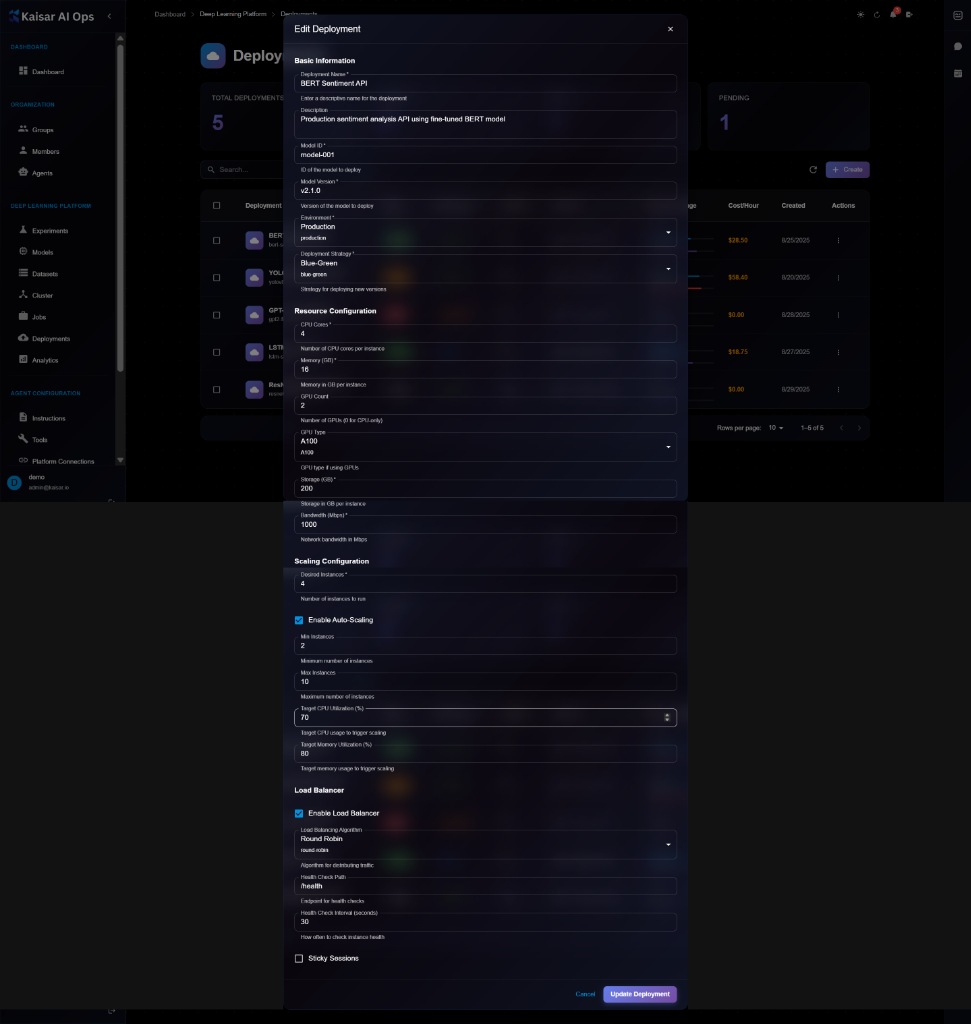

## Editing a Deployment

To update deployment configuration:

1. Open deployment details page

2. Click **Edit** button (or three-dot menu → Edit)

3. Modify editable fields in the Edit Deployment modal

4. Click **Update Deployment** to save changes

> \[!NOTE] The Edit form is identical to the View form, but with editable fields and an "Update Deployment" button. Some changes may require a deployment restart.

**Editable Fields**:

* ✅ Description

* ✅ Environment variables

* ✅ Min/Max replicas

* ✅ Auto-scaling thresholds

* ✅ Health check settings

* ✅ Load balancer configuration

* ⚠️ CPU/Memory (requires restart)

* ❌ Model ID (use Update Model instead)

* ❌ Deployment name (cannot edit)

## Updating Model Version

To deploy a new model version:

1. Open deployment details

2. Click **Update Model** button

3. Select new model version

4. Choose update strategy:

   * **Rolling Update**: Gradual replacement (zero downtime)

   * **Blue-Green**: Switch all at once

   * **Canary**: Test with small percentage first

5. Click **Update**

**Update Strategies**:

**Rolling Update** (Recommended):

* Gradually replaces old instances

* Zero downtime

* Automatic rollback on failure

**Blue-Green**:

* Deploys new version alongside old

* Switches traffic all at once

* Quick rollback possible

**Canary**:

* Routes small % of traffic to new version

* Monitor performance

* Gradually increase if successful
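
The canary strategy comes down to sending a fixed share of requests to the new version. One common way to do that deterministically is to hash a request or user identifier into a bucket from 0 to 99, as in the Python sketch below. This is an assumption-laden illustration: `route` and the identifier format are hypothetical, and the platform's actual traffic-splitting mechanism is not described on this page.

```python
import hashlib


def route(request_id: str, canary_percent: int) -> str:
    """Send roughly `canary_percent` of requests to the canary, the rest to stable."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    digest = hashlib.sha256(request_id.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:4], "big") % 100  # stable bucket in 0..99
    return "canary" if bucket < canary_percent else "stable"


# Start at 10% and count how many of 10,000 synthetic requests hit the canary.
hits = sum(route(f"req-{i}", 10) == "canary" for i in range(10_000))
print(f"canary share: {hits / 10_000:.1%}")
```

Because the bucket depends only on the identifier, a given caller stays on the same version while the percentage is unchanged, and raising the percentage only moves additional callers onto the canary.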

## Scaling a Deployment

**Manual Scaling**:

1. Open deployment details

2. Click **Scale** button

3. Adjust number of replicas

4. Click **Apply**

**Auto-scaling**:

1. Open deployment details

2. Click **Edit**

3. Enable auto-scaling

4. Set min/max replicas

5. Configure scaling triggers

6. Save changes

## Stopping a Deployment

To temporarily stop a deployment:

1. Open deployment details

2. Click **Stop** button

3. Confirm action

4. All instances will shut down

5. Endpoint will become unavailable

**Use Cases**:

* Maintenance window

* Cost optimization

* Testing in isolation

## Restarting a Deployment

To restart a stopped deployment:

1. Open deployment details

2. Click **Start** button

3. Deployment will resume with previous configuration

## Deleting a Deployment

To permanently remove a deployment:

1. Navigate to deployment details

2. Click **Delete** button

3. Confirm deletion

> \[!WARNING] Deleting a deployment will:
>
> * Shut down all instances
> * Remove the endpoint
> * Delete deployment configuration
>
> This action cannot be undone!

**Before Deleting**:

* Stop sending traffic to the endpoint

* Update client applications

* Export logs if needed

* Verify you have the correct deployment

## Monitoring Deployments

**Real-time Metrics**:

* Request rate

* Latency (p50, p95, p99)

* Error rate

* Resource usage
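
For readers unfamiliar with the p50/p95/p99 notation: pN is the latency below which N% of requests complete. The Python sketch below computes it with the nearest-rank method on a list of samples; `percentile` and the synthetic latencies are hypothetical, and the dashboard may use a different estimation method.

```python
import math


def percentile(samples: list[float], p: float) -> float:
    """Nearest-rank percentile: smallest sample with at least p% of values at or below it."""
    if not samples:
        raise ValueError("samples must not be empty")
    if not 0 < p <= 100:
        raise ValueError("p must be in the range (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(p * len(ordered) / 100)  # 1-based rank
    return ordered[rank - 1]


# Synthetic latencies in milliseconds: 1, 2, ..., 100.
latencies_ms = [float(ms) for ms in range(1, 101)]
for p in (50, 95, 99):
    print(f"p{p}: {percentile(latencies_ms, p)} ms")
```

Tail percentiles such as p99 are more sensitive to slow outliers than the median, which is why they are usually tracked alongside it.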

**Actions**:

* Scale up/down

* Update model version

* View logs

* Rollback

## Best Practices

* Set appropriate min/max replicas

* Configure auto-scaling thresholds

* Enable health checks

* Use load balancing for high traffic

* Monitor performance continuously

## Next Steps

* Monitor in [Analytics](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/analytics)

* View logs and metrics

* Set up alerts

---

# Source: https://docs.kaisar.io/kaisar-network/origins/faq/does-kaisar-use-blockchain-technology.md

# Does Kaisar use blockchain technology?

Kaisar operates on the peaq blockchain, which is optimized for Decentralized Physical Infrastructure Networks. The peaq blockchain supports scalable, decentralized AI/ML model training and provides a secure, cost-effective environment for managing GPU resources.

---

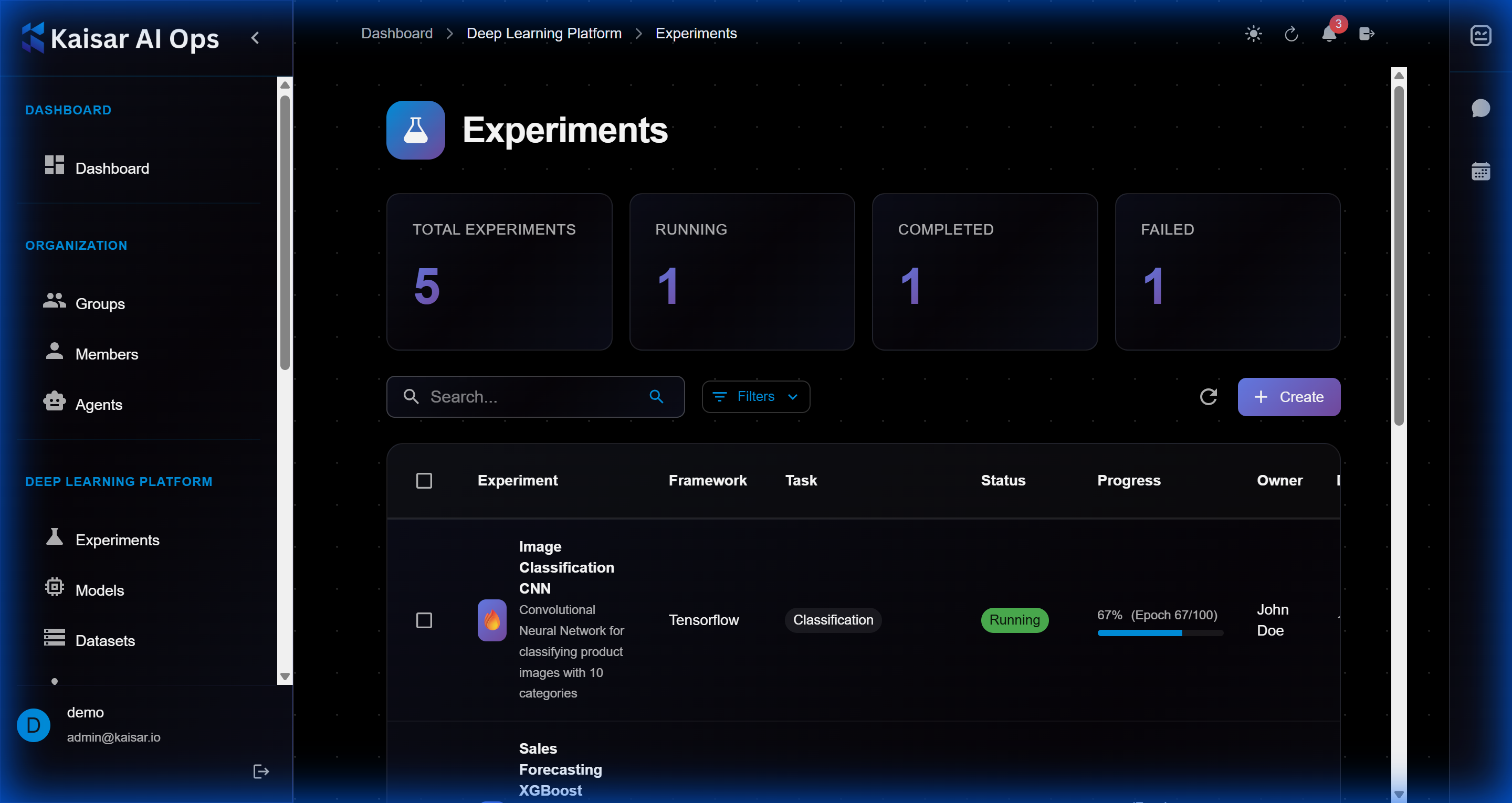

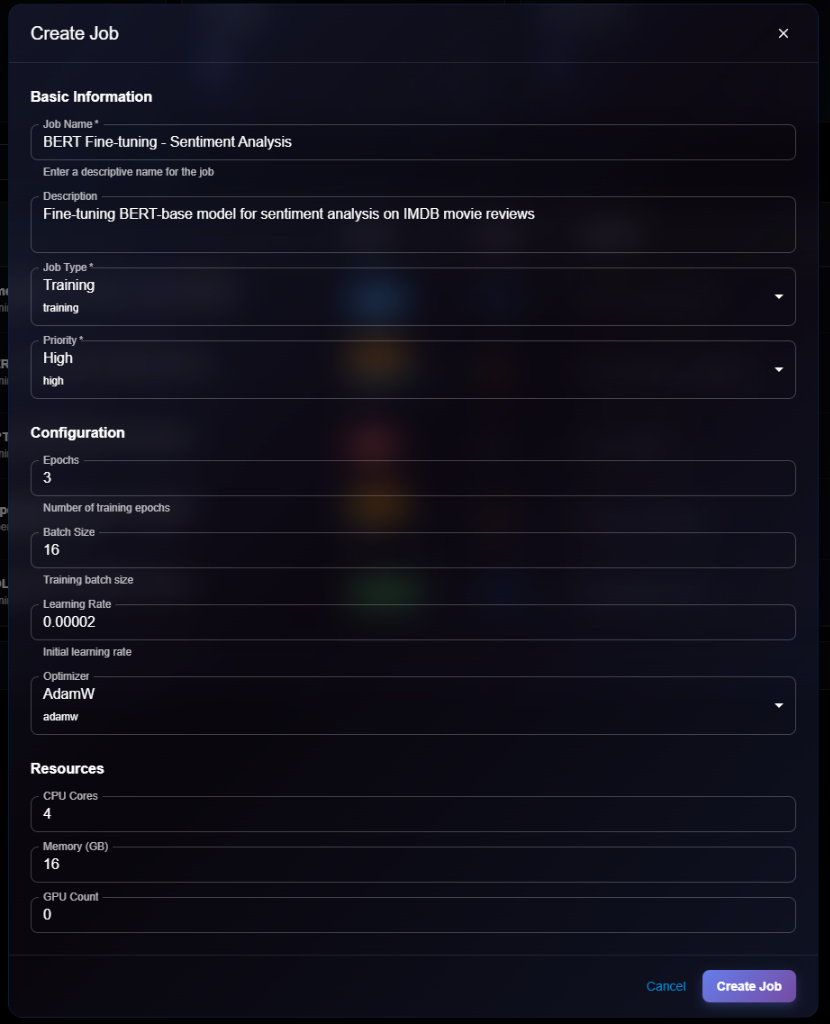

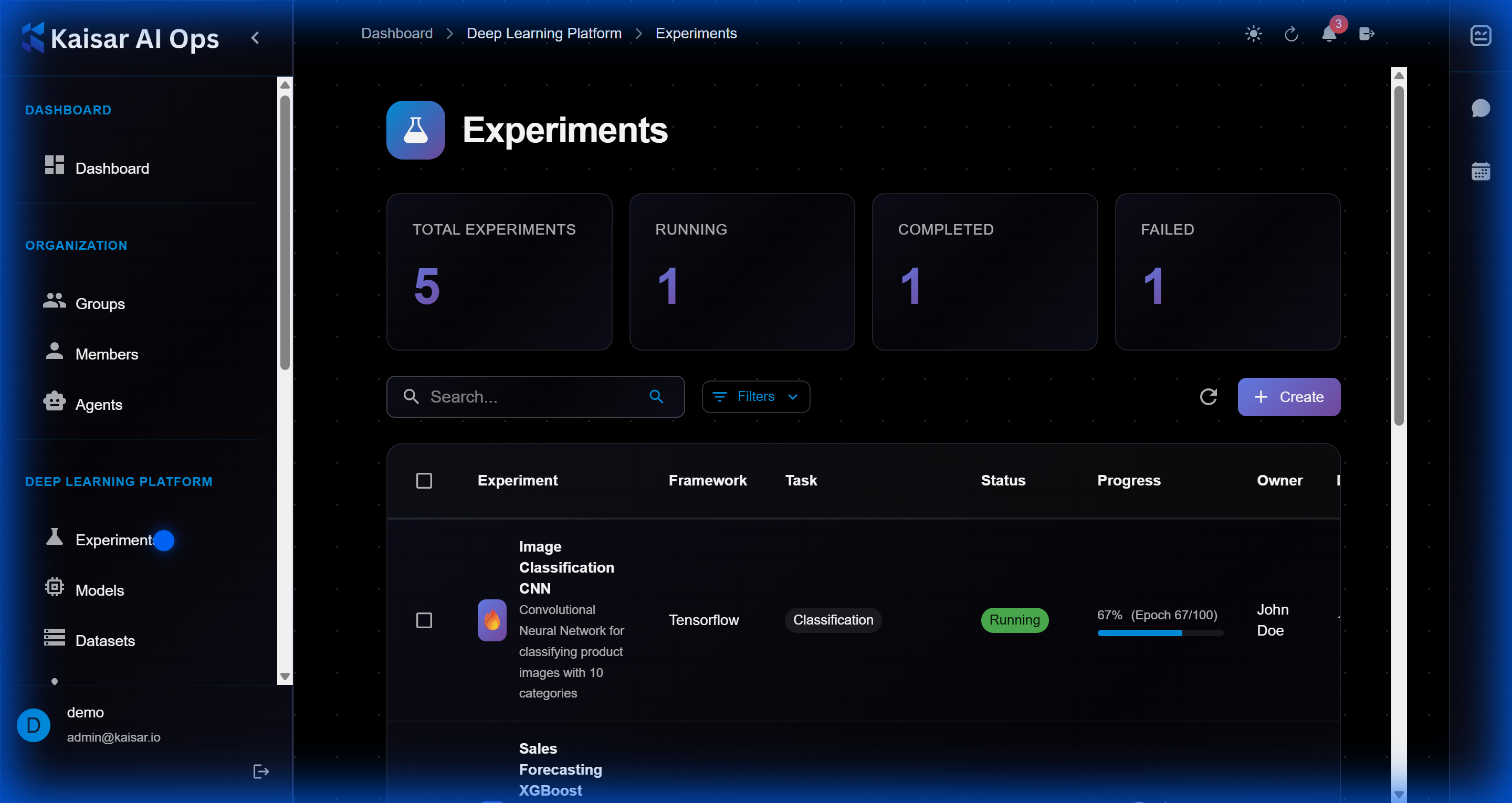

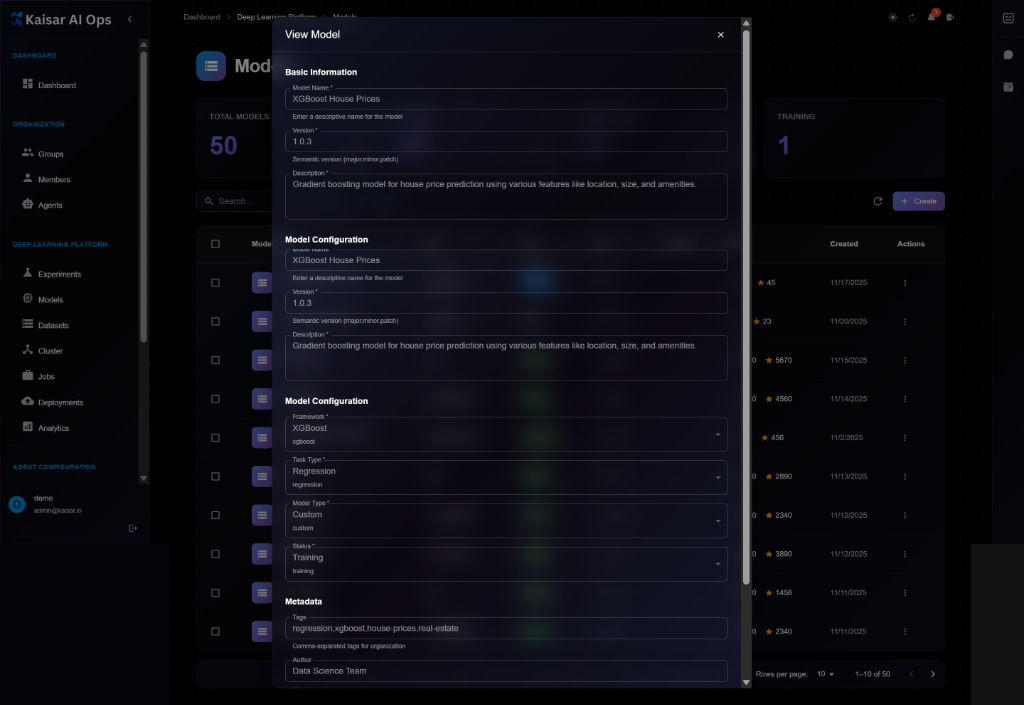

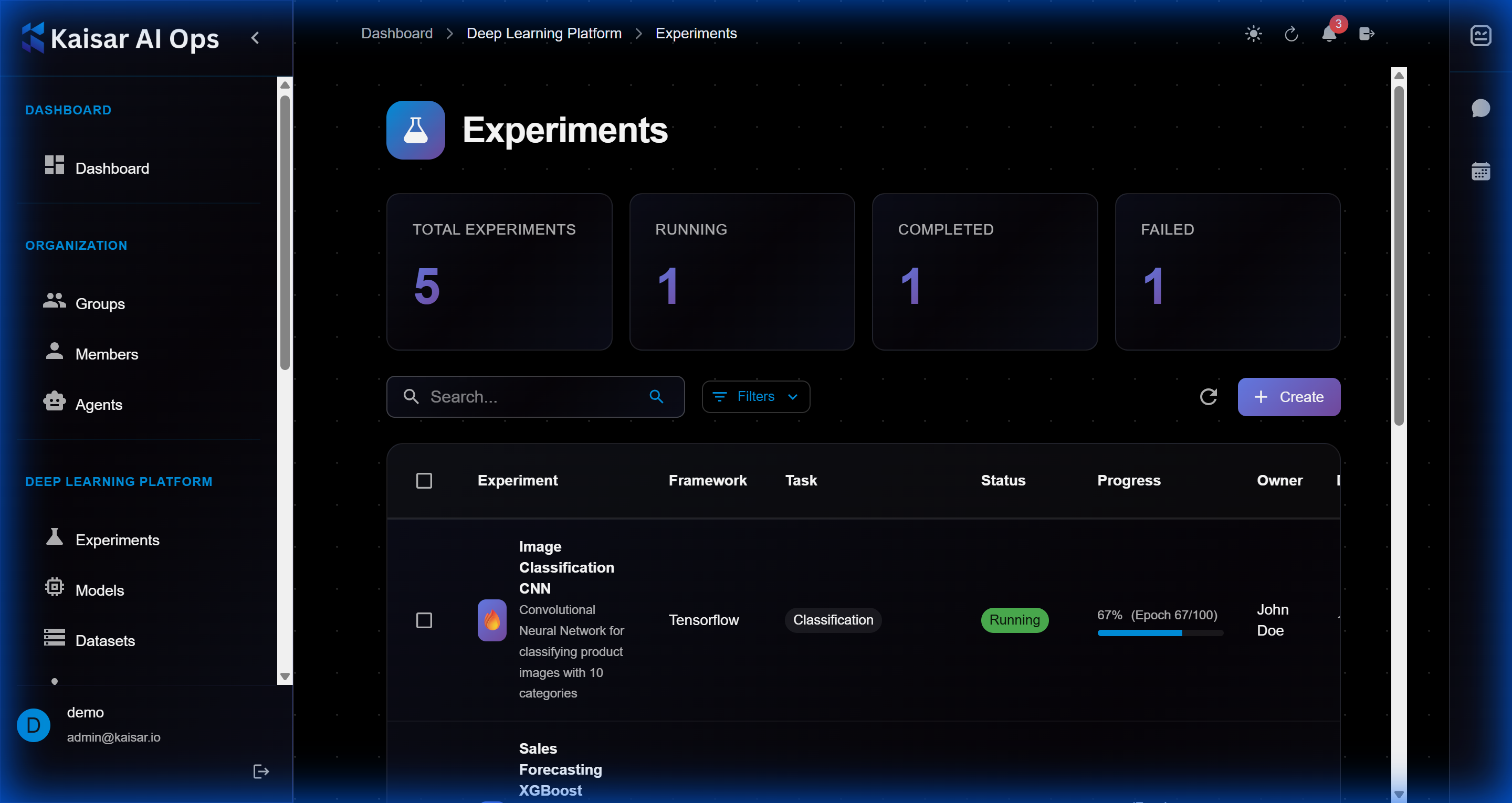

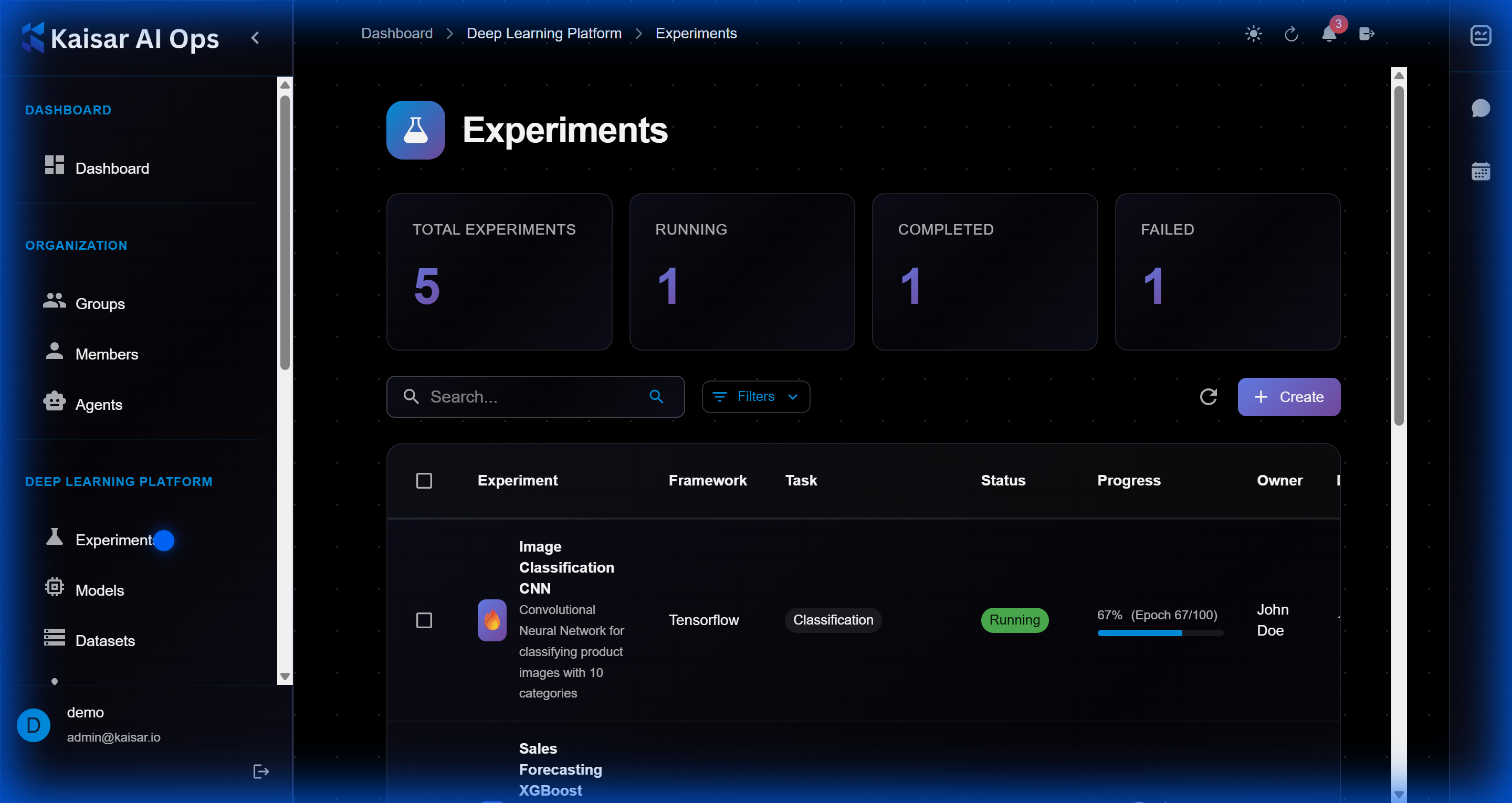

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/experiments.md

# Experiments

Track your machine learning experiments with detailed logging and comparison tools.

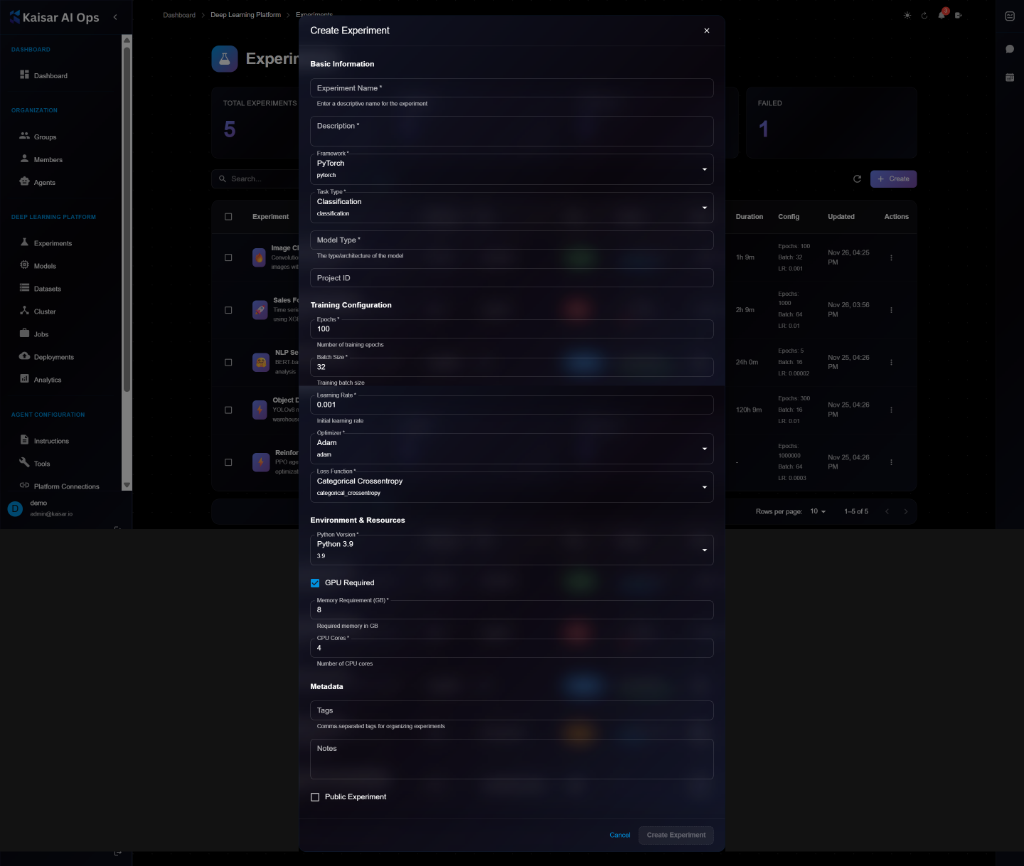

## Creating an Experiment

Navigate to **Deep Learning Platform** → **Experiments** → Click **Create**

### Basic Information

**Experiment Name**\* (Required)

* Enter a descriptive name for the experiment

* Example: `image-classification-resnet`, `nlp-sentiment-bert`

**Description** (Optional)

* Detailed description of experiment purpose and goals

**Framework**\* (Required)

* Select your ML framework from dropdown:

  * PyTorch

  * TensorFlow

  * Scikit-learn

  * Keras

  * Others

* Default: `pytorch`

**Task Type**\* (Required)

* Select the ML task type:

  * Classification

  * Regression

  * Detection

  * Segmentation

  * Others

* Default: `classification`

**Model Type**\* (Required)

* Specify the model architecture

* Examples: ResNet, BERT, YOLO, Custom

**Project ID** (Optional)

* Link experiment to a specific project

### Training Configuration

**Epochs**\* (Required)

* Number of training epochs

* Example: `100`

**Number of training epochs** (Helper text)

* Additional context for epochs setting

**Staging batch size** (Optional)

* Batch size for staging/validation

**Learning Rate**\* (Required)

* Initial learning rate for training

* Example: `0.001`

* Helper text: "Valid learning rate"

**Optimizer**\* (Required)

* Select optimizer from dropdown:

  * Adam

  * SGD

  * RMSprop

  * Others

* Default: `adam`

**Loss Function**\* (Required)

* Select loss function:

  * Categorical Crossentropy

  * Binary Crossentropy

  * MSE

  * Others

* Default: `categorical_crossentropy`

### Environment & Resources

**Python Version**\* (Required)

* Select Python version:

  * Python 3.9

  * Python 3.10

  * Python 3.11

* Default: `python`

**GPU Required** (Checkbox)

* Check if GPU is required for training

**Memory Requirement (GB)**\* (Required)

* Required memory in GB

* Example: `8`, `16`, `32`

**Required memory in GB** (Helper text)

**CPU Cores**\* (Required)

* Number of CPU cores needed

* Example: `4`, `8`, `16`

**Number of CPU cores** (Helper text)

### Metadata

**Tags** (Optional)

* Comma-separated tags for organizing experiments

* Example: `computer-vision, production, baseline`

**Notes** (Optional)

* Additional notes or comments about the experiment

**Public Experiment** (Checkbox)

* Make experiment visible to all organization members

### Actions

* **Cancel**: Discard and close the form

* **Create Experiment**: Submit and create the experiment

## Example Configuration

```yaml
Experiment Name: resnet50-imagenet-baseline
Description: Baseline training of ResNet50 on ImageNet dataset
Framework: PyTorch
Task Type: Classification
Model Type: ResNet50
Epochs: 90
Learning Rate: 0.1
Optimizer: Adam
Loss Function: Categorical Crossentropy
Python Version: Python 3.9
GPU Required: ✓
Memory Requirement: 32 GB
CPU Cores: 16
Tags: computer-vision, classification, baseline
Public Experiment: ✓
```

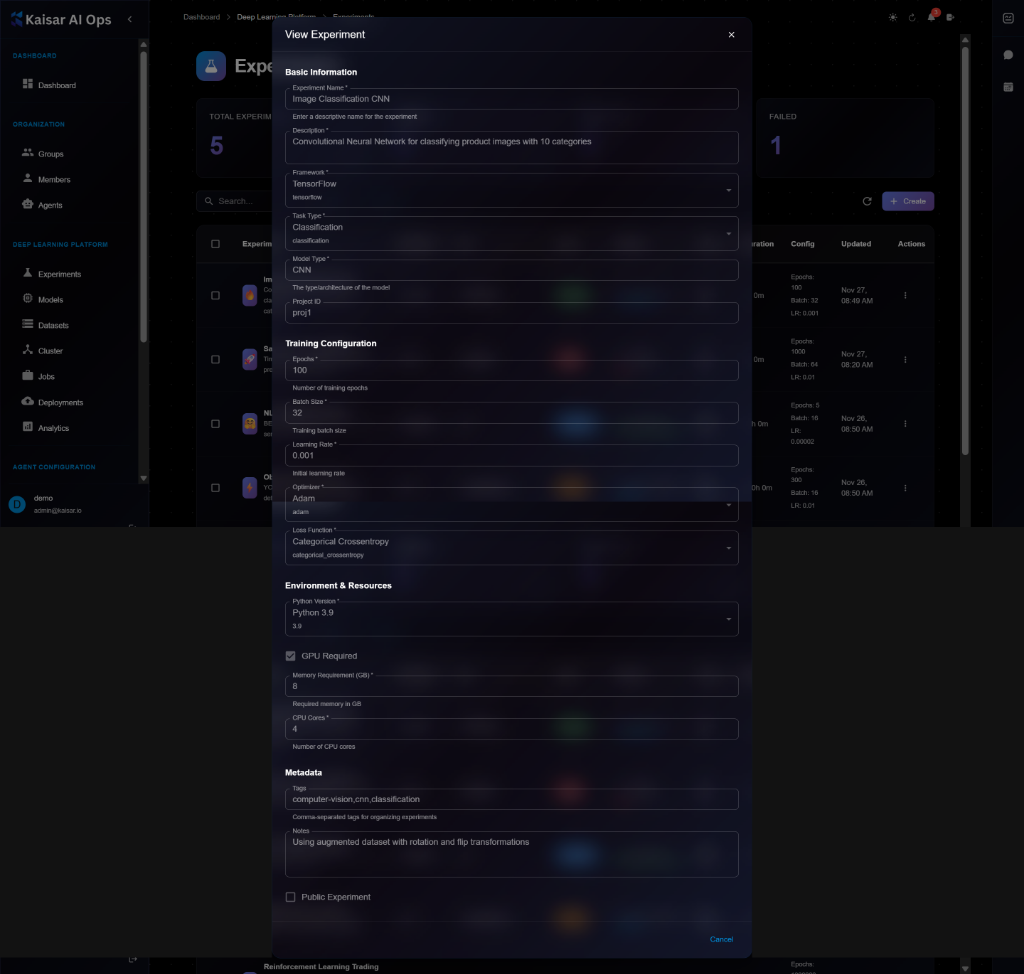

## Viewing Experiment Details

To view detailed information about an experiment:

1. Navigate to **Deep Learning Platform** → **Experiments**

2. Click on an experiment from the list

3. View comprehensive details in the modal dialog

**Details Panel Sections**:

* **Basic Information**:

  * Experiment Name: e.g., "Image Classification CNN"

  * Description: Full description of the experiment

  * Framework: TensorFlow, PyTorch, etc.

  * Task Type: Classification, Regression, etc.

  * Model Type: CNN, ResNet, Custom, etc.

  * Project ID: Associated project

* **Training Configuration**:

  * Epochs: Number of training epochs (e.g., 100)

  * Batch Size: Training batch size (e.g., 32)

  * Learning Rate: Initial learning rate (e.g., 0.001)

  * Optimizer: Adam, SGD, etc.

  * Loss Function: Categorical Crossentropy, MSE, etc.

* **Environment & Resources**:

  * Python Version: e.g., Python 3.9

  * GPU Required: Checkbox status

  * Memory Requirement (GB): e.g., 8 GB

  * CPU Cores: e.g., 4 cores

* **Metadata**:

  * Tags: Comma-separated tags

  * Notes: Additional notes

  * Public Experiment: Visibility status

  * Creator and timestamps

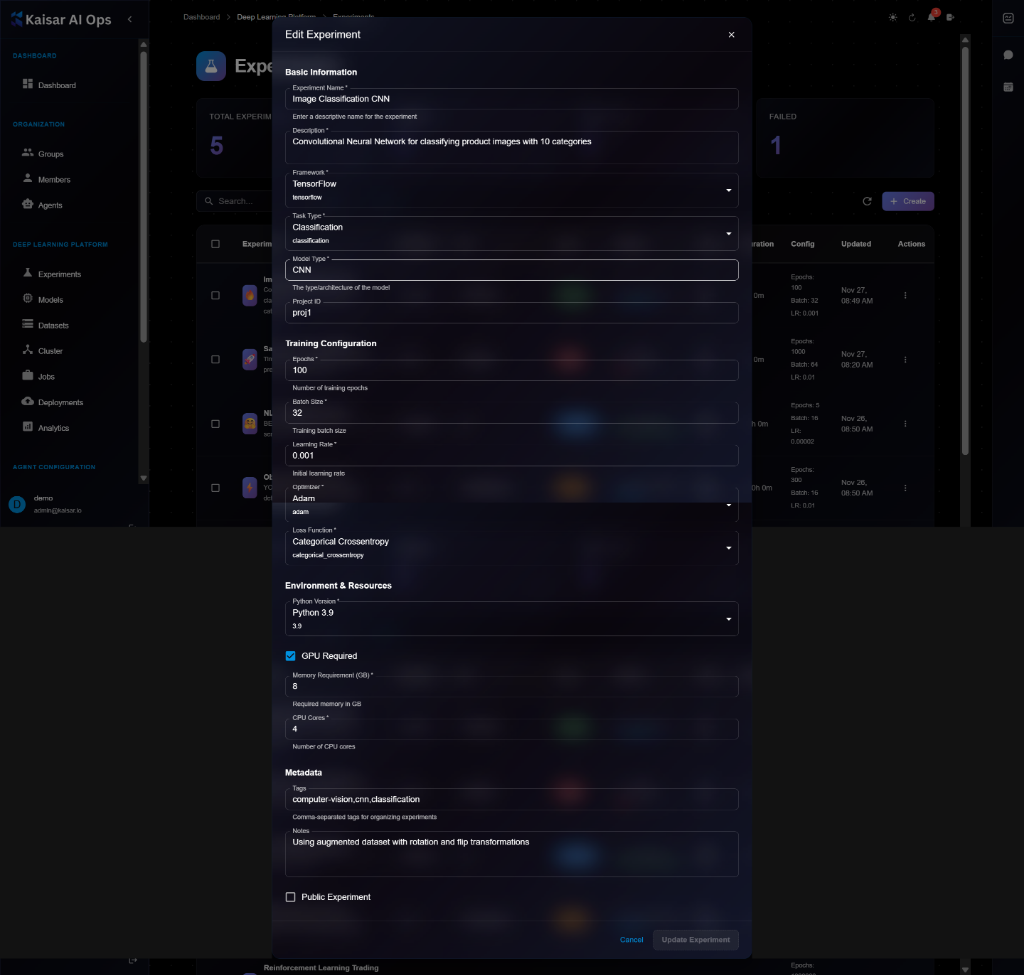

## Editing an Experiment

To modify an experiment configuration:

1. Navigate to the experiment details page

2. Click the **Edit** button (or three-dot menu → Edit)

3. Modify editable fields in the Edit Experiment modal

4. Click **Update Experiment** to save changes

> \[!NOTE] The Edit form looks very similar to the View form, but fields become editable and you'll see an "Update Experiment" button instead of just "Cancel".

> \[!NOTE] You cannot edit core configuration (framework, resources, hyperparameters) of a running or completed experiment. To try different settings, clone the experiment instead.

**Editable Fields**:

* ✅ Description

* ✅ Tags

* ✅ Notes

* ✅ Public/Private status

* ❌ Framework (cannot edit)

* ❌ Resources (cannot edit while running)

* ❌ Hyperparameters (cannot edit)
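The editable/non-editable split above can be expressed as a simple allow-list. The sketch below is illustrative only: the field keys (`description`, `tags`, `notes`, `public`) and the `split_update` helper are hypothetical names, not part of any documented Kaisar interface.

```python
from typing import Any, Dict, List, Tuple

# Assumed keys for the fields marked editable in the list above.
EDITABLE_FIELDS = {"description", "tags", "notes", "public"}


def split_update(changes: Dict[str, Any]) -> Tuple[Dict[str, Any], List[str]]:
    """Split requested changes into those that may be applied and those rejected."""
    allowed = {key: value for key, value in changes.items() if key in EDITABLE_FIELDS}
    rejected = sorted(key for key in changes if key not in EDITABLE_FIELDS)
    return allowed, rejected


allowed, rejected = split_update(
    {"description": "Baseline rerun", "framework": "TensorFlow", "learning_rate": 0.01}
)
print("apply:", allowed)
print("clone the experiment to change:", rejected)
```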

## Cloning an Experiment

To create a copy of an experiment with modified settings:

1. Open experiment details

2. Click the **Clone** button

3. Modify configuration as needed

4. Give it a new name

5. Click **Create Experiment**

**Use Cases**:

* Try different hyperparameters

* Run with more/less resources

* Test on different datasets

* Reproduce results

## Deleting an Experiment

To remove an experiment:

1. Navigate to experiment details or list

2. Click the **Delete** button (trash icon)

3. Confirm deletion in the dialog

4. Experiment and associated data will be removed

> \[!WARNING] Deleting an experiment will permanently remove:
>
> * Experiment configuration
> * Training logs
> * Metrics and charts
> * Saved checkpoints (unless linked to a registered model)
>
> This action cannot be undone!

**Before Deleting**:

* Export important logs or metrics

* Register any valuable models

* Download artifacts if needed

* Verify you have the correct experiment selected

## Monitoring Experiments

Once submitted, track your experiment:

**Real-time Monitoring**

* View live logs

* Monitor resource utilization (CPU, GPU, memory)

* Track metrics as they're logged

* Receive alerts on failures

**Experiment Status**

* **Pending**: Waiting for resources

* **Running**: Currently executing

* **Completed**: Finished successfully

* **Failed**: Encountered an error

* **Stopped**: Manually stopped

* **Cancelled**: Cancelled before starting
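The status values listed above can be modeled as an enumeration when scripting around experiment state. This is a sketch under stated assumptions: the `ExperimentStatus` enum and helper functions are hypothetical, and treating Completed, Failed, Stopped, and Cancelled as final states is inferred from their descriptions rather than confirmed by the platform.

```python
from enum import Enum


class ExperimentStatus(Enum):
    PENDING = "Pending"      # Waiting for resources
    RUNNING = "Running"      # Currently executing
    COMPLETED = "Completed"  # Finished successfully
    FAILED = "Failed"        # Encountered an error
    STOPPED = "Stopped"      # Manually stopped
    CANCELLED = "Cancelled"  # Cancelled before starting


# Assumption: these states never change again once reached.
TERMINAL_STATUSES = frozenset(
    {
        ExperimentStatus.COMPLETED,
        ExperimentStatus.FAILED,
        ExperimentStatus.STOPPED,
        ExperimentStatus.CANCELLED,
    }
)


def is_terminal(status: ExperimentStatus) -> bool:
    """True if the experiment is no longer pending or running."""
    return status in TERMINAL_STATUSES


def can_stop(status: ExperimentStatus) -> bool:
    """Stop is described as terminating a running experiment."""
    return status is ExperimentStatus.RUNNING


print(is_terminal(ExperimentStatus("Failed")), can_stop(ExperimentStatus("Running")))
```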

**Actions Available**

* **View Logs**: See stdout/stderr

* **View Metrics**: Charts and graphs

* **Stop**: Terminate running experiment

* **Clone**: Create a copy with same config

* **Compare**: Compare with other experiments

* **Export**: Download results and artifacts

## Comparing Experiments

Compare multiple experiments side-by-side:

1. **Select Experiments**: Check boxes for 2+ experiments

2. **Click Compare**: Opens comparison view

3. **View Differences**:

   * Hyperparameters table

   * Metrics charts (overlaid)

   * Resource usage comparison

   * Final results summary
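For exported configurations, the idea behind the hyperparameters table can be approximated offline. The following is a rough sketch, not the platform's comparison logic: `diff_hyperparameters` is a hypothetical helper, and the dictionaries stand in for settings copied from two experiments.

```python
from typing import Any, Dict, Tuple


def diff_hyperparameters(
    left: Dict[str, Any], right: Dict[str, Any]
) -> Dict[str, Tuple[Any, Any]]:
    """Return only the settings that differ, as {name: (left_value, right_value)}.

    A setting present on one side only is reported with None for the other side.
    """
    differences = {}
    for key in sorted(set(left) | set(right)):
        left_value, right_value = left.get(key), right.get(key)
        if left_value != right_value:
            differences[key] = (left_value, right_value)
    return differences


baseline = {"epochs": 90, "learning_rate": 0.1, "optimizer": "SGD"}
variant = {"epochs": 90, "learning_rate": 0.01, "optimizer": "Adam", "batch_size": 64}

for name, (old, new) in diff_hyperparameters(baseline, variant).items():
    print(f"{name}: {old} -> {new}")
```

Settings that are identical in both experiments (here, `epochs`) are omitted so that only the differences stand out.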

## Best Practices

**Naming Conventions**

```

{model}-{dataset}-{variant}-{version}

Examples:

- resnet50-imagenet-baseline-v1

- bert-squad-finetuned-v2

```
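A small helper can build and check names against this pattern. This is a minimal sketch with assumptions of its own: each component is taken to be lowercase alphanumeric with no internal hyphens, and the version is taken to be `v` followed by an integer, as in the two examples. The functions `build_name` and `parse_name` are hypothetical.

```python
import re
from typing import Dict, Optional

# Assumed shape: {model}-{dataset}-{variant}-v{number}, lowercase alphanumerics only.
NAME_PATTERN = re.compile(
    r"(?P<model>[a-z0-9]+)-(?P<dataset>[a-z0-9]+)-(?P<variant>[a-z0-9]+)-v(?P<version>\d+)"
)


def build_name(model: str, dataset: str, variant: str, version: int) -> str:
    """Assemble an experiment name and verify it matches the convention."""
    name = f"{model}-{dataset}-{variant}-v{version}".lower()
    if NAME_PATTERN.fullmatch(name) is None:
        raise ValueError(f"name does not follow the convention: {name!r}")
    return name


def parse_name(name: str) -> Optional[Dict[str, str]]:
    """Split a conforming name into its parts, or return None if it does not conform."""
    match = NAME_PATTERN.fullmatch(name)
    return match.groupdict() if match else None


print(build_name("ResNet50", "ImageNet", "baseline", 1))
print(parse_name("bert-squad-finetuned-v2"))
```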

**Tagging Strategy**

* **Domain**: `computer-vision`, `nlp`, `audio`

* **Task**: `classification`, `detection`, `segmentation`

* **Stage**: `exploration`, `tuning`, `production`
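Because tags are entered as a comma-separated string, a short script can normalize them and flag which of the three categories above is not yet covered. This is an illustrative sketch: `parse_tags`, `missing_groups`, and the lowercasing and de-duplication steps are assumptions, and the known tags are just the examples listed above.

```python
from typing import Dict, List, Set

# Example tags from the strategy above, grouped by category.
TAG_GROUPS: Dict[str, Set[str]] = {
    "domain": {"computer-vision", "nlp", "audio"},
    "task": {"classification", "detection", "segmentation"},
    "stage": {"exploration", "tuning", "production"},
}


def parse_tags(raw: str) -> List[str]:
    """Split a comma-separated tag string, trimming, lowercasing, and de-duplicating."""
    tags: List[str] = []
    for part in raw.split(","):
        tag = part.strip().lower()
        if tag and tag not in tags:
            tags.append(tag)
    return tags


def missing_groups(tags: List[str]) -> List[str]:
    """Return the categories for which none of the example tags is present."""
    present = set(tags)
    return [group for group, known in TAG_GROUPS.items() if not known & present]


tags = parse_tags("computer-vision, production, baseline")
print(tags)
print(missing_groups(tags))
```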

**Resource Optimization**

* Start with minimal resources, scale up as needed

* Use GPU only when necessary

* Monitor resource utilization

## Next Steps

* Register your trained model in [Models](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/models)

* Deploy to production via [Deployments](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/deployments)

* Monitor performance in [Analytics](https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/deep-learning-platform/analytics)

---

# Source: https://docs.kaisar.io/kaisar-network/origins/challenges/exponential-change-in-spending-and-compute-requirements.md

# Exponential Change in Spending and Compute Requirements

According to [GlobalTechCouncil](https://www.globaltechcouncil.org/artificial-intelligence/global-spending-on-ai-expected-to-double-in-four-years/), global spending on AI-centric systems is forecast to reach $154 billion in 2023 and is expected to continue growing rapidly, driven by increasing investments in AI by various industries such as banking, retail, and professional services.

Key areas of AI hardware spending include GPUs, TPUs, and custom AI chips. The broader IT spending landscape also highlights significant growth in data center systems, with spending projected to increase from $237 billion in 2023 to $260 billion in 2024. This growth is part of a broader trend of increasing investment in software, IT services, and communications services driven by the adoption of AI and other emerging technologies (Source: [Splunk](https://www.splunk.com/en_us/blog/learn/it-tech-spending.html)).

To gain insights into projected hardware use by AI systems, see the [Stanford AI Index Report 2024](https://aiindex.stanford.edu/report/), which highlights the economic impact of AI and shows a significant increase in the training compute used for notable machine learning models.

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-ai-ops/troubleshooting/troubleshooting/faq.md

# Source: https://docs.kaisar.io/kaisar-network/origins/faq.md

# FAQ

- [What is Kaisar?](/kaisar-network/origins/faq/what-is-kaisar.md)

- [Does Kaisar use blockchain technology?](/kaisar-network/origins/faq/does-kaisar-use-blockchain-technology.md)

- [What are the main products offered by Kaisar?](/kaisar-network/origins/faq/what-are-the-main-products-offered-by-kaisar.md)

- [Can I contribute my GPU resources to Kaisar?](/kaisar-network/origins/faq/can-i-contribute-my-gpu-resources-to-kaisar.md)

- [What is Proof of Physical Work (PoPW)?](/kaisar-network/origins/faq/what-is-proof-of-physical-work-popw.md)

- [How does Kaisar GPU Container work?](/kaisar-network/origins/faq/how-does-kaisar-gpu-container-work.md)

- [What frameworks are supported by Kaisar GPU Container?](/kaisar-network/origins/faq/what-frameworks-are-supported-by-kaisar-gpu-container.md)

- [How do I deploy a GPU Container?](/kaisar-network/origins/faq/how-do-i-deploy-a-gpu-container.md)

- [What payment methods are accepted?](/kaisar-network/origins/faq/what-payment-methods-are-accepted.md)

- [How is pricing determined?](/kaisar-network/origins/faq/how-is-pricing-determined.md)

- [What GPUs are supported?](/kaisar-network/origins/faq/what-gpus-are-supported.md)

- [How do I monitor my GPU container?](/kaisar-network/origins/faq/how-do-i-monitor-my-gpu-container.md)

- [How does Kaisar ensure security of containers?](/kaisar-network/origins/faq/how-does-kaisar-ensure-security-of-containers.md)

- [How do I get started with Kaisar?](/kaisar-network/origins/faq/how-do-i-get-started-with-kaisar.md)

---

# Source: https://docs.kaisar.io/kaisar-network/kaisar-architecture/products/kaisar-onenode/kaisar-checker/how-to-purchase-checker-nodes/faqs.md

# Source: https://docs.kaisar.io/kaisar-network/kaisar-architecture/products/kaisar-onenode/kaisar-checker/what-is-the-kaisar-checker-node/faqs.md

# FAQs

* **What are Checker Nodes?**

Checker Nodes validate transactions, monitor uptime, and ensure system reliability within the Kaisar DePIN protocol.

* **How does the two-phase sale work?**

Participants reserve funds during the Fund Commitment Phase, which determines their priority for the Node Sale Phase. Additional benefits include $KAI rewards, tier-based pricing discounts, and referral bonuses.

* **What is the relationship between a KaiNode NFT owner and a Checker Node Operator?**

A Checker Node Operator can operate multiple Kaisar NFTs and claim rewards from a single machine through the Checker Node Client.

To become a KaiNode NFT owner, you can purchase your NFT from [nodes.kaisar.io](https://nodes.kaisar.io) or through the secondary market in the future. Contracts will be released soon, so stay tuned for further updates.

* **Who can run a checker node?**

A Checker Node Operator owns Kaisar Node NFTs and can run the checker node client on their own machine, through a Virtual Machine, through Node-as-a-Service, or by delegating to another user's machine.

* **What rewards can I expect as a Checker Node operator?**

Rewards are distributed based on your node’s performance and uptime contributions.

* **Can I purchase multiple Checker Nodes?**

Yes, subject to purchase limits to ensure decentralization.

* **How does the referral program work?**

Referrers earn 10% cashback for purchases made through their referral link, while buyers receive a 10% discount.

* **What happens to unallocated $KAI rewards?**