The Visual Agent Builder enables:

* Intuitive agent configuration with form-based interfaces

* Real-time testing and validation

* Template library with pre-configured agent types

* Easy customization of agent attributes and behaviors

The Visual Agent Builder enables:

* Intuitive agent configuration with form-based interfaces

* Real-time testing and validation

* Template library with pre-configured agent types

* Easy customization of agent attributes and behaviors

### Integration Playbooks

Deep-dive guides walk through setup and sample workflows for each integration:

### Integration Playbooks

Deep-dive guides walk through setup and sample workflows for each integration:

This view shows all the trigger integrations available for your deployment, along with their current connection status.

### Enabling and Disabling Triggers

Each trigger can be easily enabled or disabled using the toggle switch:

This view shows all the trigger integrations available for your deployment, along with their current connection status.

### Enabling and Disabling Triggers

Each trigger can be easily enabled or disabled using the toggle switch:

* **Enabled (blue toggle)**: The trigger is active and will automatically execute your deployment when the specified events occur

* **Disabled (gray toggle)**: The trigger is inactive and will not respond to events

Simply click the toggle to change the trigger state. Changes take effect immediately.

### Monitoring Trigger Executions

Track the performance and history of your triggered executions:

* **Enabled (blue toggle)**: The trigger is active and will automatically execute your deployment when the specified events occur

* **Disabled (gray toggle)**: The trigger is inactive and will not respond to events

Simply click the toggle to change the trigger state. Changes take effect immediately.

### Monitoring Trigger Executions

Track the performance and history of your triggered executions:

## Building Trigger-Driven Automations

Before building your automation, it's helpful to understand the structure of trigger payloads that your crews and flows will receive.

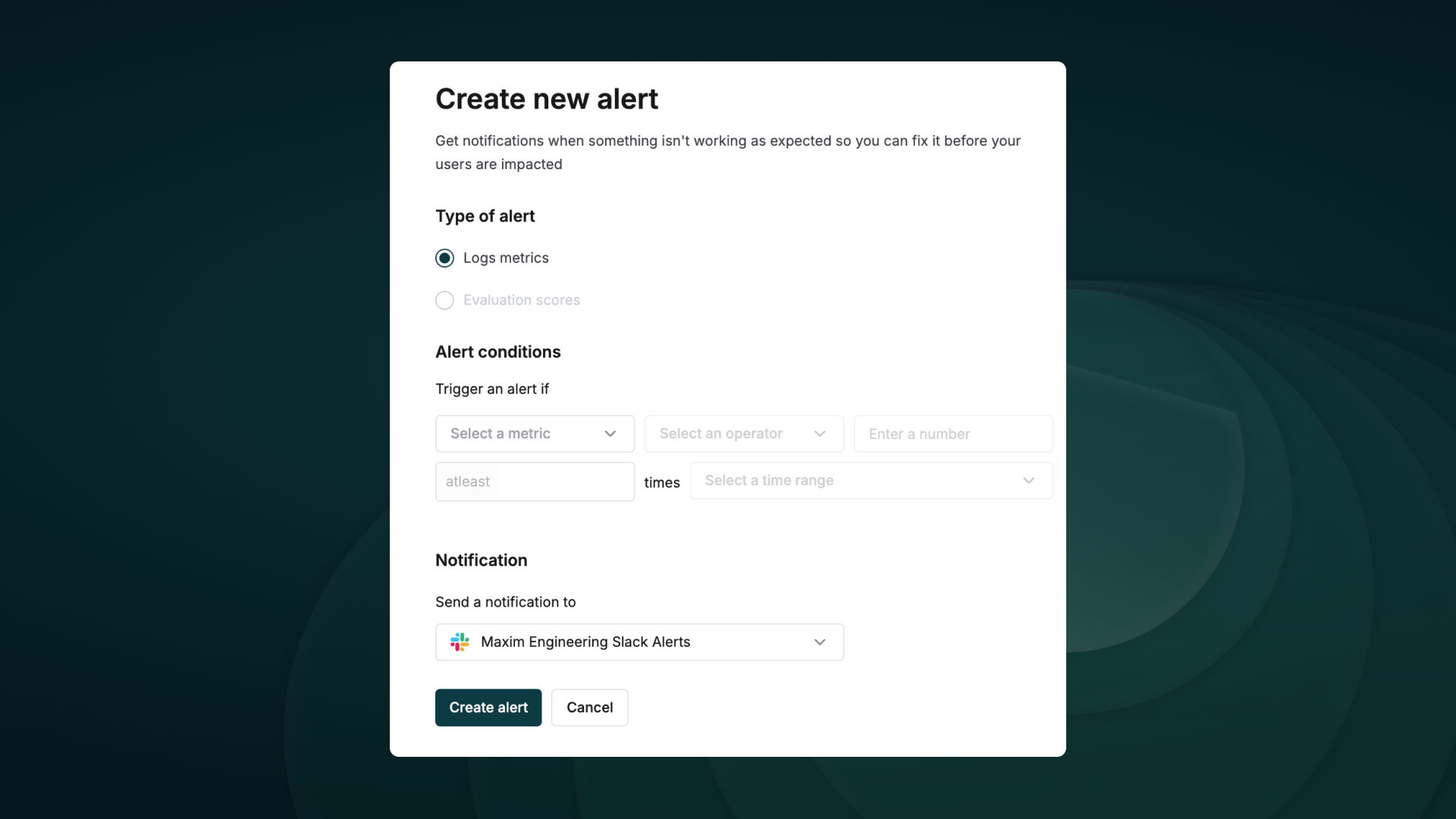

### Trigger Setup Checklist

Before wiring a trigger into production, make sure you:

* Connect the integration under **Tools & Integrations** and complete any OAuth or API key steps

* Enable the trigger toggle on the deployment that should respond to events

* Provide any required environment variables (API tokens, tenant IDs, shared secrets)

* Create or update tasks that can parse the incoming payload within the first crew task or flow step

* Decide whether to pass trigger context automatically using `allow_crewai_trigger_context`

* Set up monitoring—webhook logs, CrewAI execution history, and optional external alerting

### Testing Triggers Locally with CLI

The CrewAI CLI provides powerful commands to help you develop and test trigger-driven automations without deploying to production.

#### List Available Triggers

View all available triggers for your connected integrations:

```bash theme={null}

crewai triggers list

```

This command displays all triggers available based on your connected integrations, showing:

* Integration name and connection status

* Available trigger types

* Trigger names and descriptions

#### Simulate Trigger Execution

Test your crew with realistic trigger payloads before deployment:

```bash theme={null}

crewai triggers run

## Building Trigger-Driven Automations

Before building your automation, it's helpful to understand the structure of trigger payloads that your crews and flows will receive.

### Trigger Setup Checklist

Before wiring a trigger into production, make sure you:

* Connect the integration under **Tools & Integrations** and complete any OAuth or API key steps

* Enable the trigger toggle on the deployment that should respond to events

* Provide any required environment variables (API tokens, tenant IDs, shared secrets)

* Create or update tasks that can parse the incoming payload within the first crew task or flow step

* Decide whether to pass trigger context automatically using `allow_crewai_trigger_context`

* Set up monitoring—webhook logs, CrewAI execution history, and optional external alerting

### Testing Triggers Locally with CLI

The CrewAI CLI provides powerful commands to help you develop and test trigger-driven automations without deploying to production.

#### List Available Triggers

View all available triggers for your connected integrations:

```bash theme={null}

crewai triggers list

```

This command displays all triggers available based on your connected integrations, showing:

* Integration name and connection status

* Available trigger types

* Trigger names and descriptions

#### Simulate Trigger Execution

Test your crew with realistic trigger payloads before deployment:

```bash theme={null}

crewai triggers run  ## Deployment Methods

### Deploy from GitHub

Use this for version‑controlled projects and continuous deployment.

## Deployment Methods

### Deploy from GitHub

Use this for version‑controlled projects and continuous deployment.

### Deploy from ZIP

Ship quickly without Git—upload a compressed package of your project.

### Deploy from ZIP

Ship quickly without Git—upload a compressed package of your project.

## Automations Dashboard

The table lists all live automations with key details:

* **CREW**: Automation name

* **STATUS**: Online / Failed / In Progress

* **URL**: Endpoint for kickoff/status

* **TOKEN**: Automation token

* **ACTIONS**: Re‑deploy, delete, and more

Use the top‑right controls to filter and search:

* Search by name

* Filter by Status

* Filter by Source (GitHub / Studio / ZIP)

Once deployed, you can view the automation details and have the **Options** dropdown menu to `chat with this crew`, `Export React Component` and `Export as MCP`.

## Automations Dashboard

The table lists all live automations with key details:

* **CREW**: Automation name

* **STATUS**: Online / Failed / In Progress

* **URL**: Endpoint for kickoff/status

* **TOKEN**: Automation token

* **ACTIONS**: Re‑deploy, delete, and more

Use the top‑right controls to filter and search:

* Search by name

* Filter by Status

* Filter by Source (GitHub / Studio / ZIP)

Once deployed, you can view the automation details and have the **Options** dropdown menu to `chat with this crew`, `Export React Component` and `Export as MCP`.

## Best Practices

* Prefer GitHub deployments for version control and CI/CD

* Use re‑deploy to roll forward after code or config updates or set it to auto-deploy on every push

## Related

## Best Practices

* Prefer GitHub deployments for version control and CI/CD

* Use re‑deploy to roll forward after code or config updates or set it to auto-deploy on every push

## Related

---

# Source: https://docs.crewai.com/en/changelog.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# Changelog

> Product updates, improvements, and bug fixes for CrewAI

---

# Source: https://docs.crewai.com/en/changelog.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# Changelog

> Product updates, improvements, and bug fixes for CrewAI

## Prompt‑based Creation

* Describe the automation you want; the AI generates agents, tasks, and tools.

* Use voice input via the microphone icon if preferred.

* Start from built‑in prompts for common use cases.

## Prompt‑based Creation

* Describe the automation you want; the AI generates agents, tasks, and tools.

* Use voice input via the microphone icon if preferred.

* Start from built‑in prompts for common use cases.

## Visual Editor

The canvas reflects the workflow as nodes and edges with three supporting panels that allow you to configure the workflow easily without writing code; a.k.a. "**vibe coding AI Agents**".

You can use the drag-and-drop functionality to add agents, tasks, and tools to the canvas or you can use the chat section to build the agents. Both approaches share state and can be used interchangeably.

* **AI Thoughts (left)**: streaming reasoning as the workflow is designed

* **Canvas (center)**: agents and tasks as connected nodes

* **Resources (right)**: drag‑and‑drop components (agents, tasks, tools)

## Visual Editor

The canvas reflects the workflow as nodes and edges with three supporting panels that allow you to configure the workflow easily without writing code; a.k.a. "**vibe coding AI Agents**".

You can use the drag-and-drop functionality to add agents, tasks, and tools to the canvas or you can use the chat section to build the agents. Both approaches share state and can be used interchangeably.

* **AI Thoughts (left)**: streaming reasoning as the workflow is designed

* **Canvas (center)**: agents and tasks as connected nodes

* **Resources (right)**: drag‑and‑drop components (agents, tasks, tools)

## Execution & Debugging

Switch to the Execution view to run and observe the workflow:

* Event timeline

* Detailed logs (Details, Messages, Raw Data)

* Local test runs before publishing

## Execution & Debugging

Switch to the Execution view to run and observe the workflow:

* Event timeline

* Detailed logs (Details, Messages, Raw Data)

* Local test runs before publishing

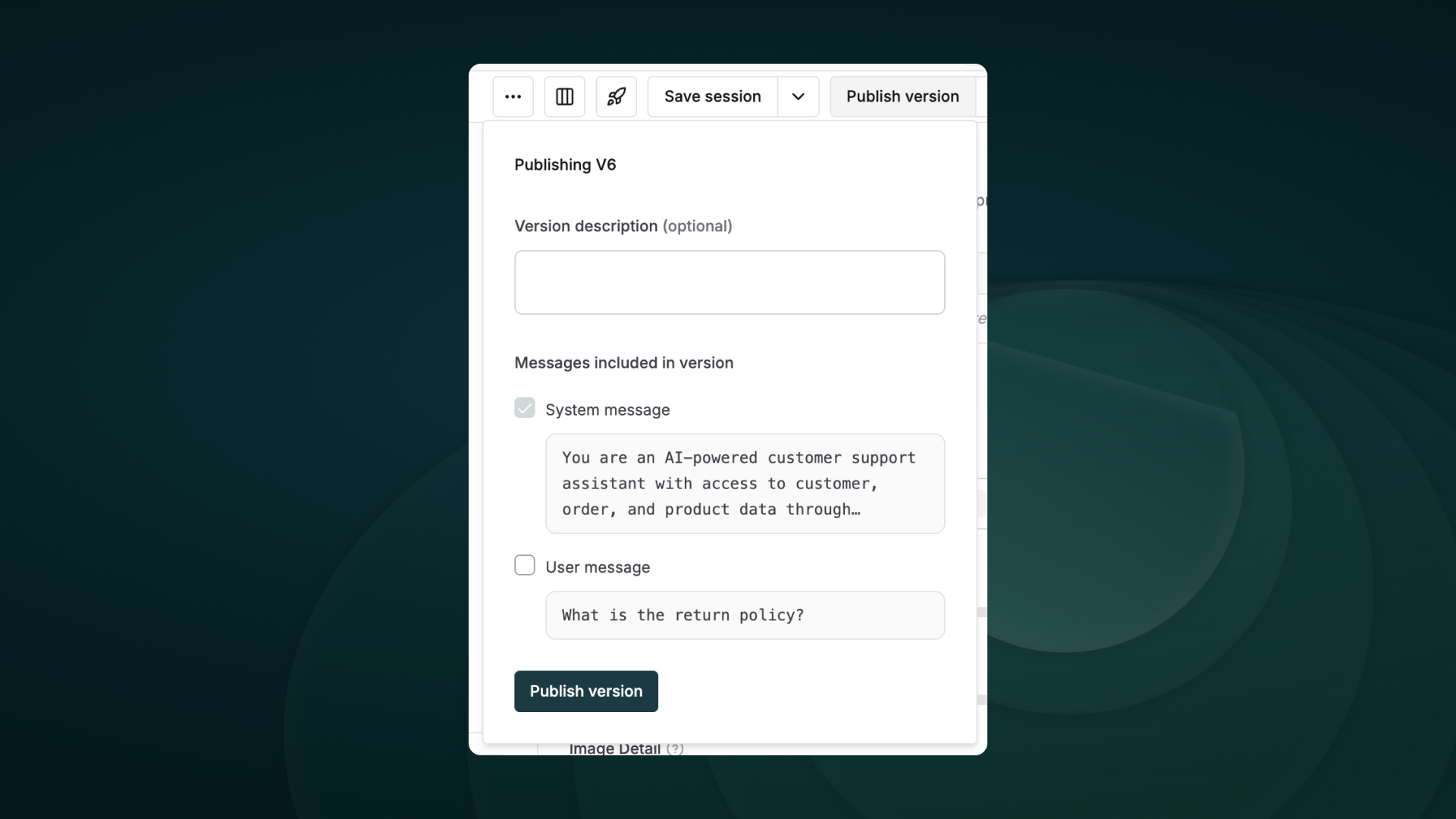

## Publish & Export

* Publish to deploy a live automation

* Download source as a ZIP for local development or customization

## Publish & Export

* Publish to deploy a live automation

* Download source as a ZIP for local development or customization

Once published, you can view the automation details and have the **Options** dropdown menu to `chat with this crew`, `Export React Component` and `Export as MCP`.

Once published, you can view the automation details and have the **Options** dropdown menu to `chat with this crew`, `Export React Component` and `Export as MCP`.

## Best Practices

* Iterate quickly in Studio; publish only when stable

* Keep tools constrained to minimum permissions needed

* Use Traces to validate behavior and performance

## Related

## Best Practices

* Iterate quickly in Studio; publish only when stable

* Keep tools constrained to minimum permissions needed

* Use Traces to validate behavior and performance

## Related

## Best Practices

1. **Be specific in your image generation prompts** to get the best results.

2. **Consider generation time** - Image generation can take some time, so factor this into your task planning.

3. **Follow usage policies** - Always comply with OpenAI's usage policies when generating images.

## Troubleshooting

1. **Check API access** - Ensure your OpenAI API key has access to DALL-E.

2. **Version compatibility** - Check that you're using the latest version of crewAI and crewai-tools.

3. **Tool configuration** - Verify that the DALL-E tool is correctly added to the agent's tool list.

---

# Source: https://docs.crewai.com/en/tools/ai-ml/dalletool.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# DALL-E Tool

> The `DallETool` is a powerful tool designed for generating images from textual descriptions.

# `DallETool`

## Description

This tool is used to give the Agent the ability to generate images using the DALL-E model. It is a transformer-based model that generates images from textual descriptions.

This tool allows the Agent to generate images based on the text input provided by the user.

## Installation

Install the crewai\_tools package

```shell theme={null}

pip install 'crewai[tools]'

```

## Example

Remember that when using this tool, the text must be generated by the Agent itself. The text must be a description of the image you want to generate.

```python Code theme={null}

from crewai_tools import DallETool

Agent(

...

tools=[DallETool()],

)

```

If needed you can also tweak the parameters of the DALL-E model by passing them as arguments to the `DallETool` class. For example:

```python Code theme={null}

from crewai_tools import DallETool

dalle_tool = DallETool(model="dall-e-3",

size="1024x1024",

quality="standard",

n=1)

Agent(

...

tools=[dalle_tool]

)

```

The parameters are based on the `client.images.generate` method from the OpenAI API. For more information on the parameters,

please refer to the [OpenAI API documentation](https://platform.openai.com/docs/guides/images/introduction?lang=python).

---

# Source: https://docs.crewai.com/en/tools/search-research/databricks-query-tool.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# Databricks SQL Query Tool

> The `DatabricksQueryTool` executes SQL queries against Databricks workspace tables.

# `DatabricksQueryTool`

## Description

Run SQL against Databricks workspace tables with either CLI profile or direct host/token authentication.

## Installation

```shell theme={null}

uv add crewai-tools[databricks-sdk]

```

## Environment Variables

* `DATABRICKS_CONFIG_PROFILE` or (`DATABRICKS_HOST` + `DATABRICKS_TOKEN`)

Create a personal access token and find host details in the Databricks workspace under User Settings → Developer.

Docs: [https://docs.databricks.com/en/dev-tools/auth/pat.html](https://docs.databricks.com/en/dev-tools/auth/pat.html)

## Example

```python Code theme={null}

from crewai import Agent, Task, Crew

from crewai_tools import DatabricksQueryTool

tool = DatabricksQueryTool(

default_catalog="main",

default_schema="default",

)

agent = Agent(

role="Data Analyst",

goal="Query Databricks",

tools=[tool],

verbose=True,

)

task = Task(

description="SELECT * FROM my_table LIMIT 10",

expected_output="10 rows",

agent=agent,

)

crew = Crew(

agents=[agent],

tasks=[task],

verbose=True,

)

result = crew.kickoff()

print(result)

```

## Parameters

* `query` (required): SQL query to execute

* `catalog` (optional): Override default catalog

* `db_schema` (optional): Override default schema

* `warehouse_id` (optional): Override default SQL warehouse

* `row_limit` (optional): Maximum rows to return (default: 1000)

## Defaults on initialization

* `default_catalog`

* `default_schema`

* `default_warehouse_id`

### Error handling & tips

* Authentication errors: verify `DATABRICKS_HOST` begins with `https://` and token is valid.

* Permissions: ensure your SQL warehouse and schema are accessible by your token.

* Limits: long‑running queries should be avoided in agent loops; add filters/limits.

---

# Source: https://docs.crewai.com/en/observability/datadog.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# Datadog Integration

> Learn how to integrate Datadog with CrewAI to submit LLM Observability traces to Datadog.

# Integrate Datadog with CrewAI

This guide will demonstrate how to integrate **[Datadog LLM Observability](https://docs.datadoghq.com/llm_observability/)** with **CrewAI** using [Datadog auto-instrumentation](https://docs.datadoghq.com/llm_observability/instrumentation/auto_instrumentation?tab=python). By the end of this guide, you will be able to submit LLM Observability traces to Datadog and view your CrewAI agent runs in Datadog LLM Observability's [Agentic Execution View](https://docs.datadoghq.com/llm_observability/monitoring/agent_monitoring).

## What is Datadog LLM Observability?

[Datadog LLM Observability](https://www.datadoghq.com/product/llm-observability/) helps AI engineers, data scientists, and application developers quickly develop, evaluate, and monitor LLM applications. Confidently improve output quality, performance, costs, and overall risk with structured experiments, end-to-end tracing across AI agents, and evaluations.

## Getting Started

### Install Dependencies

```shell theme={null}

pip install ddtrace crewai crewai-tools

```

### Set Environment Variables

If you do not have a Datadog API key, you can [create an account](https://www.datadoghq.com/) and [get your API key](https://docs.datadoghq.com/account_management/api-app-keys/#api-keys).

You will also need to specify an ML Application name in the following environment variables. An ML Application is a grouping of LLM Observability traces associated with a specific LLM-based application. See [ML Application Naming Guidelines](https://docs.datadoghq.com/llm_observability/instrumentation/sdk?tab=python#application-naming-guidelines) for more information on limitations with ML Application names.

```shell theme={null}

export DD_API_KEY=

## Best Practices

1. **Be specific in your image generation prompts** to get the best results.

2. **Consider generation time** - Image generation can take some time, so factor this into your task planning.

3. **Follow usage policies** - Always comply with OpenAI's usage policies when generating images.

## Troubleshooting

1. **Check API access** - Ensure your OpenAI API key has access to DALL-E.

2. **Version compatibility** - Check that you're using the latest version of crewAI and crewai-tools.

3. **Tool configuration** - Verify that the DALL-E tool is correctly added to the agent's tool list.

---

# Source: https://docs.crewai.com/en/tools/ai-ml/dalletool.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# DALL-E Tool

> The `DallETool` is a powerful tool designed for generating images from textual descriptions.

# `DallETool`

## Description

This tool is used to give the Agent the ability to generate images using the DALL-E model. It is a transformer-based model that generates images from textual descriptions.

This tool allows the Agent to generate images based on the text input provided by the user.

## Installation

Install the crewai\_tools package

```shell theme={null}

pip install 'crewai[tools]'

```

## Example

Remember that when using this tool, the text must be generated by the Agent itself. The text must be a description of the image you want to generate.

```python Code theme={null}

from crewai_tools import DallETool

Agent(

...

tools=[DallETool()],

)

```

If needed you can also tweak the parameters of the DALL-E model by passing them as arguments to the `DallETool` class. For example:

```python Code theme={null}

from crewai_tools import DallETool

dalle_tool = DallETool(model="dall-e-3",

size="1024x1024",

quality="standard",

n=1)

Agent(

...

tools=[dalle_tool]

)

```

The parameters are based on the `client.images.generate` method from the OpenAI API. For more information on the parameters,

please refer to the [OpenAI API documentation](https://platform.openai.com/docs/guides/images/introduction?lang=python).

---

# Source: https://docs.crewai.com/en/tools/search-research/databricks-query-tool.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# Databricks SQL Query Tool

> The `DatabricksQueryTool` executes SQL queries against Databricks workspace tables.

# `DatabricksQueryTool`

## Description

Run SQL against Databricks workspace tables with either CLI profile or direct host/token authentication.

## Installation

```shell theme={null}

uv add crewai-tools[databricks-sdk]

```

## Environment Variables

* `DATABRICKS_CONFIG_PROFILE` or (`DATABRICKS_HOST` + `DATABRICKS_TOKEN`)

Create a personal access token and find host details in the Databricks workspace under User Settings → Developer.

Docs: [https://docs.databricks.com/en/dev-tools/auth/pat.html](https://docs.databricks.com/en/dev-tools/auth/pat.html)

## Example

```python Code theme={null}

from crewai import Agent, Task, Crew

from crewai_tools import DatabricksQueryTool

tool = DatabricksQueryTool(

default_catalog="main",

default_schema="default",

)

agent = Agent(

role="Data Analyst",

goal="Query Databricks",

tools=[tool],

verbose=True,

)

task = Task(

description="SELECT * FROM my_table LIMIT 10",

expected_output="10 rows",

agent=agent,

)

crew = Crew(

agents=[agent],

tasks=[task],

verbose=True,

)

result = crew.kickoff()

print(result)

```

## Parameters

* `query` (required): SQL query to execute

* `catalog` (optional): Override default catalog

* `db_schema` (optional): Override default schema

* `warehouse_id` (optional): Override default SQL warehouse

* `row_limit` (optional): Maximum rows to return (default: 1000)

## Defaults on initialization

* `default_catalog`

* `default_schema`

* `default_warehouse_id`

### Error handling & tips

* Authentication errors: verify `DATABRICKS_HOST` begins with `https://` and token is valid.

* Permissions: ensure your SQL warehouse and schema are accessible by your token.

* Limits: long‑running queries should be avoided in agent loops; add filters/limits.

---

# Source: https://docs.crewai.com/en/observability/datadog.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.crewai.com/llms.txt

> Use this file to discover all available pages before exploring further.

# Datadog Integration

> Learn how to integrate Datadog with CrewAI to submit LLM Observability traces to Datadog.

# Integrate Datadog with CrewAI

This guide will demonstrate how to integrate **[Datadog LLM Observability](https://docs.datadoghq.com/llm_observability/)** with **CrewAI** using [Datadog auto-instrumentation](https://docs.datadoghq.com/llm_observability/instrumentation/auto_instrumentation?tab=python). By the end of this guide, you will be able to submit LLM Observability traces to Datadog and view your CrewAI agent runs in Datadog LLM Observability's [Agentic Execution View](https://docs.datadoghq.com/llm_observability/monitoring/agent_monitoring).

## What is Datadog LLM Observability?

[Datadog LLM Observability](https://www.datadoghq.com/product/llm-observability/) helps AI engineers, data scientists, and application developers quickly develop, evaluate, and monitor LLM applications. Confidently improve output quality, performance, costs, and overall risk with structured experiments, end-to-end tracing across AI agents, and evaluations.

## Getting Started

### Install Dependencies

```shell theme={null}

pip install ddtrace crewai crewai-tools

```

### Set Environment Variables

If you do not have a Datadog API key, you can [create an account](https://www.datadoghq.com/) and [get your API key](https://docs.datadoghq.com/account_management/api-app-keys/#api-keys).

You will also need to specify an ML Application name in the following environment variables. An ML Application is a grouping of LLM Observability traces associated with a specific LLM-based application. See [ML Application Naming Guidelines](https://docs.datadoghq.com/llm_observability/instrumentation/sdk?tab=python#application-naming-guidelines) for more information on limitations with ML Application names.

```shell theme={null}

export DD_API_KEY= Additionally, you can view the execution graph view of the trace, which shows the control and data flow of the trace, which will scale with larger agents to show handoffs and relationships between LLM calls, tool calls, and agent interactions.

Additionally, you can view the execution graph view of the trace, which shows the control and data flow of the trace, which will scale with larger agents to show handoffs and relationships between LLM calls, tool calls, and agent interactions.

## References

* [Datadog LLM Observability](https://www.datadoghq.com/product/llm-observability/)

* [Datadog LLM Observability CrewAI Auto-Instrumentation](https://docs.datadoghq.com/llm_observability/instrumentation/auto_instrumentation?tab=python#crew-ai)

---

# Source: https://docs.crewai.com/en/enterprise/guides/deploy-crew.md

# Deploy Crew

> Deploying a Crew on CrewAI AMP

## References

* [Datadog LLM Observability](https://www.datadoghq.com/product/llm-observability/)

* [Datadog LLM Observability CrewAI Auto-Instrumentation](https://docs.datadoghq.com/llm_observability/instrumentation/auto_instrumentation?tab=python#crew-ai)

---

# Source: https://docs.crewai.com/en/enterprise/guides/deploy-crew.md

# Deploy Crew

> Deploying a Crew on CrewAI AMP

Once deployment is complete, you'll see:

* Your crew's unique URL

* A Bearer token to protect your crew API

* A "Delete" button if you need to remove the deployment

Once deployment is complete, you'll see:

* Your crew's unique URL

* A Bearer token to protect your crew API

* A "Delete" button if you need to remove the deployment

Once deployment is complete, you'll see:

* Your crew's unique URL

* A Bearer token to protect your crew API

* A "Delete" button if you need to remove the deployment

Once deployment is complete, you'll see:

* Your crew's unique URL

* A Bearer token to protect your crew API

* A "Delete" button if you need to remove the deployment

With Crew Studio, you can:

* Chat with the Crew Assistant to describe your problem

* Automatically generate agents and tasks

* Select appropriate tools

* Configure necessary inputs

* Generate downloadable code for customization

* Deploy directly to the CrewAI AMP platform

## Configuration Steps

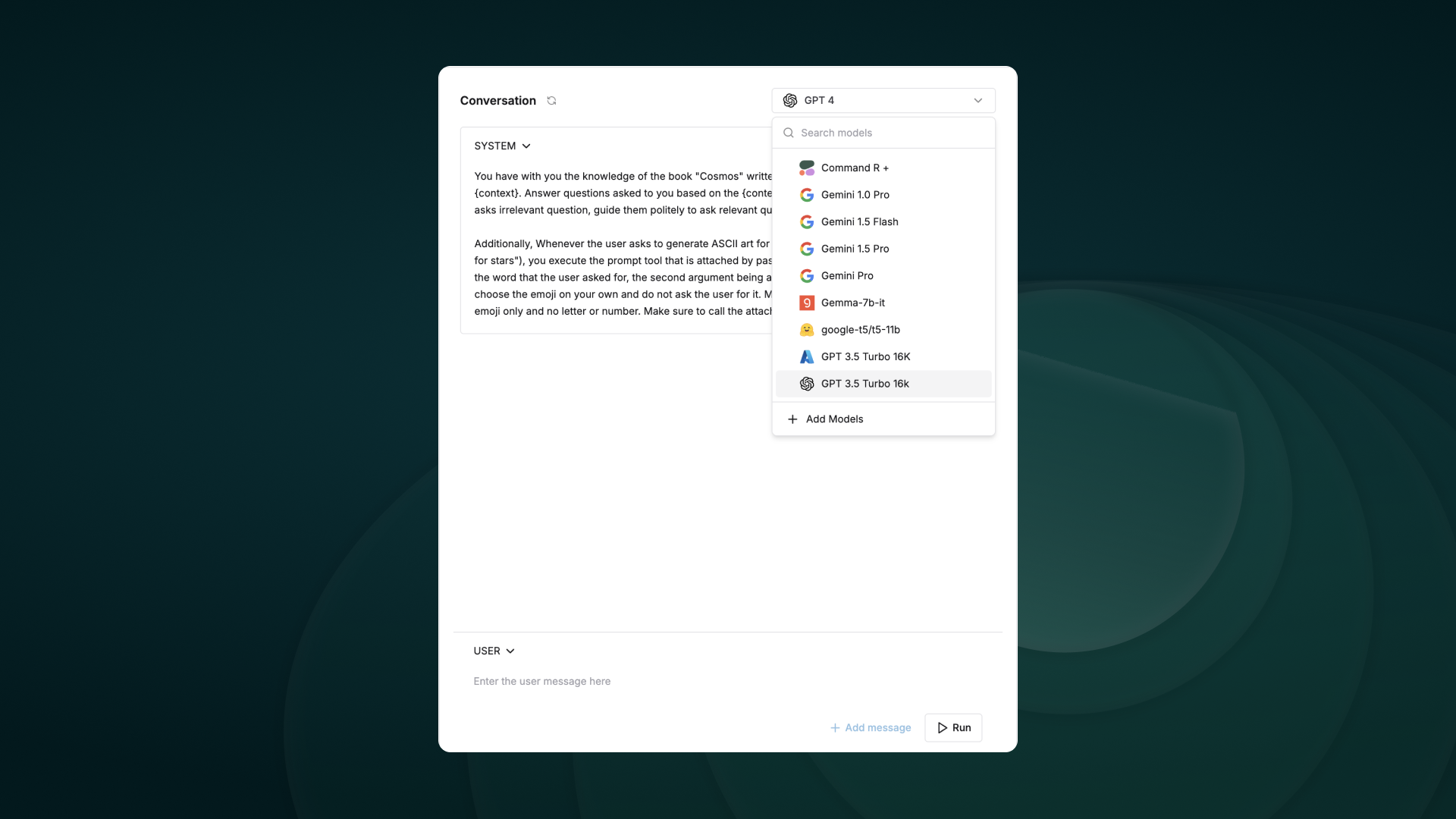

Before you can start using Crew Studio, you need to configure your LLM connections:

With Crew Studio, you can:

* Chat with the Crew Assistant to describe your problem

* Automatically generate agents and tasks

* Select appropriate tools

* Configure necessary inputs

* Generate downloadable code for customization

* Deploy directly to the CrewAI AMP platform

## Configuration Steps

Before you can start using Crew Studio, you need to configure your LLM connections:

This matrix helps visualize how different approaches align with varying requirements for complexity and precision. Let's explore what each quadrant means and how it guides your architectural choices.

## The Complexity-Precision Matrix Explained

### What is Complexity?

In the context of CrewAI applications, **complexity** refers to:

* The number of distinct steps or operations required

* The diversity of tasks that need to be performed

* The interdependencies between different components

* The need for conditional logic and branching

* The sophistication of the overall workflow

### What is Precision?

**Precision** in this context refers to:

* The accuracy required in the final output

* The need for structured, predictable results

* The importance of reproducibility

* The level of control needed over each step

* The tolerance for variation in outputs

### The Four Quadrants

#### 1. Low Complexity, Low Precision

**Characteristics:**

* Simple, straightforward tasks

* Tolerance for some variation in outputs

* Limited number of steps

* Creative or exploratory applications

**Recommended Approach:** Simple Crews with minimal agents

**Example Use Cases:**

* Basic content generation

* Idea brainstorming

* Simple summarization tasks

* Creative writing assistance

#### 2. Low Complexity, High Precision

**Characteristics:**

* Simple workflows that require exact, structured outputs

* Need for reproducible results

* Limited steps but high accuracy requirements

* Often involves data processing or transformation

**Recommended Approach:** Flows with direct LLM calls or simple Crews with structured outputs

**Example Use Cases:**

* Data extraction and transformation

* Form filling and validation

* Structured content generation (JSON, XML)

* Simple classification tasks

#### 3. High Complexity, Low Precision

**Characteristics:**

* Multi-stage processes with many steps

* Creative or exploratory outputs

* Complex interactions between components

* Tolerance for variation in final results

**Recommended Approach:** Complex Crews with multiple specialized agents

**Example Use Cases:**

* Research and analysis

* Content creation pipelines

* Exploratory data analysis

* Creative problem-solving

#### 4. High Complexity, High Precision

**Characteristics:**

* Complex workflows requiring structured outputs

* Multiple interdependent steps with strict accuracy requirements

* Need for both sophisticated processing and precise results

* Often mission-critical applications

**Recommended Approach:** Flows orchestrating multiple Crews with validation steps

**Example Use Cases:**

* Enterprise decision support systems

* Complex data processing pipelines

* Multi-stage document processing

* Regulated industry applications

## Choosing Between Crews and Flows

### When to Choose Crews

Crews are ideal when:

1. **You need collaborative intelligence** - Multiple agents with different specializations need to work together

2. **The problem requires emergent thinking** - The solution benefits from different perspectives and approaches

3. **The task is primarily creative or analytical** - The work involves research, content creation, or analysis

4. **You value adaptability over strict structure** - The workflow can benefit from agent autonomy

5. **The output format can be somewhat flexible** - Some variation in output structure is acceptable

```python theme={null}

# Example: Research Crew for market analysis

from crewai import Agent, Crew, Process, Task

# Create specialized agents

researcher = Agent(

role="Market Research Specialist",

goal="Find comprehensive market data on emerging technologies",

backstory="You are an expert at discovering market trends and gathering data."

)

analyst = Agent(

role="Market Analyst",

goal="Analyze market data and identify key opportunities",

backstory="You excel at interpreting market data and spotting valuable insights."

)

# Define their tasks

research_task = Task(

description="Research the current market landscape for AI-powered healthcare solutions",

expected_output="Comprehensive market data including key players, market size, and growth trends",

agent=researcher

)

analysis_task = Task(

description="Analyze the market data and identify the top 3 investment opportunities",

expected_output="Analysis report with 3 recommended investment opportunities and rationale",

agent=analyst,

context=[research_task]

)

# Create the crew

market_analysis_crew = Crew(

agents=[researcher, analyst],

tasks=[research_task, analysis_task],

process=Process.sequential,

verbose=True

)

# Run the crew

result = market_analysis_crew.kickoff()

```

### When to Choose Flows

Flows are ideal when:

1. **You need precise control over execution** - The workflow requires exact sequencing and state management

2. **The application has complex state requirements** - You need to maintain and transform state across multiple steps

3. **You need structured, predictable outputs** - The application requires consistent, formatted results

4. **The workflow involves conditional logic** - Different paths need to be taken based on intermediate results

5. **You need to combine AI with procedural code** - The solution requires both AI capabilities and traditional programming

```python theme={null}

# Example: Customer Support Flow with structured processing

from crewai.flow.flow import Flow, listen, router, start

from pydantic import BaseModel

from typing import List, Dict

# Define structured state

class SupportTicketState(BaseModel):

ticket_id: str = ""

customer_name: str = ""

issue_description: str = ""

category: str = ""

priority: str = "medium"

resolution: str = ""

satisfaction_score: int = 0

class CustomerSupportFlow(Flow[SupportTicketState]):

@start()

def receive_ticket(self):

# In a real app, this might come from an API

self.state.ticket_id = "TKT-12345"

self.state.customer_name = "Alex Johnson"

self.state.issue_description = "Unable to access premium features after payment"

return "Ticket received"

@listen(receive_ticket)

def categorize_ticket(self, _):

# Use a direct LLM call for categorization

from crewai import LLM

llm = LLM(model="openai/gpt-4o-mini")

prompt = f"""

Categorize the following customer support issue into one of these categories:

- Billing

- Account Access

- Technical Issue

- Feature Request

- Other

Issue: {self.state.issue_description}

Return only the category name.

"""

self.state.category = llm.call(prompt).strip()

return self.state.category

@router(categorize_ticket)

def route_by_category(self, category):

# Route to different handlers based on category

return category.lower().replace(" ", "_")

@listen("billing")

def handle_billing_issue(self):

# Handle billing-specific logic

self.state.priority = "high"

# More billing-specific processing...

return "Billing issue handled"

@listen("account_access")

def handle_access_issue(self):

# Handle access-specific logic

self.state.priority = "high"

# More access-specific processing...

return "Access issue handled"

# Additional category handlers...

@listen("billing", "account_access", "technical_issue", "feature_request", "other")

def resolve_ticket(self, resolution_info):

# Final resolution step

self.state.resolution = f"Issue resolved: {resolution_info}"

return self.state.resolution

# Run the flow

support_flow = CustomerSupportFlow()

result = support_flow.kickoff()

```

### When to Combine Crews and Flows

The most sophisticated applications often benefit from combining Crews and Flows:

1. **Complex multi-stage processes** - Use Flows to orchestrate the overall process and Crews for complex subtasks

2. **Applications requiring both creativity and structure** - Use Crews for creative tasks and Flows for structured processing

3. **Enterprise-grade AI applications** - Use Flows to manage state and process flow while leveraging Crews for specialized work

```python theme={null}

# Example: Content Production Pipeline combining Crews and Flows

from crewai.flow.flow import Flow, listen, start

from crewai import Agent, Crew, Process, Task

from pydantic import BaseModel

from typing import List, Dict

class ContentState(BaseModel):

topic: str = ""

target_audience: str = ""

content_type: str = ""

outline: Dict = {}

draft_content: str = ""

final_content: str = ""

seo_score: int = 0

class ContentProductionFlow(Flow[ContentState]):

@start()

def initialize_project(self):

# Set initial parameters

self.state.topic = "Sustainable Investing"

self.state.target_audience = "Millennial Investors"

self.state.content_type = "Blog Post"

return "Project initialized"

@listen(initialize_project)

def create_outline(self, _):

# Use a research crew to create an outline

researcher = Agent(

role="Content Researcher",

goal=f"Research {self.state.topic} for {self.state.target_audience}",

backstory="You are an expert researcher with deep knowledge of content creation."

)

outliner = Agent(

role="Content Strategist",

goal=f"Create an engaging outline for a {self.state.content_type}",

backstory="You excel at structuring content for maximum engagement."

)

research_task = Task(

description=f"Research {self.state.topic} focusing on what would interest {self.state.target_audience}",

expected_output="Comprehensive research notes with key points and statistics",

agent=researcher

)

outline_task = Task(

description=f"Create an outline for a {self.state.content_type} about {self.state.topic}",

expected_output="Detailed content outline with sections and key points",

agent=outliner,

context=[research_task]

)

outline_crew = Crew(

agents=[researcher, outliner],

tasks=[research_task, outline_task],

process=Process.sequential,

verbose=True

)

# Run the crew and store the result

result = outline_crew.kickoff()

# Parse the outline (in a real app, you might use a more robust parsing approach)

import json

try:

self.state.outline = json.loads(result.raw)

except:

# Fallback if not valid JSON

self.state.outline = {"sections": result.raw}

return "Outline created"

@listen(create_outline)

def write_content(self, _):

# Use a writing crew to create the content

writer = Agent(

role="Content Writer",

goal=f"Write engaging content for {self.state.target_audience}",

backstory="You are a skilled writer who creates compelling content."

)

editor = Agent(

role="Content Editor",

goal="Ensure content is polished, accurate, and engaging",

backstory="You have a keen eye for detail and a talent for improving content."

)

writing_task = Task(

description=f"Write a {self.state.content_type} about {self.state.topic} following this outline: {self.state.outline}",

expected_output="Complete draft content in markdown format",

agent=writer

)

editing_task = Task(

description="Edit and improve the draft content for clarity, engagement, and accuracy",

expected_output="Polished final content in markdown format",

agent=editor,

context=[writing_task]

)

writing_crew = Crew(

agents=[writer, editor],

tasks=[writing_task, editing_task],

process=Process.sequential,

verbose=True

)

# Run the crew and store the result

result = writing_crew.kickoff()

self.state.final_content = result.raw

return "Content created"

@listen(write_content)

def optimize_for_seo(self, _):

# Use a direct LLM call for SEO optimization

from crewai import LLM

llm = LLM(model="openai/gpt-4o-mini")

prompt = f"""

Analyze this content for SEO effectiveness for the keyword "{self.state.topic}".

Rate it on a scale of 1-100 and provide 3 specific recommendations for improvement.

Content: {self.state.final_content[:1000]}... (truncated for brevity)

Format your response as JSON with the following structure:

{{

"score": 85,

"recommendations": [

"Recommendation 1",

"Recommendation 2",

"Recommendation 3"

]

}}

"""

seo_analysis = llm.call(prompt)

# Parse the SEO analysis

import json

try:

analysis = json.loads(seo_analysis)

self.state.seo_score = analysis.get("score", 0)

return analysis

except:

self.state.seo_score = 50

return {"score": 50, "recommendations": ["Unable to parse SEO analysis"]}

# Run the flow

content_flow = ContentProductionFlow()

result = content_flow.kickoff()

```

## Practical Evaluation Framework

To determine the right approach for your specific use case, follow this step-by-step evaluation framework:

### Step 1: Assess Complexity

Rate your application's complexity on a scale of 1-10 by considering:

1. **Number of steps**: How many distinct operations are required?

* 1-3 steps: Low complexity (1-3)

* 4-7 steps: Medium complexity (4-7)

* 8+ steps: High complexity (8-10)

2. **Interdependencies**: How interconnected are the different parts?

* Few dependencies: Low complexity (1-3)

* Some dependencies: Medium complexity (4-7)

* Many complex dependencies: High complexity (8-10)

3. **Conditional logic**: How much branching and decision-making is needed?

* Linear process: Low complexity (1-3)

* Some branching: Medium complexity (4-7)

* Complex decision trees: High complexity (8-10)

4. **Domain knowledge**: How specialized is the knowledge required?

* General knowledge: Low complexity (1-3)

* Some specialized knowledge: Medium complexity (4-7)

* Deep expertise in multiple domains: High complexity (8-10)

Calculate your average score to determine overall complexity.

### Step 2: Assess Precision Requirements

Rate your precision requirements on a scale of 1-10 by considering:

1. **Output structure**: How structured must the output be?

* Free-form text: Low precision (1-3)

* Semi-structured: Medium precision (4-7)

* Strictly formatted (JSON, XML): High precision (8-10)

2. **Accuracy needs**: How important is factual accuracy?

* Creative content: Low precision (1-3)

* Informational content: Medium precision (4-7)

* Critical information: High precision (8-10)

3. **Reproducibility**: How consistent must results be across runs?

* Variation acceptable: Low precision (1-3)

* Some consistency needed: Medium precision (4-7)

* Exact reproducibility required: High precision (8-10)

4. **Error tolerance**: What is the impact of errors?

* Low impact: Low precision (1-3)

* Moderate impact: Medium precision (4-7)

* High impact: High precision (8-10)

Calculate your average score to determine overall precision requirements.

### Step 3: Map to the Matrix

Plot your complexity and precision scores on the matrix:

* **Low Complexity (1-4), Low Precision (1-4)**: Simple Crews

* **Low Complexity (1-4), High Precision (5-10)**: Flows with direct LLM calls

* **High Complexity (5-10), Low Precision (1-4)**: Complex Crews

* **High Complexity (5-10), High Precision (5-10)**: Flows orchestrating Crews

### Step 4: Consider Additional Factors

Beyond complexity and precision, consider:

1. **Development time**: Crews are often faster to prototype

2. **Maintenance needs**: Flows provide better long-term maintainability

3. **Team expertise**: Consider your team's familiarity with different approaches

4. **Scalability requirements**: Flows typically scale better for complex applications

5. **Integration needs**: Consider how the solution will integrate with existing systems

## Conclusion

Choosing between Crews and Flows—or combining them—is a critical architectural decision that impacts the effectiveness, maintainability, and scalability of your CrewAI application. By evaluating your use case along the dimensions of complexity and precision, you can make informed decisions that align with your specific requirements.

Remember that the best approach often evolves as your application matures. Start with the simplest solution that meets your needs, and be prepared to refine your architecture as you gain experience and your requirements become clearer.

This matrix helps visualize how different approaches align with varying requirements for complexity and precision. Let's explore what each quadrant means and how it guides your architectural choices.

## The Complexity-Precision Matrix Explained

### What is Complexity?

In the context of CrewAI applications, **complexity** refers to:

* The number of distinct steps or operations required

* The diversity of tasks that need to be performed

* The interdependencies between different components

* The need for conditional logic and branching

* The sophistication of the overall workflow

### What is Precision?

**Precision** in this context refers to:

* The accuracy required in the final output

* The need for structured, predictable results

* The importance of reproducibility

* The level of control needed over each step

* The tolerance for variation in outputs

### The Four Quadrants

#### 1. Low Complexity, Low Precision

**Characteristics:**

* Simple, straightforward tasks

* Tolerance for some variation in outputs

* Limited number of steps

* Creative or exploratory applications

**Recommended Approach:** Simple Crews with minimal agents

**Example Use Cases:**

* Basic content generation

* Idea brainstorming

* Simple summarization tasks

* Creative writing assistance

#### 2. Low Complexity, High Precision

**Characteristics:**

* Simple workflows that require exact, structured outputs

* Need for reproducible results

* Limited steps but high accuracy requirements

* Often involves data processing or transformation

**Recommended Approach:** Flows with direct LLM calls or simple Crews with structured outputs

**Example Use Cases:**

* Data extraction and transformation

* Form filling and validation

* Structured content generation (JSON, XML)

* Simple classification tasks

#### 3. High Complexity, Low Precision

**Characteristics:**

* Multi-stage processes with many steps

* Creative or exploratory outputs

* Complex interactions between components

* Tolerance for variation in final results

**Recommended Approach:** Complex Crews with multiple specialized agents

**Example Use Cases:**

* Research and analysis

* Content creation pipelines

* Exploratory data analysis

* Creative problem-solving

#### 4. High Complexity, High Precision

**Characteristics:**

* Complex workflows requiring structured outputs

* Multiple interdependent steps with strict accuracy requirements

* Need for both sophisticated processing and precise results

* Often mission-critical applications

**Recommended Approach:** Flows orchestrating multiple Crews with validation steps

**Example Use Cases:**

* Enterprise decision support systems

* Complex data processing pipelines

* Multi-stage document processing

* Regulated industry applications

## Choosing Between Crews and Flows

### When to Choose Crews

Crews are ideal when:

1. **You need collaborative intelligence** - Multiple agents with different specializations need to work together

2. **The problem requires emergent thinking** - The solution benefits from different perspectives and approaches

3. **The task is primarily creative or analytical** - The work involves research, content creation, or analysis

4. **You value adaptability over strict structure** - The workflow can benefit from agent autonomy

5. **The output format can be somewhat flexible** - Some variation in output structure is acceptable

```python theme={null}

# Example: Research Crew for market analysis

from crewai import Agent, Crew, Process, Task

# Create specialized agents

researcher = Agent(

role="Market Research Specialist",

goal="Find comprehensive market data on emerging technologies",

backstory="You are an expert at discovering market trends and gathering data."

)

analyst = Agent(

role="Market Analyst",

goal="Analyze market data and identify key opportunities",

backstory="You excel at interpreting market data and spotting valuable insights."

)

# Define their tasks

research_task = Task(

description="Research the current market landscape for AI-powered healthcare solutions",

expected_output="Comprehensive market data including key players, market size, and growth trends",

agent=researcher

)

analysis_task = Task(

description="Analyze the market data and identify the top 3 investment opportunities",

expected_output="Analysis report with 3 recommended investment opportunities and rationale",

agent=analyst,

context=[research_task]

)

# Create the crew

market_analysis_crew = Crew(

agents=[researcher, analyst],

tasks=[research_task, analysis_task],

process=Process.sequential,

verbose=True

)

# Run the crew

result = market_analysis_crew.kickoff()

```

### When to Choose Flows

Flows are ideal when:

1. **You need precise control over execution** - The workflow requires exact sequencing and state management

2. **The application has complex state requirements** - You need to maintain and transform state across multiple steps

3. **You need structured, predictable outputs** - The application requires consistent, formatted results

4. **The workflow involves conditional logic** - Different paths need to be taken based on intermediate results

5. **You need to combine AI with procedural code** - The solution requires both AI capabilities and traditional programming

```python theme={null}

# Example: Customer Support Flow with structured processing

from crewai.flow.flow import Flow, listen, router, start

from pydantic import BaseModel

from typing import List, Dict

# Define structured state

class SupportTicketState(BaseModel):

ticket_id: str = ""

customer_name: str = ""

issue_description: str = ""

category: str = ""

priority: str = "medium"

resolution: str = ""

satisfaction_score: int = 0

class CustomerSupportFlow(Flow[SupportTicketState]):

@start()

def receive_ticket(self):

# In a real app, this might come from an API

self.state.ticket_id = "TKT-12345"

self.state.customer_name = "Alex Johnson"

self.state.issue_description = "Unable to access premium features after payment"

return "Ticket received"

@listen(receive_ticket)

def categorize_ticket(self, _):

# Use a direct LLM call for categorization

from crewai import LLM

llm = LLM(model="openai/gpt-4o-mini")

prompt = f"""

Categorize the following customer support issue into one of these categories:

- Billing

- Account Access

- Technical Issue

- Feature Request

- Other

Issue: {self.state.issue_description}

Return only the category name.

"""

self.state.category = llm.call(prompt).strip()

return self.state.category

@router(categorize_ticket)

def route_by_category(self, category):

# Route to different handlers based on category

return category.lower().replace(" ", "_")

@listen("billing")

def handle_billing_issue(self):

# Handle billing-specific logic

self.state.priority = "high"

# More billing-specific processing...

return "Billing issue handled"

@listen("account_access")

def handle_access_issue(self):

# Handle access-specific logic

self.state.priority = "high"

# More access-specific processing...

return "Access issue handled"

# Additional category handlers...

@listen("billing", "account_access", "technical_issue", "feature_request", "other")

def resolve_ticket(self, resolution_info):

# Final resolution step

self.state.resolution = f"Issue resolved: {resolution_info}"

return self.state.resolution

# Run the flow

support_flow = CustomerSupportFlow()

result = support_flow.kickoff()

```

### When to Combine Crews and Flows

The most sophisticated applications often benefit from combining Crews and Flows:

1. **Complex multi-stage processes** - Use Flows to orchestrate the overall process and Crews for complex subtasks

2. **Applications requiring both creativity and structure** - Use Crews for creative tasks and Flows for structured processing

3. **Enterprise-grade AI applications** - Use Flows to manage state and process flow while leveraging Crews for specialized work

```python theme={null}

# Example: Content Production Pipeline combining Crews and Flows

from crewai.flow.flow import Flow, listen, start

from crewai import Agent, Crew, Process, Task

from pydantic import BaseModel

from typing import List, Dict

class ContentState(BaseModel):

topic: str = ""

target_audience: str = ""

content_type: str = ""

outline: Dict = {}

draft_content: str = ""

final_content: str = ""

seo_score: int = 0

class ContentProductionFlow(Flow[ContentState]):

@start()

def initialize_project(self):

# Set initial parameters

self.state.topic = "Sustainable Investing"

self.state.target_audience = "Millennial Investors"

self.state.content_type = "Blog Post"

return "Project initialized"

@listen(initialize_project)

def create_outline(self, _):

# Use a research crew to create an outline

researcher = Agent(

role="Content Researcher",

goal=f"Research {self.state.topic} for {self.state.target_audience}",

backstory="You are an expert researcher with deep knowledge of content creation."

)

outliner = Agent(

role="Content Strategist",

goal=f"Create an engaging outline for a {self.state.content_type}",

backstory="You excel at structuring content for maximum engagement."

)

research_task = Task(

description=f"Research {self.state.topic} focusing on what would interest {self.state.target_audience}",

expected_output="Comprehensive research notes with key points and statistics",

agent=researcher

)

outline_task = Task(

description=f"Create an outline for a {self.state.content_type} about {self.state.topic}",

expected_output="Detailed content outline with sections and key points",

agent=outliner,

context=[research_task]

)

outline_crew = Crew(

agents=[researcher, outliner],

tasks=[research_task, outline_task],

process=Process.sequential,

verbose=True

)

# Run the crew and store the result

result = outline_crew.kickoff()

# Parse the outline (in a real app, you might use a more robust parsing approach)

import json

try:

self.state.outline = json.loads(result.raw)

except:

# Fallback if not valid JSON

self.state.outline = {"sections": result.raw}

return "Outline created"

@listen(create_outline)

def write_content(self, _):

# Use a writing crew to create the content

writer = Agent(

role="Content Writer",

goal=f"Write engaging content for {self.state.target_audience}",

backstory="You are a skilled writer who creates compelling content."

)

editor = Agent(

role="Content Editor",

goal="Ensure content is polished, accurate, and engaging",

backstory="You have a keen eye for detail and a talent for improving content."

)

writing_task = Task(

description=f"Write a {self.state.content_type} about {self.state.topic} following this outline: {self.state.outline}",

expected_output="Complete draft content in markdown format",

agent=writer

)

editing_task = Task(

description="Edit and improve the draft content for clarity, engagement, and accuracy",

expected_output="Polished final content in markdown format",

agent=editor,

context=[writing_task]

)

writing_crew = Crew(

agents=[writer, editor],

tasks=[writing_task, editing_task],

process=Process.sequential,

verbose=True

)

# Run the crew and store the result

result = writing_crew.kickoff()

self.state.final_content = result.raw

return "Content created"

@listen(write_content)

def optimize_for_seo(self, _):

# Use a direct LLM call for SEO optimization

from crewai import LLM

llm = LLM(model="openai/gpt-4o-mini")

prompt = f"""

Analyze this content for SEO effectiveness for the keyword "{self.state.topic}".

Rate it on a scale of 1-100 and provide 3 specific recommendations for improvement.

Content: {self.state.final_content[:1000]}... (truncated for brevity)

Format your response as JSON with the following structure:

{{

"score": 85,

"recommendations": [

"Recommendation 1",

"Recommendation 2",

"Recommendation 3"

]

}}

"""

seo_analysis = llm.call(prompt)

# Parse the SEO analysis

import json

try:

analysis = json.loads(seo_analysis)

self.state.seo_score = analysis.get("score", 0)

return analysis

except:

self.state.seo_score = 50

return {"score": 50, "recommendations": ["Unable to parse SEO analysis"]}

# Run the flow

content_flow = ContentProductionFlow()

result = content_flow.kickoff()

```

## Practical Evaluation Framework

To determine the right approach for your specific use case, follow this step-by-step evaluation framework:

### Step 1: Assess Complexity

Rate your application's complexity on a scale of 1-10 by considering:

1. **Number of steps**: How many distinct operations are required?

* 1-3 steps: Low complexity (1-3)

* 4-7 steps: Medium complexity (4-7)

* 8+ steps: High complexity (8-10)

2. **Interdependencies**: How interconnected are the different parts?

* Few dependencies: Low complexity (1-3)

* Some dependencies: Medium complexity (4-7)

* Many complex dependencies: High complexity (8-10)

3. **Conditional logic**: How much branching and decision-making is needed?

* Linear process: Low complexity (1-3)

* Some branching: Medium complexity (4-7)

* Complex decision trees: High complexity (8-10)

4. **Domain knowledge**: How specialized is the knowledge required?

* General knowledge: Low complexity (1-3)

* Some specialized knowledge: Medium complexity (4-7)

* Deep expertise in multiple domains: High complexity (8-10)

Calculate your average score to determine overall complexity.

### Step 2: Assess Precision Requirements

Rate your precision requirements on a scale of 1-10 by considering:

1. **Output structure**: How structured must the output be?

* Free-form text: Low precision (1-3)

* Semi-structured: Medium precision (4-7)

* Strictly formatted (JSON, XML): High precision (8-10)

2. **Accuracy needs**: How important is factual accuracy?

* Creative content: Low precision (1-3)

* Informational content: Medium precision (4-7)

* Critical information: High precision (8-10)

3. **Reproducibility**: How consistent must results be across runs?

* Variation acceptable: Low precision (1-3)

* Some consistency needed: Medium precision (4-7)

* Exact reproducibility required: High precision (8-10)

4. **Error tolerance**: What is the impact of errors?

* Low impact: Low precision (1-3)

* Moderate impact: Medium precision (4-7)

* High impact: High precision (8-10)

Calculate your average score to determine overall precision requirements.

### Step 3: Map to the Matrix

Plot your complexity and precision scores on the matrix:

* **Low Complexity (1-4), Low Precision (1-4)**: Simple Crews

* **Low Complexity (1-4), High Precision (5-10)**: Flows with direct LLM calls

* **High Complexity (5-10), Low Precision (1-4)**: Complex Crews

* **High Complexity (5-10), High Precision (5-10)**: Flows orchestrating Crews

### Step 4: Consider Additional Factors

Beyond complexity and precision, consider:

1. **Development time**: Crews are often faster to prototype

2. **Maintenance needs**: Flows provide better long-term maintainability

3. **Team expertise**: Consider your team's familiarity with different approaches

4. **Scalability requirements**: Flows typically scale better for complex applications

5. **Integration needs**: Consider how the solution will integrate with existing systems

## Conclusion

Choosing between Crews and Flows—or combining them—is a critical architectural decision that impacts the effectiveness, maintainability, and scalability of your CrewAI application. By evaluating your use case along the dimensions of complexity and precision, you can make informed decisions that align with your specific requirements.

Remember that the best approach often evolves as your application matures. Start with the simplest solution that meets your needs, and be prepared to refine your architecture as you gain experience and your requirements become clearer.

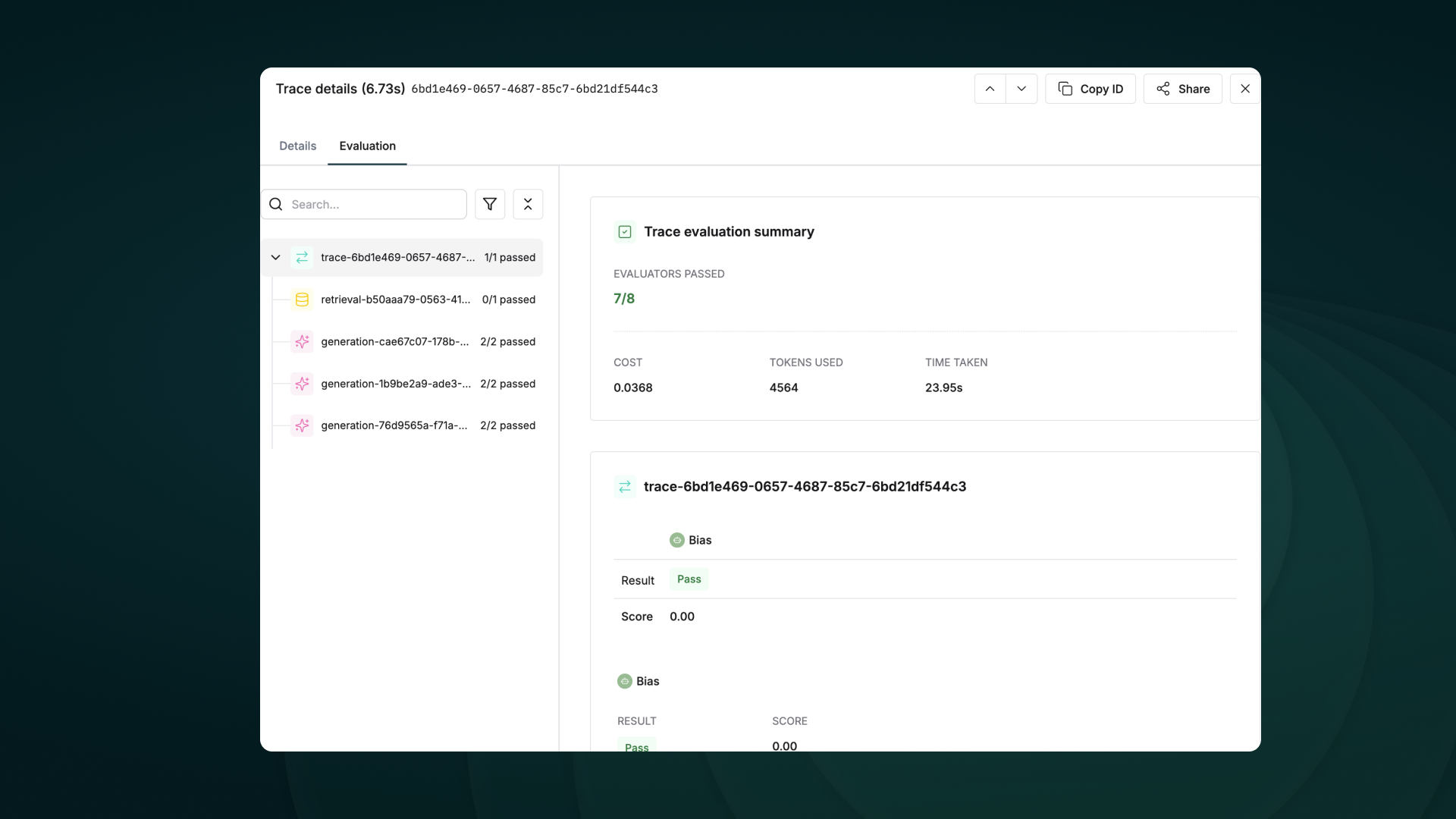

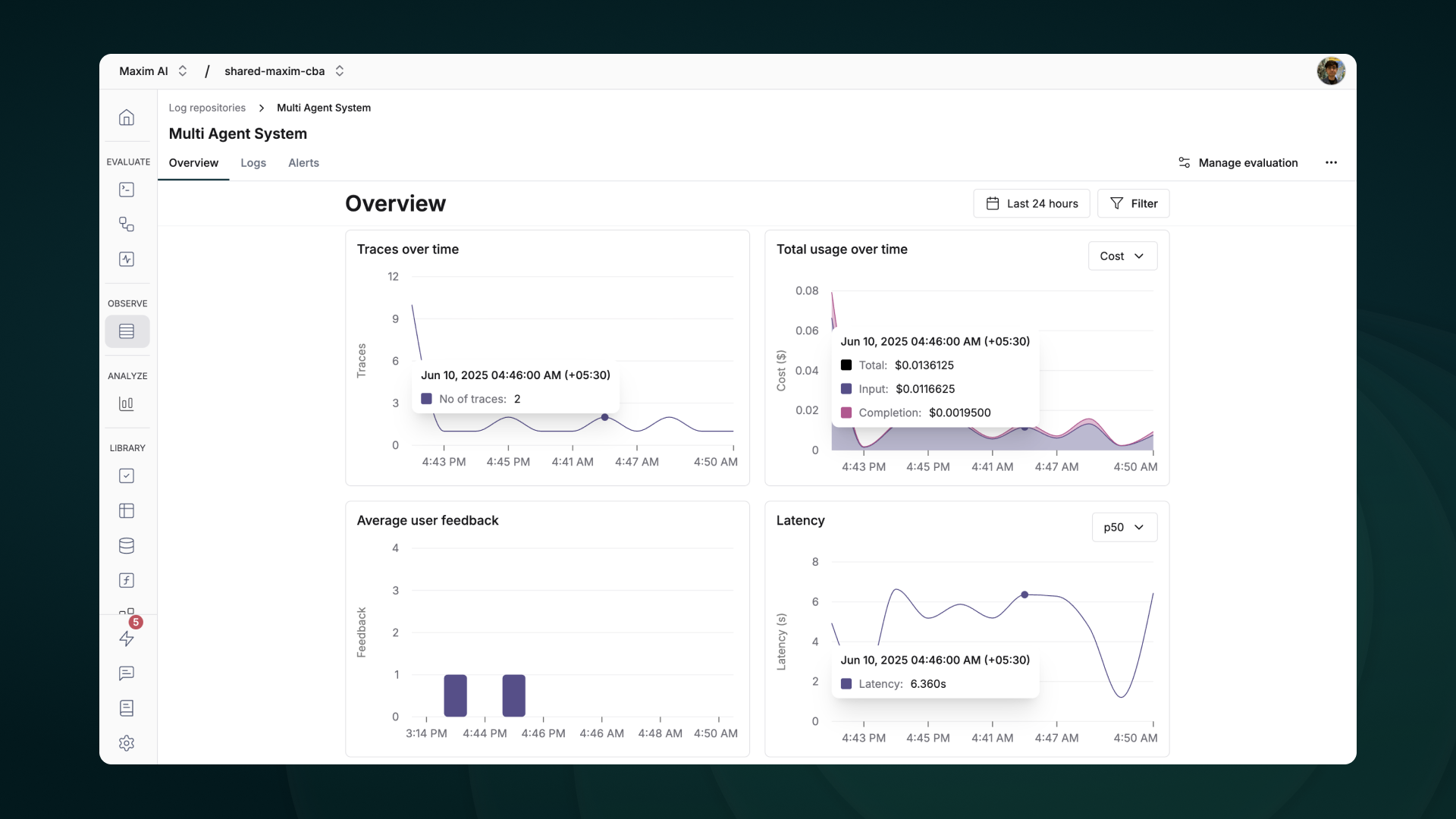

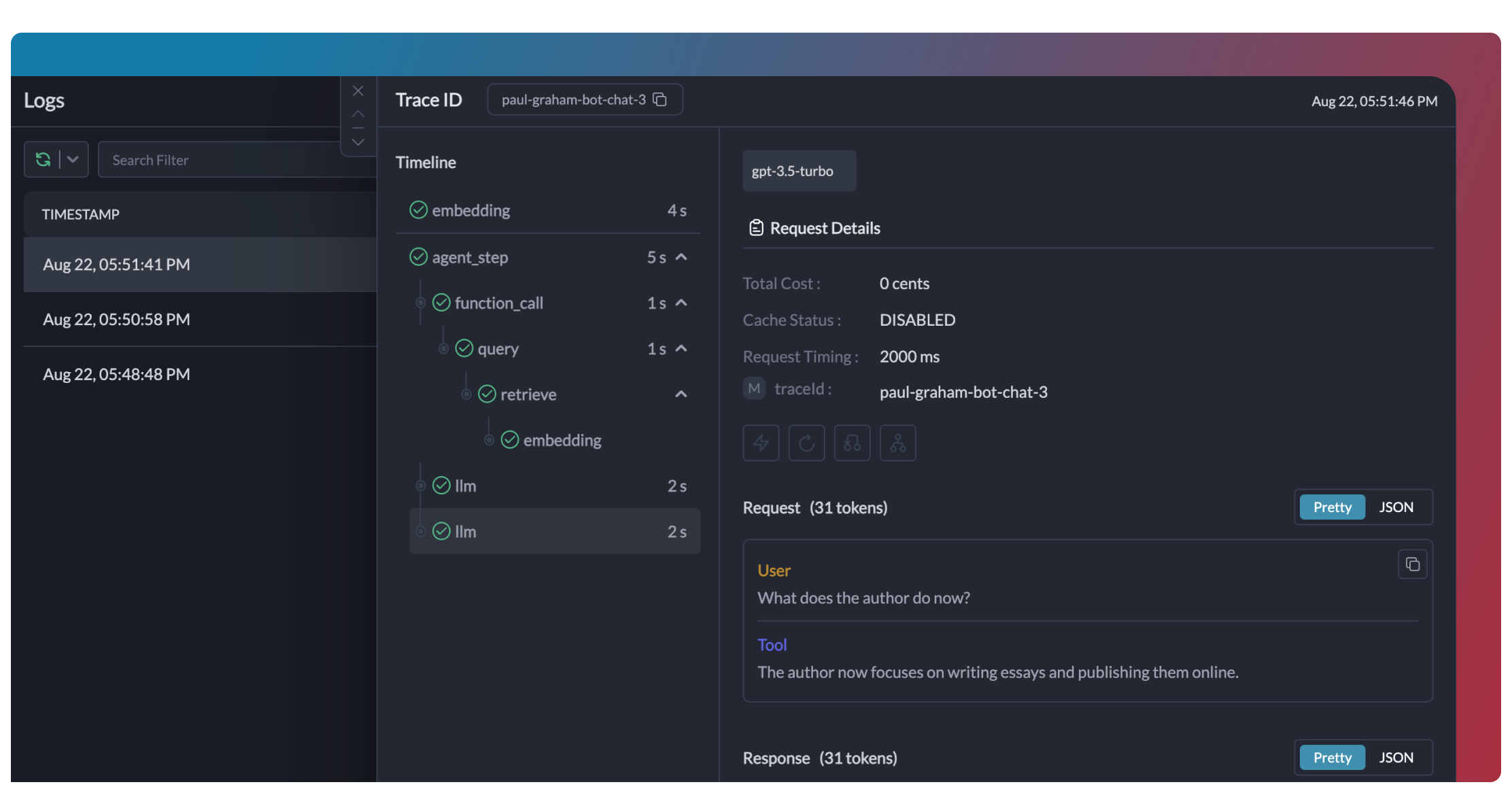

With Prompt Tracing you can:

* View the complete history of all prompts sent to your LLM

* Track token usage and costs

* Debug agent reasoning failures

* Share prompt sequences with your team

* Compare different prompt strategies

* Export traces for compliance and auditing

With Prompt Tracing you can:

* View the complete history of all prompts sent to your LLM

* Track token usage and costs

* Debug agent reasoning failures

* Share prompt sequences with your team

* Compare different prompt strategies

* Export traces for compliance and auditing

## Step 2: Explore the Project Structure

Let's take a moment to understand the project structure created by the CLI. CrewAI follows best practices for Python projects, making it easy to maintain and extend your code as your crews become more complex.

```

research_crew/

├── .gitignore

├── pyproject.toml

├── README.md

├── .env

└── src/

└── research_crew/

├── __init__.py

├── main.py

├── crew.py

├── tools/

│ ├── custom_tool.py

│ └── __init__.py

└── config/

├── agents.yaml

└── tasks.yaml

```

This structure follows best practices for Python projects and makes it easy to organize your code. The separation of configuration files (in YAML) from implementation code (in Python) makes it easy to modify your crew's behavior without changing the underlying code.

## Step 3: Configure Your Agents

Now comes the fun part - defining your AI agents! In CrewAI, agents are specialized entities with specific roles, goals, and backstories that shape their behavior. Think of them as characters in a play, each with their own personality and purpose.

For our research crew, we'll create two agents:

1. A **researcher** who excels at finding and organizing information

2. An **analyst** who can interpret research findings and create insightful reports

Let's modify the `agents.yaml` file to define these specialized agents. Be sure

to set `llm` to the provider you are using.

```yaml theme={null}

# src/research_crew/config/agents.yaml

researcher:

role: >

Senior Research Specialist for {topic}

goal: >

Find comprehensive and accurate information about {topic}

with a focus on recent developments and key insights

backstory: >

You are an experienced research specialist with a talent for

finding relevant information from various sources. You excel at

organizing information in a clear and structured manner, making

complex topics accessible to others.

llm: provider/model-id # e.g. openai/gpt-4o, google/gemini-2.0-flash, anthropic/claude...

analyst:

role: >

Data Analyst and Report Writer for {topic}

goal: >

Analyze research findings and create a comprehensive, well-structured

report that presents insights in a clear and engaging way

backstory: >

You are a skilled analyst with a background in data interpretation

and technical writing. You have a talent for identifying patterns

and extracting meaningful insights from research data, then

communicating those insights effectively through well-crafted reports.

llm: provider/model-id # e.g. openai/gpt-4o, google/gemini-2.0-flash, anthropic/claude...

```

Notice how each agent has a distinct role, goal, and backstory. These elements aren't just descriptive - they actively shape how the agent approaches its tasks. By crafting these carefully, you can create agents with specialized skills and perspectives that complement each other.

## Step 4: Define Your Tasks

With our agents defined, we now need to give them specific tasks to perform. Tasks in CrewAI represent the concrete work that agents will perform, with detailed instructions and expected outputs.

For our research crew, we'll define two main tasks:

1. A **research task** for gathering comprehensive information

2. An **analysis task** for creating an insightful report

Let's modify the `tasks.yaml` file:

```yaml theme={null}

# src/research_crew/config/tasks.yaml

research_task:

description: >

Conduct thorough research on {topic}. Focus on:

1. Key concepts and definitions

2. Historical development and recent trends

3. Major challenges and opportunities

4. Notable applications or case studies

5. Future outlook and potential developments

Make sure to organize your findings in a structured format with clear sections.

expected_output: >

A comprehensive research document with well-organized sections covering

all the requested aspects of {topic}. Include specific facts, figures,

and examples where relevant.

agent: researcher

analysis_task:

description: >

Analyze the research findings and create a comprehensive report on {topic}.

Your report should:

1. Begin with an executive summary

2. Include all key information from the research

3. Provide insightful analysis of trends and patterns

4. Offer recommendations or future considerations

5. Be formatted in a professional, easy-to-read style with clear headings

expected_output: >

A polished, professional report on {topic} that presents the research

findings with added analysis and insights. The report should be well-structured

with an executive summary, main sections, and conclusion.

agent: analyst

context:

- research_task

output_file: output/report.md

```

Note the `context` field in the analysis task - this is a powerful feature that allows the analyst to access the output of the research task. This creates a workflow where information flows naturally between agents, just as it would in a human team.

## Step 5: Configure Your Crew

Now it's time to bring everything together by configuring our crew. The crew is the container that orchestrates how agents work together to complete tasks.

Let's modify the `crew.py` file:

```python theme={null}

# src/research_crew/crew.py

from crewai import Agent, Crew, Process, Task

from crewai.project import CrewBase, agent, crew, task

from crewai_tools import SerperDevTool

from crewai.agents.agent_builder.base_agent import BaseAgent

from typing import List

@CrewBase

class ResearchCrew():

"""Research crew for comprehensive topic analysis and reporting"""

agents: List[BaseAgent]

tasks: List[Task]

@agent

def researcher(self) -> Agent:

return Agent(

config=self.agents_config['researcher'], # type: ignore[index]

verbose=True,

tools=[SerperDevTool()]

)

@agent

def analyst(self) -> Agent:

return Agent(

config=self.agents_config['analyst'], # type: ignore[index]

verbose=True

)

@task

def research_task(self) -> Task:

return Task(

config=self.tasks_config['research_task'] # type: ignore[index]

)

@task

def analysis_task(self) -> Task:

return Task(

config=self.tasks_config['analysis_task'], # type: ignore[index]

output_file='output/report.md'

)

@crew

def crew(self) -> Crew:

"""Creates the research crew"""

return Crew(

agents=self.agents,

tasks=self.tasks,

process=Process.sequential,

verbose=True,

)

```

In this code, we're:

1. Creating the researcher agent and equipping it with the SerperDevTool to search the web

2. Creating the analyst agent

3. Setting up the research and analysis tasks

4. Configuring the crew to run tasks sequentially (the analyst will wait for the researcher to finish)

This is where the magic happens - with just a few lines of code, we've defined a collaborative AI system where specialized agents work together in a coordinated process.

## Step 6: Set Up Your Main Script

Now, let's set up the main script that will run our crew. This is where we provide the specific topic we want our crew to research.

```python theme={null}

#!/usr/bin/env python

# src/research_crew/main.py

import os

from research_crew.crew import ResearchCrew

# Create output directory if it doesn't exist

os.makedirs('output', exist_ok=True)

def run():

"""

Run the research crew.

"""

inputs = {

'topic': 'Artificial Intelligence in Healthcare'

}

# Create and run the crew

result = ResearchCrew().crew().kickoff(inputs=inputs)

# Print the result

print("\n\n=== FINAL REPORT ===\n\n")

print(result.raw)

print("\n\nReport has been saved to output/report.md")

if __name__ == "__main__":

run()

```

This script prepares the environment, specifies our research topic, and kicks off the crew's work. The power of CrewAI is evident in how simple this code is - all the complexity of managing multiple AI agents is handled by the framework.

## Step 7: Set Up Your Environment Variables

Create a `.env` file in your project root with your API keys:

```sh theme={null}

SERPER_API_KEY=your_serper_api_key

# Add your provider's API key here too.

```

See the [LLM Setup guide](/en/concepts/llms#setting-up-your-llm) for details on configuring your provider of choice. You can get a Serper API key from [Serper.dev](https://serper.dev/).

## Step 8: Install Dependencies

Install the required dependencies using the CrewAI CLI:

```bash theme={null}

crewai install

```

This command will:

1. Read the dependencies from your project configuration

2. Create a virtual environment if needed

3. Install all required packages

## Step 9: Run Your Crew

Now for the exciting moment - it's time to run your crew and see AI collaboration in action!

```bash theme={null}

crewai run

```

When you run this command, you'll see your crew spring to life. The researcher will gather information about the specified topic, and the analyst will then create a comprehensive report based on that research. You'll see the agents' thought processes, actions, and outputs in real-time as they work together to complete their tasks.

## Step 10: Review the Output

Once the crew completes its work, you'll find the final report in the `output/report.md` file. The report will include:

1. An executive summary

2. Detailed information about the topic

3. Analysis and insights

4. Recommendations or future considerations

Take a moment to appreciate what you've accomplished - you've created a system where multiple AI agents collaborated on a complex task, each contributing their specialized skills to produce a result that's greater than what any single agent could achieve alone.

## Exploring Other CLI Commands

CrewAI offers several other useful CLI commands for working with crews:

```bash theme={null}

# View all available commands

crewai --help

# Run the crew

crewai run

# Test the crew

crewai test

# Reset crew memories

crewai reset-memories

# Replay from a specific task

crewai replay -t

## Step 2: Explore the Project Structure

Let's take a moment to understand the project structure created by the CLI. CrewAI follows best practices for Python projects, making it easy to maintain and extend your code as your crews become more complex.

```

research_crew/

├── .gitignore

├── pyproject.toml

├── README.md

├── .env

└── src/

└── research_crew/

├── __init__.py

├── main.py

├── crew.py

├── tools/

│ ├── custom_tool.py

│ └── __init__.py

└── config/

├── agents.yaml

└── tasks.yaml

```

This structure follows best practices for Python projects and makes it easy to organize your code. The separation of configuration files (in YAML) from implementation code (in Python) makes it easy to modify your crew's behavior without changing the underlying code.

## Step 3: Configure Your Agents

Now comes the fun part - defining your AI agents! In CrewAI, agents are specialized entities with specific roles, goals, and backstories that shape their behavior. Think of them as characters in a play, each with their own personality and purpose.

For our research crew, we'll create two agents:

1. A **researcher** who excels at finding and organizing information

2. An **analyst** who can interpret research findings and create insightful reports

Let's modify the `agents.yaml` file to define these specialized agents. Be sure

to set `llm` to the provider you are using.

```yaml theme={null}

# src/research_crew/config/agents.yaml

researcher:

role: >

Senior Research Specialist for {topic}

goal: >

Find comprehensive and accurate information about {topic}

with a focus on recent developments and key insights

backstory: >

You are an experienced research specialist with a talent for

finding relevant information from various sources. You excel at

organizing information in a clear and structured manner, making

complex topics accessible to others.

llm: provider/model-id # e.g. openai/gpt-4o, google/gemini-2.0-flash, anthropic/claude...

analyst:

role: >

Data Analyst and Report Writer for {topic}

goal: >

Analyze research findings and create a comprehensive, well-structured

report that presents insights in a clear and engaging way

backstory: >

You are a skilled analyst with a background in data interpretation

and technical writing. You have a talent for identifying patterns

and extracting meaningful insights from research data, then

communicating those insights effectively through well-crafted reports.

llm: provider/model-id # e.g. openai/gpt-4o, google/gemini-2.0-flash, anthropic/claude...

```

Notice how each agent has a distinct role, goal, and backstory. These elements aren't just descriptive - they actively shape how the agent approaches its tasks. By crafting these carefully, you can create agents with specialized skills and perspectives that complement each other.

## Step 4: Define Your Tasks

With our agents defined, we now need to give them specific tasks to perform. Tasks in CrewAI represent the concrete work that agents will perform, with detailed instructions and expected outputs.

For our research crew, we'll define two main tasks:

1. A **research task** for gathering comprehensive information

2. An **analysis task** for creating an insightful report

Let's modify the `tasks.yaml` file:

```yaml theme={null}

# src/research_crew/config/tasks.yaml

research_task:

description: >

Conduct thorough research on {topic}. Focus on:

1. Key concepts and definitions

2. Historical development and recent trends

3. Major challenges and opportunities

4. Notable applications or case studies

5. Future outlook and potential developments

Make sure to organize your findings in a structured format with clear sections.

expected_output: >