| Variable | Value Description | Value Example |

|---|---|---|

| `SANDBOX_SYSTEM_URL` |

The base URL to access the {user.productName} system. The URL is ` |

`https://sandboxes.cloud` |

| `SANDBOX_SYSTEM_DOMAIN` | The domain part of `SANDBOX_SYSTEM_URL` | `sandboxes.cloud` |

| `SANDBOX_SYSTEM_DNS_SUFFIX` | The suffix for constructing DNS names after `SANDBOX_SYSTEM_DOMAIN` | `.sandboxes.cloud` |

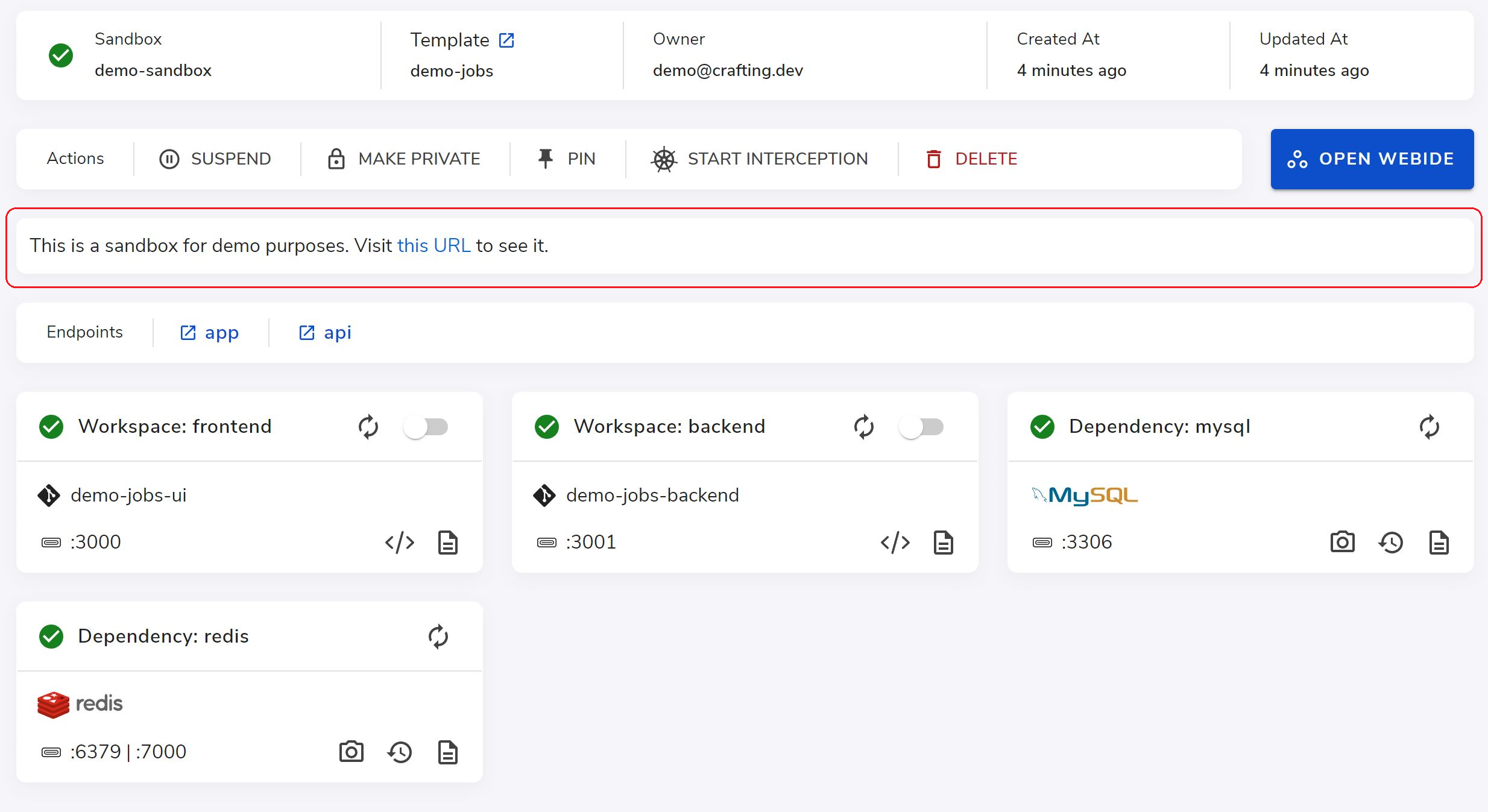

| `SANDBOX_ORG` | The name of the current organization. | `crafting` |

| `SANDBOX_ORG_ID` | The ID of the current organization. | |

| `SANDBOX_NAME` | The name of the current Sandbox. | `mysandbox` |

| `SANDBOX_ID` | The ID of the current Sandbox. | |

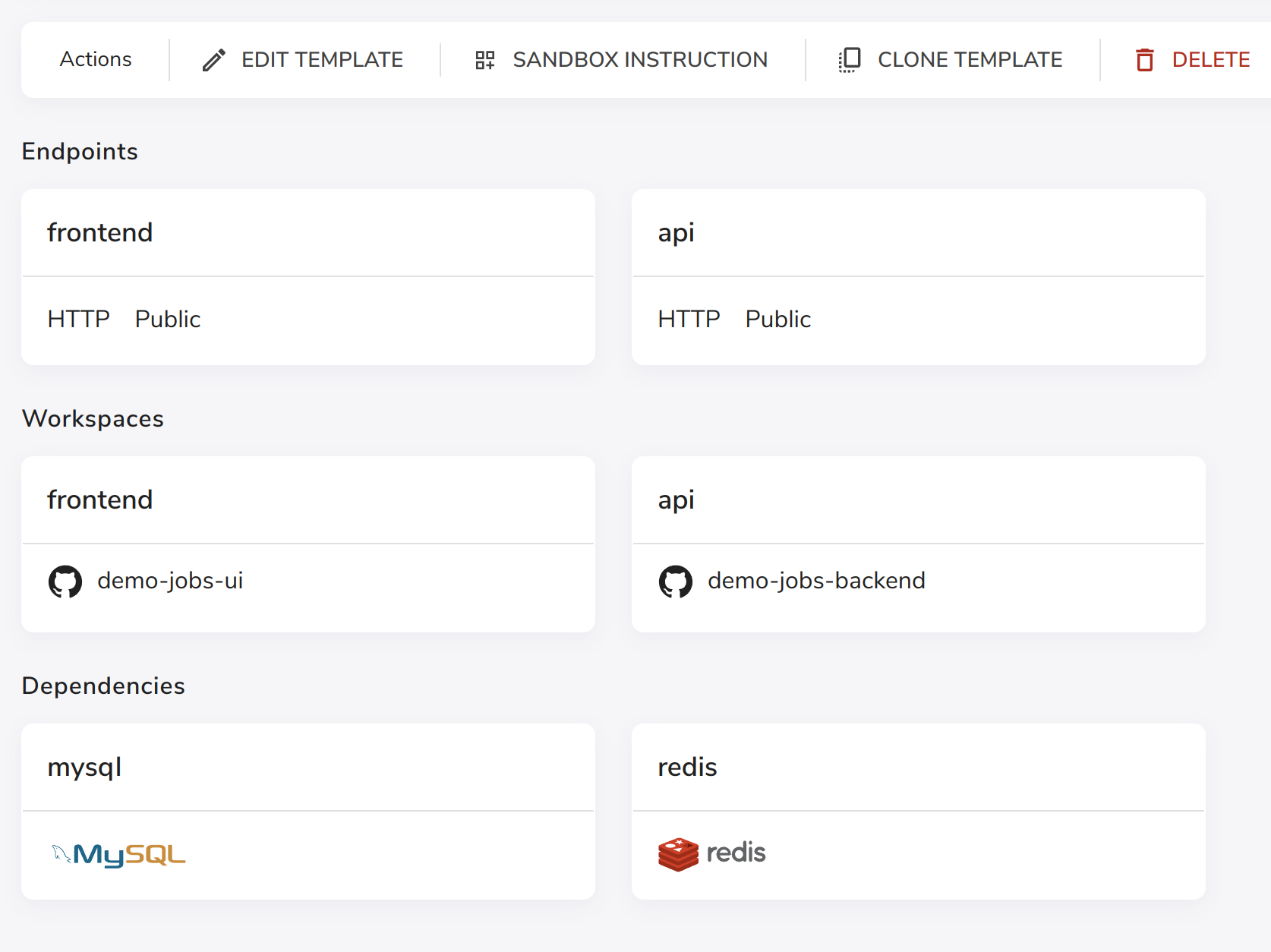

| `SANDBOX_APP` | The name of the Template that the Sandbox is created from. It's only available when the Sandbox is created from a Template. | `crafting-backend-dev` |

| `SANDBOX_WORKSPACE` | The name of the current workspace. | `api` |

| `SANDBOX_OWNER_ID` | The ID of the Sandbox owner, if available. | |

| `SANDBOX_OWNER_EMAIL` | The email of the Sandbox owner, if available. | `demo@crafting.dev` |

| `SANDBOX_OWNER_NAME` | The display name of the Sandbox owner, if available. | |

| `SANDBOX_APP_DOMAIN` | The Internet facing DNS domain of the Sandbox. Often, it has the format `${SANDBOX_NAME}-${SANDBOX_ORG}.sandboxes.run` | `mysandbox-org.sandboxes.run` |

| `SANDBOX_ENDPOINT_DNS_SUFFIX` | The suffix for Internet facing DNS names of endpoints. The complete DNS name of an endpoint can be constructed using `${ENDPOINT_NAME}${SANDBOX_ENDPOINT_DNS_SUFFIX}` | `--mysandbox-org.sandboxes.run` |

| `SANDBOX_JOB_ID` | The job ID, if the sandbox is created for a job | |

| `SANDBOX_JOB_EXEC_ID` | The job execution ID, if the sandbox is created for a job | |

| `SANDBOX_POOL_ID` | The sandbox Pool ID if the sandbox is currently in the pool | |

|

`__SERVICE_HOST`\

`__SERVICE_PORT`\

`*_SERVICE_PORT_ |

Service linking environment variables. See [Use environment variables for service linking](#use-environment-variables-for-service-linking) below. | `MYSQL_SERVICE_HOST=mysql`\ `MYSQL_SERVICE_PORT=3306`\ `MYSQL_SERVICE_PORT_MYSQL=3306` |
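A minimal sketch of how a program running in a Sandbox might consume these variables: it derives the system domain from `SANDBOX_SYSTEM_URL`, builds an endpoint's Internet-facing DNS name with `${ENDPOINT_NAME}${SANDBOX_ENDPOINT_DNS_SUFFIX}`, and collects the service linking host/port pairs. The endpoint name `api` and the helper function names are hypothetical; the example values are copied from the table above.

```python
from typing import Dict, Mapping, Tuple
from urllib.parse import urlparse


def system_domain(env: Mapping[str, str]) -> str:
    """Domain part of SANDBOX_SYSTEM_URL (expected to match SANDBOX_SYSTEM_DOMAIN)."""
    return urlparse(env["SANDBOX_SYSTEM_URL"]).hostname or ""


def endpoint_hostname(endpoint: str, env: Mapping[str, str]) -> str:
    """Internet-facing DNS name of an endpoint: ${ENDPOINT_NAME}${SANDBOX_ENDPOINT_DNS_SUFFIX}."""
    return endpoint + env["SANDBOX_ENDPOINT_DNS_SUFFIX"]


def linked_services(env: Mapping[str, str]) -> Dict[str, Tuple[str, int]]:
    """Map each <PREFIX>_SERVICE_HOST / <PREFIX>_SERVICE_PORT pair to (host, port)."""
    services: Dict[str, Tuple[str, int]] = {}
    suffix = "_SERVICE_HOST"
    for key, host in env.items():
        if key.endswith(suffix):
            prefix = key[: -len(suffix)]
            port = env.get(prefix + "_SERVICE_PORT")
            if port is not None:  # skip hosts without a matching port variable
                services[prefix] = (host, int(port))
    return services


if __name__ == "__main__":
    # Example values from the table; inside a Sandbox you could pass dict(os.environ) instead.
    example_env = {
        "SANDBOX_SYSTEM_URL": "https://sandboxes.cloud",
        "SANDBOX_ENDPOINT_DNS_SUFFIX": "--mysandbox-org.sandboxes.run",
        "MYSQL_SERVICE_HOST": "mysql",
        "MYSQL_SERVICE_PORT": "3306",
        "MYSQL_SERVICE_PORT_MYSQL": "3306",
    }
    print(system_domain(example_env))              # sandboxes.cloud
    print(endpoint_hostname("api", example_env))   # api--mysandbox-org.sandboxes.run
    print(linked_services(example_env))            # {'MYSQL': ('mysql', 3306)}
```

Passing the environment as a mapping rather than reading `os.environ` directly keeps the helpers easy to exercise outside a Sandbox.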

| Hook | When to Run |
|---|---|
| `post-checkout` | Optional. Runs after new code changes are pulled from the remote repository. |
| `build` | Runs after new code changes are pulled from the remote repository, following `post-checkout`. If unspecified, no build is performed. |
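The ordering in the hooks table can be modeled as a small function. This is a hypothetical sketch of the run order only, not how hooks are actually configured or executed; the function name and the command strings are made up for illustration.

```python
from typing import List, Mapping, Optional

# Order taken from the hooks table: post-checkout first, then build.
HOOK_ORDER = ("post-checkout", "build")


def hooks_after_pull(hooks: Mapping[str, Optional[str]]) -> List[str]:
    """Return the hook commands to run after new code is pulled, in execution order.

    Unset or empty hooks are skipped; in particular, no build runs when
    `build` is unspecified.
    """
    return [hooks[name] for name in HOOK_ORDER if hooks.get(name)]


if __name__ == "__main__":
    # Hypothetical hook commands, for illustration only.
    print(hooks_after_pull({"build": "make", "post-checkout": "git submodule update --init"}))
    # ['git submodule update --init', 'make']
    print(hooks_after_pull({"post-checkout": "npm ci"}))
    # ['npm ci']
```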

| Service Type | Property Key | Description |
|---|---|---|
| mysql | `root-password` | The initial password for `root`. Defaults to empty (no password required for `root`). |
| | `username` | The regular user to be created. |
| | `password` | The password for the regular user. It's only used if … |
| | `database` | The database to be created, with access granted to the regular user (if …) |
| postgres | `username` | The regular user to be created. |
| | `password` | The password for the regular user. It's only used if … |
| | `database` | The database to be created, with access granted to the regular user (if …) |
| mongodb | `replicaset` | The name of the replica set. If specified, the single MongoDB server is configured as a single-member replica set. |
| redis | `persistence` | If specified, turns on persistence. Use one of the values: … |
| | `save` | The … |
| | `cluster` | If the value is … |
| rabbitmq | `default-user` | The default username for authentication. If unspecified, the `guest` user can access the server. |
| | `default-pass` | The password for … |
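As an illustration of how these properties combine with the service linking variables, the sketch below builds client connection URIs for the postgres and mongodb services. It assumes the linked services are exposed with the prefixes `POSTGRES` and `MONGODB` (following the `MYSQL_SERVICE_HOST` naming in the environment variable table); those prefixes, the helper names, and the sample credentials are hypothetical. The `postgresql://` URI layout and MongoDB's `replicaSet` option are standard client conventions, not something defined by this page.

```python
from typing import Mapping
from urllib.parse import quote


def _linked_host_port(env: Mapping[str, str], prefix: str) -> str:
    # Assumed service linking variables, e.g. POSTGRES_SERVICE_HOST / POSTGRES_SERVICE_PORT.
    return f"{env[prefix + '_SERVICE_HOST']}:{env[prefix + '_SERVICE_PORT']}"


def postgres_uri(props: Mapping[str, str], env: Mapping[str, str], prefix: str = "POSTGRES") -> str:
    """Build a postgresql:// URI from the username, password, and database properties."""
    user = quote(props["username"], safe="")
    password = props.get("password")
    # Percent-encode credentials so characters like '@' don't break the URI.
    auth = f"{user}:{quote(password, safe='')}@" if password else f"{user}@"
    database = props.get("database")
    path = f"/{quote(database, safe='')}" if database else ""
    return f"postgresql://{auth}{_linked_host_port(env, prefix)}{path}"


def mongodb_uri(props: Mapping[str, str], env: Mapping[str, str], prefix: str = "MONGODB") -> str:
    """Build a mongodb:// URI, adding replicaSet=<name> when the replicaset property is set."""
    uri = f"mongodb://{_linked_host_port(env, prefix)}/"
    replicaset = props.get("replicaset")
    if replicaset:
        uri += f"?replicaSet={quote(replicaset, safe='')}"
    return uri


if __name__ == "__main__":
    # Hypothetical linked-service values and properties.
    env = {
        "POSTGRES_SERVICE_HOST": "postgres", "POSTGRES_SERVICE_PORT": "5432",
        "MONGODB_SERVICE_HOST": "mongodb", "MONGODB_SERVICE_PORT": "27017",
    }
    print(postgres_uri({"username": "app", "password": "p@ss", "database": "appdb"}, env))
    # postgresql://app:p%40ss@postgres:5432/appdb
    print(mongodb_uri({"replicaset": "rs0"}, env))
    # mongodb://mongodb:27017/?replicaSet=rs0
```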