# Comfy

> ## Documentation Index

---

# Source: https://docs.comfy.org/interface/settings/3d.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ComfyUI 3D Settings

> Detailed description of ComfyUI 3D setting options

This section of settings is mainly used to control the initialization settings of 3D-related components in ComfyUI, including camera, lighting, scene, etc. When creating new 3D components, they will be initialized according to these settings. After creation, these settings can still be adjusted individually.

## Camera

### Initial Camera Type

* **Options**:

* `perspective`

* `orthographic`

* **Function**: Controls whether the default camera is perspective or orthographic when creating new 3D components. This default setting can still be switched individually for each component after creation

## Light

The light settings in this section are used to set the default lighting settings for 3D components. The corresponding settings in the 3D settings in ComfyUI can also be modified.

### Light Adjustment Increment

* **Default Value**: 0.5

* **Function**: Controls the step size when adjusting light intensity in 3D scenes. Smaller step values allow for finer light adjustments, while larger values make each adjustment more noticeable

### Light Intensity Minimum

* **Default Value**: 1

* **Function**: Sets the minimum light intensity value allowed in 3D scenes. This defines the lowest brightness that can be set when adjusting the lighting of any 3D control

### Light Intensity Maximum

* **Default Value**: 10

* **Function**: Sets the maximum light intensity value allowed in 3D scenes. This defines the upper limit of brightness that can be set when adjusting the lighting of any 3D control

### Initial Light Intensity

* **Default Value**: 3

* **Function**: Sets the default brightness level of lights in 3D scenes. This value determines the intensity with which lights illuminate objects when creating new 3D controls, but each control can be adjusted individually after creation

## Scene

### Initial Background Color

* **Function**: Controls the default background color of 3D scenes. This setting determines the background appearance when creating new 3D components, but each component can be adjusted individually after creation

* **Default Value**: `282828` (dark gray)

Change the background color, which can also be adjusted in the canvas.

### Initial Preview Visibility

* **Function**: Controls whether the preview screen is displayed by default when creating new 3D components. This default setting can still be toggled individually for each component after creation

* **Default Value**: true (enabled)

### Initial Grid Visibility

* **Function**: Controls whether the grid is displayed by default when creating new 3D components. This default setting can still be toggled individually for each component after creation

* **Default Value**: true (enabled)

Hide or show the grid on initialization

---

# Source: https://docs.comfy.org/built-in-nodes/BasicScheduler.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# BasicScheduler - ComfyUI Built-in Node Documentation

> The BasicScheduler node is used to compute a sequence of sigma values for diffusion models based on the provided scheduler, model, and denoising parameters.

The `BasicScheduler` node is designed to compute a sequence of sigma values for diffusion models based on the provided scheduler, model, and denoising parameters. It dynamically adjusts the total number of steps based on the denoise factor to fine-tune the diffusion process, providing precise "recipes" for different stages in advanced sampling processes that require fine control (such as multi-stage sampling).

## Inputs

| Parameter | Data Type | Input Type | Default | Range | Metaphor Description | Technical Purpose |

| ----------- | -------------- | ---------- | ------- | --------- | ------------------------------------------------------------------------- | ---------------------------------------------------------- |

| `model` | MODEL | Input | - | - | **Canvas Type**: Different canvas materials need different paint formulas | Diffusion model object, determines sigma calculation basis |

| `scheduler` | COMBO\[STRING] | Widget | - | 9 options | **Mixing Technique**: Choose how paint concentration changes | Scheduling algorithm, controls noise decay mode |

| `steps` | INT | Widget | 20 | 1-10000 | **Mixing Count**: 20 mixes vs 50 mixes precision difference | Sampling steps, affects generation quality and speed |

| `denoise` | FLOAT | Widget | 1.0 | 0.0-1.0 | **Creation Intensity**: Control level from fine-tuning to repainting | Denoising strength, supports partial repainting scenarios |

### Scheduler Types

Based on source code `comfy.samplers.SCHEDULER_NAMES`, supports the following 9 schedulers:

| Scheduler Name | Characteristics | Use Cases | Noise Decay Pattern |

| --------------------- | ----------------- | ----------------------------- | ----------------------------- |

| **normal** | Standard linear | General scenarios, balanced | Uniform decay |

| **karras** | Smooth transition | High quality, detail-rich | Smooth non-linear decay |

| **exponential** | Exponential decay | Fast generation, efficiency | Exponential rapid decay |

| **sgm\_uniform** | SGM uniform | Specific model optimization | SGM optimized decay |

| **simple** | Simple scheduling | Quick testing, basic use | Simplified decay |

| **ddim\_uniform** | DDIM uniform | DDIM sampling optimization | DDIM specific decay |

| **beta** | Beta distribution | Special distribution needs | Beta function decay |

| **linear\_quadratic** | Linear quadratic | Complex scenario optimization | Quadratic function decay |

| **kl\_optimal** | KL optimal | Theoretical optimization | KL divergence optimized decay |

## Outputs

| Parameter | Data Type | Output Type | Metaphor Description | Technical Meaning |

| --------- | --------- | ----------- | ------------------------------------------------------------------------------ | -------------------------------------------------------------- |

| `sigmas` | SIGMAS | Output | **Paint Recipe Chart**: Detailed paint concentration list for step-by-step use | Noise level sequence, guides diffusion model denoising process |

## Node Role: Artist's Color Mixing Assistant

Imagine you are an artist creating a clear image from a chaotic mixture of paint (noise). `BasicScheduler` acts like your **professional color mixing assistant**, whose job is to prepare a series of precise paint concentration recipes:

### Workflow

* **Step 1**: Use 90% concentration paint (high noise level)

* **Step 2**: Use 80% concentration paint

* **Step 3**: Use 70% concentration paint

* **...**

* **Final Step**: Use 0% concentration (clean canvas, no noise)

### Color Assistant's Special Skills

**Different mixing methods (scheduler)**:

* **"karras" mixing method**: Paint concentration changes very smoothly, like professional artist's gradient technique

* **"exponential" mixing method**: Paint concentration decreases rapidly, suitable for quick creation

* **"linear" mixing method**: Paint concentration decreases uniformly, stable and controllable

**Fine control (steps)**:

* **20 mixes**: Quick painting, efficiency priority

* **50 mixes**: Fine painting, quality priority

**Creation intensity (denoise)**:

* **1.0 = Complete new creation**: Start completely from blank canvas

* **0.5 = Half transformation**: Keep half of original painting, transform half

* **0.2 = Fine adjustment**: Only make subtle adjustments to original painting

### Collaboration with Other Nodes

`BasicScheduler` (Color Assistant) → Prepare Recipe → `SamplerCustom` (Artist) → Actual Painting → Completed Work

---

# Source: https://docs.comfy.org/built-in-nodes/Canny.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

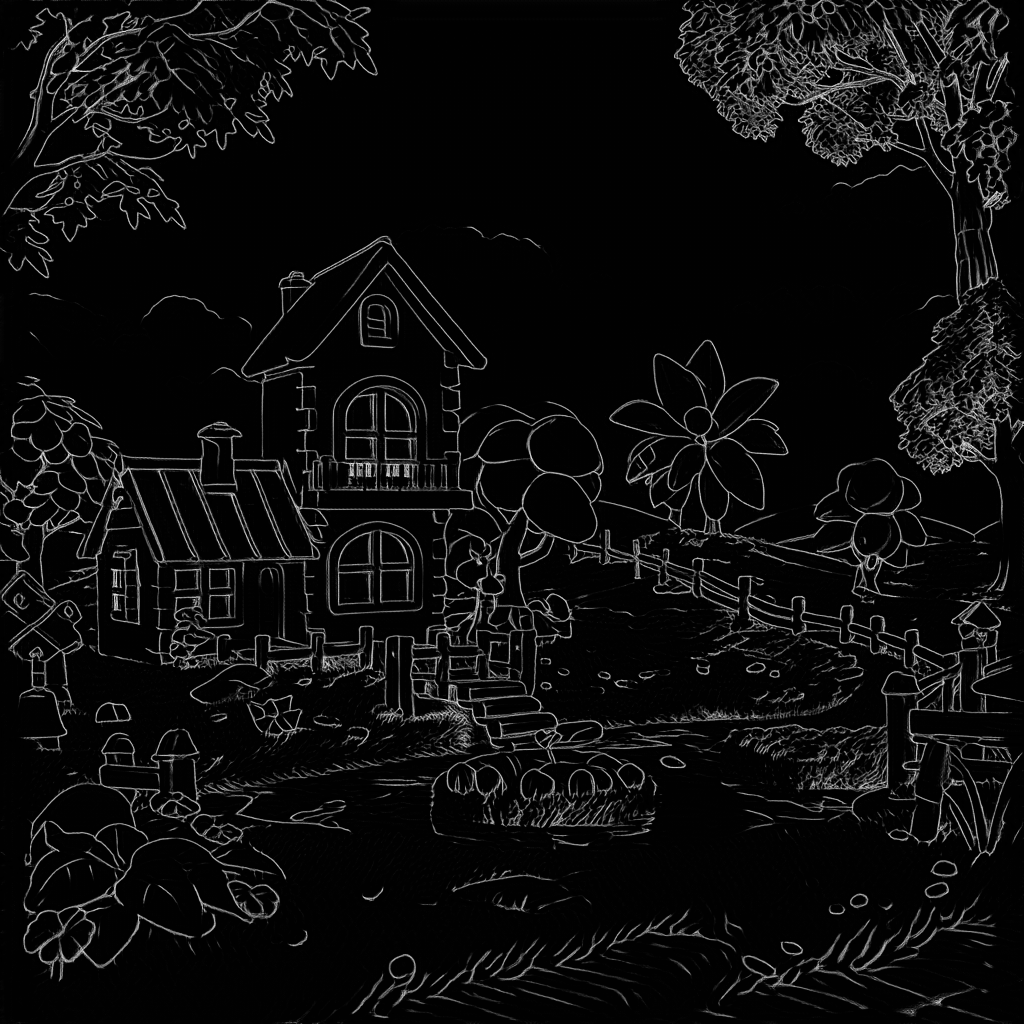

# Canny - ComfyUI Built-in Node Documentation

> The Canny node used to extract edge lines from photos.

Extract all edge lines from photos, like using a pen to outline a photo, drawing out the contours and detail boundaries of objects.

## Working Principle

Imagine you are an artist who needs to use a pen to outline a photo. The Canny node acts like an intelligent assistant, helping you decide where to draw lines (edges) and where not to.

This process is like a screening job:

* **High threshold** is the "must draw line standard": only very obvious and clear contour lines will be drawn, such as facial contours of people and building frames

* **Low threshold** is the "definitely don't draw line standard": edges that are too weak will be ignored to avoid drawing noise and meaningless lines

* **Middle area**: edges between the two standards will be drawn together if they connect to "must draw lines", but won't be drawn if they are isolated

The final output is a black and white image, where white parts are detected edge lines and black parts are areas without edges.

## Inputs

| Parameter Name | Data Type | Input Type | Default | Range | Function Description |

| ---------------- | --------- | ---------- | ------- | --------- | --------------------------------------------------------------------------------------------------------------- |

| `image` | IMAGE | Input | - | - | Original photo that needs edge extraction |

| `low_threshold` | FLOAT | Widget | 0.4 | 0.01-0.99 | Low threshold, determines how weak edges to ignore. Lower values preserve more details but may produce noise |

| `high_threshold` | FLOAT | Widget | 0.8 | 0.01-0.99 | High threshold, determines how strong edges to preserve. Higher values only keep the most obvious contour lines |

## Outputs

| Output Name | Data Type | Description |

| ----------- | --------- | ----------------------------------------------------------------------------------------------- |

| `image` | IMAGE | Black and white edge image, white lines are detected edges, black areas are parts without edges |

## Parameter Comparison

**Common Issues:**

* Broken edges: Try lowering high threshold

* Too much noise: Raise low threshold

* Missing important details: Lower low threshold

* Edges too rough: Check input image quality and resolution

---

# Source: https://docs.comfy.org/built-in-nodes/CheckpointLoaderSimple.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# CheckpointLoaderSimple - ComfyUI Built-in Node Documentation

> The CheckpointLoaderSimple node is used to load model files from specified locations and decompose them into three core components: the main model, text encoder, and image encoder/decoder.

This is a model loader node that loads model files from specified locations and decomposes them into three core components: the main model, text encoder, and image encoder/decoder.

This node automatically detects all model files in the `ComfyUI/models/checkpoints` folder, as well as additional paths configured in your `extra_model_paths.yaml` file.

1. **Model Compatibility**: Ensure the selected model is compatible with your workflow. Different model types (such as SD1.5, SDXL, Flux, etc.) need to be paired with corresponding samplers and other nodes

2. **File Management**: Place model files in the `ComfyUI/models/checkpoints` folder, or configure other paths through extra\_model\_paths.yaml

3. **Interface Refresh**: If new model files are added while ComfyUI is running, you need to refresh the browser (Ctrl+R) to see the new files in the dropdown list

## Inputs

| Parameter | Data Type | Input Type | Default | Range | Description |

| ----------- | --------- | ---------- | ------- | ------------------------------------- | ----------------------------------------------------------------------------------------------------------------- |

| `ckpt_name` | STRING | Widget | null | All model files in checkpoints folder | Select the checkpoint model file name to load, which determines the AI model used for subsequent image generation |

## Outputs

| Output Name | Data Type | Description |

| ----------- | --------- | --------------------------------------------------------------------------------------------------------------- |

| `MODEL` | MODEL | The main diffusion model used for image denoising generation, the core component of AI image creation |

| `CLIP` | CLIP | The model used for encoding text prompts, converting text descriptions into information that AI can understand |

| `VAE` | VAE | The model used for image encoding and decoding, responsible for converting between pixel space and latent space |

---

# Source: https://docs.comfy.org/built-in-nodes/ClipLoader.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipLoader - ComfyUI Built-in Node Documentation

> The ClipLoader node is used to load CLIP text encoder models independently.

This node is primarily used for loading CLIP text encoder models independently.

The model files can be detected in the following paths:

* "ComfyUI/models/text\_encoders/"

* "ComfyUI/models/clip/"

> If you save a model after ComfyUI has started, you'll need to refresh the ComfyUI frontend to get the latest model file path list

Supported model formats:

* `.ckpt`

* `.pt`

* `.pt2`

* `.bin`

* `.pth`

* `.safetensors`

* `.pkl`

* `.sft`

For more details on the latest model file loading, please refer to [folder\_paths](https://github.com/comfyanonymous/ComfyUI/blob/master/folder_paths.py)

## Inputs

| Parameter | Data Type | Description |

| ----------- | -------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| `clip_name` | COMBO\[STRING] | Specifies the name of the CLIP model to be loaded. This name is used to locate the model file within a predefined directory structure. |

| `type` | COMBO\[STRING] | Determines the type of CLIP model to load. As ComfyUI supports more models, new types will be added here. Please check the `CLIPLoader` class definition in [node.py](https://github.com/comfyanonymous/ComfyUI/blob/master/nodes.py) for details. |

| `device` | COMBO\[STRING] | Choose the device for loading the CLIP model. `default` will run the model on GPU, while selecting `CPU` will force loading on CPU. |

### Device Options Explained

**When to choose "default":**

* Have sufficient GPU memory

* Want the best performance

* Let the system optimize memory usage automatically

**When to choose "cpu":**

* Insufficient GPU memory

* Need to reserve GPU memory for other models (like UNet)

* Running in a low VRAM environment

* Debugging or special purpose needs

**Performance Impact**

Running on CPU will be much slower than GPU, but it can save valuable GPU memory for other more important model components. In memory-constrained environments, putting the CLIP model on CPU is a common optimization strategy.

### Supported Combinations

| Model Type | Corresponding Encoder |

| ----------------- | ----------------------- |

| stable\_diffusion | clip-l |

| stable\_cascade | clip-g |

| sd3 | t5 xxl/ clip-g / clip-l |

| stable\_audio | t5 base |

| mochi | t5 xxl |

| cosmos | old t5 xxl |

| lumina2 | gemma 2 2B |

| wan | umt5 xxl |

As ComfyUI updates, these combinations may expand. For details, please refer to the `CLIPLoader` class definition in [node.py](https://github.com/comfyanonymous/ComfyUI/blob/master/nodes.py)

## Outputs

| Parameter | Data Type | Description |

| --------- | --------- | ------------------------------------------------------------------------------- |

| `clip` | CLIP | The loaded CLIP model, ready for use in downstream tasks or further processing. |

## Additional Notes

CLIP models play a core role as text encoders in ComfyUI, responsible for converting text prompts into numerical representations that diffusion models can understand. You can think of them as translators, responsible for translating your text into a language that large models can understand. Of course, different models have their own "dialects," so different CLIP encoders are needed between different architectures to complete the text encoding process.

---

# Source: https://docs.comfy.org/built-in-nodes/ClipMergeSimple.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipMergeSimple - ComfyUI Built-in Node Documentation

> The ClipMergeSimple node is used to combine two CLIP text encoder models based on a specified ratio.

`CLIPMergeSimple` is an advanced model merging node used to combine two CLIP text encoder models based on a specified ratio.

This node specializes in merging two CLIP models based on a specified ratio, effectively blending their characteristics. It selectively applies patches from one model to another, excluding specific components like position IDs and logit scale, to create a hybrid model that combines features from both source models.

## Inputs

| Parameter | Data Type | Description |

| --------- | --------- | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| `clip1` | CLIP | The first CLIP model to be merged. It serves as the base model for the merging process. |

| `clip2` | CLIP | The second CLIP model to be merged. Its key patches, except for position IDs and logit scale, are applied to the first model based on the specified ratio. |

| `ratio` | FLOAT | Range `0.0 - 1.0`, determines the proportion of features from the second model to blend into the first model. A ratio of 1.0 means fully adopting the second model's features, while 0.0 retains only the first model's features. |

## Outputs

| Parameter | Data Type | Description |

| --------- | --------- | ---------------------------------------------------------------------------------------------------------------- |

| `clip` | CLIP | The resulting merged CLIP model, incorporating features from both input models according to the specified ratio. |

## Merging Mechanism Explained

### Merging Algorithm

The node uses weighted averaging to merge the two models:

1. **Clone Base Model**: First clones `clip1` as the base model

2. **Get Patches**: Obtains all key patches from `clip2`

3. **Filter Special Keys**: Skips keys ending with `.position_ids` and `.logit_scale`

4. **Apply Weighted Merge**: Uses the formula `(1.0 - ratio) * clip1 + ratio * clip2`

### Ratio Parameter Explained

* **ratio = 0.0**: Fully uses clip1, ignores clip2

* **ratio = 0.5**: 50% contribution from each model

* **ratio = 1.0**: Fully uses clip2, ignores clip1

## Use Cases

1. **Model Style Fusion**: Combine characteristics of CLIP models trained on different data

2. **Performance Optimization**: Balance strengths and weaknesses of different models

3. **Experimental Research**: Explore combinations of different CLIP encoders

---

# Source: https://docs.comfy.org/built-in-nodes/ClipSave.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipSave - ComfyUI Built-in Node Documentation

> The ClipSave node is used to save CLIP text encoder models in SafeTensors format.

The `CLIPSave` node is designed for saving CLIP text encoder models in SafeTensors format. This node is part of advanced model merging workflows and is typically used in conjunction with nodes like `CLIPMergeSimple` and `CLIPMergeAdd`. The saved files use the SafeTensors format to ensure security and compatibility.

## Inputs

| Parameter | Data Type | Required | Default Value | Description |

| ---------------- | -------------- | -------- | -------------- | ------------------------------------------ |

| clip | CLIP | Yes | - | The CLIP model to be saved |

| filename\_prefix | STRING | Yes | "clip/ComfyUI" | The prefix path for the saved file |

| prompt | PROMPT | Hidden | - | Workflow prompt information (for metadata) |

| extra\_pnginfo | EXTRA\_PNGINFO | Hidden | - | Additional PNG information (for metadata) |

## Outputs

This node has no defined output types. It saves the processed files to the `ComfyUI/output/` folder.

### Multi-file Saving Strategy

The node saves different components based on the CLIP model type:

| Prefix Type | File Suffix | Description |

| ------------ | ----------- | --------------------- |

| `clip_l.` | `_clip_l` | CLIP-L text encoder |

| `clip_g.` | `_clip_g` | CLIP-G text encoder |

| Empty prefix | No suffix | Other CLIP components |

## Usage Notes

1. **File Location**: All files are saved in the `ComfyUI/output/` directory

2. **File Format**: Models are saved in SafeTensors format for security

3. **Metadata**: Includes workflow information and PNG metadata if available

4. **Naming Convention**: Uses the specified prefix plus appropriate suffixes based on model type

---

# Source: https://docs.comfy.org/built-in-nodes/ClipSetLastLayer.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipSetLastLayer - ComfyUI Built-in Node Documentation

> The ClipSetLastLayer node is used to control the processing depth of CLIP models.

`CLIP Set Last Layer` is a core node in ComfyUI for controlling the processing depth of CLIP models. It allows users to precisely control where the CLIP text encoder stops processing, affecting both the depth of text understanding and the style of generated images.

Imagine the CLIP model as a 24-layer intelligent brain:

* Shallow layers (1-8): Recognize basic letters and words

* Middle layers (9-16): Understand grammar and sentence structure

* Deep layers (17-24): Grasp abstract concepts and complex semantics

`CLIP Set Last Layer` works like a **"thinking depth controller"**:

-1: Use all 24 layers (complete understanding)

-2: Stop at layer 23 (slightly simplified)

-12: Stop at layer 13 (medium understanding)

-24: Use only layer 1 (basic understanding)

## Inputs

| Parameter | Data Type | Default | Range | Description |

| -------------------- | --------- | ------- | --------- | ----------------------------------------------------------------------------------- |

| `clip` | CLIP | - | - | The CLIP model to be modified |

| `stop_at_clip_layer` | INT | -1 | -24 to -1 | Specifies which layer to stop at, -1 uses all layers, -24 uses only the first layer |

## Outputs

| Output Name | Data Type | Description |

| ----------- | --------- | -------------------------------------------------------------------- |

| clip | CLIP | The modified CLIP model with the specified layer set as the last one |

## Why Set the Last Layer

* **Performance Optimization**: Like not needing a PhD to understand simple sentences, sometimes shallow understanding is enough and faster

* **Style Control**: Different levels of understanding produce different artistic styles

* **Compatibility**: Some models might perform better at specific layers

---

# Source: https://docs.comfy.org/built-in-nodes/ClipTextEncode.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipTextEncode - ComfyUI Built-in Node Documentation

> The ClipTextEncode node is used to convert text prompts into AI-understandable 'language' for image generation.

`CLIP Text Encode (CLIPTextEncode)` acts like a translator, converting your creative text prompts into a special "language" that AI can understand, helping the AI accurately interpret what kind of image you want to create.

Imagine communicating with a foreign artist - you need a translator to help accurately convey the artwork you want. This node acts as that translator, using the CLIP model (an AI model trained on vast amounts of image-text pairs) to understand your text descriptions and convert them into "instructions" that the AI art model can understand.

## Inputs

| Parameter | Data Type | Input Method | Default | Range | Description |

| --------- | --------- | --------------- | ------- | ------------------ | ---------------------------------------------------------------------------------------------------------------------------------------------------- |

| text | STRING | Text Input | Empty | Any text | Like detailed instructions to an artist, enter your image description here. Supports multi-line text for detailed descriptions. |

| clip | CLIP | Model Selection | None | Loaded CLIP models | Like choosing a specific translator, different CLIP models are like different translators with slightly different understandings of artistic styles. |

## Outputs

| Output Name | Data Type | Description |

| ------------ | ------------ | --------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| CONDITIONING | CONDITIONING | These are the translated "painting instructions" containing detailed creative guidance that the AI model can understand. These instructions tell the AI model how to create an image matching your description. |

## Usage Tips

1. **Basic Text Prompt Usage**

* Write detailed descriptions like you're writing a short essay

* More specific descriptions lead to more accurate results

* Use English commas to separate different descriptive elements

2. **Special Feature: Using Embedding Models**

* Embedding models are like preset art style packages that can quickly apply specific artistic effects

* Currently supports .safetensors, .pt, and .bin file formats, and you don't necessarily need to use the complete model name

* How to use:

1. Place the embedding model file (in .pt format) in the `ComfyUI/models/embeddings` folder

2. Use `embedding:model_name` in your text

Example: If you have a model called `EasyNegative.pt`, you can use it like this:

```

a beautiful landscape, embedding:EasyNegative, high quality

```

3. **Prompt Weight Adjustment**

* Use parentheses to adjust the importance of certain descriptions

* For example: `(beautiful:1.2)` will make the "beautiful" feature more prominent

* Regular parentheses `()` have a default weight of 1.1

* Use keyboard shortcuts `ctrl + up/down arrow` to quickly adjust weights

* The weight adjustment step size can be modified in settings

4. **Important Notes**

* Ensure the CLIP model is properly loaded

* Use positive and clear text descriptions

* When using embedding models, make sure the file name is correct and compatible with your current main model's architecture

---

# Source: https://docs.comfy.org/built-in-nodes/ClipTextEncodeFlux.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipTextEncodeFlux - ComfyUI Built-in Node Documentation

> The ClipTextEncodeFlux node is used to encode text prompts into Flux-compatible conditioning embeddings.

`CLIPTextEncodeFlux` is an advanced text encoding node in ComfyUI, specifically designed for the Flux architecture. It uses a dual-encoder mechanism (CLIP-L and T5XXL) to process both structured keywords and detailed natural language descriptions, providing the Flux model with more accurate and comprehensive text understanding for improved text-to-image generation quality.

This node is based on a dual-encoder collaboration mechanism:

1. The `clip_l` input is processed by the CLIP-L encoder, extracting style, theme, and other keyword features—ideal for concise descriptions.

2. The `t5xxl` input is processed by the T5XXL encoder, which excels at understanding complex and detailed natural language scene descriptions.

3. The outputs from both encoders are fused, and combined with the `guidance` parameter to generate unified conditioning embeddings (`CONDITIONING`) for downstream Flux sampler nodes, controlling how closely the generated content matches the text description.

## Inputs

| Parameter | Data Type | Input Method | Default | Range | Description |

| ---------- | --------- | ------------ | ------- | ---------------- | ---------------------------------------------------------------------------------------------------------------------- |

| `clip` | CLIP | Node input | None | - | Must be a CLIP model supporting the Flux architecture, including both CLIP-L and T5XXL encoders |

| `clip_l` | STRING | Text box | None | Up to 77 tokens | Suitable for concise keyword descriptions, such as style or theme |

| `t5xxl` | STRING | Text box | None | Nearly unlimited | Suitable for detailed natural language descriptions, expressing complex scenes and details |

| `guidance` | FLOAT | Slider | 3.5 | 0.0 - 100.0 | Controls the influence of text conditions on the generation process; higher values mean stricter adherence to the text |

## Outputs

| Output Name | Data Type | Description |

| -------------- | ------------ | ------------------------------------------------------------------------------------------------------------------ |

| `CONDITIONING` | CONDITIONING | Contains the fused embeddings from both encoders and the guidance parameter, used for conditional image generation |

## Usage Examples

### Prompt Examples

* **clip\_l input** (keyword style):

* Use structured, concise keyword combinations

* Example: `masterpiece, best quality, portrait, oil painting, dramatic lighting`

* Focus on style, quality, and main subject

* **t5xxl input** (natural language description):

* Use complete, fluent scene descriptions

* Example: `A highly detailed portrait in oil painting style, featuring dramatic chiaroscuro lighting that creates deep shadows and bright highlights, emphasizing the subject's features with renaissance-inspired composition.`

* Focus on scene details, spatial relationships, and lighting effects

### Notes

1. Make sure to use a CLIP model compatible with the Flux architecture

2. It is recommended to fill in both `clip_l` and `t5xxl` to leverage the dual-encoder advantage

3. Note the 77-token limit for `clip_l`

4. Adjust the `guidance` parameter based on the generated results

---

# Source: https://docs.comfy.org/built-in-nodes/ClipTextEncodeHunyuanDit.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipTextEncodeHunyuanDit - ComfyUI Built-in Node Documentation

> The ClipTextEncodeHunyuanDit node is used to encode text prompts into HunyuanDiT-compatible conditioning embeddings.

The `CLIPTextEncodeHunyuanDiT` node's main function is to convert input text into a form that the model can understand. It is an advanced conditioning node specifically designed for the dual text encoder architecture of the HunyuanDiT model.

Its primary role is like a translator, converting our text descriptions into "machine language" that the AI model can understand. The `bert` and `mt5xl` inputs prefer different types of prompt inputs.

## Inputs

| Parameter | Data Type | Description |

| --------- | --------- | -------------------------------------------------------------------------------------------------------------------------------------------- |

| `clip` | CLIP | A CLIP model instance used for text tokenization and encoding, which is core to generating conditions. |

| `bert` | STRING | Text input for encoding, prefers phrases and keywords, supports multiline and dynamic prompts. |

| `mt5xl` | STRING | Another text input for encoding, supports multiline and dynamic prompts (multilingual), can use complete sentences and complex descriptions. |

## Outputs

| Parameter | Data Type | Description |

| -------------- | ------------ | ------------------------------------------------------------------------------- |

| `CONDITIONING` | CONDITIONING | The encoded conditional output used for further processing in generation tasks. |

---

# Source: https://docs.comfy.org/built-in-nodes/ClipTextEncodeSdxl.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipTextEncodeSdxl - ComfyUI Built-in Node Documentation

> The ClipTextEncodeSdxl node is used to encode text prompts into SDXL-compatible conditioning embeddings.

This node is designed to encode text input using a CLIP model specifically customized for the SDXL architecture. It uses a dual encoder system (CLIP-L and CLIP-G) to process text descriptions, resulting in more accurate image generation.

## Inputs

| Parameter | Data Type | Description |

| --------------- | --------- | ------------------------------------------------------ |

| `clip` | CLIP | CLIP model instance used for text encoding. |

| `width` | INT | Specifies the image width in pixels, default 1024. |

| `height` | INT | Specifies the image height in pixels, default 1024. |

| `crop_w` | INT | Width of the crop area in pixels, default 0. |

| `crop_h` | INT | Height of the crop area in pixels, default 0. |

| `target_width` | INT | Target width for the output image, default 1024. |

| `target_height` | INT | Target height for the output image, default 1024. |

| `text_g` | STRING | Global text description for overall scene description. |

| `text_l` | STRING | Local text description for detail description. |

## Outputs

| Parameter | Data Type | Description |

| -------------- | ------------ | ------------------------------------------------------------------------------ |

| `CONDITIONING` | CONDITIONING | Contains encoded text and conditional information needed for image generation. |

---

# Source: https://docs.comfy.org/built-in-nodes/ClipTextEncodeSdxlRefiner.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipTextEncodeSdxlRefiner - ComfyUI Built-in Node Documentation

> The ClipTextEncodeSdxlRefiner node is used to encode text prompts into SDXL Refiner-compatible conditioning embeddings.

This node is specifically designed for the SDXL Refiner model to convert text prompts into conditioning information by incorporating aesthetic scores and dimensional information to enhance the conditions for generation tasks, thereby improving the final refinement effect. It acts like a professional art director, not only conveying your creative intent but also injecting precise aesthetic standards and specification requirements into the work.

## About SDXL Refiner

SDXL Refiner is a specialized refinement model that focuses on enhancing image details and quality based on the SDXL base model. This process is like having an art retoucher:

1. First, it receives preliminary images or text descriptions generated by the base model

2. Then, it guides the refinement process through precise aesthetic scoring and dimensional parameters

3. Finally, it focuses on processing high-frequency image details to improve overall quality

Refiner can be used in two ways:

* As a standalone refinement step for post-processing images generated by the base model

* As part of an expert integration system, taking over processing during the low-noise phase of generation

## Inputs

| Parameter Name | Data Type | Input Type | Default Value | Value Range | Description |

| -------------- | --------- | ---------- | ------------- | ----------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- |

| `clip` | CLIP | Required | - | - | CLIP model instance used for text tokenization and encoding, the core component for converting text into model-understandable format |

| `ascore` | FLOAT | Optional | 6.0 | 0.0-1000.0 | Controls the visual quality and aesthetics of generated images, similar to setting quality standards for artwork: - High scores(7.5-8.5): Pursues more refined, detail-rich effects - Medium scores(6.0-7.0): Balanced quality control - Low scores(2.0-3.0): Suitable for negative prompts |

| `width` | INT | Required | 1024 | 64-16384 | Specifies output image width (pixels), must be multiple of 8. SDXL performs best when total pixel count is close to 1024×1024 (about 1M pixels) |

| `height` | INT | Required | 1024 | 64-16384 | Specifies output image height (pixels), must be multiple of 8. SDXL performs best when total pixel count is close to 1024×1024 (about 1M pixels) |

| `text` | STRING | Required | - | - | Text prompt description, supports multi-line input and dynamic prompt syntax. In Refiner, text prompts should focus more on describing desired visual quality and detail characteristics |

## Outputs

| Output Name | Data Type | Description |

| -------------- | ------------ | ------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------ |

| `CONDITIONING` | CONDITIONING | Refined conditional output containing integrated encoding of text semantics, aesthetic standards, and dimensional information, specifically for guiding SDXL Refiner model in precise image refinement |

## Notes

1. This node is specifically optimized for the SDXL Refiner model and differs from regular CLIPTextEncode nodes

2. An aesthetic score of 7.5 is recommended as the baseline, which is the standard setting used in SDXL training

3. All dimensional parameters must be multiples of 8, and total pixel count close to 1024×1024 (about 1M pixels) is recommended

4. The Refiner model focuses on enhancing image details and quality, so text prompts should emphasize desired visual effects rather than scene content

5. In practical use, Refiner is typically used in the later stages of generation (approximately the last 20% of steps), focusing on detail optimization

---

# Source: https://docs.comfy.org/built-in-nodes/ClipVisionEncode.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ClipVisionEncode - ComfyUI Built-in Node Documentation

> The ClipVisionEncode node is used to encode input images into visual feature vectors through the CLIP Vision model.

The `CLIP Vision Encode` node is an image encoding node in ComfyUI, used to convert input images into visual feature vectors through the CLIP Vision model. This node is an important bridge connecting image and text understanding, and is widely used in various AI image generation and processing workflows.

**Node Functionality**

* **Image feature extraction**: Converts input images into high-dimensional feature vectors

* **Multimodal bridging**: Provides a foundation for joint processing of images and text

* **Conditional generation**: Provides visual conditions for image-based conditional generation

## Inputs

| Parameter Name | Data Type | Description |

| -------------- | ------------ | -------------------------------------------------------------------- |

| `clip_vision` | CLIP\_VISION | CLIP vision model, usually loaded via the CLIPVisionLoader node |

| `image` | IMAGE | The input image to be encoded |

| `crop` | Dropdown | Image cropping method, options: center (center crop), none (no crop) |

## Outputs

| Output Name | Data Type | Description |

| -------------------- | -------------------- | ----------------------- |

| CLIP\_VISION\_OUTPUT | CLIP\_VISION\_OUTPUT | Encoded visual features |

This output object contains:

* `last_hidden_state`: The last hidden state

* `image_embeds`: Image embedding vector

* `penultimate_hidden_states`: The penultimate hidden state

* `mm_projected`: Multimodal projection result (if available)

---

# Source: https://docs.comfy.org/built-in-nodes/ClipVisionLoader.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# Load CLIP Vision - ComfyUI Built-in Node Documentation

> The Load CLIP Vision node is used to load CLIP Vision models from the `ComfyUI/models/clip_vision` folder.

This node automatically detects models located in the `ComfyUI/models/clip_vision` folder, as well as any additional model paths configured in the `extra_model_paths.yaml` file. If you add models after starting ComfyUI, please **refresh the ComfyUI interface** to ensure the latest model files are listed.

## Inputs

| Field | Data Type | Description |

| ----------- | -------------- | --------------------------------------------------------------------------- |

| `clip_name` | COMBO\[STRING] | Lists all supported model files in the `ComfyUI/models/clip_vision` folder. |

## Outputs

| Field | Data Type | Description |

| ------------- | ------------ | ---------------------------------------------------------------------------------- |

| `clip_vision` | CLIP\_VISION | Loaded CLIP Vision model, ready for encoding images or other vision-related tasks. |

---

# Source: https://docs.comfy.org/built-in-nodes/Load3D.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# Load3D - ComfyUI Built-in Node Documentation

> The Load3D node is a core node in ComfyUI for loading and previewing various 3D model files, supporting multi-format import and rich three-dimensional view operations.

The Load3D node is a core node for loading and processing 3D model files. When loading the node, it automatically retrieves available 3D resources from `ComfyUI/input/3d/`. You can also upload supported 3D files for preview using the upload function.

**Supported Formats**

Currently, this node supports multiple 3D file formats, including `.gltf`, `.glb`, `.obj`, `.fbx`, and `.stl`.

**3D Node Preferences**

Some related preferences for 3D nodes can be configured in ComfyUI's settings menu. Please refer to the following documentation for corresponding settings:

[Settings Menu - 3D](/interface/settings/3d)

Besides regular node outputs, Load3D has lots of 3D view-related settings in the canvas menu.

## Inputs

| Parameter Name | Type | Description | Default | Range |

| -------------- | -------------- | --------------------------------------------------------------------------------------------- | ------- | ----------------- |

| model\_file | File Selection | 3D model file path, supports upload, defaults to reading model files from `ComfyUI/input/3d/` | - | Supported formats |

| width | INT | Canvas rendering width | 1024 | 1-4096 |

| height | INT | Canvas rendering height | 1024 | 1-4096 |

## Outputs

| Parameter Name | Data Type | Description |

| ---------------- | -------------- | ---------------------------------------------------------------------------------------------- |

| image | IMAGE | Canvas rendered image |

| mask | MASK | Mask containing current model position |

| mesh\_path | STRING | Model file path |

| normal | IMAGE | Normal map |

| lineart | IMAGE | Line art image output, corresponding `edge_threshold` can be adjusted in the canvas model menu |

| camera\_info | LOAD3D\_CAMERA | Camera information |

| recording\_video | VIDEO | Recorded video (only when recording exists) |

All corresponding outputs preview

## Canvas Area Description

The Load3D node's Canvas area contains numerous view operations, including:

* Preview view settings (grid, background color, preview view)

* Camera control: Control FOV, camera type

* Global illumination intensity: Adjust lighting intensity

* Video recording: Record and export videos

* Model export: Supports `GLB`, `OBJ`, `STL` formats

* And more

1. Contains multiple menus and hidden menus of the Load 3D node

2. Menu for `resizing preview window` and `canvas video recording`

3. 3D view operation axis

4. Preview thumbnail

5. Preview size settings, scale preview view display by setting dimensions and then resizing window

### 1. View Operations

View control operations:

* Left-click + drag: Rotate the view

* Right-click + drag: Pan the view

* Middle wheel scroll or middle-click + drag: Zoom in/out

* Coordinate axis: Switch views

### 2. Left Menu Functions

In the canvas, some settings are hidden in the menu. Click the menu button to expand different menus

* 1. Scene: Contains preview window grid, background color, preview settings

* 2. Model: Model rendering mode, texture materials, up direction settings

* 3. Camera: Switch between orthographic and perspective views, and set the perspective angle size

* 4. Light: Scene global illumination intensity

* 5. Export: Export model to other formats (GLB, OBJ, STL)

#### Scene

The Scene menu provides some basic scene setting functions

1. Show/Hide grid

2. Set background color

3. Click to upload a background image

4. Hide the preview

#### Model

The Model menu provides some model-related functions

1. **Up direction**: Determine which axis is the up direction for the model

2. **Material mode**: Switch model rendering modes - Original, Normal, Wireframe, Lineart

#### Camera

This menu provides switching between orthographic and perspective views, and perspective angle size settings

1. **Camera**: Quickly switch between orthographic and orthographic views

2. **FOV**: Adjust FOV angle

#### Light

Through this menu, you can quickly adjust the scene's global illumination intensity

#### Export

This menu provides the ability to quickly convert and export model formats

### 3. Right Menu Functions

The right menu has two main functions:

1. **Reset view ratio**: After clicking the button, the view will adjust the canvas rendering area ratio according to the set width and height

2. **Video recording**: Allows you to record current 3D view operations as video, allows import, and can be output as `recording_video` to subsequent nodes

---

# Source: https://docs.comfy.org/interface/settings/about.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# About Page

> Detailed description of ComfyUI About settings page

The About page is an information display panel in the ComfyUI settings system, used to show application version information, related links, and system statistics. These settings can provide us with critical information when you submit feedback or report issues.

### Version Information Badges

The About page displays the following core version information:

* **ComfyUI Version**: Shows the backend ComfyUI version number, linked to the official GitHub repository

* **ComfyUI\_frontend Version**: Shows the frontend interface version number, linked to the frontend GitHub repository

* **Discord Community**: Provides a link to the ComfyOrg Discord server

* **Official Website**: Links to the ComfyOrg official website

Since the version information here mainly corresponds to stable version information, if you are using the nightly version, the corresponding commit hash will not be displayed here. If you are using the nightly version, you can use the `git log` command in the corresponding ComfyUI main directory to view the corresponding commit hash and other information.

Another common issue is that different dependency packages may fail and rollback during updates.

### Custom Node Badges

If custom nodes are installed, the About page will also display additional badge information provided by custom nodes. These badges are registered by each custom node through the `aboutPageBadges` property.

### System Info

The bottom of the page displays detailed system statistics, including:

* Hardware configuration information

* Software environment information

* System performance data

## Extension Developer Guide

Extension developers can add custom badges to the About page by adding the `aboutPageBadges` property to their extension configuration:

```javascript theme={null}

app.registerExtension({

name: 'MyExtension',

aboutPageBadges: [

{

label: 'My Extension v1.0.0',

url: 'https://github.com/myuser/myextension',

icon: 'pi pi-github'

}

]

})

```

---

# Source: https://docs.comfy.org/support/payment/accepted-payment-methods.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# Accepted payment methods

> Learn about the payment methods accepted by Comfy Organization Inc

Comfy Organization Inc uses Stripe as our payment processor, which means you can only use payment methods that Stripe accepts. Generally, you can pay with major credit and debit cards like Visa, Mastercard, and American Express, as well as some digital wallets such as Google Pay and Link in some regions. The options you see during checkout are the ones available to you.

## Credit and debit cards

We accept the following major credit and debit cards:

* Visa

* Mastercard

* American Express

## Digital wallets

You can also pay using digital wallet services:

* Google Pay

* Link

## Alternative payment methods

We also support the following payment methods, but only in USD:

* Alipay

* WeChat Pay

WeChat Pay and Alipay are only available in specific regions. Check the `Business locations` section in the Stripe documentation to verify availability in your country:

* [Stripe WeChat Pay documentation](https://docs.stripe.com/payments/wechat-pay#get-started)

* [Stripe Alipay documentation](https://docs.stripe.com/payments/alipay)

*Select **USD** as your payment currency (1) to unlock the Alipay and WeChat Pay options (2) during checkout.*

Alipay and WeChat Pay are not yet supported for Comfy Cloud Subscription.

To enable these options, choose **USD** in the Stripe billing portal currency selector. Once USD is selected, Alipay and WeChat Pay appear in the payment method list.

## Requirements

To successfully process your payment:

* Your card must have sufficient funds or available credit

* Your billing address must match the address on file with your card issuer

* International cards are accepted, but may be subject to currency conversion fees from your bank

## Security

Stripe is a PCI Service Provider Level 1, the most stringent level of certification available in the payments industry. All payment information is processed securely using industry-standard encryption. Comfy Organization Inc does not store your complete card details on our servers.

## Payment processing

Payments are processed immediately upon subscription or renewal. You will receive a confirmation email once your payment has been successfully processed.

If you experience any issues with payment processing, please contact our [support team](https://support.comfy.org/) for assistance.

---

# Source: https://docs.comfy.org/tutorials/audio/ace-step/ace-step-v1.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# ComfyUI ACE-Step Native Example

> This guide will help you create dynamic music using the ACE-Step model in ComfyUI

ACE-Step is an open-source foundational music generation model jointly developed by Chinese team StepFun and ACE Studio, aimed at providing music creators with efficient, flexible and high-quality music generation and editing tools.

The model is released under the [Apache-2.0](https://github.com/ace-step/ACE-Step?tab=readme-ov-file#-license) license and is free for commercial use.

As a powerful music generation foundation, ACE-Step provides rich extensibility. Through fine-tuning techniques like LoRA and ControlNet, developers can customize the model according to their actual needs.

Whether it's audio editing, vocal synthesis, accompaniment production, voice cloning or style transfer applications, ACE-Step provides stable and reliable technical support.

This flexible architecture greatly simplifies the development process of music AI applications, allowing more creators to quickly apply AI technology to music creation.

Currently, ACE-Step has released related training code, including LoRA model training, and the corresponding ControlNet training code will be released in the future.

You can visit their [Github](https://github.com/ace-step/ACE-Step?tab=readme-ov-file#-roadmap) to learn more details.

Make sure your ComfyUI is updated.

* [Download ComfyUI](https://www.comfy.org/download)

* [Update Guide](/installation/update_comfyui)

Workflows in this guide can be found in the [Workflow Templates](/interface/features/template).

If you can't find them in the template, your ComfyUI may be outdated. (Desktop version's update will delay sometime)

If nodes are missing when loading a workflow, possible reasons:

1. You are not using the latest ComfyUI version (Nightly version)

2. Some nodes failed to import at startup

* The Desktop is base on ComfyUI stable release, it will auto-update when there is a new Desktop stable release available.

* [Cloud](https://cloud.comfy.org) will update after ComfyUI stable release.

So, if you find any core node missing in this document, it might be because the new core nodes have not yet been released in the latest stable version. Please wait for the next stable release.

## ACE-Step ComfyUI Text-to-Audio Generation Workflow Example

### 1. Download Workflow and Related Models

Click the button below to download the corresponding workflow file. Drag it into ComfyUI to load the workflow information. The workflow includes model download information.

You can also manually download [ace\_step\_v1\_3.5b.safetensors](https://huggingface.co/Comfy-Org/ACE-Step_ComfyUI_repackaged/blob/main/all_in_one/ace_step_v1_3.5b.safetensors) and save it to the `ComfyUI/models/checkpoints` folder

### 2. Complete the Workflow Step by Step

1. Ensure the `Load Checkpoints` node has loaded the `ace_step_v1_3.5b.safetensors` model

2. (Optional) In the `EmptyAceStepLatentAudio` node, you can set the duration of the music to be generated

3. (Optional) In the `LatentOperationTonemapReinhard` node, you can adjust the `multiplier` to control the volume of the vocals (higher numbers result in more prominent vocals)

4. (Optional) Input corresponding music styles etc. in the `tags` field of `TextEncodeAceStepAudio`

5. (Optional) Input corresponding lyrics in the `lyrics` field of `TextEncodeAceStepAudio`

6. Click the `Run` button, or use the shortcut `Ctrl(cmd) + Enter` to execute the audio generation

7. After the workflow completes,, you can preview the generated audio in the `Save Audio` node. You can click to play and listen to it, and the audio will also be saved to `ComfyUI/output/audio` (subdirectory determined by the `Save Audio` node).

## ACE-Step ComfyUI Audio-to-Audio Workflow

Similar to image-to-image workflows, you can input a piece of music and use the workflow below to resample and generate music. You can also adjust the difference from the original audio by controlling the `denoise` parameter in the `Ksampler`.

### 1. Download Workflow File

Click the button below to download the corresponding workflow file. Drag it into ComfyUI to load the workflow information.

### 2. Complete the Workflow Step by Step

1. Ensure the `Load Checkpoints` node has loaded the `ace_step_v1_3.5b.safetensors` model

2. Upload the provided audio file in the `LoadAudio` node

3. (Optional) Input corresponding music styles and lyrics in the `tags` and `lyrics` fields of `TextEncodeAceStepAudio`. Providing lyrics is very important for audio editing

4. (Optional) Modify the `denoise` parameter in the `Ksampler` node to adjust the noise added during sampling to control similarity with the original audio (smaller values result in more similarity to the original audio; setting it to `1.00` is approximately equivalent to having no audio input)

5. Click the `Run` button, or use the shortcut `Ctrl(cmd) + Enter` to execute the audio generation

6. After the workflow completes, you can preview the generated audio in the `Save Audio` node. You can click to play and listen to it, and the audio will also be saved to `ComfyUI/output/audio` (subdirectory determined by the `Save Audio` node).

You can also implement the lyrics modification and editing functionality from the ACE-Step project page, modifying the original lyrics to change the audio effect.

### 3. Additional Workflow Notes

1. In the example workflow, you can change the `tags` in `TextEncodeAceStepAudio` from `male voice` to `female voice` to generate female vocals.

2. You can also modify the `lyrics` in `TextEncodeAceStepAudio` to change the lyrics and thus the generated audio. Refer to the examples on the ACE-Step project page for more details.

## ACE-Step Prompt Guide

ACE currently uses two types of prompts: `tags` and `lyrics`.

* `tags`: Mainly used to describe music styles, scenes, etc. Similar to prompts we use for other generations, they primarily describe the overall style and requirements of the audio, separated by English commas

* `lyrics`: Mainly used to describe lyrics, supporting lyric structure tags such as \[verse], \[chorus], and \[bridge] to distinguish different parts of the lyrics. You can also input instrument names for purely instrumental music

You can find rich examples of `tags` and `lyrics` on the [ACE-Step model homepage](https://ace-step.github.io/). You can refer to these examples to try corresponding prompts. This document's prompt guide is organized based on the project to help you quickly try combinations to achieve your desired effect.

### Tags (prompt)

#### Mainstream Music Styles

Use short tag combinations to generate specific music styles

* electronic

* rock

* pop

* funk

* soul

* cyberpunk

* Acid jazz

* electro

* em (electronic music)

* soft electric drums

* melodic

#### Scene Types

Combine specific usage scenarios and atmospheres to generate music that matches the corresponding mood

* background music for parties

* radio broadcasts

* workout playlists

#### Instrumental Elements

* saxophone

* jazz

* piano, violin

#### Vocal Types

* female voice

* male voice

* clean vocals

#### Professional Terms

Use some professional terms commonly used in music to precisely control music effects

* 110 bpm (beats per minute is 110)

* fast tempo

* slow tempo

* loops

* fills

* acoustic guitar

* electric bass

{/* - Lyrics editing:

- edit lyrics: 'When I was young' -> 'When you were kid' (lyrics editing example) */}

### Lyrics

#### Lyric Structure Tags

* \[outro]

* \[verse]

* \[chorus]

* \[bridge]

#### Multilingual Support

* ACE-Step V1 supports multiple languages. When used, ACE-Step converts different languages into English letters and then generates music.

* In ComfyUI, we haven't fully implemented the conversion of all languages to English letters. Currently, only [Japanese hiragana and katakana characters](https://github.com/comfyanonymous/ComfyUI/commit/5d3cc85e13833aeb6ef9242cdae243083e30c6fc) are implemented.

So if you need to use multiple languages for music generation, you need to first convert the corresponding language to English letters, and then input the language code abbreviation at the beginning of the `lyrics`, such as Chinese `[zh]`, Korean `[ko]`, etc.

For example:

```

[verse]

[zh]wo3zou3guo4shen1ye4de5jie1dao4

[zh]leng3feng1chui1luan4si1nian4de5piao4liang4wai4tao4

[zh]ni3de5wei1xiao4xiang4xing1guang1hen3xuan4yao4

[zh]zhao4liang4le5wo3gu1du2de5mei3fen1mei3miao3

[chorus]

[verse]

[ko]hamkke si-kkeuleo-un sesang-ui sodong-eul pihae

[ko]honja ogsang-eseo dalbich-ui eolyeompus-ileul balaboda

[ko]niga salang-eun lideum-i ganghan eum-ag gatdago malhaess-eo

[ko]han ta han tamada ma-eum-ui ondoga eolmana heojeonhanji ijge hae

[bridge]

[es]cantar mi anhelo por ti sin ocultar

[es]como poesía y pintura, lleno de anhelo indescifrable

[es]tu sombra es tan terca como el viento, inborrable

[es]persiguiéndote en vuelo, brilla como cruzar una mar de nubes

[chorus]

[fr]que tu sois le vent qui souffle sur ma main

[fr]un contact chaud comme la douce pluie printanière

[fr]que tu sois le vent qui s'entoure de mon corps

[fr]un amour profond qui ne s'éloignera jamais

```

Currently, ACE-Step supports 19 languages, but the following ten languages have better support:

* English

* Chinese: \[zh]

* Russian: \[ru]

* Spanish: \[es]

* Japanese: \[ja]

* German: \[de]

* French: \[fr]

* Portuguese: \[pt]

* Italian: \[it]

* Korean: \[ko]

The language tags above have not been fully tested at the time of writing this documentation. If any language tag is incorrect, please [submit an issue to our documentation repository](https://github.com/Comfy-Org/docs/issues) and we will make timely corrections.

## ACE-Step Related Resources

* [Project Page](https://ace-step.github.io/)

* [Hugging Face](https://huggingface.co/ACE-Step/ACE-Step-v1-3.5B)

* [GitHub](https://github.com/ace-step/ACE-Step)

* [Training Scripts](https://github.com/ace-step/ACE-Step?tab=readme-ov-file#-train)

---

# Source: https://docs.comfy.org/api-reference/registry/add-review-to-a-specific-version-of-a-node.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# Add review to a specific version of a node

## OpenAPI

````yaml https://api.comfy.org/openapi post /nodes/{nodeId}/reviews

openapi: 3.0.2

info:

title: Comfy API

version: '1.0'

servers:

- url: https://api.comfy.org

security: []

paths:

/nodes/{nodeId}/reviews:

post:

tags:

- Registry

summary: Add review to a specific version of a node

operationId: PostNodeReview

parameters:

- in: path

name: nodeId

required: true

schema:

type: string

- description: number of star given to the node version

in: query

name: star

required: true

schema:

type: integer

responses:

'200':

content:

application/json:

schema:

$ref: '#/components/schemas/Node'

description: Detailed information about a specific node

'400':

description: Bad Request

'404':

content:

application/json:

schema:

$ref: '#/components/schemas/Error'

description: Node version not found

'500':

content:

application/json:

schema:

$ref: '#/components/schemas/ErrorResponse'

description: Internal server error

components:

schemas:

Node:

properties:

author:

type: string

banner_url:

description: URL to the node's banner.

type: string

category:

deprecated: true

description: >-

DEPRECATED: The category of the node. Use 'tags' field instead. This

field will be removed in a future version.

type: string

created_at:

description: The date and time when the node was created

format: date-time

type: string

description:

type: string

downloads:

description: The number of downloads of the node.

type: integer

github_stars:

description: Number of stars on the GitHub repository.

type: integer

icon:

description: URL to the node's icon.

type: string

id:

description: The unique identifier of the node.

type: string

latest_version:

$ref: '#/components/schemas/NodeVersion'

license:

description: The path to the LICENSE file in the node's repository.

type: string

name:

description: The display name of the node.

type: string

preempted_comfy_node_names:

description: A list of Comfy node names that are preempted by this node.

items:

type: string

type: array

publisher:

$ref: '#/components/schemas/Publisher'

rating:

description: The average rating of the node.

type: number

repository:

description: URL to the node's repository.

type: string

search_ranking:

description: >-

A numerical value representing the node's search ranking, used for

sorting search results.

type: integer

status:

$ref: '#/components/schemas/NodeStatus'

status_detail:

description: The status detail of the node.

type: string

supported_accelerators:

description: >-

List of accelerators (e.g. CUDA, DirectML, ROCm) that this node

supports

items:

type: string

type: array

supported_comfyui_frontend_version:

description: Supported versions of ComfyUI frontend

type: string

supported_comfyui_version:

description: Supported versions of ComfyUI

type: string

supported_os:

description: List of operating systems that this node supports

items:

type: string

type: array

tags:

items:

type: string

type: array

tags_admin:

description: Admin-only tags for security warnings and admin metadata

items:

type: string

type: array

translations:

additionalProperties:

additionalProperties: true

type: object

description: Translations of node metadata in different languages.

type: object

type: object

Error:

properties:

details:

description: >-

Optional detailed information about the error or hints for resolving

it.

items:

type: string

type: array

message:

description: A clear and concise description of the error.

type: string

type: object

ErrorResponse:

properties:

error:

type: string

message:

type: string

required:

- error

- message

type: object

NodeVersion:

properties:

changelog:

description: Summary of changes made in this version

type: string

comfy_node_extract_status:

description: The status of comfy node extraction process.

type: string

createdAt:

description: The date and time the version was created.

format: date-time

type: string

dependencies:

description: A list of pip dependencies required by the node.

items:

type: string

type: array

deprecated:

description: Indicates if this version is deprecated.

type: boolean

downloadUrl:

description: '[Output Only] URL to download this version of the node'

type: string

id:

type: string

node_id:

description: The unique identifier of the node.

type: string

status:

$ref: '#/components/schemas/NodeVersionStatus'

status_reason:

type: string

supported_accelerators:

description: >-

List of accelerators (e.g. CUDA, DirectML, ROCm) that this node

supports

items:

type: string

type: array

supported_comfyui_frontend_version:

description: Supported versions of ComfyUI frontend

type: string

supported_comfyui_version:

description: Supported versions of ComfyUI

type: string

supported_os:

description: List of operating systems that this node supports

items:

type: string

type: array

tags:

items:

type: string

type: array

tags_admin:

description: Admin-only tags for security warnings and admin metadata

items:

type: string

type: array

version:

description: >-

The version identifier, following semantic versioning. Must be

unique for the node.

type: string

type: object

Publisher:

properties:

createdAt:

description: The date and time the publisher was created.

format: date-time

type: string

description:

type: string

id:

description: >-

The unique identifier for the publisher. It's akin to a username.

Should be lowercase.

type: string

logo:

description: URL to the publisher's logo.

type: string

members:

description: A list of members in the publisher.

items:

$ref: '#/components/schemas/PublisherMember'

type: array

name:

type: string

source_code_repo:

type: string

status:

$ref: '#/components/schemas/PublisherStatus'

support:

type: string

website:

type: string

type: object

NodeStatus:

enum:

- NodeStatusActive

- NodeStatusDeleted

- NodeStatusBanned

type: string

NodeVersionStatus:

enum:

- NodeVersionStatusActive

- NodeVersionStatusDeleted

- NodeVersionStatusBanned

- NodeVersionStatusPending

- NodeVersionStatusFlagged

type: string

PublisherMember:

properties:

id:

description: The unique identifier for the publisher member.

type: string

role:

description: The role of the user in the publisher.

type: string

user:

$ref: '#/components/schemas/PublisherUser'

type: object

PublisherStatus:

enum:

- PublisherStatusActive

- PublisherStatusBanned

type: string

PublisherUser:

properties:

email:

description: The email address for this user.

type: string

id:

description: The unique id for this user.

type: string

name:

description: The name for this user.

type: string

type: object

````

---

# Source: https://docs.comfy.org/api-reference/cloud/asset/add-tags-to-asset.md

> ## Documentation Index

> Fetch the complete documentation index at: https://docs.comfy.org/llms.txt

> Use this file to discover all available pages before exploring further.

# Add tags to asset

> Adds one or more tags to an existing asset

## OpenAPI

````yaml openapi-cloud.yaml post /api/assets/{id}/tags

openapi: 3.0.3

info:

title: Comfy Cloud API

description: >

**Experimental API:** This API is experimental and subject to change.

Endpoints, request/response formats, and behavior may be modified without

notice.

API for Comfy Cloud - Run ComfyUI workflows on cloud infrastructure.

This API allows you to interact with Comfy Cloud programmatically,

including:

- Submitting and managing workflows

- Uploading and downloading files

- Monitoring job status and progress

## Cloud vs OSS ComfyUI Compatibility

Comfy Cloud implements the same API interfaces as OSS ComfyUI for maximum

compatibility,

but some fields are accepted for compatibility while being handled

differently or ignored:

| Field | Endpoints | Cloud Behavior |

|-------|-----------|----------------|

| `subfolder` | `/api/view`, `/api/upload/*` | **Ignored** - Cloud uses

content-addressed storage (hash-based). Returned in responses for

client-side organization. |

| `type` (input/output/temp) | `/api/view`, `/api/upload/*` | Partially used

- All files stored with tag-based organization rather than directory

structure. |

| `overwrite` | `/api/upload/*` | **Ignored** - Content-addressed storage

means identical content always has the same hash. |

| `number`, `front` | `/api/prompt` | **Ignored** - Cloud uses its own fair

queue scheduling per user. |

| `split`, `full_info` | `/api/userdata` | **Ignored** - Cloud always

returns full file metadata. |

These fields are retained in the API schema for drop-in compatibility with

existing ComfyUI clients and workflows.

version: 1.0.0

license:

name: GNU General Public License v3.0

url: https://github.com/comfyanonymous/ComfyUI/blob/master/LICENSE

servers:

- url: https://cloud.comfy.org

description: Comfy Cloud API

security:

- ApiKeyAuth: []

tags:

- name: workflow

description: |

Submit workflows for execution and manage the execution queue.

This is the primary way to run ComfyUI workflows on the cloud.

- name: job

description: |

Monitor job status, view execution history, and manage running jobs.

Jobs are created when you submit a workflow via POST /api/prompt.

- name: asset

description: |

Upload, download, and manage persistent assets (images, models, outputs).

Assets provide durable storage with tagging and metadata support.

- name: file

description: |

Legacy file upload and download endpoints compatible with local ComfyUI.

For new integrations, consider using the Assets API instead.

- name: model

description: |

Browse available AI models. Models are pre-loaded on cloud infrastructure.

- name: node

description: |

Get information about available ComfyUI nodes and their inputs/outputs.

Useful for building dynamic workflow interfaces.

- name: user

description: |

User account information and personal data storage.

- name: system

description: |

Server status, health checks, and system information.

paths:

/api/assets/{id}/tags:

post:

tags:

- asset

summary: Add tags to asset

description: Adds one or more tags to an existing asset

operationId: addAssetTags

parameters:

- name: id

in: path

required: true

description: Asset ID

schema:

type: string

format: uuid

requestBody:

required: true

content:

application/json:

schema:

type: object

required:

- tags

properties:

tags:

type: array

items:

type: string

minItems: 1

description: Tags to add to the asset

responses:

'200':

description: Tags added successfully

content:

application/json:

schema:

$ref: '#/components/schemas/TagsModificationResponse'

'400':

description: Invalid request

content:

application/json:

schema:

$ref: '#/components/schemas/ErrorResponse'

'401':

description: Unauthorized

content: