`. Example: `doc:0`.

Here is an example of using custom IDs. Here, we are adding custom IDs `100` and `101` to each of the two documents we are passing as context.

```python PYTHON

---

# ! pip install -U cohere

import cohere

import json

co = cohere.ClientV2(

"COHERE_API_KEY"

) # Get your free API key here: https://dashboard.cohere.com/api-keys

documents = [

{

"data": {

"title": "Tall penguins",

"snippet": "Emperor penguins are the tallest.",

},

"id": "100",

},

{

"data": {

"title": "Penguin habitats",

"snippet": "Emperor penguins only live in Antarctica.",

},

"id": "101",

},

]

```

When document IDs are provided, the citation will refer to the documents using these IDs.

```python PYTHON

messages = [

{"role": "user", "content": "Where do the tallest penguins live?"}

]

response = co.chat(

model="command-a-03-2025",

messages=messages,

documents=documents,

)

print(response.message.content[0].text)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "Where do the tallest penguins live?"

}

],

"documents": [

{

"data": {

"title": "Tall penguins",

"snippet": "Emperor penguins are the tallest."

},

"id": "100"

},

{

"data": {

"title": "Penguin habitats",

"snippet": "Emperor penguins only live in Antarctica."

},

"id": "101"

}

]

}'

```

Note the `id` fields in the citations, which refer to the IDs in the `document` object.

Example response:

```mdx wordWrap

The tallest penguins are the Emperor penguins, which only live in Antarctica.

start=29 end=45 text='Emperor penguins' sources=[DocumentSource(type='document', id='100', document={'id': '100', 'snippet': 'Emperor penguins are the tallest.', 'title': 'Tall penguins'})] type='TEXT_CONTENT'

start=66 end=77 text='Antarctica.' sources=[DocumentSource(type='document', id='101', document={'id': '101', 'snippet': 'Emperor penguins only live in Antarctica.', 'title': 'Penguin habitats'})] type='TEXT_CONTENT'

```

In contrast, here's an example citation when the IDs are not provided.

Example response:

```mdx wordWrap

The tallest penguins are the Emperor penguins, which only live in Antarctica.

start=29 end=45 text='Emperor penguins' sources=[DocumentSource(type='document', id='doc:0', document={'id': 'doc:0', 'snippet': 'Emperor penguins are the tallest.', 'title': 'Tall penguins'})] type='TEXT_CONTENT'

start=66 end=77 text='Antarctica.' sources=[DocumentSource(type='document', id='doc:1', document={'id': 'doc:1', 'snippet': 'Emperor penguins only live in Antarctica.', 'title': 'Penguin habitats'})] type='TEXT_CONTENT'

```

## Citation modes

When running RAG in streaming mode, it’s possible to configure how citations are generated and presented. You can choose between fast citations or accurate citations, depending on your latency and precision needs.

### Accurate citations

The model produces its answer first, and then, after the entire response is generated, it provides citations that map to specific segments of the response text. This approach may incur slightly higher latency, but it ensures the citation indices are more precisely aligned with the final text segments of the model’s answer.

This is the default option, or you can explicitly specify it by adding the `citation_options={"mode": "accurate"}` argument in the API call.

Here is an example using the same list of pre-defined `messages` as the above.

With the `citation_options` mode set to `accurate`, we get the citations after the entire response is generated.

```python PYTHON

documents = [

{

"data": {

"title": "Tall penguins",

"snippet": "Emperor penguins are the tallest.",

},

"id": "100",

},

{

"data": {

"title": "Penguin habitats",

"snippet": "Emperor penguins only live in Antarctica.",

},

"id": "101",

},

]

messages = [

{"role": "user", "content": "Where do the tallest penguins live?"}

]

response = co.chat_stream(

model="command-a-03-2025",

messages=messages,

documents=documents,

citation_options={"mode": "accurate"},

)

response_text = ""

citations = []

for chunk in response:

if chunk:

if chunk.type == "content-delta":

response_text += chunk.delta.message.content.text

print(chunk.delta.message.content.text, end="")

if chunk.type == "citation-start":

citations.append(chunk.delta.message.citations)

print("\n")

for citation in citations:

print(citation, "\n")

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: text/event-stream' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "Where do the tallest penguins live?"

}

],

"documents": [

{

"data": {

"title": "Tall penguins",

"snippet": "Emperor penguins are the tallest."

},

"id": "100"

},

{

"data": {

"title": "Penguin habitats",

"snippet": "Emperor penguins only live in Antarctica."

},

"id": "101"

}

],

"citation_options": {

"mode": "accurate"

},

"stream": true

}'

```

Example response:

```mdx wordWrap

The tallest penguins are the Emperor penguins. They live in Antarctica.

start=29 end=46 text='Emperor penguins.' sources=[DocumentSource(type='document', id='100', document={'id': '100', 'snippet': 'Emperor penguins are the tallest.', 'title': 'Tall penguins'})] type='TEXT_CONTENT'

start=60 end=71 text='Antarctica.' sources=[DocumentSource(type='document', id='101', document={'id': '101', 'snippet': 'Emperor penguins only live in Antarctica.', 'title': 'Penguin habitats'})] type='TEXT_CONTENT'

```

### Fast citations

The model generates citations inline, as the response is being produced. In streaming mode, you will see citations injected at the exact moment the model uses a particular piece of external context. This approach provides immediate traceability at the expense of slightly less precision in citation relevance.

You can specify it by adding the `citation_options={"mode": "fast"}` argument in the API call.

With the `citation_options` mode set to `fast`, we get the citations inline as the model generates the response.

```python PYTHON

documents = [

{

"data": {

"title": "Tall penguins",

"snippet": "Emperor penguins are the tallest.",

},

"id": "100",

},

{

"data": {

"title": "Penguin habitats",

"snippet": "Emperor penguins only live in Antarctica.",

},

"id": "101",

},

]

messages = [

{"role": "user", "content": "Where do the tallest penguins live?"}

]

response = co.chat_stream(

model="command-a-03-2025",

messages=messages,

documents=documents,

citation_options={"mode": "fast"},

)

response_text = ""

for chunk in response:

if chunk:

if chunk.type == "content-delta":

response_text += chunk.delta.message.content.text

print(chunk.delta.message.content.text, end="")

if chunk.type == "citation-start":

print(

f" [{chunk.delta.message.citations.sources[0].id}]",

end="",

)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: text/event-stream' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "Where do the tallest penguins live?"

}

],

"documents": [

{

"data": {

"title": "Tall penguins",

"snippet": "Emperor penguins are the tallest."

},

"id": "100"

},

{

"data": {

"title": "Penguin habitats",

"snippet": "Emperor penguins only live in Antarctica."

},

"id": "101"

}

],

"citation_options": {

"mode": "fast"

},

"stream": true

}'

```

Example response:

```mdx wordWrap

The tallest penguins [100] are the Emperor penguins [100] which only live in Antarctica. [101]

```

---

# An Overview of Tool Use with Cohere

> Learn when to use leverage multi-step tool use in your workflows.

Here, you'll find context on using tools with Cohere models:

* The [basic usage](https://docs.cohere.com/docs/tool-use-overview) discusses 'function calling,' including how to define and create the tool, how to give it a schema, and how to incorporate it into common workflows.

* [Usage patterns](https://docs.cohere.com/docs/tool-use-usage-patterns) builds on this, covering parallel execution, state management, and more.

* [Parameter types](https://docs.cohere.com/docs/tool-use-parameter-types) talks about structured output in the context of tool use.

* As its name implies, [Streaming](https://docs.cohere.com/docs/tool-use-streaming) explains how to deal with tools when output must be streamed.

* It's often important to double-check model output, which is made much easier with [citations](https://docs.cohere.com/docs/tool-use-citations).

These should help you leverage Cohere's tool use functionality to get the most out of our models.

---

# Basic usage of tool use (function calling)

> An overview of using Cohere's tool use capabilities, enabling developers to build agentic workflows (API v2).

## Overview

Tool use is a technique which allows developers to connect Cohere’s Command family models to external tools like search engines, APIs, functions, databases, etc.

This opens up a richer set of behaviors by leveraging tools to access external data sources, taking actions through APIs, interacting with a vector database, querying a search engine, etc., and is particularly valuable for enterprise developers, since a lot of enterprise data lives in external sources.

The Chat endpoint comes with built-in tool use capabilities such as function calling, multi-step reasoning, and citation generation.

## Setup

First, import the Cohere library and create a client.

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

"COHERE_API_KEY"

) # Get your free API key here: https://dashboard.cohere.com/api-keys

```

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

api_key="", # Leave this blank

base_url="",

)

```

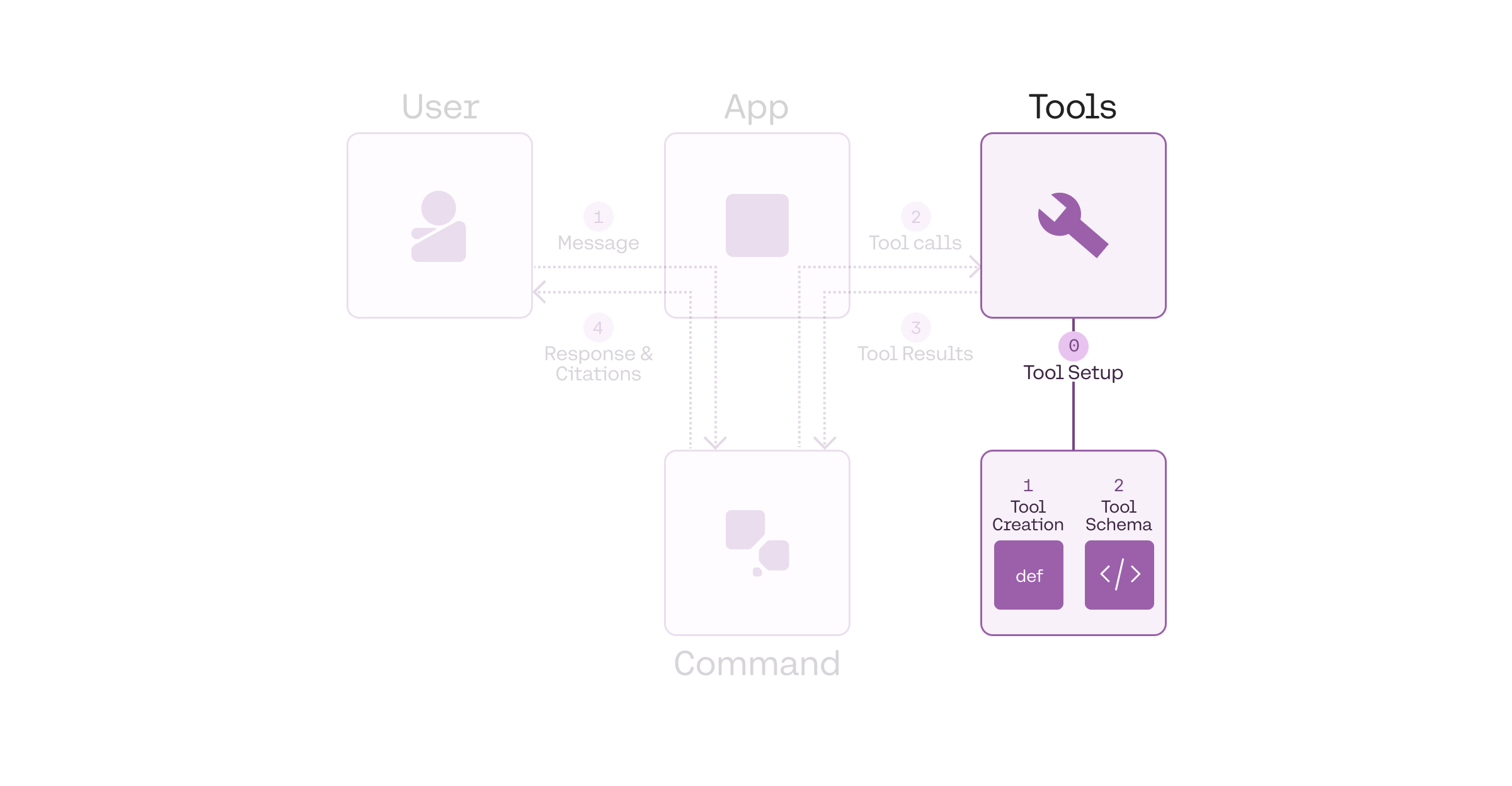

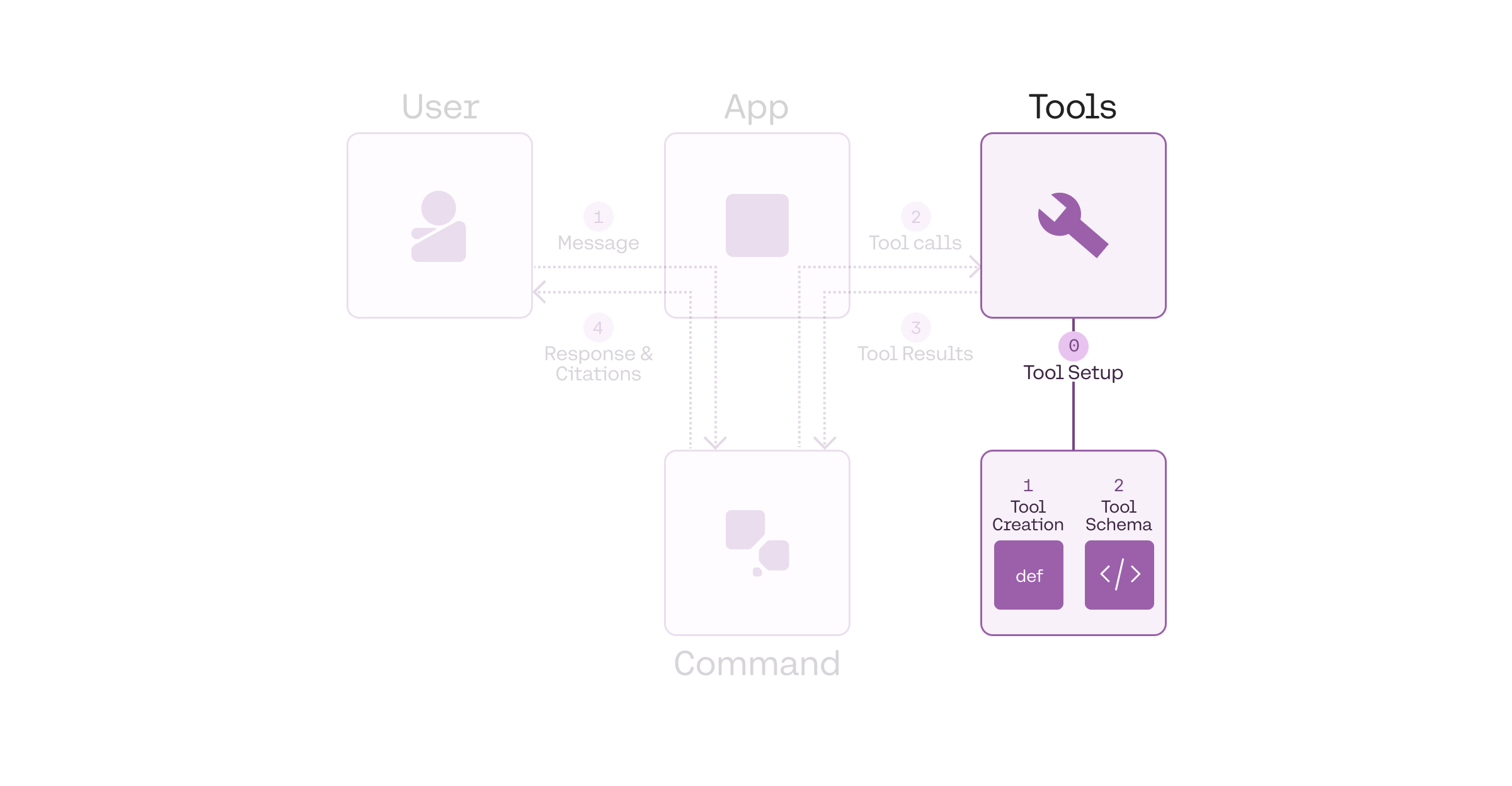

## Tool definition

The pre-requisite, or Step 0, before we can run a tool use workflow, is to define the tools. We can break this further into two steps:

* Creating the tool

* Defining the tool schema

## Setup

First, import the Cohere library and create a client.

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

"COHERE_API_KEY"

) # Get your free API key here: https://dashboard.cohere.com/api-keys

```

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

api_key="", # Leave this blank

base_url="",

)

```

## Tool definition

The pre-requisite, or Step 0, before we can run a tool use workflow, is to define the tools. We can break this further into two steps:

* Creating the tool

* Defining the tool schema

### Creating the tool

A tool can be any function that you create or external services that return an object for a given input. Some examples: a web search engine, an email service, an SQL database, a vector database, a weather data service, a sports data service, or even another LLM.

In this example, we define a `get_weather` function that returns the temperature for a given query, which is the location. You can implement any logic here, but to simplify the example, here we are hardcoding the return value to be the same for all queries.

```python PYTHON

def get_weather(location):

# Implement any logic here

return [{"temperature": "20°C"}]

# Return a JSON object string, or a list of tool content blocks e.g. [{"url": "abc.com", "text": "..."}, {"url": "xyz.com", "text": "..."}]

functions_map = {"get_weather": get_weather}

```

The Chat endpoint accepts [a string or a list of objects](https://docs.cohere.com/reference/chat#request.body.messages.tool.content) as the tool results. Thus, you should format the return value in this way. The following are some examples.

```python PYTHON

---

# Example: List of objects

weather_search_results = [

{"city": "Toronto", "date": "250207", "temperature": "20°C"},

{"city": "Toronto", "date": "250208", "temperature": "21°C"},

]

```

### Defining the tool schema

We also need to define the tool schemas in a format that can be passed to the Chat endpoint. The schema follows the [JSON Schema specification](https://json-schema.org/understanding-json-schema) and must contain the following fields:

* `name`: the name of the tool.

* `description`: a description of what the tool is and what it is used for.

* `parameters`: a list of parameters that the tool accepts. For each parameter, we need to define the following fields:

* `type`: the type of the parameter.

* `properties`: the name of the parameter and the following fields:

* `type`: the type of the parameter.

* `description`: a description of what the parameter is and what it is used for.

* `required`: a list of required properties by name, which appear as keys in the `properties` object

This schema informs the LLM about what the tool does, and the LLM decides whether to use a particular tool based on the information that it contains.

Therefore, the more descriptive and clear the schema, the more likely the LLM will make the right tool call decisions.

In a typical development cycle, some fields such as `name`, `description`, and `properties` will likely require a few rounds of iterations in order to get the best results (a similar approach to prompt engineering).

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco.",

}

},

"required": ["location"],

},

},

},

]

```

The endpoint supports a subset of the JSON Schema specification. Refer to the

[Structured Outputs documentation](https://docs.cohere.com/docs/structured-outputs#parameter-types-support)

for the list of supported and unsupported parameters.

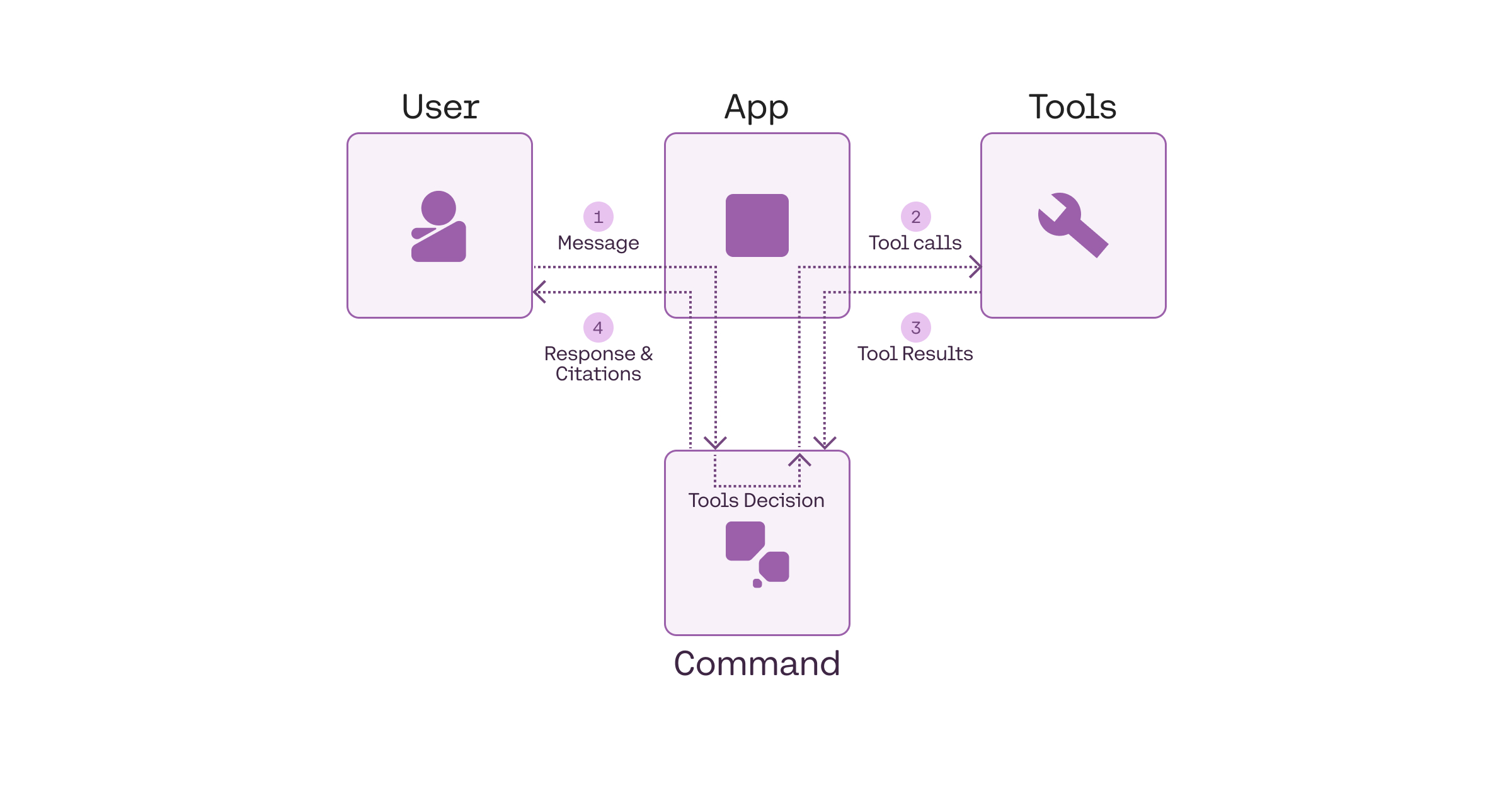

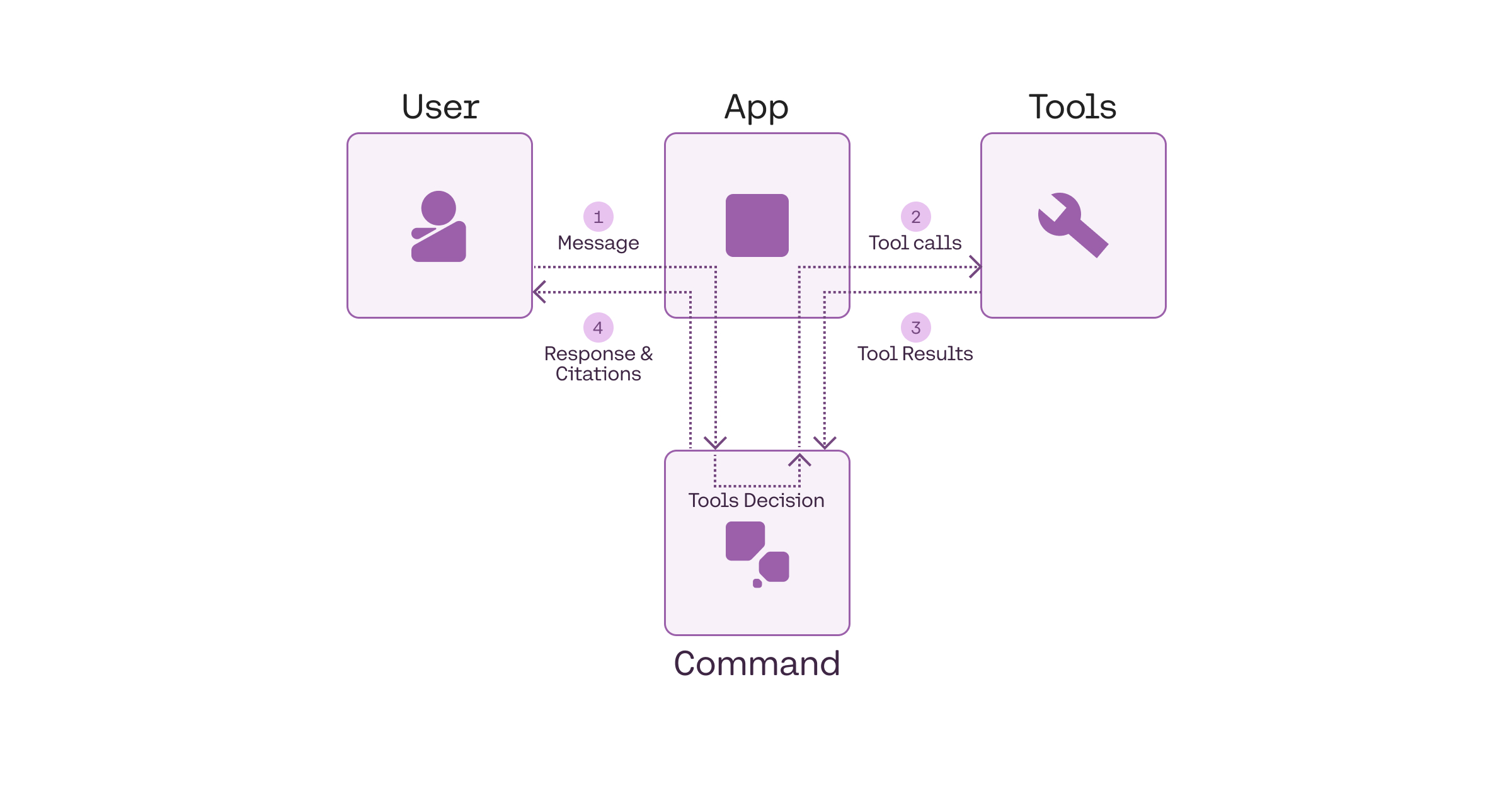

## Tool use workflow

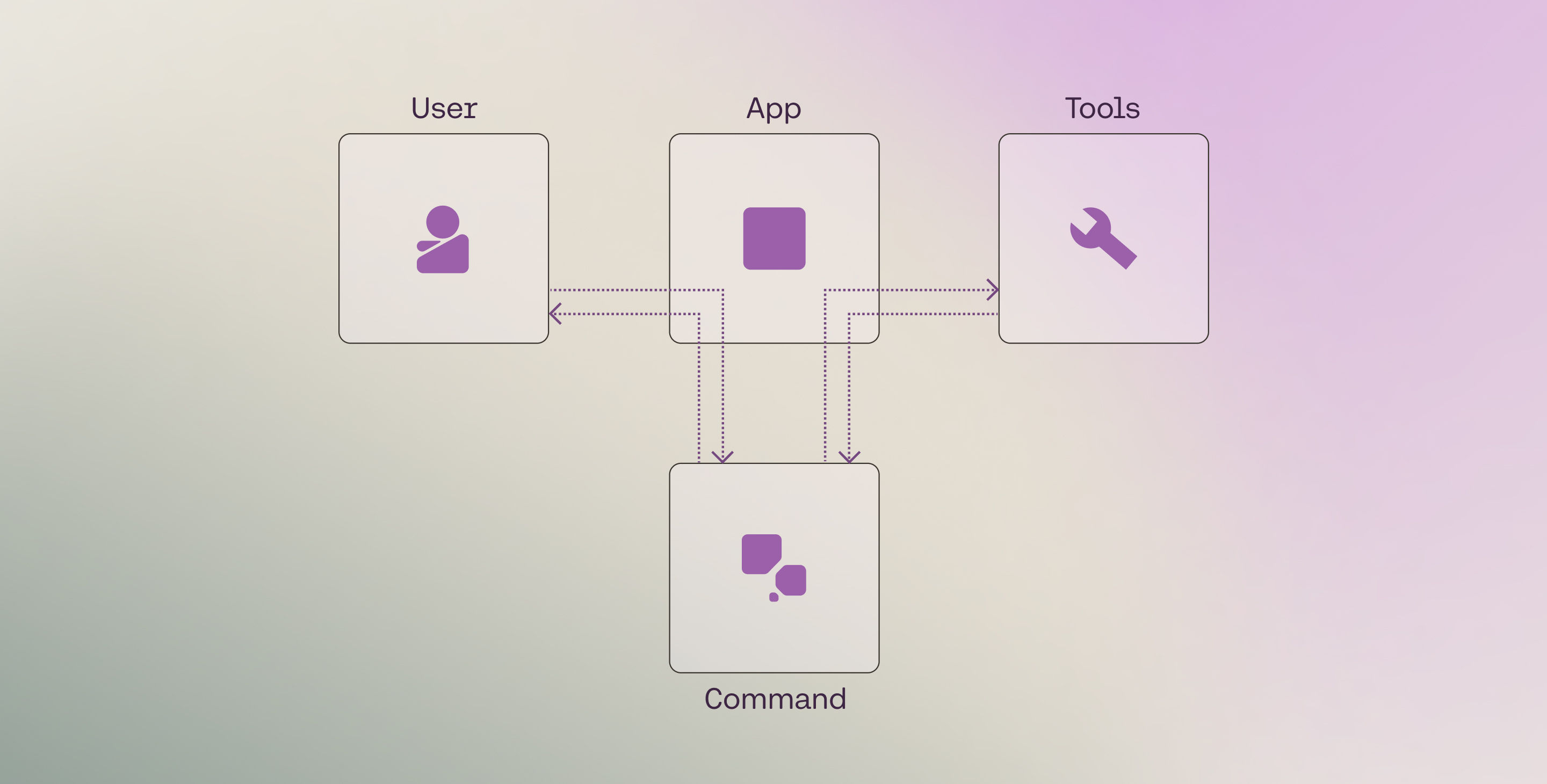

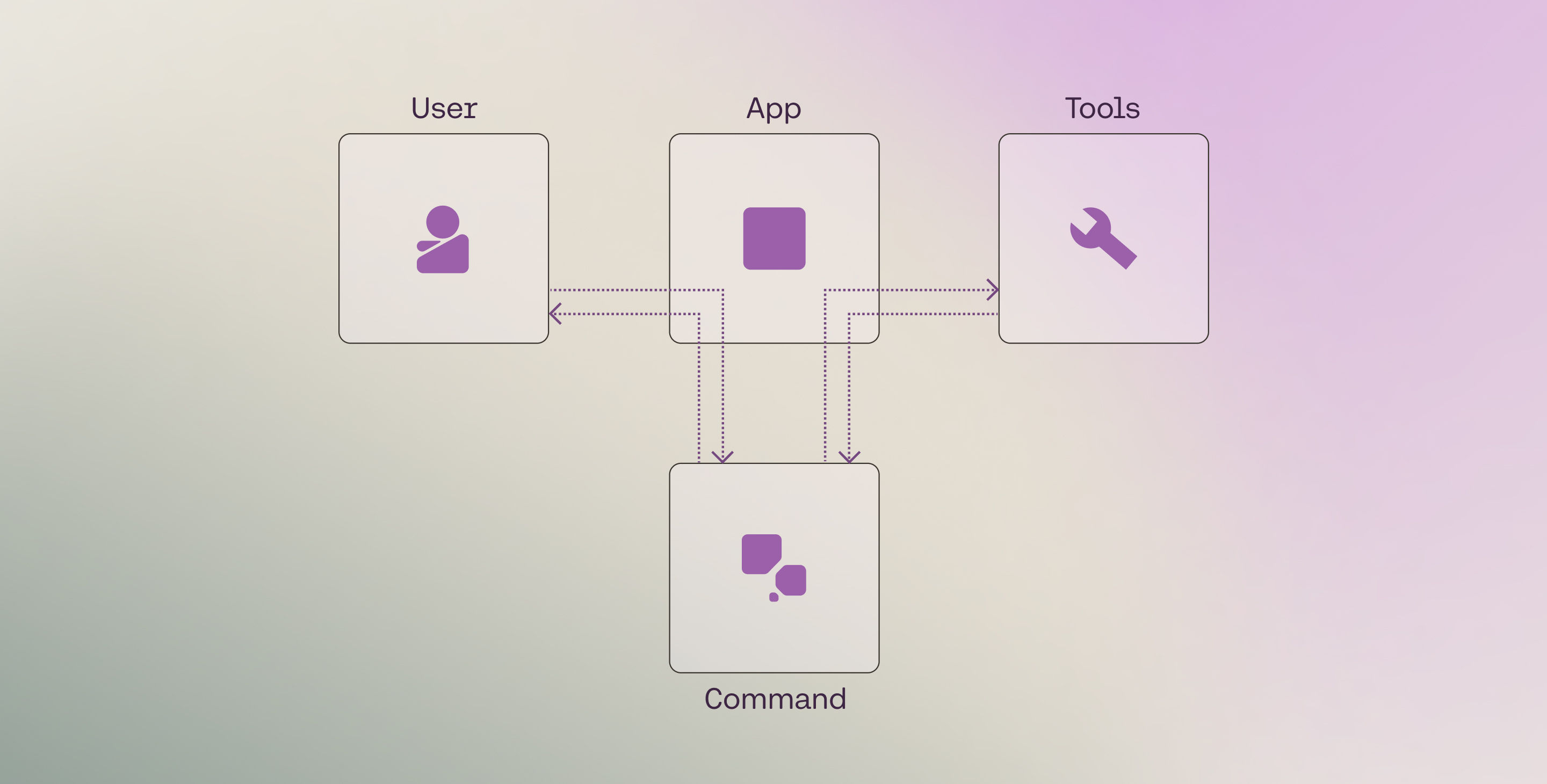

We can think of a tool use system as consisting of four components:

* The user

* The application

* The LLM

* The tools

At its most basic, these four components interact in a workflow through four steps:

* Step 1: **Get user message**: The LLM gets the user message (via the application).

* Step 2: **Generate tool calls**: The LLM decides which tools to call (if any) and generates the tool calls.

* Step 3: **Get tool results**: The application executes the tools, and the results are sent to the LLM.

* Step 4: **Generate response and citations**: The LLM generates the response and citations back to the user.

### Creating the tool

A tool can be any function that you create or external services that return an object for a given input. Some examples: a web search engine, an email service, an SQL database, a vector database, a weather data service, a sports data service, or even another LLM.

In this example, we define a `get_weather` function that returns the temperature for a given query, which is the location. You can implement any logic here, but to simplify the example, here we are hardcoding the return value to be the same for all queries.

```python PYTHON

def get_weather(location):

# Implement any logic here

return [{"temperature": "20°C"}]

# Return a JSON object string, or a list of tool content blocks e.g. [{"url": "abc.com", "text": "..."}, {"url": "xyz.com", "text": "..."}]

functions_map = {"get_weather": get_weather}

```

The Chat endpoint accepts [a string or a list of objects](https://docs.cohere.com/reference/chat#request.body.messages.tool.content) as the tool results. Thus, you should format the return value in this way. The following are some examples.

```python PYTHON

---

# Example: List of objects

weather_search_results = [

{"city": "Toronto", "date": "250207", "temperature": "20°C"},

{"city": "Toronto", "date": "250208", "temperature": "21°C"},

]

```

### Defining the tool schema

We also need to define the tool schemas in a format that can be passed to the Chat endpoint. The schema follows the [JSON Schema specification](https://json-schema.org/understanding-json-schema) and must contain the following fields:

* `name`: the name of the tool.

* `description`: a description of what the tool is and what it is used for.

* `parameters`: a list of parameters that the tool accepts. For each parameter, we need to define the following fields:

* `type`: the type of the parameter.

* `properties`: the name of the parameter and the following fields:

* `type`: the type of the parameter.

* `description`: a description of what the parameter is and what it is used for.

* `required`: a list of required properties by name, which appear as keys in the `properties` object

This schema informs the LLM about what the tool does, and the LLM decides whether to use a particular tool based on the information that it contains.

Therefore, the more descriptive and clear the schema, the more likely the LLM will make the right tool call decisions.

In a typical development cycle, some fields such as `name`, `description`, and `properties` will likely require a few rounds of iterations in order to get the best results (a similar approach to prompt engineering).

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco.",

}

},

"required": ["location"],

},

},

},

]

```

The endpoint supports a subset of the JSON Schema specification. Refer to the

[Structured Outputs documentation](https://docs.cohere.com/docs/structured-outputs#parameter-types-support)

for the list of supported and unsupported parameters.

## Tool use workflow

We can think of a tool use system as consisting of four components:

* The user

* The application

* The LLM

* The tools

At its most basic, these four components interact in a workflow through four steps:

* Step 1: **Get user message**: The LLM gets the user message (via the application).

* Step 2: **Generate tool calls**: The LLM decides which tools to call (if any) and generates the tool calls.

* Step 3: **Get tool results**: The application executes the tools, and the results are sent to the LLM.

* Step 4: **Generate response and citations**: The LLM generates the response and citations back to the user.

As an example, a weather search workflow might looks like the following:

* Step 1: **Get user message**: A user asks, "What's the weather in Toronto?"

* Step 2: **Generate tool calls**: A tool call is made to an external weather service with something like `get_weather(“toronto”)`.

* Step 3: **Get tool results**: The weather service returns the results, e.g. "20°C".

* Step 4: **Generate response and citations**: The model provides the answer, "The weather in Toronto is 20 degrees Celcius".

The following sections go through the implementation of these steps in detail.

### Step 1: Get user message

In the first step, we get the user's message and append it to the `messages` list with the `role` set to `user`.

```python PYTHON

messages = [

{"role": "user", "content": "What's the weather in Toronto?"}

]

```

Optional: If you want to define a system message, you can add it to the `messages` list with the `role` set to `system`.

```python PYTHON

system_message = """## Task & Context

You help people answer their questions and other requests interactively. You will be asked a very wide array of requests on all kinds of topics. You will be equipped with a wide range of search engines or similar tools to help you, which you use to research your answer. You should focus on serving the user's needs as best you can, which will be wide-ranging.

## Style Guide

Unless the user asks for a different style of answer, you should answer in full sentences, using proper grammar and spelling.

"""

messages = [

{"role": "system", "content": system_message},

{"role": "user", "content": "What's the weather in Toronto?"},

]

```

### Step 2: Generate tool calls

Next, we call the Chat endpoint to generate the list of tool calls. This is done by passing the parameters `model`, `messages`, and `tools` to the Chat endpoint.

The endpoint will send back a list of tool calls to be made if the model determines that tools are required. If it does, it will return two types of information:

* `tool_plan`: its reflection on the next steps it should take, given the user query.

* `tool_calls`: a list of tool calls to be made (if any), together with auto-generated tool call IDs. Each generated tool call contains:

* `id`: the tool call ID

* `type`: the type of the tool call (`function`)

* `function`: the function to be called, which contains the function's `name` and `arguments` to be passed to the function.

We then append these to the `messages` list with the `role` set to `assistant`.

```python PYTHON

response = co.chat(

model="command-a-03-2025", messages=messages, tools=tools

)

if response.message.tool_calls:

messages.append(response.message)

print(response.message.tool_plan, "\n")

print(response.message.tool_calls)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s the weather in Toronto?"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

]

}'

```

Example response:

```mdx wordWrap

I will search for the weather in Toronto.

[

ToolCallV2(

id="get_weather_1byjy32y4hvq",

type="function",

function=ToolCallV2Function(

name="get_weather", arguments='{"location":"Toronto"}'

),

)

]

```

By default, when using the Python SDK, the endpoint passes the tool calls as objects of type `ToolCallV2` and `ToolCallV2Function`. With these, you get built-in type safety and validation that helps prevent common errors during development.

Alternatively, you can use plain dictionaries to structure the tool call message.

These two options are shown below.

```python PYTHON

messages = [

{

"role": "user",

"content": "What's the weather in Madrid and Brasilia?",

},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Madrid and Brasilia.",

"tool_calls": [

ToolCallV2(

id="get_weather_dkf0akqdazjb",

type="function",

function=ToolCallV2Function(

name="get_weather",

arguments='{"location":"Madrid"}',

),

),

ToolCallV2(

id="get_weather_gh65bt2tcdy1",

type="function",

function=ToolCallV2Function(

name="get_weather",

arguments='{"location":"Brasilia"}',

),

),

],

},

]

```

```python PYTHON

messages = [

{

"role": "user",

"content": "What's the weather in Madrid and Brasilia?",

},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Madrid and Brasilia.",

"tool_calls": [

{

"id": "get_weather_dkf0akqdazjb",

"type": "function",

"function": {

"name": "get_weather",

"arguments": '{"location":"Madrid"}',

},

},

{

"id": "get_weather_gh65bt2tcdy1",

"type": "function",

"function": {

"name": "get_weather",

"arguments": '{"location":"Brasilia"}',

},

},

],

},

]

```

The model can decide to *not* make any tool call, and instead, respond to a user message directly. This is described [here](https://docs.cohere.com/docs/tool-use-usage-patterns#directly-answering).

The model can determine that more than one tool call is required. This can be calling the same tool multiple times or different tools for any number of calls. This is described [here](https://docs.cohere.com/docs/tool-use-usage-patterns#parallel-tool-calling).

### Step 3: Get tool results

During this step, we perform the function calling. We call the necessary tools based on the tool call payloads given by the endpoint.

For each tool call, we append the `messages` list with:

* the `tool_call_id` generated in the previous step.

* the `content` of each tool result with the following fields:

* `type` which is `document`

* `document` containing

* `data`: which stores the contents of the tool result.

* `id` (optional): you can provide each document with a unique ID for use in citations, otherwise auto-generated

```python PYTHON

import json

if response.message.tool_calls:

for tc in response.message.tool_calls:

tool_result = functions_map[tc.function.name](

**json.loads(tc.function.arguments)

)

tool_content = []

for data in tool_result:

# Optional: the "document" object can take an "id" field for use in citations, otherwise auto-generated

tool_content.append(

{

"type": "document",

"document": {"data": json.dumps(data)},

}

)

messages.append(

{

"role": "tool",

"tool_call_id": tc.id,

"content": tool_content,

}

)

```

### Step 4: Generate response and citations

By this time, the tool call has already been executed, and the result has been returned to the LLM.

In this step, we call the Chat endpoint to generate the response to the user, again by passing the parameters `model`, `messages` (which has now been updated with information fromthe tool calling and tool execution steps), and `tools`.

The model generates a response to the user, grounded on the information provided by the tool.

We then append the response to the `messages` list with the `role` set to `assistant`.

```python PYTHON

response = co.chat(

model="command-a-03-2025", messages=messages, tools=tools

)

messages.append(

{"role": "assistant", "content": response.message.content[0].text}

)

print(response.message.content[0].text)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s the weather in Toronto?"

},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Toronto.",

"tool_calls": [

{

"id": "get_weather_1byjy32y4hvq",

"type": "function",

"function": {

"name": "get_weather",

"arguments": "{\"location\":\"Toronto\"}"

}

}

]

},

{

"role": "tool",

"tool_call_id": "get_weather_1byjy32y4hvq",

"content": [

{

"type": "document",

"document": {

"data": "{\"temperature\": \"20°C\"}"

}

}

]

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

]

}'

```

Example response:

```mdx wordWrap

It's 20°C in Toronto.

```

It also generates fine-grained citations, which are included out-of-the-box with the Command family of models. Here, we see the model generating two citations, one for each specific span in its response, where it uses the tool result to answer the question.

```python PYTHON

print(response.message.citations)

```

Example response:

```mdx wordWrap

[Citation(start=5, end=9, text='20°C', sources=[ToolSource(type='tool', id='get_weather_1byjy32y4hvq:0', tool_output={'temperature': '20C'})], type='TEXT_CONTENT')]

```

Above, we assume the model performs tool calling only once (either single call or parallel calls), and then generates its response. This is not always the case: the model might decide to do a sequence of tool calls in order to answer the user request. This means that steps 2 and 3 will run multiple times in loop. It is called multi-step tool use and is described [here](https://docs.cohere.com/docs/tool-use-usage-patterns#multi-step-tool-use).

### State management

This section provides a more detailed look at how the state is managed via the `messages` list as described in the [tool use workflow](#tool-use-workflow) above.

At each step of the workflow, the endpoint requires that we append specific types of information to the `messages` list. This is to ensure that the model has the necessary context to generate its response at a given point.

In summary, each single turn of a conversation that involves tool calling consists of:

1. A `user` message containing the user message

* `content`

2. An `assistant` message, containing the tool calling information

* `tool_plan`

* `tool_calls`

* `id`

* `type`

* `function` (consisting of `name` and `arguments`)

3. A `tool` message, containing the tool results

* `tool_call_id`

* `content` containing a list of documents where each document contains the following fields:

* `type`

* `document` (consisting of `data` and optionally `id`)

4. A final `assistant` message, containing the model's response

* `content`

These correspond to the four steps described above. The list of `messages` is shown below.

```python PYTHON

for message in messages:

print(message, "\n")

```

```json

{

"role": "user",

"content": "What's the weather in Toronto?"

}

{

"role": "assistant",

"tool_plan": "I will search for the weather in Toronto.",

"tool_calls": [

ToolCallV2(

id="get_weather_1byjy32y4hvq",

type="function",

function=ToolCallV2Function(

name="get_weather", arguments='{"location":"Toronto"}'

),

)

],

}

{

"role": "tool",

"tool_call_id": "get_weather_1byjy32y4hvq",

"content": [{"type": "document", "document": {"data": '{"temperature": "20C"}'}}],

}

{

"role": "assistant",

"content": "It's 20°C in Toronto."

}

```

The sequence of `messages` is represented in the diagram below.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["

As an example, a weather search workflow might looks like the following:

* Step 1: **Get user message**: A user asks, "What's the weather in Toronto?"

* Step 2: **Generate tool calls**: A tool call is made to an external weather service with something like `get_weather(“toronto”)`.

* Step 3: **Get tool results**: The weather service returns the results, e.g. "20°C".

* Step 4: **Generate response and citations**: The model provides the answer, "The weather in Toronto is 20 degrees Celcius".

The following sections go through the implementation of these steps in detail.

### Step 1: Get user message

In the first step, we get the user's message and append it to the `messages` list with the `role` set to `user`.

```python PYTHON

messages = [

{"role": "user", "content": "What's the weather in Toronto?"}

]

```

Optional: If you want to define a system message, you can add it to the `messages` list with the `role` set to `system`.

```python PYTHON

system_message = """## Task & Context

You help people answer their questions and other requests interactively. You will be asked a very wide array of requests on all kinds of topics. You will be equipped with a wide range of search engines or similar tools to help you, which you use to research your answer. You should focus on serving the user's needs as best you can, which will be wide-ranging.

## Style Guide

Unless the user asks for a different style of answer, you should answer in full sentences, using proper grammar and spelling.

"""

messages = [

{"role": "system", "content": system_message},

{"role": "user", "content": "What's the weather in Toronto?"},

]

```

### Step 2: Generate tool calls

Next, we call the Chat endpoint to generate the list of tool calls. This is done by passing the parameters `model`, `messages`, and `tools` to the Chat endpoint.

The endpoint will send back a list of tool calls to be made if the model determines that tools are required. If it does, it will return two types of information:

* `tool_plan`: its reflection on the next steps it should take, given the user query.

* `tool_calls`: a list of tool calls to be made (if any), together with auto-generated tool call IDs. Each generated tool call contains:

* `id`: the tool call ID

* `type`: the type of the tool call (`function`)

* `function`: the function to be called, which contains the function's `name` and `arguments` to be passed to the function.

We then append these to the `messages` list with the `role` set to `assistant`.

```python PYTHON

response = co.chat(

model="command-a-03-2025", messages=messages, tools=tools

)

if response.message.tool_calls:

messages.append(response.message)

print(response.message.tool_plan, "\n")

print(response.message.tool_calls)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s the weather in Toronto?"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

]

}'

```

Example response:

```mdx wordWrap

I will search for the weather in Toronto.

[

ToolCallV2(

id="get_weather_1byjy32y4hvq",

type="function",

function=ToolCallV2Function(

name="get_weather", arguments='{"location":"Toronto"}'

),

)

]

```

By default, when using the Python SDK, the endpoint passes the tool calls as objects of type `ToolCallV2` and `ToolCallV2Function`. With these, you get built-in type safety and validation that helps prevent common errors during development.

Alternatively, you can use plain dictionaries to structure the tool call message.

These two options are shown below.

```python PYTHON

messages = [

{

"role": "user",

"content": "What's the weather in Madrid and Brasilia?",

},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Madrid and Brasilia.",

"tool_calls": [

ToolCallV2(

id="get_weather_dkf0akqdazjb",

type="function",

function=ToolCallV2Function(

name="get_weather",

arguments='{"location":"Madrid"}',

),

),

ToolCallV2(

id="get_weather_gh65bt2tcdy1",

type="function",

function=ToolCallV2Function(

name="get_weather",

arguments='{"location":"Brasilia"}',

),

),

],

},

]

```

```python PYTHON

messages = [

{

"role": "user",

"content": "What's the weather in Madrid and Brasilia?",

},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Madrid and Brasilia.",

"tool_calls": [

{

"id": "get_weather_dkf0akqdazjb",

"type": "function",

"function": {

"name": "get_weather",

"arguments": '{"location":"Madrid"}',

},

},

{

"id": "get_weather_gh65bt2tcdy1",

"type": "function",

"function": {

"name": "get_weather",

"arguments": '{"location":"Brasilia"}',

},

},

],

},

]

```

The model can decide to *not* make any tool call, and instead, respond to a user message directly. This is described [here](https://docs.cohere.com/docs/tool-use-usage-patterns#directly-answering).

The model can determine that more than one tool call is required. This can be calling the same tool multiple times or different tools for any number of calls. This is described [here](https://docs.cohere.com/docs/tool-use-usage-patterns#parallel-tool-calling).

### Step 3: Get tool results

During this step, we perform the function calling. We call the necessary tools based on the tool call payloads given by the endpoint.

For each tool call, we append the `messages` list with:

* the `tool_call_id` generated in the previous step.

* the `content` of each tool result with the following fields:

* `type` which is `document`

* `document` containing

* `data`: which stores the contents of the tool result.

* `id` (optional): you can provide each document with a unique ID for use in citations, otherwise auto-generated

```python PYTHON

import json

if response.message.tool_calls:

for tc in response.message.tool_calls:

tool_result = functions_map[tc.function.name](

**json.loads(tc.function.arguments)

)

tool_content = []

for data in tool_result:

# Optional: the "document" object can take an "id" field for use in citations, otherwise auto-generated

tool_content.append(

{

"type": "document",

"document": {"data": json.dumps(data)},

}

)

messages.append(

{

"role": "tool",

"tool_call_id": tc.id,

"content": tool_content,

}

)

```

### Step 4: Generate response and citations

By this time, the tool call has already been executed, and the result has been returned to the LLM.

In this step, we call the Chat endpoint to generate the response to the user, again by passing the parameters `model`, `messages` (which has now been updated with information fromthe tool calling and tool execution steps), and `tools`.

The model generates a response to the user, grounded on the information provided by the tool.

We then append the response to the `messages` list with the `role` set to `assistant`.

```python PYTHON

response = co.chat(

model="command-a-03-2025", messages=messages, tools=tools

)

messages.append(

{"role": "assistant", "content": response.message.content[0].text}

)

print(response.message.content[0].text)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s the weather in Toronto?"

},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Toronto.",

"tool_calls": [

{

"id": "get_weather_1byjy32y4hvq",

"type": "function",

"function": {

"name": "get_weather",

"arguments": "{\"location\":\"Toronto\"}"

}

}

]

},

{

"role": "tool",

"tool_call_id": "get_weather_1byjy32y4hvq",

"content": [

{

"type": "document",

"document": {

"data": "{\"temperature\": \"20°C\"}"

}

}

]

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

]

}'

```

Example response:

```mdx wordWrap

It's 20°C in Toronto.

```

It also generates fine-grained citations, which are included out-of-the-box with the Command family of models. Here, we see the model generating two citations, one for each specific span in its response, where it uses the tool result to answer the question.

```python PYTHON

print(response.message.citations)

```

Example response:

```mdx wordWrap

[Citation(start=5, end=9, text='20°C', sources=[ToolSource(type='tool', id='get_weather_1byjy32y4hvq:0', tool_output={'temperature': '20C'})], type='TEXT_CONTENT')]

```

Above, we assume the model performs tool calling only once (either single call or parallel calls), and then generates its response. This is not always the case: the model might decide to do a sequence of tool calls in order to answer the user request. This means that steps 2 and 3 will run multiple times in loop. It is called multi-step tool use and is described [here](https://docs.cohere.com/docs/tool-use-usage-patterns#multi-step-tool-use).

### State management

This section provides a more detailed look at how the state is managed via the `messages` list as described in the [tool use workflow](#tool-use-workflow) above.

At each step of the workflow, the endpoint requires that we append specific types of information to the `messages` list. This is to ensure that the model has the necessary context to generate its response at a given point.

In summary, each single turn of a conversation that involves tool calling consists of:

1. A `user` message containing the user message

* `content`

2. An `assistant` message, containing the tool calling information

* `tool_plan`

* `tool_calls`

* `id`

* `type`

* `function` (consisting of `name` and `arguments`)

3. A `tool` message, containing the tool results

* `tool_call_id`

* `content` containing a list of documents where each document contains the following fields:

* `type`

* `document` (consisting of `data` and optionally `id`)

4. A final `assistant` message, containing the model's response

* `content`

These correspond to the four steps described above. The list of `messages` is shown below.

```python PYTHON

for message in messages:

print(message, "\n")

```

```json

{

"role": "user",

"content": "What's the weather in Toronto?"

}

{

"role": "assistant",

"tool_plan": "I will search for the weather in Toronto.",

"tool_calls": [

ToolCallV2(

id="get_weather_1byjy32y4hvq",

type="function",

function=ToolCallV2Function(

name="get_weather", arguments='{"location":"Toronto"}'

),

)

],

}

{

"role": "tool",

"tool_call_id": "get_weather_1byjy32y4hvq",

"content": [{"type": "document", "document": {"data": '{"temperature": "20C"}'}}],

}

{

"role": "assistant",

"content": "It's 20°C in Toronto."

}

```

The sequence of `messages` is represented in the diagram below.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["USER

Query

"]

B["ASSISTANT

Tool call

"]

C["TOOL

Tool result

"]

D["ASSISTANT

Response

"]

A -.-> B

B -.-> C

C -.-> D

class A,B,C,D defaultStyle;

```

Note that this sequence represents a basic usage pattern in tool use. The [next page](https://docs.cohere.com/v2/docs/tool-use-usage-patterns) describes how this is adapted for other scenarios.

---

# Usage patterns for tool use (function calling)

> Guide on implementing various tool use patterns with the Cohere Chat endpoint such as parallel tool calling, multi-step tool use, and more (API v2).

The tool use feature of the Chat endpoint comes with a set of capabilities that enable developers to implement a variety of tool use scenarios. This section describes the different patterns of tool use implementation supported by these capabilities. Each pattern can be implemented on its own or in combination with the others.

## Setup

First, import the Cohere library and create a client.

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

"COHERE_API_KEY"

) # Get your free API key here: https://dashboard.cohere.com/api-keys

```

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

api_key="", # Leave this blank

base_url="",

)

```

We'll use the same `get_weather` tool as in the [previous example](https://docs.cohere.com/v2/docs/tool-use-overview#creating-the-tool).

```python PYTHON

def get_weather(location):

# Implement any logic here

return [{"temperature": "20C"}]

# Return a list of objects e.g. [{"url": "abc.com", "text": "..."}, {"url": "xyz.com", "text": "..."}]

functions_map = {"get_weather": get_weather}

tools = [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco.",

}

},

"required": ["location"],

},

},

},

]

```

## Parallel tool calling

The model can determine that more than one tool call is required, where it will call multiple tools in parallel. This can be calling the same tool multiple times or different tools for any number of calls.

In the example below, the user asks for the weather in Toronto and New York. This requires calling the `get_weather` function twice, one for each location. This is reflected in the model's response, where two parallel tool calls are generated.

```python PYTHON

messages = [

{

"role": "user",

"content": "What's the weather in Toronto and New York?",

}

]

response = co.chat(

model="command-a-03-2025", messages=messages, tools=tools

)

if response.message.tool_calls:

messages.append(response.message)

print(response.message.tool_plan, "\n")

print(response.message.tool_calls)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s the weather in Toronto and New York?"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

]

}'

```

Example response:

```mdx wordWrap

I will search for the weather in Toronto and New York.

[

ToolCallV2(

id="get_weather_9b0nr4kg58a8",

type="function",

function=ToolCallV2Function(

name="get_weather", arguments='{"location":"Toronto"}'

),

),

ToolCallV2(

id="get_weather_0qq0mz9gwnqr",

type="function",

function=ToolCallV2Function(

name="get_weather", arguments='{"location":"New York"}'

),

),

]

```

**State management**

When tools are called in parallel, we append the messages list with one single `assistant` message containing all the tool calls and one `tool` message for each tool call.

```python PYTHON

import json

if response.message.tool_calls:

for tc in response.message.tool_calls:

tool_result = functions_map[tc.function.name](

**json.loads(tc.function.arguments)

)

tool_content = []

for data in tool_result:

# Optional: the "document" object can take an "id" field for use in citations, otherwise auto-generated

tool_content.append(

{

"type": "document",

"document": {"data": json.dumps(data)},

}

)

messages.append(

{

"role": "tool",

"tool_call_id": tc.id,

"content": tool_content,

}

)

```

The sequence of messages is represented in the diagram below.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["USER

Query

"]

B["ASSISTANT

Tool calls

"]

C["TOOL

Tool result #1

"]

D["TOOL

Tool result #2

"]

E["TOOL

Tool result #N

"]

F["ASSISTANT

Response

"]

A -.-> B

B -.-> C

C -.-> D

D -.-> E

E -.-> F

class A,B,C,D,E,F defaultStyle;

```

## Directly answering

A key attribute of tool use systems is the model’s ability to choose the right tools for a task. This includes the model's ability to decide to *not* use any tool, and instead, respond to a user message directly.

In the example below, the user asks for a simple arithmetic question. The model determines that it does not need to use any of the available tools (only one, `get_weather`, in this case), and instead, directly answers the user.

```python PYTHON

messages = [{"role": "user", "content": "What's 2+2?"}]

response = co.chat(

model="command-a-03-2025", messages=messages, tools=tools

)

if response.message.tool_calls:

print(response.message.tool_plan, "\n")

print(response.message.tool_calls)

else:

print(response.message.content[0].text)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s 2+2?"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

]

}'

```

Example response:

```mdx wordWrap

The answer to 2+2 is 4.

```

**State management**

When the model opts to respond directly to the user, there will be no items 2 and 3 above (the tool calling and tool response messages). Instead, the final `assistant` message will contain the model's direct response to the user.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["USER

Query

"]

B["ASSISTANT

Response

"]

A -.-> B

class A,B defaultStyle;

```

Note: you can force the model to directly answer every time using the `tool_choice` parameter, [described here](#forcing-tool-usage)

## Multi-step tool use

The Chat endpoint supports multi-step tool use, which enables the model to perform sequential reasoning. This is especially useful in agentic workflows that require multiple steps to complete a task.

As an example, suppose a tool use application has access to a web search tool. Given the question "What was the revenue of the most valuable company in the US in 2023?”, it will need to perform a series of steps in a specific order:

* Identify the most valuable company in the US in 2023

* Then only get the revenue figure now that the company has been identified

To illustrate this, let's start with the same weather example and add another tool called `get_capital_city`, which returns the capital city of a given country.

Here's the function definitions for the tools:

```python PYTHON

def get_weather(location):

temperature = {

"bern": "22°C",

"madrid": "24°C",

"brasilia": "28°C",

}

loc = location.lower()

if loc in temperature:

return [{"temperature": {loc: temperature[loc]}}]

return [{"temperature": {loc: "Unknown"}}]

def get_capital_city(country):

capital_city = {

"switzerland": "bern",

"spain": "madrid",

"brazil": "brasilia",

}

country = country.lower()

if country in capital_city:

return [{"capital_city": {country: capital_city[country]}}]

return [{"capital_city": {country: "Unknown"}}]

functions_map = {

"get_capital_city": get_capital_city,

"get_weather": get_weather,

}

```

And here are the corresponding tool schemas:

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco.",

}

},

"required": ["location"],

},

},

},

{

"type": "function",

"function": {

"name": "get_capital_city",

"description": "gets the capital city of a given country",

"parameters": {

"type": "object",

"properties": {

"country": {

"type": "string",

"description": "the country to get the capital city for",

}

},

"required": ["country"],

},

},

},

]

```

Next, we implement the four-step tool use workflow as described in the [previous page](https://docs.cohere.com/v2/docs/tool-use-overview).

The key difference here is the second (tool calling) and third (tool execution) steps are put in a `while` loop, which means that a sequence of this pair can happen for a number of times. This stops when the model decides in the tool calling step that no more tool calls are needed, which then triggers the fourth step (response generation).

In this example, the user asks for the temperature in Brazil's capital city.

```python PYTHON

---

# Step 1: Get the user message

messages = [

{

"role": "user",

"content": "What's the temperature in Brazil's capital city?",

}

]

---

# Step 2: Generate tool calls (if any)

model = "command-a-03-2025"

response = co.chat(

model=model, messages=messages, tools=tools, temperature=0.3

)

while response.message.tool_calls:

print("TOOL PLAN:")

print(response.message.tool_plan, "\n")

print("TOOL CALLS:")

for tc in response.message.tool_calls:

print(

f"Tool name: {tc.function.name} | Parameters: {tc.function.arguments}"

)

print("=" * 50)

messages.append(response.message)

# Step 3: Get tool results

print("TOOL RESULT:")

for tc in response.message.tool_calls:

tool_result = functions_map[tc.function.name](

**json.loads(tc.function.arguments)

)

tool_content = []

print(tool_result)

for data in tool_result:

# Optional: the "document" object can take an "id" field for use in citations, otherwise auto-generated

tool_content.append(

{

"type": "document",

"document": {"data": json.dumps(data)},

}

)

messages.append(

{

"role": "tool",

"tool_call_id": tc.id,

"content": tool_content,

}

)

# Step 4: Generate response and citations

response = co.chat(

model=model,

messages=messages,

tools=tools,

temperature=0.1,

)

messages.append(

{

"role": "assistant",

"content": response.message.content[0].text,

}

)

---

# Print final response

print("RESPONSE:")

print(response.message.content[0].text)

print("=" * 50)

---

# Print citations (if any)

verbose_source = (

True # Change to True to display the contents of a source

)

if response.message.citations:

print("CITATIONS:\n")

for citation in response.message.citations:

print(

f"Start: {citation.start}| End:{citation.end}| Text:'{citation.text}' "

)

print("Sources:")

for idx, source in enumerate(citation.sources):

print(f"{idx+1}. {source.id}")

if verbose_source:

print(f"{source.tool_output}")

print("\n")

```

The model first determines that it needs to find out the capital city of Brazil. Once it has this information, it proceeds with the next step in the sequence, which is to look up the temperature of that city.

This is reflected in the model's response, where two tool calling-result pairs are generated in a sequence.

Example response:

```mdx wordWrap

TOOL PLAN:

First, I will search for the capital city of Brazil. Then, I will search for the temperature in that city.

TOOL CALLS:

Tool name: get_capital_city | Parameters: {"country":"Brazil"}

==================================================

TOOL RESULT:

[{'capital_city': {'brazil': 'brasilia'}}]

TOOL PLAN:

I have found that the capital city of Brazil is Brasilia. Now, I will search for the temperature in Brasilia.

TOOL CALLS:

Tool name: get_weather | Parameters: {"location":"Brasilia"}

==================================================

TOOL RESULT:

[{'temperature': {'brasilia': '28°C'}}]

RESPONSE:

The temperature in Brasilia, the capital city of Brazil, is 28°C.

==================================================

CITATIONS:

Start: 60| End:65| Text:'28°C.'

Sources:

1. get_weather_p0dage9q1nv4:0

{'temperature': '{"brasilia":"28°C"}'}

```

**State management**

In a multi-step tool use scenario, instead of just one occurence of `assistant`-`tool` messages, there will be a sequence of `assistant`-`tool` messages to reflect the multiple steps of tool calling involved.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["USER

Query

"]

B1["ASSISTANT

Tool call step #1

"]

C1["TOOL

Tool result step #1

"]

B2["ASSISTANT

Tool call step #2

"]

C2["TOOL

Tool result step #2

"]

BN["ASSISTANT

Tool call step #N

"]

CN["TOOL

Tool result step #N

"]

D["ASSISTANT

Response

"]

A -.-> B1

B1 -.-> C1

C1 -.-> B2

B2 -.-> C2

C2 -.-> BN

BN -.-> CN

CN -.-> D

class A,B1,C1,B2,C2,BN,CN,D defaultStyle;

```

## Forcing tool usage

This feature is only compatible with the

[Command R7B](https://docs.cohere.com/v2/docs/command-r7b)

and newer models.

As shown in the previous examples, during the tool calling step, the model may decide to either:

* make tool call(s)

* or, respond to a user message directly.

You can, however, force the model to choose one of these options. This is done via the `tool_choice` parameter.

* You can force the model to make tool call(s), i.e. to not respond directly, by setting the `tool_choice` parameter to `REQUIRED`.

* Alternatively, you can force the model to respond directly, i.e. to not make tool call(s), by setting the `tool_choice` parameter to `NONE`.

By default, if you don’t specify the `tool_choice` parameter, then it is up to the model to decide whether to make tool calls or respond directly.

```python PYTHON {5}

response = co.chat(

model="command-a-03-2025",

messages=messages,

tools=tools,

tool_choice="REQUIRED" # optional, to force tool calls

# tool_choice="NONE" # optional, to force a direct response

)

```

**State management**

Here's the sequence of messages when `tool_choice` is set to `REQUIRED`.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["USER

Query

"]

B["ASSISTANT

Tool call

"]

C["TOOL

Tool result

"]

D["ASSISTANT

Response

"]

A -.-> B

B -.-> C

C -.-> D

class A,B,C,D defaultStyle;

```

Here's the sequence of messages when `tool_choice` is set to `NONE`.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["USER

Query

"]

B["ASSISTANT

Response

"]

A -.-> B

class A,B defaultStyle;

```

## Chatbots (multi-turn)

Building chatbots requires maintaining the memory or state of a conversation over multiple turns. To do this, we can keep appending each turn of a conversation to the `messages` list.

As an example, here's the messages list from the first turn of a conversation.

```python PYTHON

from cohere import ToolCallV2, ToolCallV2Function

messages = [

{"role": "user", "content": "What's the weather in Toronto?"},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Toronto.",

"tool_calls": [

ToolCallV2(

id="get_weather_1byjy32y4hvq",

type="function",

function=ToolCallV2Function(

name="get_weather",

arguments='{"location":"Toronto"}',

),

)

],

},

{

"role": "tool",

"tool_call_id": "get_weather_1byjy32y4hvq",

"content": [

{

"type": "document",

"document": {"data": '{"temperature": "20C"}'},

}

],

},

{"role": "assistant", "content": "It's 20°C in Toronto."},

]

```

Then, in the second turn, when provided with a rather vague follow-up user message, the model correctly infers that the context is about the weather.

```python PYTHON

messages.append({"role": "user", "content": "What about London?"})

response = co.chat(

model="command-a-03-2025", messages=messages, tools=tools

)

if response.message.tool_calls:

messages.append(response.message)

print(response.message.tool_plan, "\n")

print(response.message.tool_calls)

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s the weather in Toronto?"

},

{

"role": "assistant",

"tool_plan": "I will search for the weather in Toronto.",

"tool_calls": [

{

"id": "get_weather_1byjy32y4hvq",

"type": "function",

"function": {

"name": "get_weather",

"arguments": "{\"location\":\"Toronto\"}"

}

}

]

},

{

"role": "tool",

"tool_call_id": "get_weather_1byjy32y4hvq",

"content": [

{

"type": "document",

"document": {

"data": "{\"temperature\": \"20C\"}"

}

}

]

},

{

"role": "assistant",

"content": "It'\''s 20°C in Toronto."

},

{

"role": "user",

"content": "What about London?"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

]

}'

```

Example response:

```mdx wordWrap

I will search for the weather in London.

[ToolCallV2(id='get_weather_8hwpm7d4wr14', type='function', function=ToolCallV2Function(name='get_weather', arguments='{"location":"London"}'))]

```

**State management**

The sequence of messages is represented in the diagram below.

```mermaid

%%{init: {'htmlLabels': true}}%%

flowchart TD

classDef defaultStyle fill:#fff,stroke:#000,color:#000;

A["USER

Query - turn #1

"]

B["ASSISTANT

Tool call - turn #1

"]

C["TOOL

Tool result - turn #1

"]

D["ASSISTANT

Response - turn #1

"]

E["USER

Query - turn #2

"]

F["ASSISTANT

Tool call - turn #2

"]

G["TOOL

Tool result - turn #2

"]

H["ASSISTANT

Response - turn #2

"]

I["USER

...

"]

A -.-> B

B -.-> C

C -.-> D

D -.-> E

E -.-> F

F -.-> G

G -.-> H

H -.-> I

class A,B,C,D,E,F,G,H,I defaultStyle;

```

---

# Parameter types for tool use (function calling)

> Guide on using structured outputs with tool parameters in the Cohere Chat API. Includes guide on supported parameter types and usage examples (API v2).

## Structured Outputs (Tools)

The [Structured Outputs](https://docs.cohere.com/docs/structured-outputs) feature guarantees that an LLM’s response will strictly follow a schema specified by the user.

While this feature is supported in two scenarios (JSON and tools), this page will focus on the tools scenario.

### Usage

When you use the Chat API with `tools`, setting the `strict_tools` parameter to `True` will guarantee that every generated tool call follows the specified tool schema.

Concretely, this means:

* No hallucinated tool names

* No hallucinated tool parameters

* Every `required` parameter is included in the tool call

* All parameters produce the requested data types

With `strict_tools` enabled, the API will ensure that the tool names and tool parameters are generated according to the tool definitions. This eliminates tool name and parameter hallucinations, ensures that each parameter matches the specified data type, and that all required parameters are included in the model response.

Additionally, this results in faster development. You don’t need to spend a lot of time prompt engineering the model to avoid hallucinations.

When the `strict_tools` parameter is set to `True`, you can define a maximum of 200 fields across all tools being passed to an API call.

```python PYTHON {4}

response = co.chat(model="command-a-03-2025",

messages=[{"role": "user", "content": "What's the weather in Toronto?"}],

tools=tools,

strict_tools=True

)

```

### Important notes

When using `strict_tools`, the following notes apply:

* This parameter is only supported in Chat API V2 via the strict\_tools parameter (not API V1).

* You must specify at least one `required` parameter. Tools with only optional parameters are not supported in this mode.

* You can define a maximum of 200 fields across all tools in a single Chat API call.

## Supported parameter types

Structured Outputs supports a subset of the JSON Schema specification. Refer to the [Structured Outputs documentation](https://docs.cohere.com/docs/structured-outputs#parameter-types-support) for the list of supported and unsupported parameters.

## Usage examples

This section provides usage examples of the JSON Schema [parameters that are supported](https://docs.cohere.com/v2/docs/tool-use#structured-outputs-tools) in Structured Outputs (Tools).

The examples on this page each provide a tool schema and a `message` (the user message). To get an output, pass those values to a Chat endpoint call, as shown in the helper code below.

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

"COHERE_API_KEY"

) # Get your free API key here: https://dashboard.cohere.com/api-keys

```

```python PYTHON

# ! pip install -U cohere

import cohere

co = cohere.ClientV2(

api_key="", # Leave this blank

base_url="",

)

```

```python PYTHON

response = co.chat(

# The model name. Example: command-a-03-2025

model="MODEL_NAME",

# The user message. Optional - you can first add a `system_message` role

messages=[

{

"role": "user",

"content": message,

}

],

# The tool schema that you define

tools=tools,

# This guarantees that the output will adhere to the schema

strict_tools=True,

# Typically, you'll need a low temperature for more deterministic outputs

temperature=0,

)

for tc in response.message.tool_calls:

print(f"{tc.function.name} | Parameters: {tc.function.arguments}")

```

```bash cURL

curl --request POST \

--url https://api.cohere.ai/v2/chat \

--header 'accept: application/json' \

--header 'content-type: application/json' \

--header "Authorization: bearer $CO_API_KEY" \

--data '{

"model": "command-a-03-2025",

"messages": [

{

"role": "user",

"content": "What'\''s the weather in Toronto?"

}

],

"tools": [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "Gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco."

}

},

"required": ["location"]

}

}

}

],

"strict_tools": true,

"temperature": 0

}'

```

### Basic types

#### String

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "Gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"location": {

"type": "string",

"description": "the location to get the weather, example: San Francisco.",

}

},

"required": ["location"],

},

},

},

]

message = "What's the weather in Toronto?"

```

Example response:

```mdx wordWrap

get_weather

{

"location": "Toronto"

}

```

#### Integer

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "add_numbers",

"description": "Adds two numbers",

"parameters": {

"type": "object",

"properties": {

"first_number": {

"type": "integer",

"description": "The first number to add.",

},

"second_number": {

"type": "integer",

"description": "The second number to add.",

},

},

"required": ["first_number", "second_number"],

},

},

}

]

message = "What is five plus two"

```

Example response:

```mdx wordWrap

add_numbers

{

"first_number": 5,

"second_number": 2

}

```

#### Float

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "add_numbers",

"description": "Adds two numbers",

"parameters": {

"type": "object",

"properties": {

"first_number": {

"type": "number",

"description": "The first number to add.",

},

"second_number": {

"type": "number",

"description": "The second number to add.",

},

},

"required": ["first_number", "second_number"],

},

},

}

]

message = "What is 5.3 plus 2"

```

Example response:

```mdx wordWrap

add_numbers

{

"first_number": 5.3,

"second_number": 2

}

```

#### Boolean

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "reserve_tickets",

"description": "Reserves a train ticket",

"parameters": {

"type": "object",

"properties": {

"quantity": {

"type": "integer",

"description": "The quantity of tickets to reserve.",

},

"trip_protection": {

"type": "boolean",

"description": "Indicates whether to add trip protection.",

},

},

"required": ["quantity", "trip_protection"],

},

},

}

]

message = "Book me 2 tickets. I don't need trip protection."

```

Example response:

```mdx wordWrap

reserve_tickets

{

"quantity": 2,

"trip_protection": false

}

```

### Array

#### With specific types

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "get_weather",

"description": "Gets the weather of a given location",

"parameters": {

"type": "object",

"properties": {

"locations": {

"type": "array",

"items": {"type": "string"},

"description": "The locations to get weather.",

}

},

"required": ["locations"],

},

},

},

]

message = "What's the weather in Toronto and New York?"

```

Example response:

```mdx wordWrap

get_weather

{

"locations": [

"Toronto",

"New York"

]

}

```

#### Without specific types

```python PYTHON

tools = [

{

"type": "function",

"function": {

"name": "get_weather",